Real-time TinyYolo object detection for ARC Camera Device: tracks 20 classes, populates camera variables, triggers tracking scripts, 30+ FPS in HD.

How to add the Tiny Yolo2 robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Camera category tab.

- Press the Tiny Yolo2 icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Tiny Yolo2 robot skill.

How to use the Tiny Yolo2 robot skill

Object detection is fundamental to computer vision: it recognizes the objects in the robot camera's view and determines where they are in the image. This robot skill attaches to the Camera Device robot skill to obtain the video feed for detection.

Directions

- Add a Camera Device robot skill to the project.

- Add this robot skill to the project. Check the robot skill's log view to ensure the robot skill has loaded the model correctly.

- START the Camera Device robot skill so it displays a video stream.

- By default, the TinyYolo skill will not detect objects actively. Check the "Active" checkbox to begin processing the camera video data stream.

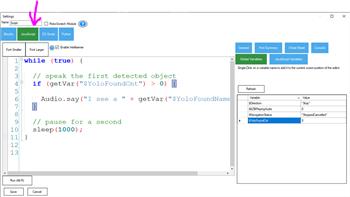

Detected objects use the Camera Device robot skill's tracking features. When objects are detected, the On Tracking Start script executes and the $CameraObject_____ variables are populated. Check the Camera Device robot skill page for a list of camera variables.
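As a rough illustration of working with per-object name, confidence, and location values, the sketch below picks the most confident detection from a set of parallel arrays. It is plain Python and does not call any ARC API. The `Detection` type, the `best_detection` helper, the array names, and the sample values are all hypothetical. The real variable names are listed on the Camera Device robot skill page.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    name: str          # class label, e.g. "person"
    confidence: float  # score between 0.0 and 1.0
    center_x: int      # pixel coordinates within the camera frame
    center_y: int


def best_detection(names, confidences, xs, ys, min_confidence=0.5):
    """Return the most confident detection at or above min_confidence, or None."""
    detections = [
        Detection(n, c, x, y) for n, c, x, y in zip(names, confidences, xs, ys)
    ]
    candidates = [d for d in detections if d.confidence >= min_confidence]
    return max(candidates, key=lambda d: d.confidence, default=None)


# Hypothetical sample values standing in for the arrays the camera skill fills in.
names = ["chair", "person", "dog"]
confidences = [0.41, 0.87, 0.63]
xs = [120, 310, 500]
ys = [200, 180, 260]

best = best_detection(names, confidences, xs, ys)
print(best)
```

A script triggered by On Tracking Start could apply the same idea: ignore low-confidence entries, then act only on the strongest match.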

Camera Device Integration

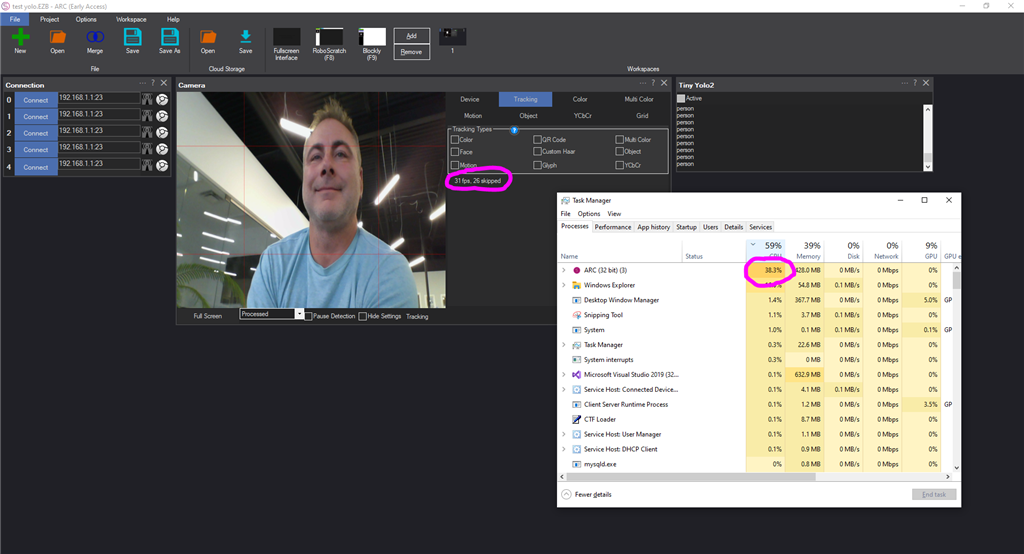

This robot skill integrates with the camera device by using the tracking features. If the servo tracking is enabled, this robot skill will move the servos. This is an extension of the camera robot skill. The On Tracking Start script will execute, and camera device variables will be populated when tracking objects.

Performance

In HD webcam resolution, Tiny Yolo is processing 30+ FPS with 38% CPU, sometimes more, depending on the processor of your PC.

Variables

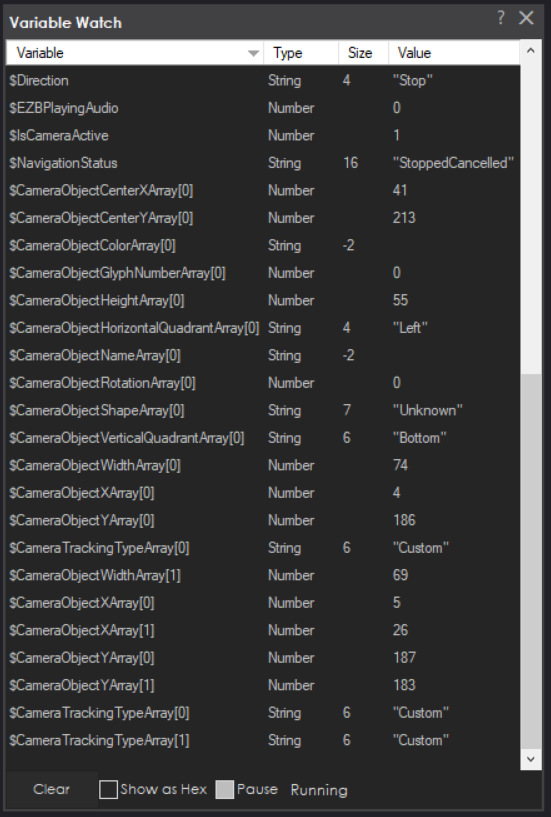

The detected objects are stored in global variables in the array provided by the camera robot skill. The number of detected objects determines the size of the array. The detected object's location, confidence, and name are all stored in variables. Check the Camera Device robot skill page for a list of camera variables.

Trained Objects

The Tiny Yolo robot skill includes an ONNX model with 20 trained objects. They are: "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"

The ImageNetData is trained at an image resolution of 416x416 because it uses the TINY model. Regular-sized models are trained at 608x608.
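The sketch below turns the label list and the 416x416 input size into numbers you can check. It assumes the bundled model follows the standard Tiny YOLOv2 layout (a downsampling stride of 32 and 5 anchor boxes per grid cell) and that class indices follow the order listed above. Neither assumption is confirmed by this page, and the constant and function names are illustrative only.

```python
# The 20 labels, in the order given in this manual.
LABELS = [
    "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow",
    "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

INPUT_SIZE = 416       # model input resolution stated above
STRIDE = 32            # assumption: standard Tiny YOLOv2 downsampling factor
ANCHORS_PER_CELL = 5   # assumption: standard Tiny YOLOv2 anchor count

# Each grid cell covers STRIDE x STRIDE input pixels.
grid = INPUT_SIZE // STRIDE

# Each box carries x, y, width, height, objectness, plus one score per class.
values_per_box = 5 + len(LABELS)
channels = ANCHORS_PER_CELL * values_per_box


def label_for(class_index):
    """Map a class index from the model output to its label (assumed order)."""
    return LABELS[class_index]


print(grid, values_per_box, channels)  # prints "13 25 125"
print(label_for(14))                   # prints "person"
```

Under these assumptions the model output is a 13x13 grid with 125 values per cell. A model trained on a different label set would change `values_per_box`, so its labels would have to be supplied alongside the model file.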

ToDo

- control commands for starting, stopping

- ability to specify custom training model files

Related Questions

True Autonomous Find, Pick Up And Move

Using Variables For TY2 And Camera


I'll also take a look and see if there's an update to ONNX, which might be helpful.

I dunno - i tested it with the advanced inmoov head project and it works as well - even found my bicycle! This computer only has 16gb Windows 10. Do you have virus scanners and spam testers and registry cleaners and a bunch of "helper free" utilities running in the taskbar?

I just formatted an Intel NUC, installed Windows 10 and the latest ARC, and loaded Cam and Yolo2 and nothing else. 8GB RAM.

Loading models: C:\ProgramData\ARC\Plugins\19a75b67-c593-406c-9789-464aa3ba998b\models\TinyYolo2_model.onnx... Set Configuration: The type initializer for 'Microsoft.ML.OnnxRuntime.NativeMethods' threw an exception.

I am using the IoTiny and the EZ-Robot camera so I also tried a webcam.

Opening a beer.

haha - well i don't know what to tell ya! When I get time I can take a look but it's not written by Synthiam. The Onnx ML library is a Microsoft product, so there's nothing inside that I can do. I can update the libraries to the latest nuget packages and hope there's no breaking changes (confidence is low)

But in the meantime, it is a lot of effort for 20 trained items lol. According to the manual above the list is... "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"

PS, why did they include sheep and cow and horse and train? Ugh ppl are funny

Yeah, I just wanted person and book (it does book as well): if book, then read the cover, look up the book, and provide a summary of it. If it's a short story (kids book), read the book. I can do it another way, but this was just to run in the background, chew few resources, and if someone walked up and showed the robot a book, kick in the other tools.

Maybe it doesn't do book; the Darknet one does, hmmm.

I updated v13 to the latest onnx libraries - which, as i predicted, broke the build. They deprecated bitmap and required column name compiler attributes... all of which weren't documented. So I'm gluing the hair I pulled out back onto my head haha

I think you're confusing the models with frameworks and their components. There are numerous Machine Learning frameworks that can work with the same machine learning data set. The example dataset that you're using must have a "book" added. It might make sense to allow tiny Yolo ml datasets to be added easily to this robot skill in the future. Darknet is the basis for YOLO. So the ML model you're using is different than the one included with this skill.

While the models can be specified, the labels need to be somehow imported as well. I'll have to think about how to do that and make it dynamic.