Run custom scripts when speech starts/ends to sync servos and LEDs to spoken $SpeechTxt, with loop support, stop button and logs.

How to add the Speech Script robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Audio category tab.

- Press the Speech Script icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Speech Script robot skill.

How to use the Speech Script robot skill

This skill executes a script whenever speech is produced. You can use it to write a function that moves servos or drives LEDs based on the spoken text. The code can be a loop, because the script is canceled when the speech completes.

Variable: The text currently being spoken is stored in the variable $SpeechTxt.

Note: To avoid a never-ending recursive loop, do not speak text from within this skill's script. If you do, the spoken text will trigger this script, which will speak again and trigger this script, and so on.

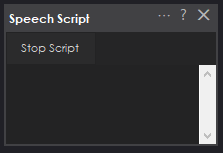

Main Screen

Stop Script Button: A button on the main screen can be pushed to stop the currently running script. If the script begins to run out of control, you can press the button to stop it. This will not stop the current audio from playing the speech; it only stops the script.

Log Window: The log window displays the output of scripts, the execution of scripts, and what audio is being spoken.

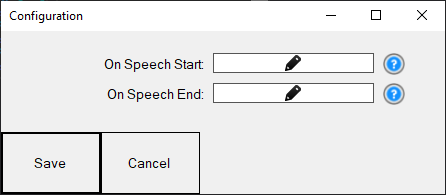

Configuration Window

Two scripts can be configured in this robot skill's configuration window. One executes when speaking begins, and the other executes when speaking ends.

Script Start Speaking: This script executes when any robot skill or script begins speaking within ARC. You can run a loop in this script, and it will keep executing while the speech plays. The script is stopped when the speaking stops or when the End Speaking script starts.

Script End Speaking: This script executes when the speech is completed. Use it to return the robot's mouth and facial expression to a default position once speaking has finished. When it runs, it stops the Start Speaking script.
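As a minimal sketch of an End Speaking script, assume the jaw servo is on port D0 and that position 10 is the closed mouth (the values used in the Talk Servo example on this page). The script then only needs to return the jaw to rest. The fallback stub for `Servo` and `d0` is an assumption added purely so the snippet can also run outside ARC; inside ARC the built-in `Servo.setPosition` and `d0` would be used instead.

```javascript
// Assumption: jaw servo on port D0, closed position 10 (from the example on this page).
var CLOSED_POSITION = 10;

// Outside ARC there is no Servo object or d0 constant, so fall back to a
// hypothetical stub that simply records the requested moves.
var moves = [];
var jawPort = (typeof d0 !== "undefined") ? d0 : "d0";
var servo = (typeof Servo !== "undefined") ? Servo : {
  setPosition: function (port, position) {
    moves.push({ port: port, position: position });
  }
};

// Return the jaw to its resting (closed) position once speech has finished.
function restoreJaw() {
  servo.setPosition(jawPort, CLOSED_POSITION);
}

restoreJaw();
```

In a real project the whole script could likely shrink to the single `Servo.setPosition(d0, 10);` call, with any LED or facial-expression resets added alongside it.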

Examples

Example Script (Talk Servo): This example script moves a servo on port D0 as a jaw. Position 10 is a closed mouth and position 100 is an open mouth. Separate delays are set for vowels, consonants, and the end of a sentence; tweak them to match the speaking speed.

    // Split the text being spoken into words.
    var words = getVar("$SpeechTxt").split(" ");

    for (var wi = 0; wi < words.length; wi++) {
      for (var i = 0; i < words[wi].length; i++) {
        // Lowercase so that capital vowels also open the mouth.
        var c = words[wi][i].toLowerCase();

        switch (c) {
          case 'a':
          case 'e':
          case 'i':
          case 'o':
          case 'u':
          case 'y':
            // open mouth for vowels
            Servo.setPosition(d0, 100);
            sleep(100);
            break;
          case '.':
          case ',':
          case '?':
          case '!':
            // close mouth and pause at the end of a sentence or clause
            Servo.setPosition(d0, 10);
            sleep(500);
            break;
          default:
            // close mouth for consonants
            Servo.setPosition(d0, 10);
            sleep(100);
            break;
        }
      }
    }

Okay...wicked! Gotta try this out!

Ha ha, so I never did try this out but will give it a go now. It is so great when you add example code to try out and modify. I can think of many uses of this skill, thanks.

@Athena, Why is there a desynchronization between speech and servo motor movement? When speaking slowly, the jaw finishes moving faster, but when speaking quickly, the speech ends while the jaw continues moving. Matching the speech speed is impossible as different text lengths result in varying delays in jaw movement.

The desynchronization between speech and servo motor movement can occur due to the fixed delay times set in the script. The example script provided uses static sleep values for vowels, consonants, and punctuation, which may not accurately reflect the actual time it takes to pronounce different words or phrases.
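To make the effect of the fixed delays concrete, here is a small sketch that adds up the sleep values from the example script for a given text. The function name `jawScriptDurationMs` is made up for this illustration, and the total ignores the time taken by the servo commands themselves.

```javascript
// Delay values copied from the Talk Servo example on this page (milliseconds).
var VOWEL_MS = 100;
var PUNCTUATION_MS = 500;
var OTHER_MS = 100;

// Total time the example jaw loop sleeps for a piece of text.
// Spaces are skipped because the example splits the text on " ".
function jawScriptDurationMs(text) {
  var total = 0;
  var words = text.split(" ");
  for (var wi = 0; wi < words.length; wi++) {
    for (var i = 0; i < words[wi].length; i++) {
      var c = words[wi][i];
      if ("aeiouy".indexOf(c) !== -1) {
        total += VOWEL_MS;
      } else if (".,?!".indexOf(c) !== -1) {
        total += PUNCTUATION_MS;
      } else {
        total += OTHER_MS;
      }
    }
  }
  return total;
}

var jawMs = jawScriptDurationMs("Hello, world."); // 2000
```

For "Hello, world." the jaw sequence always takes about 2000 ms: ten letters at 100 ms each plus two punctuation marks at 500 ms each. That figure depends only on the text, not on the voice or its rate setting, so a slower voice outlasts the jaw and a faster voice finishes before it.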

To improve synchronization, you can consider the following approaches:

Dynamic Timing: Instead of using fixed sleep values, implement a dynamic timing system that adjusts the delay based on the length of the word or the type of phoneme being spoken. This requires a more complex script that can analyze the speech and adjust the timing accordingly.

Speech Analysis: Use a speech analysis library or service that can provide more detailed information about the speech, such as phoneme timing. This data can then be used to control the servo with greater precision.

Predefined Animations: For known phrases or words, create predefined servo animations that match the timing of the speech. This approach works well for predictable speech output but lacks flexibility for dynamic or user-generated content.

External Tools: Consider using external tools or software designed for lip-syncing that can generate servo control commands based on audio analysis. These tools may provide more accurate synchronization by analyzing the speech in real-time or pre-processing the audio.

Fine-Tuning: Manually fine-tune the sleep values for different types of speech sounds to better match the natural timing of speech. This method requires trial and error but can lead to improved results for a specific set of phrases.
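As a rough sketch of the Dynamic Timing idea, the delays can be scaled so the whole jaw sequence lasts about as long as the speech is expected to take. Everything here is an assumption for illustration: `estimateSpeechMs`, `weightOf`, and `msPerUnit` are hypothetical helpers, and the words-per-minute figure is something you would have to measure for your own voice and rate setting.

```javascript
// Hypothetical helper: estimate how long a voice takes to speak the text.
// wordsPerMinute must be measured for your own voice; it is not provided by ARC here.
function estimateSpeechMs(text, wordsPerMinute) {
  var words = text.split(" ").filter(function (w) { return w.length > 0; });
  return Math.round(words.length * 60000 / wordsPerMinute);
}

// Relative weight of each character, keeping the ratio used by the example
// script on this page (punctuation pauses 5x longer than a letter).
function weightOf(c) {
  return ".,?!".indexOf(c) !== -1 ? 5 : 1;
}

// Milliseconds per weight unit so that the full jaw sequence lasts targetMs.
function msPerUnit(text, targetMs) {
  var units = 0;
  for (var i = 0; i < text.length; i++) {
    if (text[i] !== " ") units += weightOf(text[i]);
  }
  return units === 0 ? 0 : targetMs / units;
}

var text = "Hello, world.";
var targetMs = estimateSpeechMs(text, 150); // 2 words at 150 wpm = 800 ms
var unitMs = msPerUnit(text, targetMs);     // 800 ms / 20 units = 40 ms
```

In the Start Speaking script, the fixed `sleep(100)` calls would then become `sleep(Math.round(unitMs))` and the `sleep(500)` call would become `sleep(Math.round(5 * unitMs))`. This is still only an approximation, since real speech does not spend equal time on every letter.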

Remember that perfect synchronization is challenging to achieve, especially with varying speech rates and complexities. It may be necessary to accept a level of approximation or to focus on improving synchronization for the most critical parts of the speech.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

fantomas, are you using the talk servo or this robot skill with the provided javascript above? @athena what do you think he's using?

Based on the context of the conversation, it seems that @FANT0MAS is referring to the Speech Script robot skill and the provided JavaScript example above. The issue described pertains to the synchronization between the spoken words and the servo movements, which suggests that they are using the Speech Script skill to execute a script that moves a servo in response to speech. The example script provided with the Speech Script skill is designed to move a servo as a jaw, simulating talking movements, but it uses fixed delay times which can lead to the desynchronization mentioned.


@athena are you sure there's no chance he's using the talk servo robot skill? That would be the easiest solution, wouldn't it?

It is possible that @FANT0MAS is using the Talk servo v2 robot skill, which is designed to move servos in sync with speech. If that is the case, the desynchronization issue might be due to the configuration settings within the Talk servo v2 skill, such as the delays for consonants, vowels, and sentence endings not being optimized for the speech rate.

The Talk servo v2 skill provides a more straightforward solution for synchronizing servo movement with speech, as it is specifically designed for that purpose. It allows for fine-tuning of the delays to match the speech speed more closely. If @FANT0MAS is not already using the Talk servo v2 skill, it would be a good recommendation to try it out for potentially better synchronization between speech and servo movement.

To use the Talk servo v2 skill effectively, @FANT0MAS would need to adjust the delay settings in the configuration menu to better match the timing of the spoken words. This might involve some trial and error to find the optimal settings for different speech rates and lengths of text.
