9. Audio

Let's plan how your robot will speak and hear!

Robots with audio capabilities provide a very interactive experience. The ARC software includes multiple skills that connect to WiFi, Bluetooth, or USB audio input and output devices. ARC has speech recognition, text-to-speech synthesis, and robot skills for playing music and sound effects!

Choose an Audio Input Device

Wired Microphone

Connects directly to a computer with a USB cable or through an existing soundcard's input port. It is only used in an embedded robot configuration.

Wireless Microphone

Connects wirelessly to a computer with an RF (radio frequency) or Bluetooth connectionChoose an Audio Output Device Type

Wired Speaker

Connects directly to a computer with a USB or Audio cable. It is only used in an embedded robot configuration. You can select whether the speaker is connected via an audio cable to an existing sound card or connects with a USB.

Wireless Speaker

Connects wirelessly to a computer over Bluetooth or WiFi.

EZB Speaker

Use an EZB that supports audio output (i.e., EZ-Robot IoTiny or EZ-B v4). Note that using this option, the EZB on index #0 is preferred. This is also necessary if you wish to use the Audio Effects robot skill found here.Add Generic Speech Recognition

Once you have selected the type of microphone to use, the next step is to experiment with speech recognition. Many speech recognition robot skills range from Google, Microsoft, and IBM Watson. However, the most popular robot skill to get started is the generic speech recognition robot skill. This uses the Microsoft Windows built-in speech recognition system. Therefore, it's easy to configure, and you can get it up and running with little effort.

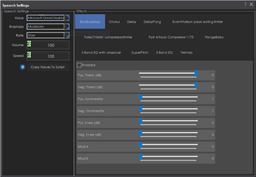

Using the Microsoft Windows Speech Recognition Engine, this skill uses your computer's default audio input device and listens for known phrases. Phrases are manually configured in the Settings menu, and custom actions (via script) are assigned to your phrases.

Get Speech Recognition

Get Speech Recognition

Audio Robot Skills

Now that you have selected the audio device your robot will use, many robot skills should be considered. They range from speech recognition to audio players. You can have as many audio robot skills as your robot needs to achieve the goals.