Customizable inverse/forward kinematics editor for robot arms: add joints/bones, map XYZ in cm, auto-calc joint angles for precise 3D positioning.

How to add the Inverse Kinematic Arm robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Servo category tab.

- Press the Inverse Kinematic Arm icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Inverse Kinematic Arm robot skill.

How to use the Inverse Kinematic Arm robot skill

This Synthiam ARC robot skill is a customizable tool that provides inverse and forward kinematic solutions for your robot arm. You define the structure of the arm by adding joints and bones, so the model can be tailored to your specific hardware.

It is designed to be user-friendly and intuitive, making it accessible to both beginners and experienced users. Whether you are designing a robot for industrial applications, research, or personal projects, the Synthiam ARC Inverse Kinematics robot skill provides the flexibility and precision you need to create an efficient robot arm.

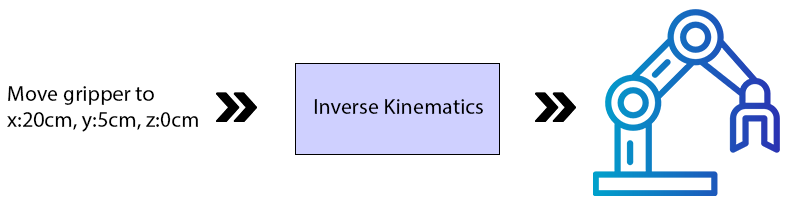

Inverse Kinematics

Inverse kinematics lets you specify 3D coordinates in CM to move the robot arm's end effector (e.g., a gripper). This is especially useful when the robot must reach a specific location and you need the joint angles calculated automatically to get there.

Forward Kinematics

Forward kinematics displays the position of the robot's end effector (the part of the robot that interacts with the environment, such as a gripper) in 3D coordinates. If you move the servos programmatically, the end effector's 3D coordinates are calculated and displayed in the robot skill's X, Y, and Z values in CM.

Use Cases

1. Manufacturing: In manufacturing, an inverse kinematics robot arm can perform precise tasks such as welding or assembly. For instance, if a specific component needs to be placed at a certain position in 3D space, the robot arm can calculate the necessary joint angles to reach that position with high precision.

2. Medical Procedures: Inverse kinematics robot arms can be used in medical procedures such as surgery or rehabilitation. For example, in a surgical procedure, the robot arm can be programmed to move a surgical instrument to a specific location within the patient's body. The 3D Cartesian coordinates of the target location can be determined using medical imaging techniques, and the robot arm can then calculate the necessary joint angles to move the instrument to that location.

3. Research and Development: In a research setting, an inverse kinematics robot arm can perform experiments that require precise positioning of objects or instruments. For example, in a physics experiment, a sensor might need to be positioned at a specific location in 3D space to collect data. The robot arm can calculate the necessary joint angles to move the sensor to that location.

4. Art and Entertainment: An inverse kinematics robot arm can create complex and precise movements in art and entertainment. For instance, in animation or video game development, a robot arm could capture motion data for a character. The robot arm's end effector could be attached to an actor, and the robot arm could then be programmed to move the actor to specific positions in 3D space, capturing the actor's movements in the process.

5. Food Industry: In the food industry, a robot arm can be used for food preparation or packaging tasks. For example, a robot arm could be programmed to move a food item to a specific location on a conveyor belt for packaging. The robot arm can calculate the necessary joint angles to move the food item to the specified location.

Terms To Know

End Effector

In robotics and automation, an end effector is a device or tool at the tip of a robotic arm or manipulator. It serves as the "hand" of the robot, and its primary function is to interact with the environment, perform specific tasks, and manipulate objects. End effectors are critical components in a wide range of robotic applications, and their design can vary significantly based on the intended task.

For instance, the end effector might be a gripper used to pick up and manipulate objects in a manufacturing setting. In a medical application, it could be a surgical instrument. In a painting robot, it could be a brush. Essentially, the end effector is the part of the robot that performs the task it is designed to do.

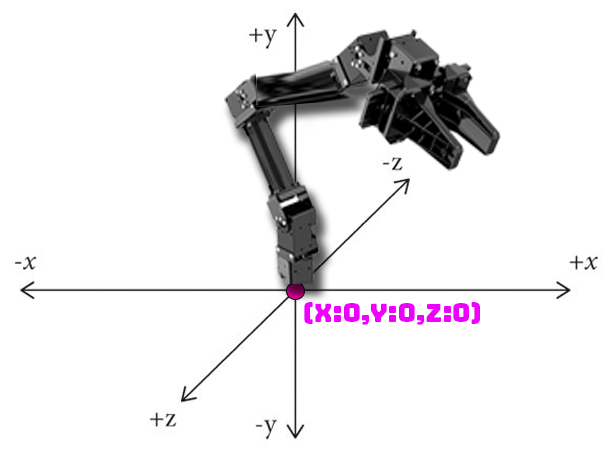

Cartesian Coordinates

In 3D Cartesian space, positions are represented using three coordinates, X, Y, and Z, which this robot skill measures in centimeters (CM). They describe the location of a point or object in a three-dimensional environment, and they are relative to the way the robot arm is mounted. For example, if the robot arm is mounted on a table, the X coordinate specifies the horizontal (left/right) position, the Y coordinate the vertical (up/down) position, and the Z coordinate the depth (forward/backward). This kind of 3D reference system is used throughout computer graphics, engineering, and robotics. If the robot arm is mounted on the shoulder of a humanoid, these coordinates will be rotated 90 degrees.
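The 90-degree case above can be handled in a script by rotating a target before sending it to the robot skill. The sketch below is plain JavaScript with no ARC API calls. It assumes the arm's own frame is rotated +90 degrees about the Z (depth) axis relative to the table, and the helper names rotateAboutZ and worldToArm are hypothetical. Your mounting may need a different axis or sign.

```javascript
// Hypothetical helper: rotate a point about the Z (depth) axis by the given
// number of degrees (counterclockwise when viewed from +Z).
function rotateAboutZ(point, degrees) {
  var r = degrees * Math.PI / 180;
  var c = Math.cos(r);
  var s = Math.sin(r);
  return {
    x: point.x * c - point.y * s,
    y: point.x * s + point.y * c,
    z: point.z
  };
}

// Hypothetical helper: express a table (world) point in the arm's frame,
// ASSUMING the arm frame is rotated +90 degrees about Z. Going from world
// to arm applies the inverse rotation, i.e. -90 degrees.
function worldToArm(point) {
  return rotateAboutZ(point, -90);
}

var armTarget = worldToArm({ x: 0, y: 10, z: 15 });
```

Under this assumption, a point 10 cm above the table becomes X = 10 in the arm's frame (up to floating-point rounding), and Z is unchanged.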

By adjusting the X, Y, and Z values in the robot skill, you can precisely control the position of the end effector in 3D space. To move the end effector to a specific point from a script, use the ControlCommand("Inverse Kinematics Arm", "MoveTo", x, y, z) command. It calculates the necessary joint angles using inverse kinematics and moves the end effector to the specified X, Y, and Z position in 3D Cartesian space.

Joint Types

1. Rotation Joint: Also known as a revolute joint, a rotation joint allows rotational movement around a single axis. It is most commonly found at the base of a robot arm, where it swings the arm from side to side, similar to how a swivel chair turns on its base. This lets the robot reach different areas without moving its base. It is controlled mainly by the X coordinate.

2. Lever Joint: A servo that lifts a robot arm forms what this robot skill calls a "lever joint." It is also a revolute joint, but its axis is horizontal, so rotating it raises or lowers the rest of the arm, similar to a human elbow. (It is not a prismatic joint, which slides linearly along an axis like a hydraulic lift.) Lever joints are controlled mainly by the Y and Z coordinates.
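To illustrate how the two joint types divide the work, here is a minimal inverse kinematics sketch for a hypothetical arm with one rotation joint at the base and two lever joints. It is not this robot skill's internal solver. It assumes the shoulder pivot sits at the origin, lengths and coordinates are in cm, the shoulder angle is measured from horizontal, and the elbow angle is relative to the upper arm. The rotation joint is solved with atan2 in the X/Z plane, and the lever joints with the law of cosines in the vertical plane.

```javascript
// Convert radians to degrees.
function toDeg(rad) {
  return rad * 180 / Math.PI;
}

// Minimal IK sketch for a hypothetical arm: base rotation joint + two lever
// joints (upper arm and forearm). Returns angles in degrees, or null if the
// target cannot be reached with these lengths.
function solveIK(x, y, z, upperLen, foreLen) {
  // Rotation joint: swing toward the target in the horizontal X/Z plane
  // (0 degrees points straight forward along Z).
  var base = Math.atan2(x, z);

  // Horizontal distance from the base axis to the target.
  var r = Math.sqrt(x * x + z * z);

  // Lever joints: a two-link problem in the vertical (r, y) plane.
  var cosElbow = (r * r + y * y - upperLen * upperLen - foreLen * foreLen) /
                 (2 * upperLen * foreLen);
  if (cosElbow < -1 || cosElbow > 1) {
    return null; // out of reach
  }

  // One of the two mirror-image solutions (positive elbow angle).
  var elbow = Math.acos(cosElbow);
  var shoulder = Math.atan2(y, r) -
                 Math.atan2(foreLen * Math.sin(elbow), upperLen + foreLen * Math.cos(elbow));

  return { base: toDeg(base), shoulder: toDeg(shoulder), elbow: toDeg(elbow) };
}
```

For a target of (0, 10, 10) with two 10 cm links, the sketch returns a base angle of 0, a shoulder angle of about 0, and an elbow angle of about 90 degrees. A target exactly at full reach may also return null because of floating-point rounding.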

Main Window

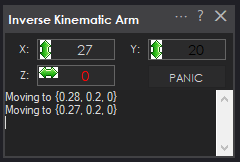

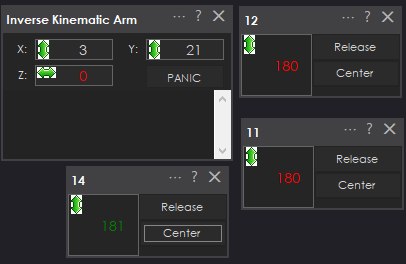

X Value

With inverse kinematics, modifying this value moves the end effector to the specified X (left/right) offset from 0 (robot base) in centimeters. With forward kinematics, this value displays the current calculated X offset.

Y Value

With inverse kinematics, modifying this value moves the end effector to the specified Y (up/down) offset from 0 (ground) in centimeters. With forward kinematics, this value displays the current calculated Y offset.

Z Value

With inverse kinematics, modifying this value moves the end effector to the specified Z (forward/backward) offset from 0 (center) in centimeters. With forward kinematics, this value displays the current calculated Z offset.

*Note: The X/Y/Z values are updated automatically to the current Cartesian coordinates using forward kinematics if the servos are moved from another robot skill or through code.
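The forward kinematics behind these displayed values can be illustrated with a minimal sketch for a hypothetical arm with a base rotation joint and two lever joints. It is an assumption-based illustration, not the robot skill's implementation. Lengths are in cm, the shoulder pivot is at the origin, the shoulder angle is measured from horizontal, and the elbow angle is relative to the upper arm.

```javascript
// Convert degrees to radians.
function toRad(deg) {
  return deg * Math.PI / 180;
}

// Minimal FK sketch: joint angles (degrees) -> end effector position (cm).
function forwardKinematics(baseDeg, shoulderDeg, elbowDeg, upperLen, foreLen) {
  var sh = toRad(shoulderDeg);
  var el = toRad(elbowDeg);

  // End effector position in the arm's vertical plane:
  // r is the horizontal reach, y is the height.
  var r = upperLen * Math.cos(sh) + foreLen * Math.cos(sh + el);
  var y = upperLen * Math.sin(sh) + foreLen * Math.sin(sh + el);

  // Rotate that plane by the base angle: X is left/right, Z is forward.
  var base = toRad(baseDeg);
  return { x: r * Math.sin(base), y: y, z: r * Math.cos(base) };
}
```

For example, forwardKinematics(0, 0, 90, 10, 10) places the end effector at about X = 0, Y = 10, Z = 10, and pointing both links straight up (shoulder 90, elbow 0) gives about X = 0, Y = 20, Z = 0.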

Panic

Releases the servos on the arm if it is jammed or stuck because a specified location collides with something.

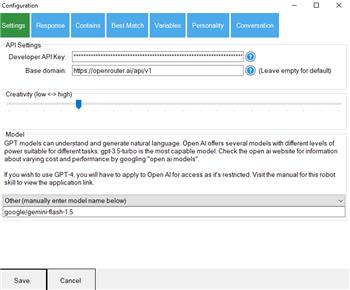

Configuration

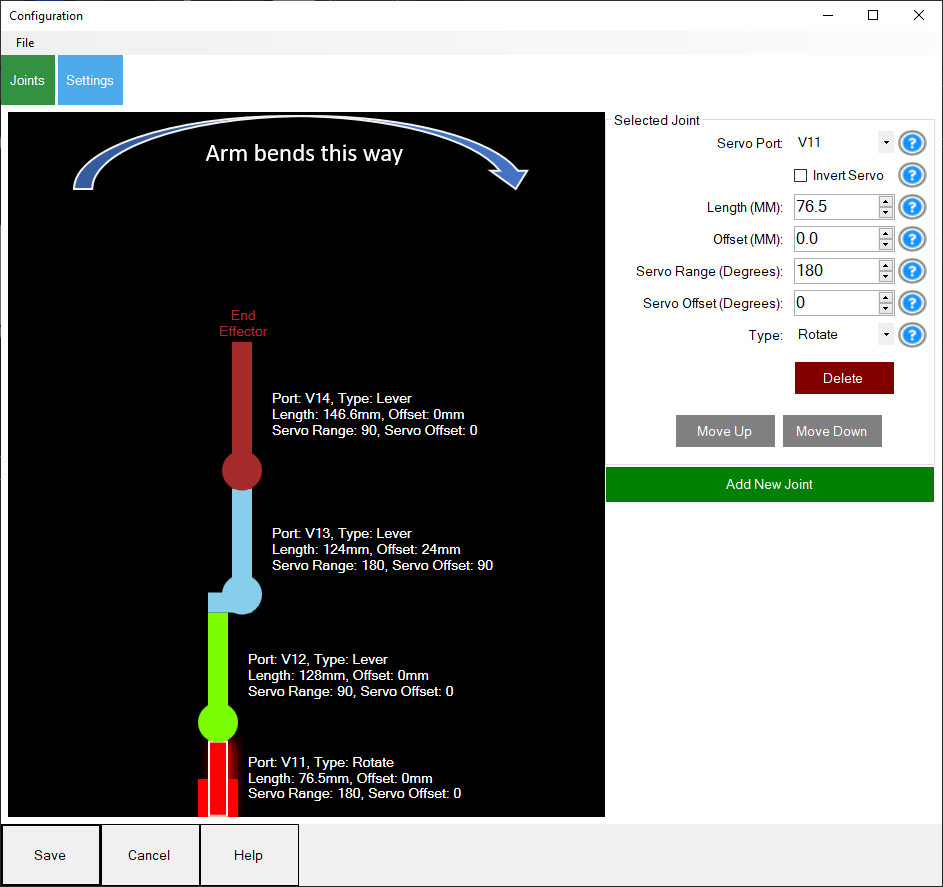

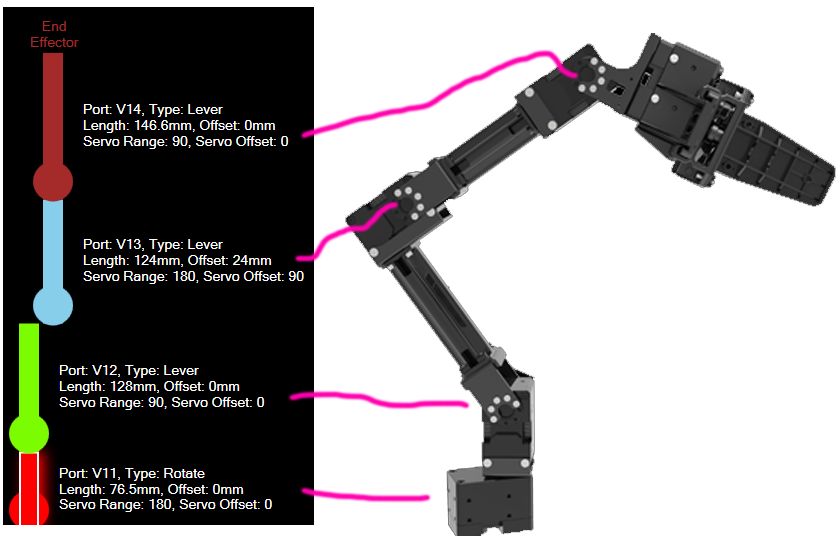

This is the configuration window where you design the robot arm. By adding joints, you specify the distance between the shafts of adjacent joints, each joint's offset from the previous joint, and the servo parameters.

Servo Port

The port that the servo for this joint is connected to. This robot skill requires all servos to be controlled by the first EZB (Index #0).

Invert Servo

If the servo moves in the opposite direction, check this option to reverse its direction.

Length (mm)

The length of this joint's bone to the next joint. Lengths are measured from the center of this joint's rotation shaft to the center of the next joint's rotation shaft. For the end effector, the length is measured from the center of its rotation shaft to the tip of the end effector. This measurement is crucial for accurate inverse and forward kinematic calculations, so if you do not have CAD drawings of the robot arm, a high-precision caliper is recommended.

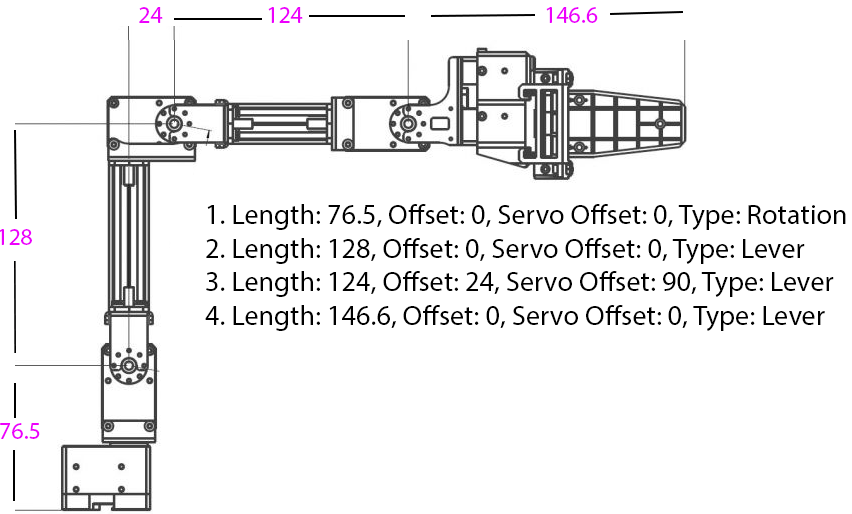

Offset (mm)

A horizontal offset of this joint's start position relative to the previous joint. Picture the robot arm standing straight upward, as the designer displays it: if a joint sits to the right or left of the previous joint, enter that distance here. A common example is the Robotis Open Manipulator X robot arm, whose 3rd joint is offset 24mm to the right of the previous joint, as seen in the example screenshot above.
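One way to see why the offset matters is to fold a segment's length and its sideways offset into a single straight link. This sketch assumes Length is measured along the arm and Offset perpendicular to it. The 24 mm offset matches the example above, while the 128 mm length is an illustrative value; the robot skill may model offsets differently internally.

```javascript
// Hypothetical helper: combine a segment's length and its perpendicular
// offset (both in mm) into one straight link.
function effectiveLink(lengthMm, offsetMm) {
  return {
    // Straight-line distance between the two joint shafts.
    lengthMm: Math.sqrt(lengthMm * lengthMm + offsetMm * offsetMm),
    // Angle between that straight line and the arm's axis, in degrees.
    angleDeg: Math.atan2(offsetMm, lengthMm) * 180 / Math.PI
  };
}

// Illustrative values: a 128 mm segment with a 24 mm offset.
var link = effectiveLink(128, 24);
```

With these numbers, the straight-line distance is about 130.23 mm and the link leans about 10.62 degrees away from the arm's axis, so leaving out a 24 mm offset would noticeably shift the calculated end effector position.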

Servo Range (Degrees)

The range the servo can freely move in either direction from its center. For example, a PWM hobby servo has 180 degrees of travel, which is 90 degrees on either side of its center (90 degrees). For such a servo, you would enter 90 here if it can move freely through the full 180 degrees. If the servo had 360 degrees of travel, you would enter 180. This value limits how far a servo can rotate to prevent damage to the arm.
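The Servo Range rule reduces to simple arithmetic: enter half of the servo's total travel. The sketch below also shows a hypothetical clamp that keeps a requested angle (measured from the servo's center) within plus or minus that range. It illustrates the idea of the limit and is not necessarily how the robot skill applies it.

```javascript
// Value to enter in Servo Range: half of the servo's total travel.
function servoRangeFor(totalTravelDeg) {
  return totalTravelDeg / 2;
}

// Hypothetical helper: keep an angle measured from the servo's center
// within +/- rangeDeg.
function clampToRange(angleFromCenterDeg, rangeDeg) {
  return Math.max(-rangeDeg, Math.min(rangeDeg, angleFromCenterDeg));
}

var hobbyRange = servoRangeFor(180); // 90 for a 180-degree hobby servo
var limited = clampToRange(120, hobbyRange); // a 120-degree request is held at 90
```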

Servo Offset (Degrees)

The angle at which the servo is physically mounted relative to the previous servo when the arm is extended upward. If the servo is lying sideways (rotated 90 degrees), enter 90 here. In the Robotis Open Manipulator X, the 3rd servo has a 90-degree offset, while all other servos have an offset of 0.

Type

The joint type is either Lever or Rotation. The base of a robot arm is generally set to Rotation because it rotates the entire arm side to side, while the remaining joints are Lever because they lift the robot arm up and down.

File Menu

Use the top File menu to load and save robot arm configurations. These files are saved with the ".kinematic" extension in the ARC documents folder.

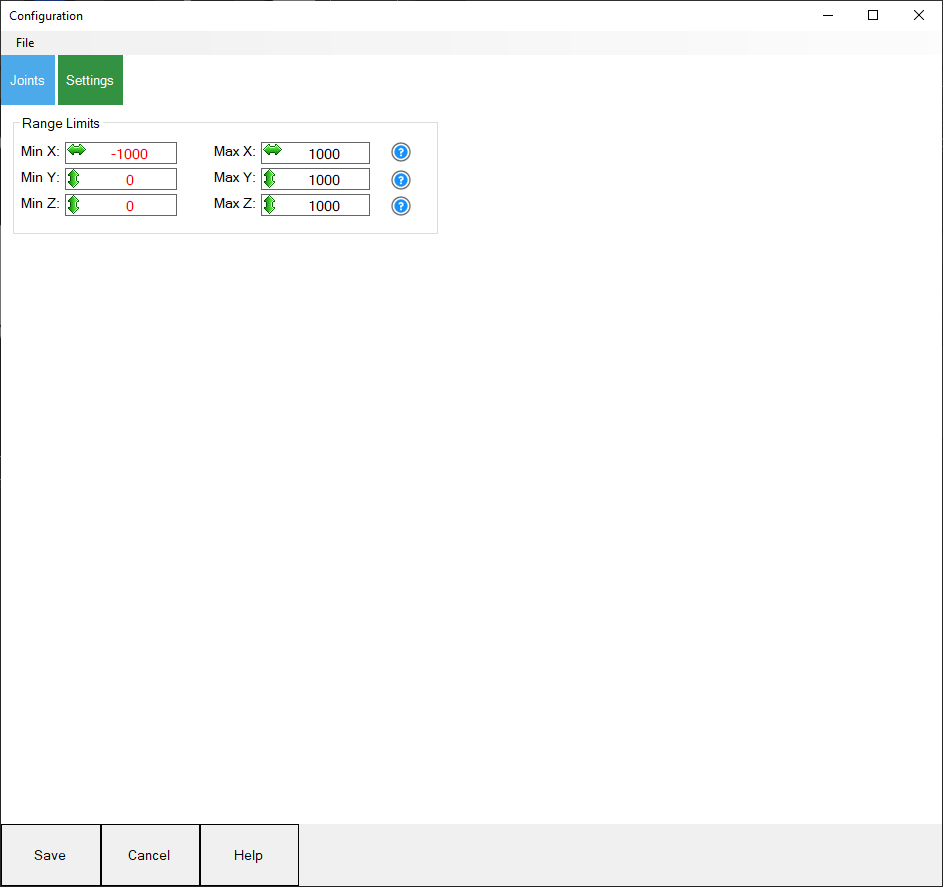

Range Limits

To protect the robot arm servos and end effector, you can configure limits on the Cartesian coordinates for each of the X, Y, and Z axes. Enter the limits; they are saved when you press the SAVE button in the configuration window.
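If your scripts generate targets (from a camera, a joystick, or a calculation), you may also want a script-side guard. The sketch below clamps a target to X/Y/Z limits that you copy into the script yourself. The function clampTarget and the example limit values are hypothetical. The sketch does not read the limits saved in the robot skill, and it makes no assumption about how the skill itself handles out-of-range targets.

```javascript
// Clamp a single value into [min, max].
function clamp(value, min, max) {
  return Math.max(min, Math.min(max, value));
}

// Hypothetical guard: clamp each axis of a target (cm) to your own limits.
function clampTarget(target, limits) {
  return {
    x: clamp(target.x, limits.x.min, limits.x.max),
    y: clamp(target.y, limits.y.min, limits.y.max),
    z: clamp(target.z, limits.z.min, limits.z.max)
  };
}

// Example limits in cm (illustrative only; mirror your configured values).
var limits = {
  x: { min: -20, max: 20 },
  y: { min: 1, max: 35 },
  z: { min: 5, max: 30 }
};

var safe = clampTarget({ x: 25, y: 0, z: 15 }, limits);
// In an ARC JavaScript script, the clamped values could then be sent with:
// ControlCommand("Inverse Kinematics Arm", "MoveTo", safe.x, safe.y, safe.z);
```

Here a target of (25, 0, 15) becomes (20, 1, 15). The first ControlCommand argument must match the robot skill's name in your project.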

Control Commands

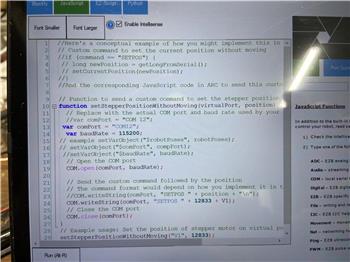

The MoveTo control command instructs the robot arm to move to a specific Cartesian coordinate. This JavaScript example moves the end effector to an X position of 0cm, a Y position of 10cm, and a Z position of 15cm. The available ControlCommand() commands can be viewed in the Cheat Sheet or by right-clicking in any code editor.

ControlCommand("Inverse Kinematics Arm", "MoveTo", 0, 10, 15);

Tips

Length Measurements

When using the configuration to create a robot arm definition, a few tips will help you understand how the visual representation relates to your robot arm. First, each joint length must be as accurate as possible. The distances are measured between the centers of adjacent joint shafts, which can be done with a digital caliper. The lengths are the most important values because the inverse and forward kinematics are calculated from them using trigonometry.

A drawing is an excellent place to start, as you can visualize each joint and its length measurements. This is an example of the well-documented Robotis Open Manipulator X robot arm.

When designing the robot, each joint and its offset can be mapped from such a drawing, as demonstrated here for the Robotis Open Manipulator X.

*Note: In the graphical configuration, design the robot arm as if it bends toward the right side of the editor.

Initialization

When initializing the robot arm at startup for the first time, it is recommended to programmatically move the arm into a known position using code or servo robot skills. This lets you confirm that the forward kinematics are calculated correctly and gives you a known position to move the arm into. Do not try to guess the 3D Cartesian coordinates before confirming that the kinematics are calculated correctly. The diagram below shows the robot arm moved into 180-degree positions, where the forward kinematic calculations correctly display the end effector coordinates. This is how you can verify that the arm is configured correctly and will not damage itself.
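A simple way to use a known pose is to compare the displayed coordinates with what that pose should produce. This hypothetical check assumes the known pose is the arm pointing straight up, that no joint has a sideways offset, and that Y is measured from the bottom of the first configured length. Under those assumptions, X and Z should read about 0 and Y should equal the sum of the configured lengths converted from mm to cm. The lengths in the example are illustrative.

```javascript
// Hypothetical: expected coordinates (cm) for a straight-up pose with no
// sideways offsets, given the configured lengths in mm.
function expectedStraightUp(lengthsMm) {
  var totalMm = 0;
  for (var i = 0; i < lengthsMm.length; i++) {
    totalMm += lengthsMm[i];
  }
  return { x: 0, y: totalMm / 10, z: 0 };
}

// True if every displayed axis is within toleranceCm of the expected value.
function matchesExpected(displayed, expected, toleranceCm) {
  return Math.abs(displayed.x - expected.x) <= toleranceCm &&
         Math.abs(displayed.y - expected.y) <= toleranceCm &&
         Math.abs(displayed.z - expected.z) <= toleranceCm;
}

// Illustrative lengths in mm (replace with your own measurements).
var expected = expectedStraightUp([77, 130, 124, 126]);
```

With these lengths, the expected Y is 45.7 cm. A displayed value outside your chosen tolerance suggests that a length, offset, or servo offset needs correcting.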

Testing

When your robot configuration is first defined, it may not be accurate, so moving the robot with this robot skill's coordinate adjustments is not advisable: the robot may move into an unwanted position that strains the servo motors. Instead, move the servos manually using a servo robot skill and watch the forward kinematics calculation. As the robot moves, the calculated end effector coordinates should match the arm's actual position. This will give you confidence that the robot arm is configured correctly.

If the robot arm moves into a position that strains the servos, press the PANIC button to release them. You will then have to revisit the configuration to correct the invalid settings.

Examples

Camera Pickup Object

You may have a camera mounted above an area that a robot arm can reach, looking down. The Camera Device robot skill can recognize objects, such as a special marker called a glyph. When the camera detects an object, it stores the object's X and Y position in pixels in the global variable storage.

We want the robot arm to reach and grab the object. This robot skill works with coordinates in centimeters (CM), while the camera uses pixels. You can use the JavaScript Utility.map() function to convert from pixels to CM. Remember that the exact conversion depends on how your robot arm is set up and how far the camera is from the area, so you'll need to fine-tune the CM coordinates for your specific setup.
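The pixel-to-CM conversion is a linear mapping from one range to another. The sketch below assumes Utility.map() behaves like a plain linear map without clamping; mapRange is a hypothetical stand-in so the arithmetic can be checked outside ARC, using the same ranges as the pickup script in this section.

```javascript
// Hypothetical stand-in for Utility.map(): linearly map value from
// [fromLow, fromHigh] to [toLow, toHigh] (no clamping).
function mapRange(value, fromLow, fromHigh, toLow, toHigh) {
  return toLow + (value - fromLow) * (toHigh - toLow) / (fromHigh - fromLow);
}

// camera X (0..320 px) -> arm X (-20..20 cm)
var armX = mapRange(160, 0, 320, -20, 20); // object at the image center
// camera Y (0..240 px) -> arm Z (30..15 cm)
var armZ = mapRange(120, 0, 240, 30, 15); // object halfway down the image
```

An object at the center of the image (160 px) maps to X = 0 cm, and one halfway down the image (120 px) maps to Z = 22.5 cm.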

The code below is a script for controlling a robot arm with a gripper and a camera. Here's a breakdown of what it does:

- Servo.setPosition(v15, 260); opens the gripper by moving the gripper servo on port v15 to position 260.

- sleep(1000); pauses the script for 1000 milliseconds (1 second) so the gripper can open fully.

- The next block calculates the X and Z coordinates for the robot arm by mapping the detected object's X and Y coordinates in the camera view (pixels) to the robot arm's coordinate system (CM).

- ControlCommand("Inverse Kinematics Mover", "MoveTo", x, 1, z); sends a command to the robot arm to move to the calculated X and Z coordinates. The Y coordinate is set to 1, a fixed height of 1cm above the table/surface. The first argument must match the name of this robot skill in your project.

- sleep(2000); pauses the script for 2000 milliseconds (2 seconds) so the robot arm can reach the target position.

- Servo.setPosition(v15, 190); closes the gripper by moving the gripper servo to a specific position. Customize this value for your object so the servo is not strained.

*Note: The specific values used for the servo positions and the mapping of the camera coordinates to the robot arm coordinates might need to be adjusted for your particular robot arm and camera setup.

The following could be pasted into the Camera Device's JavaScript "Tracking Start" script:

// Open gripper
Servo.setPosition(v15, 260);

// Pause while the gripper opens
sleep(1000);

// Get the X axis (left/right) based on the X axis of the detected object in the camera
var x = Utility.map(getVar("$CameraObjectX"), 0, 320, -20, 20);

// Get the Z axis (back/forth) based on the Y axis of the detected object in the camera
var z = Utility.map(getVar("$CameraObjectY"), 0, 240, 30, 15);

// Move the arm to the location of the detected object
// (the first argument must match this robot skill's name in your project)
ControlCommand("Inverse Kinematics Mover", "MoveTo", x, 1, z);

// Pause while the robot moves to the position
sleep(2000);

// Close gripper (fine-tune for your object size)
Servo.setPosition(v15, 190);

Camera Object Tracking Example

Imagine writing a program that controls a robot's hand (the part that grabs things) based on what a camera sees. In this case, let's say the camera image is 320 pixels across and 240 pixels up and down. We'll use the image to move the robot hand left and right (from -20 to 20 centimeters) and up and down (from 5 to 35 centimeters). We can also estimate how close an object is to the camera by looking at its size and mapping it to a distance from 20 to 30 centimeters.

The provided code is a continuous loop that uses a camera to detect an object and then moves the robot arm based on the object's position and size. The Utility.map function converts the object's position and size from the camera's coordinate system to the robot arm's coordinate system.

Here's a breakdown of what the code does:

- The while (true) loop causes the code to run indefinitely. This is common in robotics and real-time systems, where you want to continuously monitor sensors and adjust the system's behavior accordingly.

- The getVar function reads the current position and size of the detected object from the camera in the global variable storage. The $CameraObjectX, $CameraObjectY, and $CameraObjectWidth variables represent the object's horizontal position, vertical position, and size (width), all in pixels.

- The Utility.map function converts these values from the camera's coordinate system to the robot arm's coordinate system. For example, the z value is calculated from the object's width, with larger objects resulting in a larger z value. This moves the robot arm closer to larger objects and further from smaller objects.

- The ControlCommand function then moves the robot arm to the calculated position. The "Inverse Kinematics Mover" argument is the name of this robot skill in the project, and the "MoveTo" argument is the command to move to a specific position.

- The x, y, and z values calculated earlier are passed as arguments to the ControlCommand function. They represent the robot arm's desired position in its coordinate system (units are CM).

This code lets a robot arm follow an object tracked by the camera, or maintain a particular position relative to it. It can be placed in the Camera Device's "Tracking Start" script:

while (true) {
  // Get the X axis (rotation) based on the X axis of the detected object in the camera
  var x = Utility.map(getVar("$CameraObjectX"), 0, 320, -20, 20);

  // Get the Y axis (up/down) based on the Y axis of the detected object in the camera
  var y = Utility.map(getVar("$CameraObjectY"), 0, 240, 35, 5);

  // Get the Z axis (back/forth) based on the size of the detected object in the camera
  var z = Utility.map(getVar("$CameraObjectWidth"), 40, 100, 20, 30);

  // The first argument must match this robot skill's name in your project
  ControlCommand("Inverse Kinematics Mover", "MoveTo", x, y, z);

  // Short pause so the loop does not flood the robot skill with commands (tune as needed)
  sleep(100);
}
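If Utility.map() is a plain linear mapping (an assumption; whether it clamps is not stated here), an object width outside the 40 to 100 pixel window pushes z beyond 20 to 30 cm. A 150 pixel width, for example, would map to about 38.3 cm. The hypothetical clampedMap below clamps the input to its range first, and it also handles a reversed input range.

```javascript
// Hypothetical helper: clamp value into the input range, then map it
// linearly to the output range, so the result never leaves [toLow, toHigh].
function clampedMap(value, fromLow, fromHigh, toLow, toHigh) {
  var lo = Math.min(fromLow, fromHigh);
  var hi = Math.max(fromLow, fromHigh);
  var v = Math.max(lo, Math.min(hi, value));
  return toLow + (v - fromLow) * (toHigh - toLow) / (fromHigh - fromLow);
}

var zInside = clampedMap(70, 40, 100, 20, 30); // width inside the window
var zTooWide = clampedMap(150, 40, 100, 20, 30); // width above 100 px is held at 30 cm
```

In the loop, you could compute z with clampedMap(getVar("$CameraObjectWidth"), 40, 100, 20, 30) instead of the Utility.map() call.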


Very nice! This one will come in handy along with an array.

Version 5 has been updated to swap X and Z axis because they were reversed. X should be horizontal and Z should be depth.

Very cool. The Ez-robot_arm works quite well with this. Is there an easy way to connect a joystick?

while (true) loop, read joystick position, use ControlCommand to move robot arm? That's probably the easiest.

Great skill and very interesting. I installed it for my customized arm and it seems it is working well. I will dedicate some time to fine tune and verify. It is mounted in a different position so probably I will need to make some changes in the coordinate system. Thanks.

Noice. I think for mounting sideways or whatever, just treat the x y z differently. That’s the easiest way to do it. I thought of adding an option, but it starts to get confusing for the interface.

Easier just to picture the arm sideways and the axis rotated.

Hi, is it possible to include speed/acceleration variables in the ControlCommand? Also limits on the coordinates to avoid damage to the arm? Thanks

Speed and acceleration are servo-specific parameters. Use the speed and acceleration script commands to set them for the servos; you can do that in an initialization script. I’m on my phone so I can’t paste a link, but look in the support section under your desired scripting language, in the servo category. There you will see the commands.

Remember the servos you’re using must support velocity and acceleration.

The coordinate limits can be added.