Immersive Meta Quest VR control for Synthiam ARC robots: a stereoscopic camera view, servos mapped to hands/controllers, and a built-in remote desktop.

How to add the Oculus Quest Robot robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Virtual Reality category tab.

- Press the Oculus Quest Robot icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Oculus Quest Robot robot skill.

How to use the Oculus Quest Robot robot skill

Use the Oculus Meta Quest VR headset to control a real robot and view its camera with your hands or controllers. Servos can be attached to finger movements, hand movements, controllers, and buttons. Additionally, a built-in remote desktop lets you control ARC without removing the VR headset. To control a robot, this robot skill requires the Oculus Quest Robot app to be installed on the Meta Quest 2 headset.

Transform your robot interaction experience with the Oculus Quest robot skill for Synthiam ARC. This innovative skill offers a hands-on approach to robotics, with immersive control of cameras and servos plus remote desktop access to ARC. It points toward a future where virtual reality enhances remote work and safety in hazardous environments.

Why Control Robots From VR?

While it is exciting to use this robot skill and have a robot mimic your movements with VR accessories, this is also a preview of future technology in the workplace. Robots are physically capable of many jobs, but the computing power to fully automate those tasks is not yet available. VR technology, however, is already advanced enough for humans to control robots remotely and accomplish tasks. For example, warehouse workers could work from the comfort and safety of their homes while improving outcomes by remotely controlling robots. As artificial intelligence improves, robots will require less human intervention, but humans will still be needed to help a robot when it gets stuck. This means one person can oversee many robots, which complements Synthiam's approach with our Exosphere product.

Imagine people working from home while remotely operating robots in dangerous locations, such as mines, disaster recovery sites, or space. With Synthiam's agnostic approach, our platform can program any robot. With the addition of virtual reality remote control, we are saving lives and improving job safety. There is no better time to familiarize yourself with this technology at home, school, or the workplace.

Downloading Quest Robot Controller App

Get the Oculus Quest Robot App: Oculus Quest Robot.apk (v0.10 June 25, 2022).

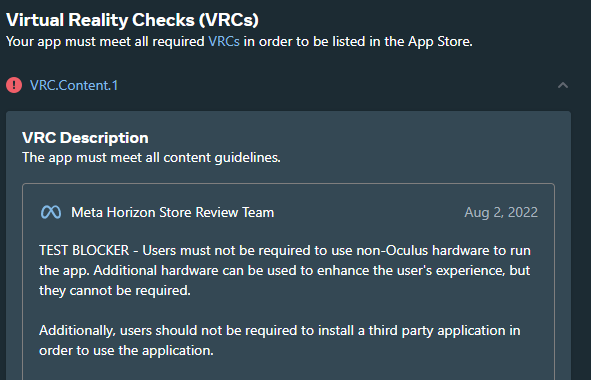

Note: Because of complications with Meta's review process, this app must be side-loaded while we work with Meta to have it accepted into their store. Meta currently disallows this app because it connects to a PC. We're working to convince Meta that this useful VR app should pass their review process so that students, robot builders, and inventors can use it. Stay tuned!

Meta Refuses To Accept Our App

We apologize for having to side-load the app rather than making it available in the Oculus app store. No matter how hard we plead, Meta will not accept our app into the store. The Meta Oculus Store rejects our Oculus Quest Robot App because they do not like that it controls physical hardware. We continue to appeal through their process, but they do not respond, and we keep receiving the same rejection.

Stereoscopic Camera View

This robot skill and the Oculus Quest VR app optionally support two cameras (left and right eye), which provide the user with a 3D stereoscopic view. If two cameras are not required, a single camera can be used instead. The position of the two cameras is critical to prevent eye strain for the viewer. It is generally advised to measure the distance between your pupils and use that measurement as the spacing between the centers of the two camera lenses.

Using Oculus Meta Quest App with Virtual Reality

When the Oculus Meta Quest robot control app is loaded, you will find yourself on the bridge of a spaceship. The left controller moves your view around the ship so you can explore. The right controller interacts with the menu used to connect to the robot.

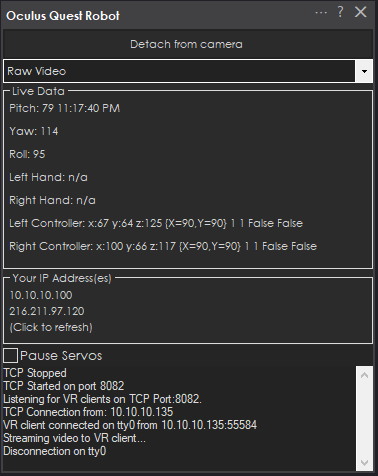

Enter the IP address of the PC running Synthiam ARC. The robot skill shows public and private IP addresses you can enter in this field. If you use this robot skill on your local network, use the local IP address. If you connect over the internet through a port forwarded on your router, use the public IP address.
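If you are unsure which of the listed addresses belongs in this field, the local-versus-public rule can be checked with Python's standard `ipaddress` module. This is a minimal sketch: the helper name `address_scope` is hypothetical and not part of ARC, and the example addresses are placeholders.

```python
import ipaddress

def address_scope(addr: str) -> str:
    """Classify an address shown by the robot skill (hypothetical helper, not ARC's API).

    Returns "local" for private LAN addresses (use these on your own network)
    and "public" otherwise (use these when connecting through a forwarded router port).
    """
    ip = ipaddress.ip_address(addr)  # raises ValueError for malformed input
    return "local" if ip.is_private else "public"

# Placeholder addresses for illustration only.
for candidate in ("192.168.1.42", "10.0.0.7", "8.8.8.8"):
    print(candidate, "->", address_scope(candidate))
```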

The port can be edited; its default value is 8082.

Pressing the connect button will load the robot control view.
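Before putting on the headset, you can confirm that the ARC PC accepts connections on that port. This sketch assumes the robot skill listens for ordinary TCP connections on the port shown (8082 by default); `arc_port_reachable` is a hypothetical helper, and a `True` result only shows that something is listening, not that the robot skill will accept the app.

```python
import socket

def arc_port_reachable(host: str, port: int = 8082, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Hypothetical helper. A successful connection only shows that something is
    listening on the port; it does not prove the robot skill will accept the app.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or unknown host
        return False

if __name__ == "__main__":
    # Replace 127.0.0.1 with the IP address the robot skill displays.
    print(arc_port_reachable("127.0.0.1"))
```

If the check fails over the internet but succeeds on the local network, the router's port forwarding is the usual suspect.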

About The Oculus Meta Quest 2

The Oculus Quest 2 (marketed since November 2021 as Meta Quest 2) is a low-cost virtual reality (VR) headset developed by Facebook Reality Labs (formerly Oculus). It is the successor to the company's previous headset, the Oculus Quest. The Quest 2 was unveiled on September 16, 2020, during Facebook Connect 7.

As with its predecessor, the Quest 2 can run as a standalone headset with an internal, Android-based operating system that does not require a PC or high-cost graphics card. It is a refresh of the original Oculus Quest with a similar design but lighter weight, updated internal specifications, a display with a higher refresh rate and per-eye resolution, and updated Oculus Touch controllers.

Main Window

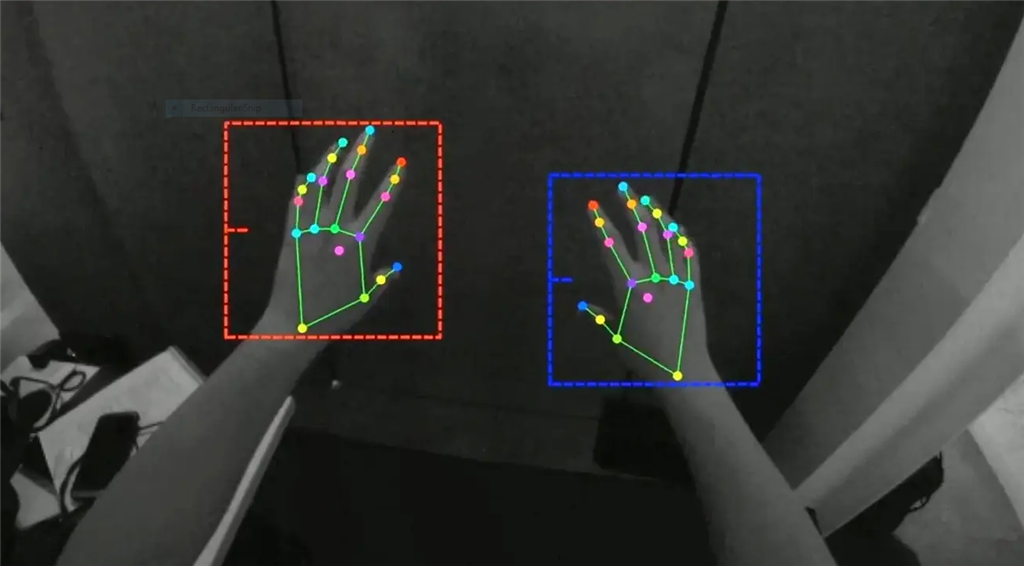

The main window displays real-time tracking information from the Quest input devices. Either the controllers or hand tracking can be used. If hand tracking is used, the position of each finger (starting with the thumb) and of the hand is displayed. If controllers are used, the controller location, analog stick, buttons, and trigger positions are displayed.

Controller Inputs

Both hand tracking and controller tracking can control their respective servos. When the controllers are set aside and hand tracking is detected, the configured hand servos are enabled. When the controllers are picked up and used, the hand servos are disabled and the controller servos are enabled. This switching happens automatically based on the control method you use, so you can change methods on the fly.

Configuration

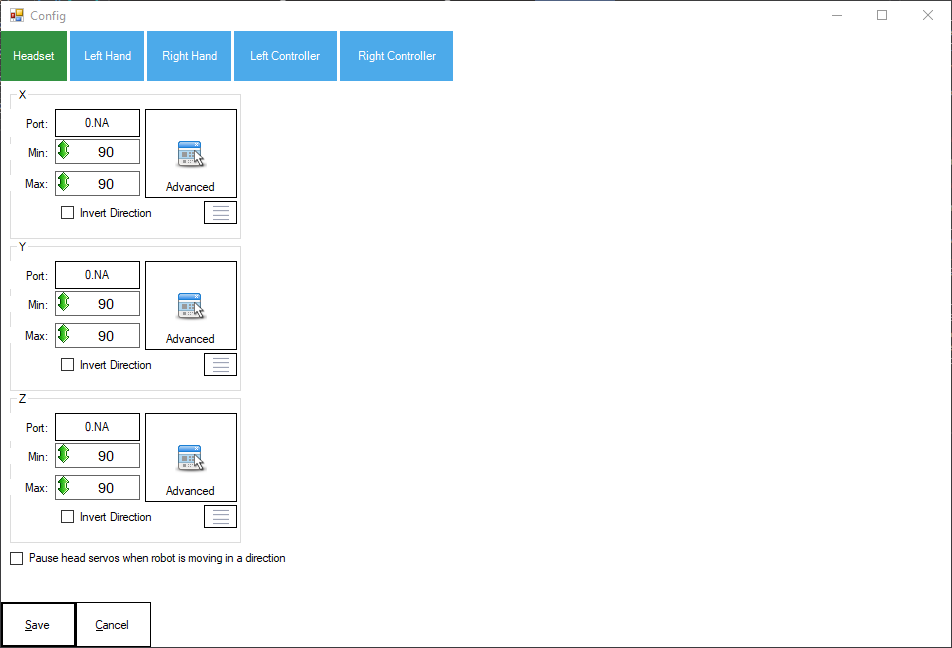

Press the configure button on the robot skill to view its configuration screen.

Headset

The servos for X (horizontal), Y (vertical), and Z (tilt) can be assigned. These servos will move with your headset to look around. Generally, you will want these servos attached to a camera so you can look around at the environment with the headset.
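As a rough illustration of how a headset angle could become a servo position, the sketch below offsets a center position by the angle in degrees and clamps the result. The 1 to 180 range with 90 as center, the one-position-per-degree scaling, and the `angle_to_servo` name are assumptions for illustration, not the robot skill's actual implementation.

```python
def angle_to_servo(angle_deg: float, center: int = 90, min_pos: int = 1,
                   max_pos: int = 180, invert: bool = False) -> int:
    """Map a headset angle in degrees (0 = looking straight ahead) to a servo position.

    Assumed convention: one degree of head motion moves the servo one position,
    centered on `center` and clamped to [min_pos, max_pos].
    """
    if invert:  # for servos mounted so that they turn the opposite way
        angle_deg = -angle_deg
    position = round(center + angle_deg)
    return max(min_pos, min(max_pos, position))

# Hypothetical headset pose in degrees: X (horizontal), Y (vertical), Z (tilt).
x_deg, y_deg, z_deg = 30.0, -15.0, 5.0
print([angle_to_servo(a) for a in (x_deg, y_deg, z_deg)])  # [120, 75, 95]
```

Clamping matters because a person can turn their head further than a pan/tilt bracket can rotate.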

An option pauses the headset servos while any Movement Panel is moving the robot. When checked, the headset servos are only usable when the robot is not moving. This helps reduce motion sickness while the robot is moving.
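The pause option amounts to a per-frame gate on the headset servos. This sketch assumes the servos simply hold the last positions sent while paused; `next_headset_positions` is a hypothetical name, and how the robot skill detects Movement Panel motion is not shown.

```python
from typing import Tuple

Positions = Tuple[int, int, int]  # X, Y, Z headset servo positions

def next_headset_positions(requested: Positions, last_sent: Positions,
                           robot_moving: bool, pause_enabled: bool = True) -> Positions:
    """Choose what to send to the headset servos for one frame.

    Assumption for this sketch: while paused, the servos hold the last positions
    sent, so the camera stays steady until the robot stops moving.
    """
    if pause_enabled and robot_moving:
        return last_sent
    return requested

print(next_headset_positions((120, 75, 95), (90, 90, 90), robot_moving=True))   # (90, 90, 90)
print(next_headset_positions((120, 75, 95), (90, 90, 90), robot_moving=False))  # (120, 75, 95)
```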

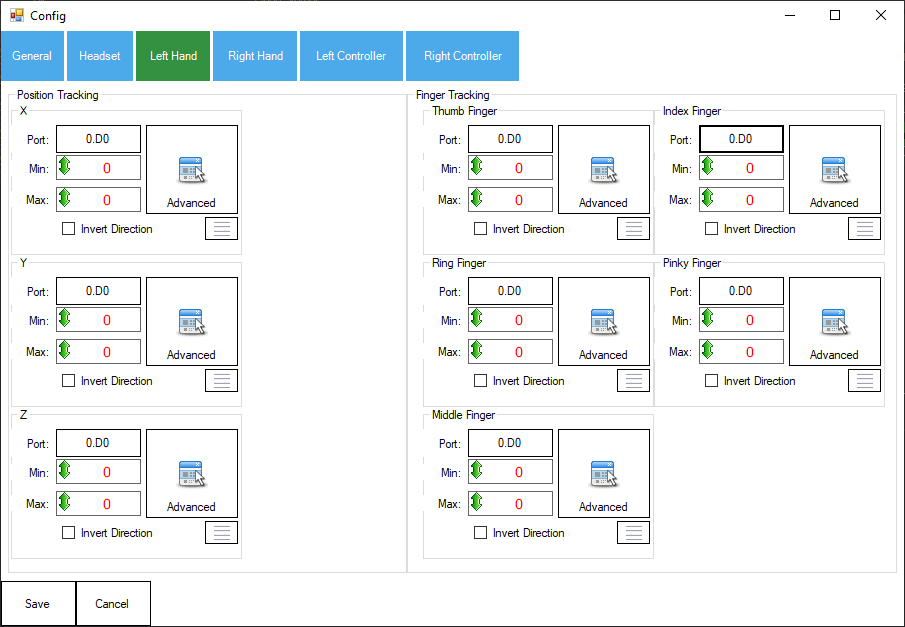

Left Hand & Right Hand

The position tracking group will bind servos to the hand's position. The X is horizontal, Y is vertical, and Z is the distance outward from your body.

The finger tracking group allows assigning servos to each finger. Curling a finger toward a fist moves its assigned servo. This is useful when using a robot hand, such as the InMoov, to control each finger individually.
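One way to picture finger tracking is as a linear interpolation between an "open" and a "closed" servo position for each finger. This sketch assumes the tracking provides a normalized curl value per finger (0.0 straight, 1.0 fist); the `curl_to_servo` name and the per-finger ranges are hypothetical.

```python
def curl_to_servo(curl: float, open_pos: int, closed_pos: int) -> int:
    """Map a finger curl value to a servo position by linear interpolation.

    Assumes curl is normalized: 0.0 = finger straight, 1.0 = fully closed fist.
    Values outside that range are clamped.
    """
    curl = max(0.0, min(1.0, curl))
    return round(open_pos + (closed_pos - open_pos) * curl)

# Hypothetical per-finger (open, closed) positions; a reversed pair handles a
# servo mounted so that it closes the finger by turning the other way.
finger_ranges = {"thumb": (10, 170), "index": (10, 170), "middle": (170, 10)}
tracked_curl = {"thumb": 0.0, "index": 0.5, "middle": 1.0}

positions = {name: curl_to_servo(tracked_curl[name], *finger_ranges[name])
             for name in finger_ranges}
print(positions)  # {'thumb': 10, 'index': 90, 'middle': 10}
```

Storing an (open, closed) pair per finger rather than a fixed direction keeps mirrored left and right robot hands easy to configure.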

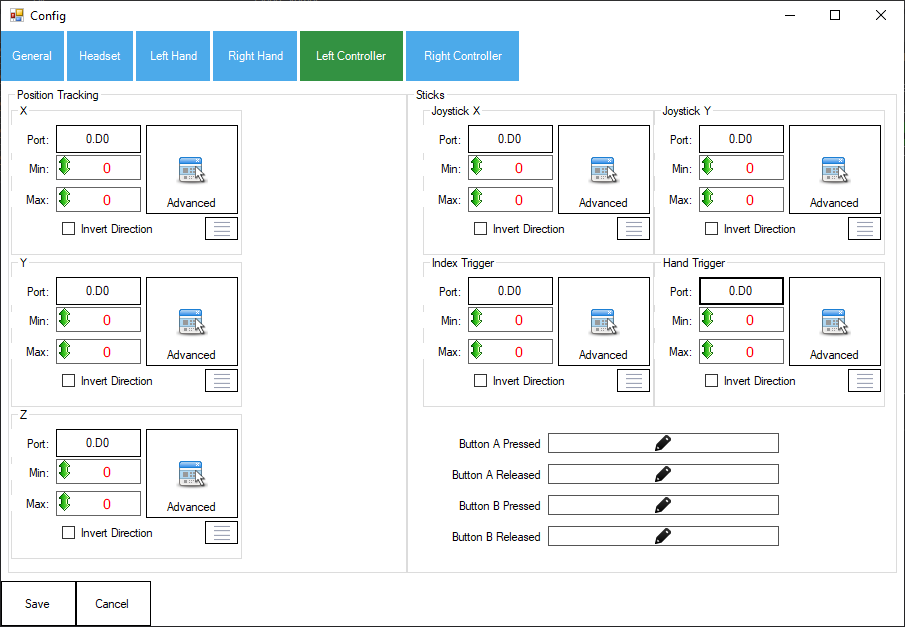

Left Controller & Right Controller

The position tracking group binds servos to the controller's position. The X is horizontal, Y is vertical, and Z is the distance outward from your body.

Triggers and analog sticks can also control servos. The triggers are the index trigger and the hand trigger. The analog stick for each controller can be assigned servos.
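Analog sticks rarely rest at exactly zero, so a small deadzone is a common way to keep a stick-driven servo from jittering. This is a sketch under assumptions: the stick axis is taken to report -1.0 to 1.0, and the 10 to 170 range, the 0.1 deadzone, and the `stick_to_servo` name are illustrative choices, not documented settings of this robot skill.

```python
def stick_to_servo(axis: float, min_pos: int = 10, max_pos: int = 170,
                   deadzone: float = 0.1) -> int:
    """Map an analog stick axis (-1.0 .. 1.0) to a servo position.

    Small deflections inside `deadzone` are treated as centered so a resting
    stick does not make the servo jitter. Range and deadzone are assumed values.
    """
    axis = max(-1.0, min(1.0, axis))
    if abs(axis) < deadzone:
        axis = 0.0
    t = (axis + 1.0) / 2.0  # rescale -1..1 to 0..1
    return round(min_pos + (max_pos - min_pos) * t)

for axis in (-1.0, -0.5, 0.05, 0.5, 1.0):
    print(axis, stick_to_servo(axis))
```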

Scripts can be assigned to the A and B buttons when pressed or released.
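Separate "pressed" and "released" scripts imply edge detection: a script should run once when the button state changes, not on every frame the button is held. This sketch assumes button states arrive once per frame; the `ButtonEdges` class and its callbacks are hypothetical stand-ins for launching the scripts configured in ARC.

```python
from typing import Callable, Dict

class ButtonEdges:
    """Turn per-frame button states into press and release events.

    A script attached to "pressed" should run once per press, not on every
    frame the button is held down, so only state changes fire callbacks.
    """

    def __init__(self, on_press: Callable[[str], None],
                 on_release: Callable[[str], None]) -> None:
        self.on_press = on_press
        self.on_release = on_release
        self.previous: Dict[str, bool] = {}

    def update(self, states: Dict[str, bool]) -> None:
        for button, down in states.items():
            was_down = self.previous.get(button, False)
            if down and not was_down:
                self.on_press(button)
            elif was_down and not down:
                self.on_release(button)
            self.previous[button] = down

events = []
edges = ButtonEdges(lambda b: events.append(f"{b} pressed"),
                    lambda b: events.append(f"{b} released"))
frames = [{"A": False, "B": False}, {"A": True, "B": False},
          {"A": True, "B": True}, {"A": False, "B": True}]
for frame in frames:
    edges.update(frame)
print(events)  # ['A pressed', 'B pressed', 'A released']
```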

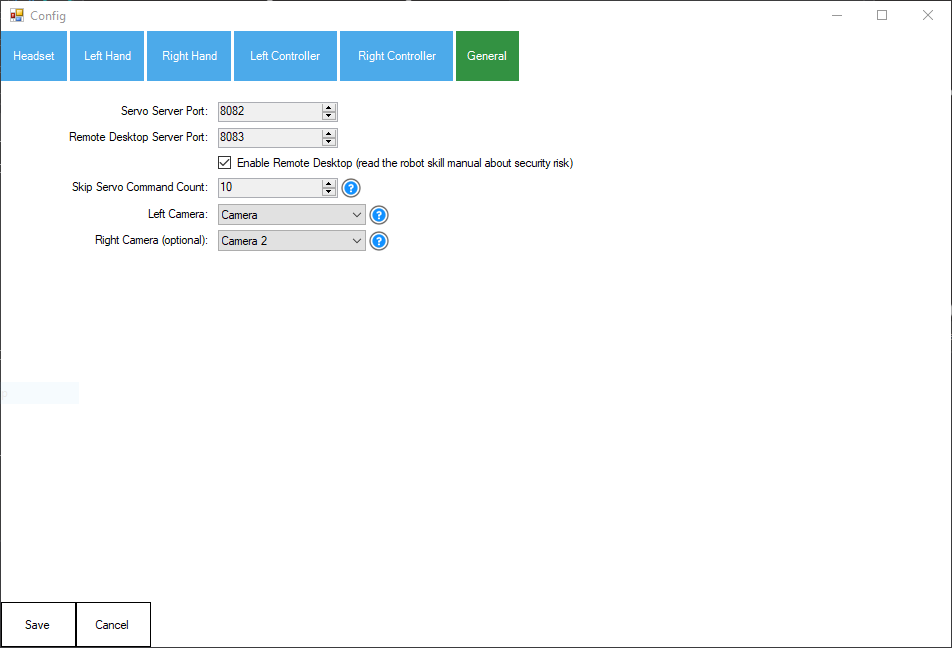

1) The TCP ports for the Servo Server and Remote Desktop are read-only and cannot be changed.

2) Enable/disable the remote desktop feature. This feature has no password protection, so be cautious if you enable it. Do not enable this feature on public networks.

3) The number of frames to skip when transmitting servo positions. Transmitting too many servo positions can cause the EZB to brown out if its power supply cannot keep up; skipping frames reduces how often positions are sent.

4) Left camera (or the single camera if using only one camera).

5) Right camera (optional if using only the left/single camera).
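The frame-skip setting can be read as "send one frame, then skip N". This sketch assumes that interpretation; the `FrameSkipper` class is hypothetical and only shows the counting, not how the robot skill transmits positions.

```python
class FrameSkipper:
    """Send servo positions on one frame, then skip `frames_to_skip` frames."""

    def __init__(self, frames_to_skip: int) -> None:
        if frames_to_skip < 0:
            raise ValueError("frames_to_skip must be >= 0")
        self.period = frames_to_skip + 1
        self.counter = 0

    def should_send(self) -> bool:
        send = self.counter == 0
        self.counter = (self.counter + 1) % self.period
        return send

skipper = FrameSkipper(frames_to_skip=2)
sent = [frame for frame in range(9) if skipper.should_send()]
print(sent)  # [0, 3, 6]
```

As a worked example, if the app produced one update per frame at 72 frames per second (an assumption), skipping 2 frames would leave 72 / 3 = 24 servo updates per second, a third of the traffic to the EZB.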

Remote Desktop

The remote desktop lets you move the mouse with the right controller. Use the trigger to left-click and the hand trigger (grab) to right-click. The remote desktop feature does not provide authentication, so only use it on private networks.

Press the menu button on the left controller to exit the remote desktop mode.

The analog stick on the left controller can move around the floating screenshot window.
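Pointing at the floating desktop window implies a mapping from where the right controller aims to a desktop pixel. This is a hypothetical sketch: the normalized (u, v) hit point, the `pointer_to_pixel` and `click_for` names, and the 1920 x 1080 resolution are assumptions, not how the app is documented to work.

```python
from typing import Optional, Tuple

def pointer_to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    """Convert where the controller ray hits the floating desktop window into a pixel.

    (u, v) is the hit point normalized to the window: (0, 0) is the top-left
    corner and (1, 1) the bottom-right. Out-of-range values are clamped.
    """
    u = max(0.0, min(1.0, u))
    v = max(0.0, min(1.0, v))
    x = min(width - 1, int(u * width))
    y = min(height - 1, int(v * height))
    return x, y

def click_for(trigger_down: bool, grip_down: bool) -> Optional[str]:
    """Trigger maps to a left click; hand trigger (grab) maps to a right click."""
    if trigger_down:
        return "left"
    if grip_down:
        return "right"
    return None

print(pointer_to_pixel(0.5, 0.5, 1920, 1080), click_for(True, False))  # (960, 540) left
```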

Robot Control

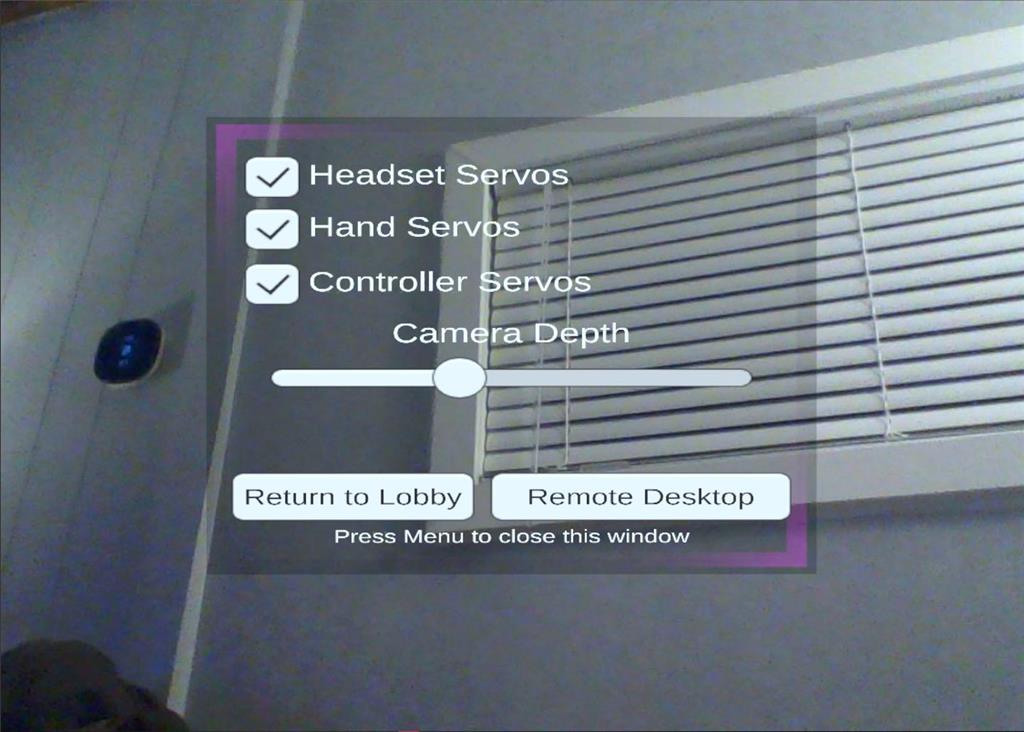

When viewing the robot control, the menu button on the left controller will load the menu to enable and disable specific servos.

In the popup menu, you can adjust the camera depth for comfort.

The return to lobby option will do just that: return you to the main menu lobby.

If it is enabled in the robot skill configuration, the remote desktop lets you control ARC remotely through the VR headset.

Hardware Info

We are waiting for the app to be available in the Oculus store. A link will be provided in the robot skill manual above when approved.

The Oculus Quest Robot App can be downloaded on the Oculus Quest 2 headset from here: https://www.oculus.com/experiences/quest/5090145164400422

Also, see the link in the manual description above.

Could anyone who has one of these shoot a video? I would love to see it in action before I spend $620 on another VR headset. Is the 128GB headset sufficient, or do you need 256GB?

I don’t think the games will impress you if you come from Steam VR with a real GPU. The 128GB is sufficient because the games are tiny for it. It’s about as powerful as a 2015 iPhone doing VR, IMO. The games are very cartoony and do not have much detailed texture.

But, as for controlling robots, it’s super great - probably the best. The hand tracking is marvelous. No controllers are needed - and no computer is needed (other than for arc).

There are a few other features we are adding soon, such as Remote Desktop into ARC so you can control it from the headset. As it is now, it’s super stable and surprisingly impressive as an all-contained unit.

I should also add that finger tracking is perfect for InMoov owners. I think that’s super awesome.

OK, thanks. I will wander over to Best Buy in the morning and get one. Hopefully I can finally throw out my Wii controllers and the Vuzix 920VR I use to control my robot (I think you and I are the only people who still own a Vuzix anyway). Now I just need to upload my robot to the metaverse...

Oh gosh, that's funny - I forgot about the Vuzix! Yeah, that can be donated, haha. It'll be pretty amazing once you can control ARC from this headset. I'm excited about it.

I think there's also a scan ability being added to the app, so it can scan to find ARC. I haven't seen the feature list in depth, but it's pretty incredible what Synthiam is doing with it.

Definitely excited to start using this! I'll download it tonight and let you know how it goes!

I have the 128GB version and enjoy the games on it. Moss is an amazing game on there, and I enjoy playing Walkabout Mini Golf too.

Beat Saber is a good game if you're looking for exercise! I don't think I've ever not broken a sweat playing that game.

EDIT: After looking more closely, it seems that I'm hoping for way too much out of this. It looks like one can't actually edit ARC using a virtual keyboard and mouse, only move servos. I've been watching way too much Star Trek Discovery lately. LOL.