8. Vision

Let's choose your robot's vision system! If your robot does not have a camera, skip to the next step.

A camera gives a robot navigation, tracking, and interactive capabilities. Synthiam ARC aims to make robot programming easy, and that includes computer vision tracking. ARC includes a camera robot skill that connects to WiFi, USB, or video capture devices. The camera device skill includes tracking types for objects, colors, motion, glyphs, faces, and more. You can add further tracking types and computer vision modules from the skill store.

Choose the Camera Type

A USB camera connects directly to a computer with a USB cable. You can only use this type of camera in an embedded computer configuration, because the USB cable tethers the camera to the PC. You can use any USB camera in ARC. Advantages of USB cameras include high resolution and a higher framerate.

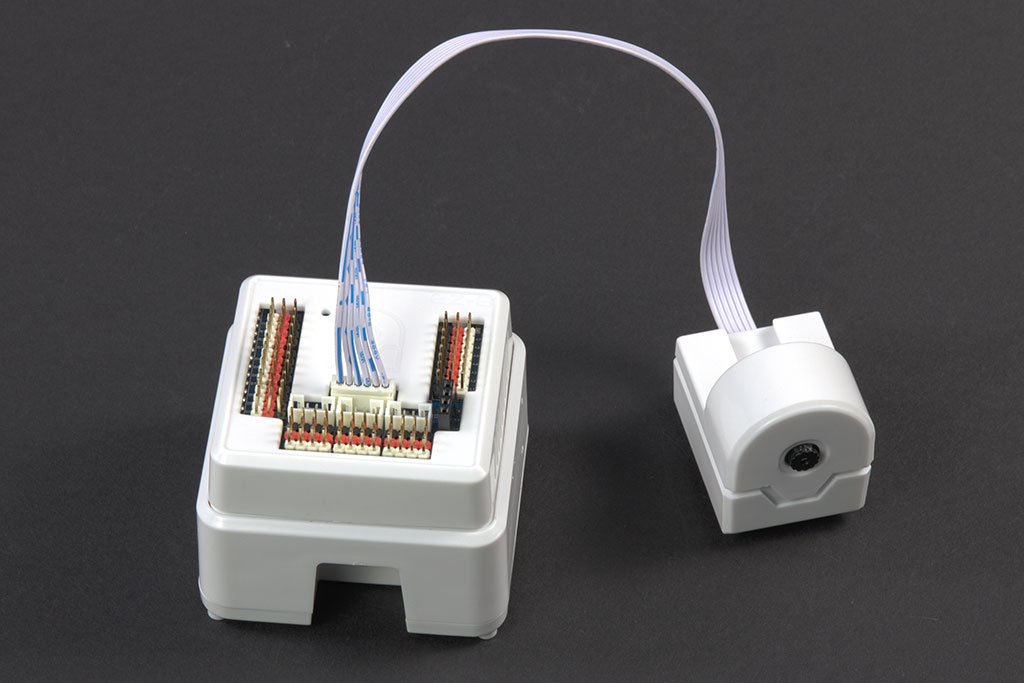

A wireless camera connects to a PC/SBC over a WiFi connection. Generally, this approach is only used in remote computer configurations. Few I/O controllers support WiFi camera transmission, because latency can limit the resolution and radio interference can make the video link unreliable. For a wireless camera application, the most popular I/O controllers are the EZ-Robot EZ-B v4 and IoTiny.

Add Camera Device Robot Skill

Whichever camera type you choose, the robot skill needed to connect to it is the Camera Device Robot Skill. Add this robot skill to connect to the camera and begin viewing the video feed. The manual for this robot skill describes many options for tracking objects.

Additional Computer Vision Robot Skills

Now that you have the camera working on your robot, you may wish to add more computer vision robot skills. Computer vision is a general term for extracting and processing information from images. These robot skills use artificial intelligence and machine learning to track objects, detect colors, and recognize faces. There are even robot skills that detect facial expressions to determine your mood.
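
To make "extracting information from images" more concrete, here is a minimal Python sketch of the idea behind color tracking: find the pixels close to a target color and report the center of that region. This is an illustration only, not how ARC or its Camera Device robot skill is implemented. The function names, the tolerance value, and the tiny hand-made frame are all assumptions chosen for the example.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def is_target_color(pixel: Pixel, target: Pixel, tolerance: int) -> bool:
    """Return True when every channel is within `tolerance` of the target."""
    return all(abs(p - t) <= tolerance for p, t in zip(pixel, target))


def find_color_centroid(
    frame: List[List[Pixel]], target: Pixel, tolerance: int = 40
) -> Optional[Tuple[float, float]]:
    """Return the (x, y) center of all matching pixels, or None if none match."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if is_target_color(pixel, target, tolerance):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


# Hypothetical 4x3 frame: black background with a 2x2 red patch.
BLACK, RED = (0, 0, 0), (255, 0, 0)
frame = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, BLACK, RED, RED],
    [BLACK, BLACK, RED, RED],
]
centroid = find_color_centroid(frame, target=RED)
print(centroid)  # (2.5, 1.5)
```

A robot could compare the reported center with the middle of the frame to decide which way to turn its camera. Real tracking has to cope with lighting changes and noise, which a fixed per-channel tolerance like this one does not handle.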