T265 VSLAM for ARC: SLAM-based mapping and precise way-point navigation, low-power tracking, and NMS telemetry.

How to add the Intel Realsense T265 robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Navigation category tab.

- Press the Intel Realsense T265 icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Intel Realsense T265 robot skill.

How to use the Intel Realsense T265 robot skill

With its small form factor and low power consumption, the Intel RealSense Tracking Camera T265 is designed to provide precise tracking for your robot. This user-friendly ARC robot skill provides an easy way to use the T265 for way-point navigation.

The T265 combined with this robot skill provides your robot with a SLAM (Simultaneous Localization and Mapping) solution. It allows your robot to construct a map of an unknown environment while simultaneously keeping track of its own location within that environment. Before the days of GPS, sailors navigated by the stars, using their movements and positions to find their way across oceans. VSLAM (Visual SLAM) uses a combination of cameras and an Inertial Measurement Unit (IMU) to navigate in a similar way, using visual features in the environment to track its way around unknown spaces accurately. All of these complicated features are taken care of for you in this ARC robot skill.

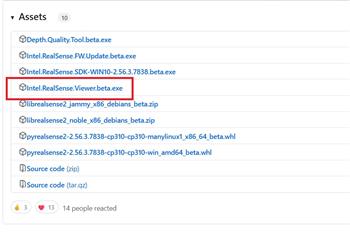

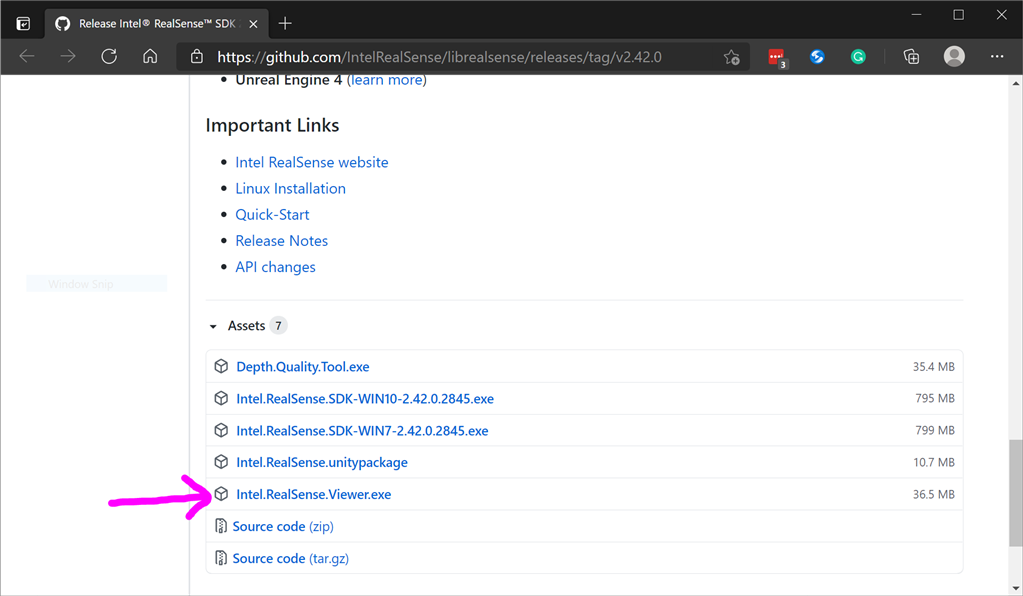

Update Firmware

The T265 may require a firmware update.

Visit the RealSense GitHub releases page, scroll to the bottom of the page, and download and install Intel.Realsense.Viewer.exe from here: https://github.com/IntelRealSense/librealsense/releases/latest

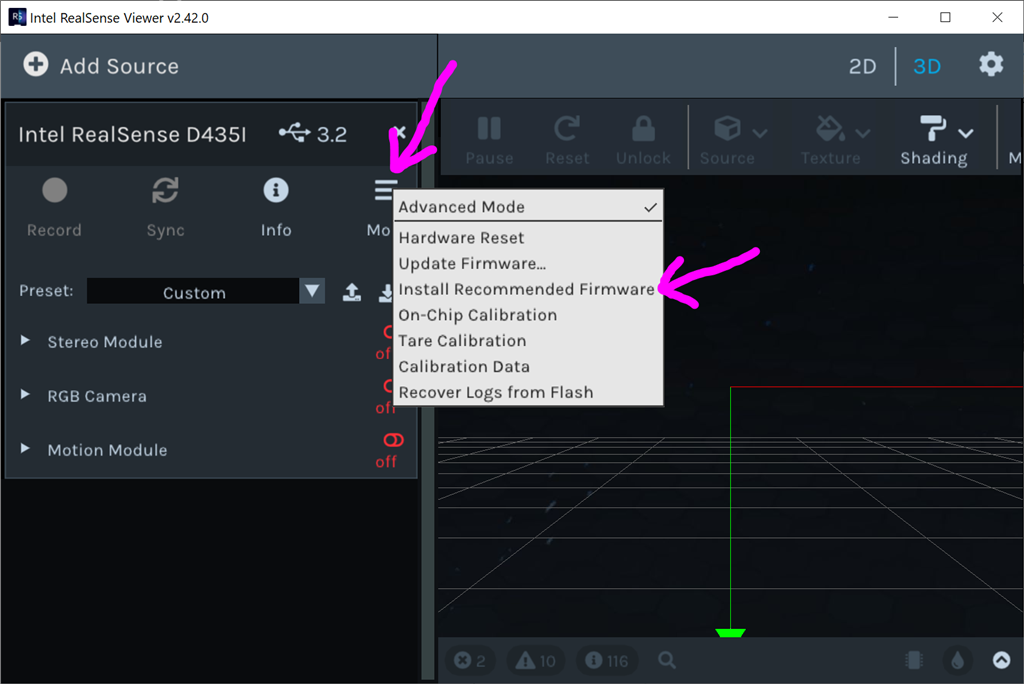

In the RealSense Viewer, click the hamburger (settings) icon and select Install Recommended Firmware.

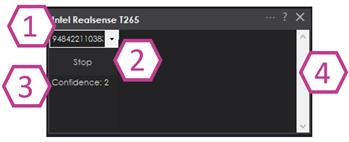

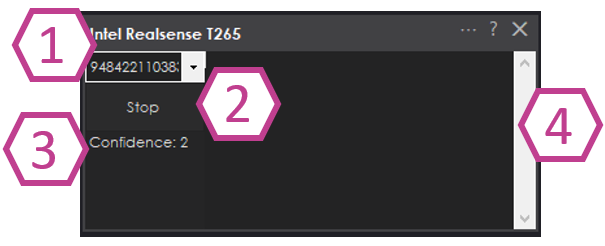

Robot Skill Window

The skill has a very minimal interface because it pushes data into the NMS and is generally used by other robot skills (such as The Navigator).

1) Drop-down to select the RealSense device by serial number. This is useful if there are multiple devices on one PC.

2) START/STOP button for the Intel T265 connection.

3) The tracking confidence, between 0 (lowest) and 3 (highest). In a brightly lit room with many points of interest (not just white walls), the tracking confidence will be high. It will be low if the room does not have enough light and/or detail for the sensor to track.

4) Log text display for errors and status messages.
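The tracking confidence is the natural value to check before trusting a position. Below is a minimal Python sketch, assuming your own script can read the 0 to 3 value. The level names follow librealsense's pose confidence levels (0 failed, 1 low, 2 medium, 3 high), which this skill's display presumably mirrors; the function name and the MEDIUM threshold are hypothetical choices, not part of the skill.

```python
from enum import IntEnum


class TrackerConfidence(IntEnum):
    """Confidence levels as reported by librealsense (0 = lowest, 3 = highest)."""
    FAILED = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def should_pause_navigation(confidence: int,
                            minimum: TrackerConfidence = TrackerConfidence.MEDIUM) -> bool:
    """Return True if the robot should stop trusting the position and pause.

    The MEDIUM default is an arbitrary example threshold (hypothetical),
    not a value defined by the ARC robot skill.
    """
    if not 0 <= confidence <= 3:
        raise ValueError(f"confidence must be 0..3, got {confidence}")
    return confidence < minimum


# Hypothetical usage: a dim room with blank walls drops the value.
for level in TrackerConfidence:
    print(level.name, "pause" if should_pause_navigation(level) else "ok")
```

Pausing rather than continuing on a low value keeps the robot from driving on a position estimate the sensor itself does not trust.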

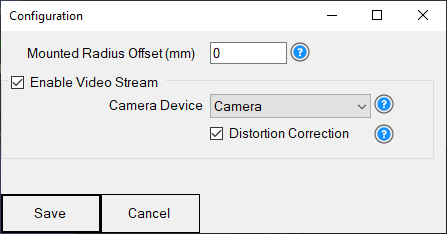

Config Menu

1) Mounted Radius Offset (mm) is the distance in mm of the T265 from the center of the robot. A negative number is toward the front of the robot, and a positive number is toward the rear. The sensor must face forward (0 degrees) toward the front of the robot and must not be offset to the left or right of the robot's center.

2) Enable Video Stream sends the black-and-white fisheye video from the T265 to the selected camera device. The selected Camera Device robot skill must have Custom specified as its input device, and the camera device must be started to view the video.

3) Distortion Correction uses a real-time algorithm to correct the fisheye lens distortion. This isn't always needed and is very CPU intensive.
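The Mounted Radius Offset sign convention is easy to get backwards. This Python sketch shows one way such an offset could be applied to move a sensor pose to the robot's center; it is not the skill's internal code. It assumes a 2D frame with X forward, Y to the left, and heading in degrees counter-clockwise from the starting direction; Pose2D and sensor_to_robot_center are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x_mm: float         # assumed: +X is the robot's starting forward direction
    y_mm: float         # assumed: +Y is to the robot's left
    heading_deg: float  # assumed: counter-clockwise from the starting direction


def sensor_to_robot_center(sensor: Pose2D, radius_offset_mm: float) -> Pose2D:
    """Move a T265 pose from the sensor's mount point to the robot's center.

    Uses the skill's sign convention: a negative offset means the sensor sits
    in front of the center, a positive offset means behind it. The sensor is
    assumed to face forward with no left/right offset, so the whole correction
    lies along the heading direction.
    """
    heading = math.radians(sensor.heading_deg)
    # The sensor is (-offset) mm ahead of the center, so the center is
    # (+offset) mm along the heading from the sensor.
    return Pose2D(
        x_mm=sensor.x_mm + radius_offset_mm * math.cos(heading),
        y_mm=sensor.y_mm + radius_offset_mm * math.sin(heading),
        heading_deg=sensor.heading_deg,
    )
```

For example, a sensor mounted 100 mm in front of center (offset -100) reporting (0, 0) at heading 0 places the robot's center at (-100, 0), i.e. behind the sensor.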

Video Demonstration

Here's a video of the Intel RealSense T265 feeding The Navigator skill for way-point navigation.

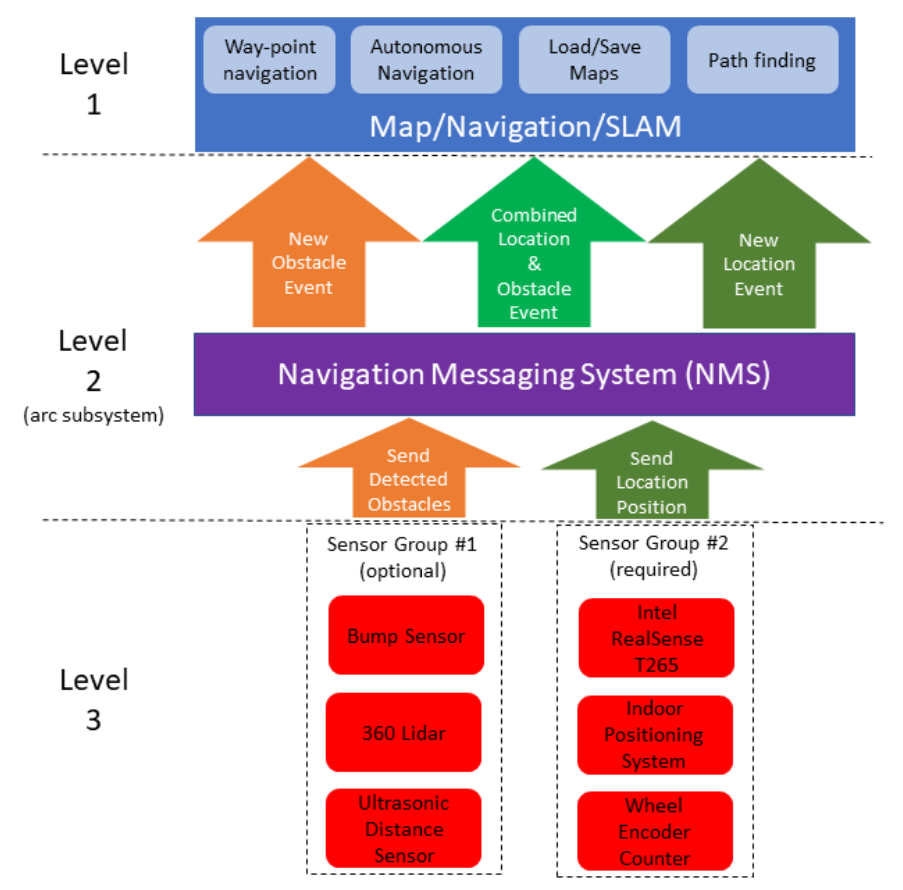

ARC Navigation Messaging System

This skill is part of the ARC Navigation Messaging System (NMS). You are encouraged to read more about the messaging system to understand the available skills HERE. This skill is in Level #3 Group #2 in the diagram below and contributes telemetry positioning to the Cartesian positioning channel of the NMS. Combine this skill with Level #3 Group #1 skills for obstacle avoidance. For Level #1, The Navigator works well.

Environments

The T265 works both indoors and outdoors. However, bright direct light (sunlight) and darkness will affect performance. Much like our eyes, the camera is susceptible to glare in bright light and to a lack of detail in the dark. Because the camera's visual data is combined with the IMU, the camera must have reliable visible light. Without the camera being able to detect the environment, the algorithm will rely more on the IMU and will experience drift, which greatly reduces the sensor's accuracy.
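Why does losing visual features cause drift? As a simplified illustration: if position came from the IMU alone, a small constant accelerometer bias b, integrated twice, grows into a position error of roughly 0.5 * b * t^2. The sketch below assumes a bias of 0.01 m/s^2 and a 200 Hz update rate, both arbitrary example values; it is not how the T265 fuses its sensors internally.

```python
def imu_only_drift(bias_mps2: float, duration_s: float, dt_s: float = 0.005) -> float:
    """Position error (m) from double-integrating a constant accelerometer bias.

    Simple Euler integration starting at rest; illustrates drift only and is
    not a model of the T265's visual-inertial fusion.
    """
    velocity = 0.0  # m/s, error accumulated in the velocity estimate
    position = 0.0  # m, error accumulated in the position estimate
    for _ in range(round(duration_s / dt_s)):
        velocity += bias_mps2 * dt_s
        position += velocity * dt_s
    return position


# Assumed example bias of 0.01 m/s^2 with no visual correction.
for seconds in (5, 10, 20, 40):
    print(f"{seconds:>3} s -> {imu_only_drift(0.01, seconds):.2f} m")
```

With those values the error is about 0.5 m after 10 s and about 2 m after 20 s: doubling the time roughly quadruples the drift, which is why the camera's visual features are needed to keep correcting the IMU.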

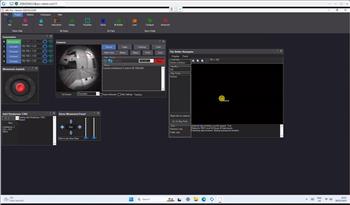

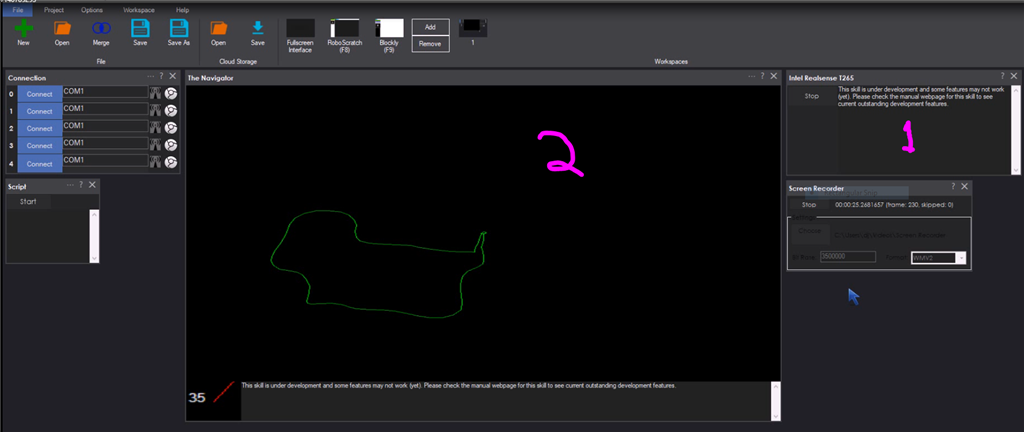

Screenshot

Here is a screenshot of this skill combined with The Navigator in ARC while navigating through a room between two way-points.

Starting Location

The T265 does not include a GPS or compass, or any ability to recognize where it is when initialized. This means your robot has to start from a known location and heading to reuse saved maps. Mark the spot on the ground where the robot starts with masking tape.

How To Use This

Connect your Intel RealSense T265 camera to the computer's USB port

Load ARC (version must be >= 2020.12.05.00)

Add this skill to your project

Now you'll need a navigation skill. Add The Navigator to your project

Press START on the Intel RealSense T265 skill, and it will begin mapping your robot's position

How Does It Work?

Well, magic! Actually, the camera is quite interesting: it breaks the world down into a point cloud of visual features and remembers them so it can re-align itself on its internal map. The processing runs on what Intel calls a VPU (Vision Processing Unit). Here's a video of what the camera sees.

Related Hack Events

A Little Of This, A Little Of That

DJ's K8 Intel Realsense & Navigation

Related Questions

T265 Realsence Viewer.Exe On An Upboard

Navigator With Mecanum (Omni) Wheel Robot Platforms

Questions Regarding Navigation

In The Better Navigator No Movement From The T265


Hardware Info


1) The two fisheye cameras: if you load up the Intel tools, you get the view from the two cameras. It would be nice if we could use them.

2) Having to line up the robot is not really practical in the real world. If the robot does not know where it is, there should be a way to auto-align to a known way-point by some method, i.e., look for a glyph and go "OK, I know where I am now", and then choose a known path from that way-point to the new destination.

You can't use the T265 cameras, as they are only for VSLAM. The charging station is the starting point for my robot.

This is what the fisheye view looks like using the Intel SDK. What would you want to do with that?

My advice is to have the robot search for a glyph and align itself, then start the Intel T265 tracking. There's no way to modify the tracking data. The data returned by the T265 is the data from the SDK made by Intel. Aligning the robot in the real world is quite practical, because that's how it's being used: the robots start from a docking station and begin navigating from there.

There's also a huge bug in the Intel SDK where you can't start, stop, and then restart the driver. ptp made good points about working with Intel being difficult because they abandon projects easily, and he's accurate. What we have from the T265 is about as good as it'll get.

The one thing I can sort of think of is applying an offset to the T265 coordinates to "re-align" it to the home position, or, I guess, to any position that you specify. But you'd have to be REALLY, REALLY accurate with whatever offset you provide. I can make a ControlCommand that allows you to specify the offset, which essentially would be the "new position". Then, if your glyph is at 100cm x 100cm @ 90 degrees heading from home, you can specify that this is where the robot is, and it would re-align itself to that position. Now, remember that the degrees also matter to the T265. If the degrees are off, then the T265 will be off.
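The offset idea described above amounts to a 2D rigid transform. This Python sketch, assuming planar (x, y, heading) poses in centimeters and degrees, computes a correction from one moment when the robot is known to be on the glyph and applies it to later T265 readings. make_realigner and Pose2D are hypothetical names; this is not an existing ControlCommand.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x_cm: float
    y_cm: float
    heading_deg: float  # assumed: counter-clockwise, degrees


def make_realigner(reported_at_anchor: Pose2D, true_anchor: Pose2D):
    """Return a function mapping raw T265 poses into the re-aligned frame.

    reported_at_anchor: what the T265 said while the robot sat on the known spot.
    true_anchor: where that spot really is (e.g. the glyph position).
    """
    delta_deg = true_anchor.heading_deg - reported_at_anchor.heading_deg
    delta = math.radians(delta_deg)
    cos_d, sin_d = math.cos(delta), math.sin(delta)

    def realign(raw: Pose2D) -> Pose2D:
        # Express the raw pose relative to where the T265 thought the anchor was
        dx = raw.x_cm - reported_at_anchor.x_cm
        dy = raw.y_cm - reported_at_anchor.y_cm
        # Rotate by the heading correction, then translate onto the true anchor
        return Pose2D(
            x_cm=true_anchor.x_cm + dx * cos_d - dy * sin_d,
            y_cm=true_anchor.y_cm + dx * sin_d + dy * cos_d,
            heading_deg=(raw.heading_deg + delta_deg) % 360.0,
        )

    return realign


# Glyph at 100cm x 100cm @ 90 degrees; the T265 readings are hypothetical.
realign = make_realigner(Pose2D(97.0, 104.0, 88.0), Pose2D(100.0, 100.0, 90.0))
print(realign(Pose2D(97.0, 154.0, 88.0)))
```

It also shows why the degrees matter: the heading correction rotates every later position around the anchor, so a heading error of just 1 degree shifts a point 500 cm away by about 8.7 cm.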

This is also why I am much more fond of indoor positioning from external sensors (i.e., cameras) to know where the robot is. You'll notice that even game console motion controllers (e.g., Wii, Sony PlayStation) use a camera for tracking along with inertial sensors. This is because, unlike for a human or animal, having a robot know its position is somewhat of a philosophical discussion.

Stereo camera access was meant to triangulate the glyph, if that makes sense.

The start, stop, and restart issue rarely happens to me. A camera reset button and some message output would be nice to have in the T265 plugin.

@nink, did you watch the video? That camera video feed will never detect a glyph or anything else. Their SDK does not provide a usable image. Also, the fisheye angle would not work with a glyph, so that's out.

@proteusy, what do you mean by a camera reset button? The issue with the Intel SDK is that when you stop the T265, it cannot be restarted without restarting ARC. It is a bug in the Intel SDK, not ARC's robot skill. I don't have control over the T265 driver and its hardware problems, as it's a product made by Intel. We bear the brunt of their bugs.

I know what you meant. The reset I was talking about is the factory reset to erase the SLAM data from the camera.

I couldn't find anything like that in the SDK either. It's quite incomplete, as ptp predicted for an Intel product. We got what we got, I guess.