T265 VSLAM for ARC: SLAM-based mapping and precise way-point navigation, low-power tracking, and NMS telemetry.

How to add the Intel Realsense T265 robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Navigation category tab.

- Press the Intel Realsense T265 icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Intel Realsense T265 robot skill.

How to use the Intel Realsense T265 robot skill

With its small form factor and low power consumption, the Intel RealSense Tracking Camera T265 is designed to give your robot reliable tracking performance. This user-friendly ARC robot skill provides an easy way to use the T265 for way-point navigation.

The T265 combined with this robot skill gives your robot a SLAM (Simultaneous Localization and Mapping) solution. It allows your robot to construct a map of an unknown environment while simultaneously keeping track of its own location within that environment. Before the days of GPS, sailors would navigate by the stars, using their movements and positions to find their way across oceans. VSLAM uses a combination of cameras and Inertial Measurement Units (IMU) to navigate in a similar way, using visual features in the environment to track its way around unknown spaces with accuracy. All of these complicated features are taken care of for you in this ARC robot skill.

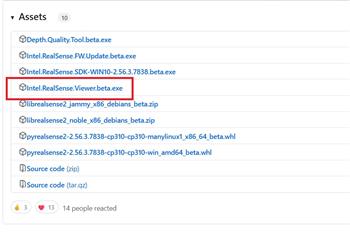

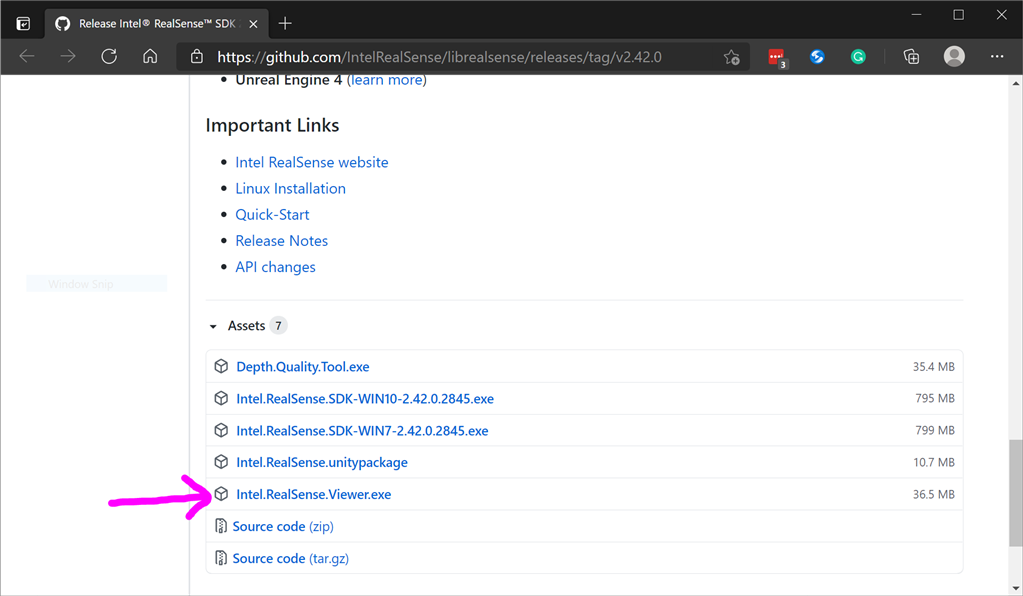

Update Firmware

The device sensor may require a firmware update.

Visit the RealSense GitHub releases page, scroll to the bottom of the page, and download Intel.Realsense.Viewer.exe from here: https://github.com/IntelRealSense/librealsense/releases/latest

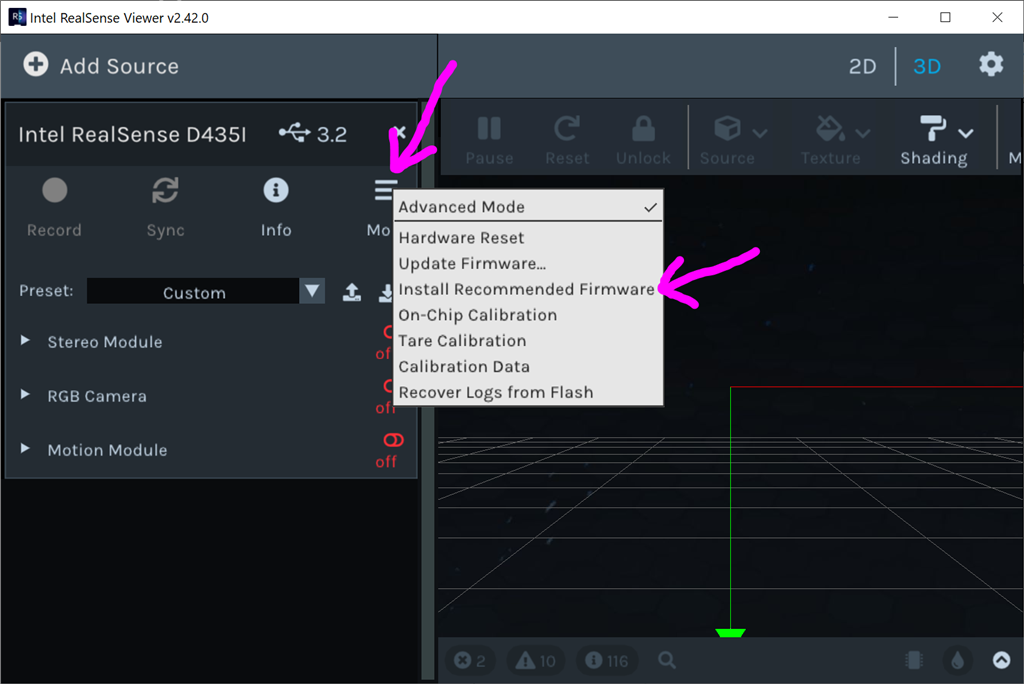

Click the hamburger settings icon and select Install Recommended Firmware.

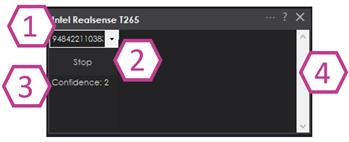

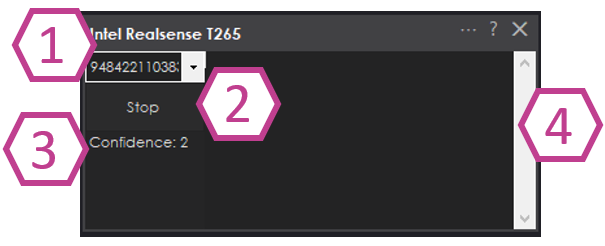

Robot Skill Window

The skill has a minimal interface because it pushes data into the NMS (Navigation Messaging System) and is generally used by other robot skills (such as The Navigator).

Drop-down to select Realsense device by the serial number. This is useful if there are multiple devices on one PC.

START/STOP the Intel T265 connection.

The confidence of the tracking status between 0 (low) and 3 (highest). In a brightly lit room with many points of interest (not just white walls), the tracking status will be high. Tracking will be low if the room does not have enough light and/or detail for the sensor to track.

Log text display for errors and statuses.
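A script that consumes the tracking status could pause navigation when confidence stays low. The following is a minimal sketch, not part of the robot skill: the function name, the threshold of 2, and the sample count are hypothetical, and how the confidence value reaches your script is left open.

```python
def make_tracking_gate(min_confidence=2, max_low_samples=5):
    """Return an update function that reports whether the pose should be trusted.

    Hypothetical helper: min_confidence and max_low_samples are illustrative
    values, not settings of the T265 robot skill.
    """
    low_count = 0

    def update(confidence):
        nonlocal low_count
        if confidence >= min_confidence:
            low_count = 0      # tracking recovered, reset the counter
        else:
            low_count += 1     # one more consecutive low-confidence sample
        # Trust the pose until too many low samples arrive in a row.
        return low_count < max_low_samples

    return update
```

Requiring several consecutive low samples avoids stopping the robot for a single momentary dip, for example when the camera briefly faces a blank wall.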

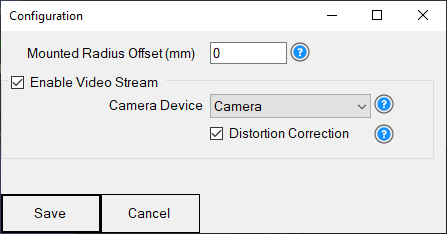

Config Menu

1) Mounted Radius Offset (mm) is the distance in mm of the T265 from the center of the robot. A negative number is toward the front of the robot, and a positive number is toward the rear. The sensor must be facing 0 degrees toward the front of the robot. The sensor must not be offset to the left or right of the robot.

2) Enable Video Stream will send the fisheye b&w video from the T265 to the selected camera device. The selected camera device robot skill must have Custom specified as the input device. Also, the camera device will need to be started to view the video.

3) Distortion Correction will use a real-time algorithm to correct the fisheye lens, which isn't always needed and is very CPU intensive.
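To illustrate what the Mounted Radius Offset compensates for, here is a minimal sketch of shifting a sensor position back to the robot's center. It is not the skill's actual implementation. It assumes a heading measured counterclockwise from the +x axis and uses the sign convention described above (negative means the sensor sits toward the front); the function and parameter names are hypothetical.

```python
import math


def robot_center_from_sensor(sensor_x_mm, sensor_y_mm, heading_deg, offset_mm):
    """Estimate the robot's center from the sensor position.

    Assumptions (not taken from the skill): heading_deg is measured
    counterclockwise from the +x axis, and offset_mm follows the config
    menu convention, negative = sensor in front of center.
    """
    heading = math.radians(heading_deg)
    forward_x, forward_y = math.cos(heading), math.sin(heading)
    # A sensor mounted in front (negative offset) sits at
    # center - offset * forward, so center = sensor + offset * forward.
    center_x = sensor_x_mm + offset_mm * forward_x
    center_y = sensor_y_mm + offset_mm * forward_y
    return center_x, center_y
```

For example, with the sensor 100 mm ahead of center (offset -100) and the robot facing along +x, a sensor reading of (100, 0) corresponds to a robot center at the origin.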

Video Demonstration

Here's a video of the Intel RealSense T265 feeding The Navigator skill for way-point navigation.

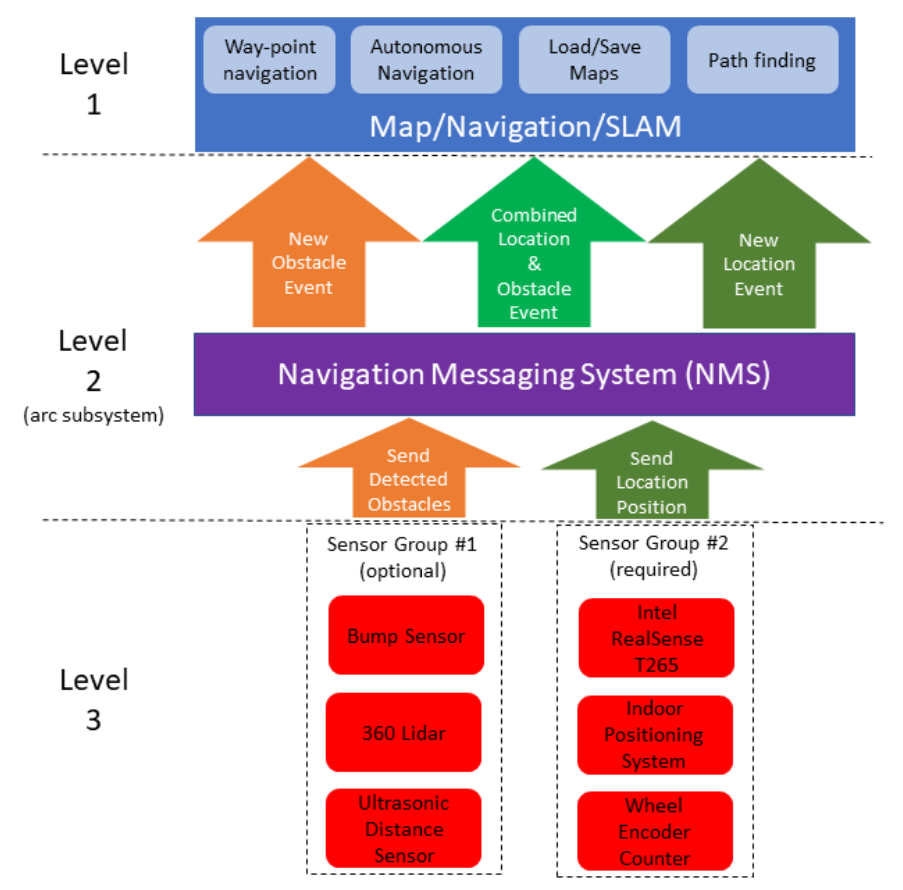

ARC Navigation Messaging System

This skill is part of the ARC navigation messaging system (NMS). You are encouraged to read the messaging system documentation to understand the available skills. This skill is in level #3 group #2 in the diagram below. It contributes telemetry positioning to the cartesian positioning channel of the NMS. Combine this skill with Level #3 Group #1 skills for obstacle avoidance. For Level #1, The Navigator works well.

Environments

The T265 will work both indoors and outdoors. However, bright direct light (sunlight) and darkness will affect performance. Much like our eyes, the camera is susceptible to glare and to a lack of resolution in the dark. Because the camera's visual data is combined with the IMU, the camera must have reliable visible light. If the camera cannot detect the environment, the algorithm will be biased toward the IMU and will experience drift, which greatly reduces the sensor's accuracy.
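The drift described above grows quickly because position from an IMU alone comes from integrating acceleration twice, so even a small constant accelerometer bias produces an error that grows with the square of time. The sketch below illustrates that general effect only. The bias value is made up for illustration and is not a T265 specification.

```python
def drift_from_bias(bias_m_s2, duration_s, dt=0.01):
    """Integrate a constant accelerometer bias twice and return the position error in meters.

    Illustrative only: a real tracker fuses visual features to cancel this drift.
    """
    velocity = 0.0
    position = 0.0
    steps = int(round(duration_s / dt))
    for _ in range(steps):
        velocity += bias_m_s2 * dt   # first integration: bias becomes velocity error
        position += velocity * dt    # second integration: velocity error becomes position error
    return position


# A hypothetical bias of 0.01 m/s^2 over 10 s gives roughly
# 0.5 * bias * t^2 = 0.5 m of position error.
error_m = drift_from_bias(0.01, 10.0)
```

Doubling the time without visual corrections roughly quadruples the error, which is why reliable lighting and visible detail matter so much.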

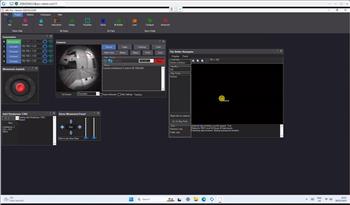

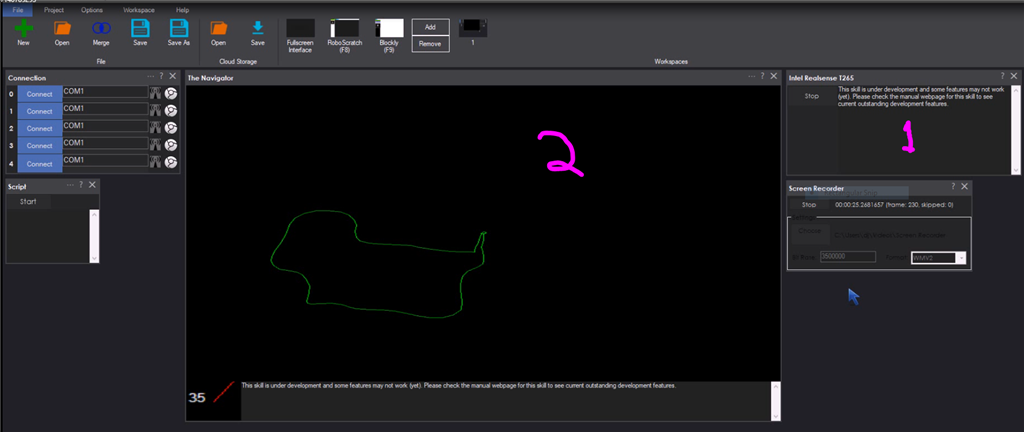

Screenshot

Here is a screenshot of this skill combined with The Navigator in ARC while navigating through a room between two way points.

Starting Location

The T265 does not include a GPS/Compass or any ability to recognize where it is when initialized. This means your robot will have to initialize from a known location and direction to reuse saved maps. Mark the robot's starting spot on the ground with masking tape.

How To Use This

Connect your Intel RealSense T265 camera to the computer's USB port

Load ARC (version must be >= 2020.12.05.00)

Add this skill to your project

Now we'll need a Navigation skill. Add The Navigator to your project

Press START on the Intel RealSense skill and data will begin mapping your robot's position

How Does It Work?

Well, magic! Actually, the camera is quite interesting: it breaks the world down into a point cloud of features. It remembers the visual features so it can re-align itself on the internal map. It uses what Intel calls a VPU (Vision Processing Unit). Here's a video of what the camera sees.

Related Hack Events

A Little Of This, A Little Of That

DJ's K8 Intel Realsense & Navigation

Related Questions

T265 Realsence Viewer.Exe On An Upboard

Navigator With Mecanum (Omni) Wheel Robot Platforms

Questions Regarding Navigation

In The Better Navigator No Movement From The T265

Hardware Info

@DJ, I found this?

rs2::config cfg; rs2::pipeline pipe; cfg.resolve(pipe).get_device().hardware_reset();

https://github.com/IntelRealSense/librealsense/issues/4113

FYI here is an example of the T265 fisheye camera using Fiducial (essentially a glyph) to detect location. https://github.com/IntelRealSense/librealsense/tree/master/examples/pose-apriltag

Neither of those works with the C# .NET wrapper. Maybe one day I can look into converting the C++ to C#. That's just a lot of work for right now. I'll add it to the list.

Added config option to send fish eye data to the specified camera device

*Note: requires ARC 2021.02.08.00 or greater

Works great DJ, thanks. Now to see if we can do some glyph detection.

Nice work! I noticed when I was reading the Boston Dynamics Spot documentation on GraphNav that it uses a similar stereo fisheye camera and IMU (although it has 5 of them: two front, one on each side, and one rear). It aligns to a fiducial (up to 4m away) and then recalibrates its position. The T265 works great for our wheeled robots running around the living room, but as soon as you get to larger legged robots moving long distances, they drift as they move further away from the start location. To recalibrate, a fiducial of known size is required so the robot can triangulate its new position.

This seems like a really primitive way to do this, but environments change (a pallet moved in a warehouse, etc.), so you need something guaranteed to lock on to so you can move along the next edge to the next way point. We need a better solution than this in the future to deal with drift, the need to recalibrate, and the need to enter into a known path. I guess ML is the long-term answer, but in the short term the world will be filled with fiducials.

About 11 min mark onwards.

It’s interesting that with so much funding their solution was to spend more money by adding more sensors - because that’s how large the problem is to solve.

Put it this way: the problem of a robot knowing where it is in the world is so big that Boston Dynamics merely threw a bunch of redundant VSLAM sensors at it LOL. And most likely a bias-shifting complementary filter fusing the sensor data. The sensor that starts to drift first would begin getting a lower weight in the fusing calculation.
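The idea of giving a drifting sensor a lower weight can be sketched as follows. This is only an illustration of weight shifting, not a true complementary filter and not anything known about Boston Dynamics' implementation. It assumes each sensor reports the same one-axis position in mm, and the function name and `scale_mm` parameter are hypothetical.

```python
from statistics import median


def fuse_estimates(estimates_mm, scale_mm=50.0):
    """Fuse redundant position estimates, down-weighting the ones that disagree.

    Each estimate's weight shrinks as it moves away from the median of all
    estimates, so a sensor that starts drifting contributes less.
    """
    center = median(estimates_mm)
    weights = [1.0 / (1.0 + ((e - center) / scale_mm) ** 2) for e in estimates_mm]
    total = sum(weights)
    return sum(w * e for w, e in zip(weights, estimates_mm)) / total


# Three sensors agree near 100 mm and one has drifted to 400 mm.
fused = fuse_estimates([100.0, 102.0, 98.0, 400.0])
```

With these inputs the fused value stays close to 100 mm, while a plain average would be pulled to 175 mm by the drifting sensor.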

Anyway - the much larger conversation on this topic is not about sensors, but AGI. Artificial general intelligence.

Even if you close your eyes and I move you to a new location, you’ll have no idea where you are. The robot needs some level of self in relation to the world to create an adaptive internal model, like we do.

I have spent a bit of time playing with this. It is really good! I like the way it follows the path you set to get around obstacles. I do get a bit of drift the longer it is away from home.

Question: is there a way to tell the robot it is currently in a certain location when it drifts? I start in the docking station and turn Realsense on. Then I tell the Roomba to move back about 1 foot from the docking station and then give waypoint voice commands. It knows where it is because I started Realsense when it was docked. Now it runs around my house but starts to drift. I guess I could send it back to a point in front of the docking station, dock using ControlCommand Seek Dock on the Roomba, stop Realsense, start it again, back up one foot again, etc., but it would be good if there was a way to tell Realsense: I am here, please reset your tracking location to here now.

We talked about glyphs as a way to fix this with the camera. Maybe if we had 2 cameras working we could triangulate and get the position. I thought about some lines on the ground in various locations that the robot could follow, and when he crosses them he starts to follow and finds a dash code etc. to recalibrate using a sensor like this https://cnclablb.com/adjustable-line-tracking-sensor-module.html But I think what I need is a way to send a command to Navigator to say HELLO I AM HERE. Maybe something like a ControlCommand("The Navigator", "ResetWayPoint", "Kitchen");
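The "I am here" idea amounts to computing a correction when the robot is at a known spot and applying it to every later pose. The sketch below shows only that math. It does not call any ARC or RealSense API, the "ResetWayPoint" command above is a proposal and not a confirmed feature, and the function names and the (x mm, y mm, heading degrees) pose format with headings measured counterclockwise are assumptions.

```python
import math


def make_pose_correction(raw_pose, known_pose):
    """Build a function that maps drifted poses onto the known reference frame.

    raw_pose:   (x_mm, y_mm, heading_deg) reported by tracking at the landmark.
    known_pose: (x_mm, y_mm, heading_deg) the robot is actually at.
    Hypothetical helper, independent of ARC and the T265 skill.
    """
    raw_x, raw_y, raw_h = raw_pose
    known_x, known_y, known_h = known_pose
    delta = math.radians(known_h - raw_h)
    cos_d, sin_d = math.cos(delta), math.sin(delta)

    def correct(pose):
        x, y, h = pose
        dx, dy = x - raw_x, y - raw_y
        # Rotate the displacement from the landmark by the heading error,
        # then re-anchor it at the landmark's known position.
        return (known_x + cos_d * dx - sin_d * dy,
                known_y + sin_d * dx + cos_d * dy,
                (h + known_h - raw_h) % 360.0)

    return correct


# The robot is parked in front of the dock at a known (1000, 0, 0),
# but tracking has drifted to (1050, -30, 4).
correct = make_pose_correction((1050.0, -30.0, 4.0), (1000.0, 0.0, 0.0))
x, y, heading = correct((1050.0, -30.0, 4.0))
```

Here the corrected pose at the landmark equals the known pose, and later poses are shifted and rotated by the same amount until the next reset.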