Hitachi-LG 360° LiDAR driver that feeds scans into ARC's Navigation Messaging System for obstacle detection, SLAM mapping, and navigation integration

How to add the Hitachi-LG LDS Lidar robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Navigation category tab.

- Press the Hitachi-LG LDS Lidar icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Hitachi-LG LDS Lidar robot skill.

How to use the Hitachi-LG LDS Lidar robot skill

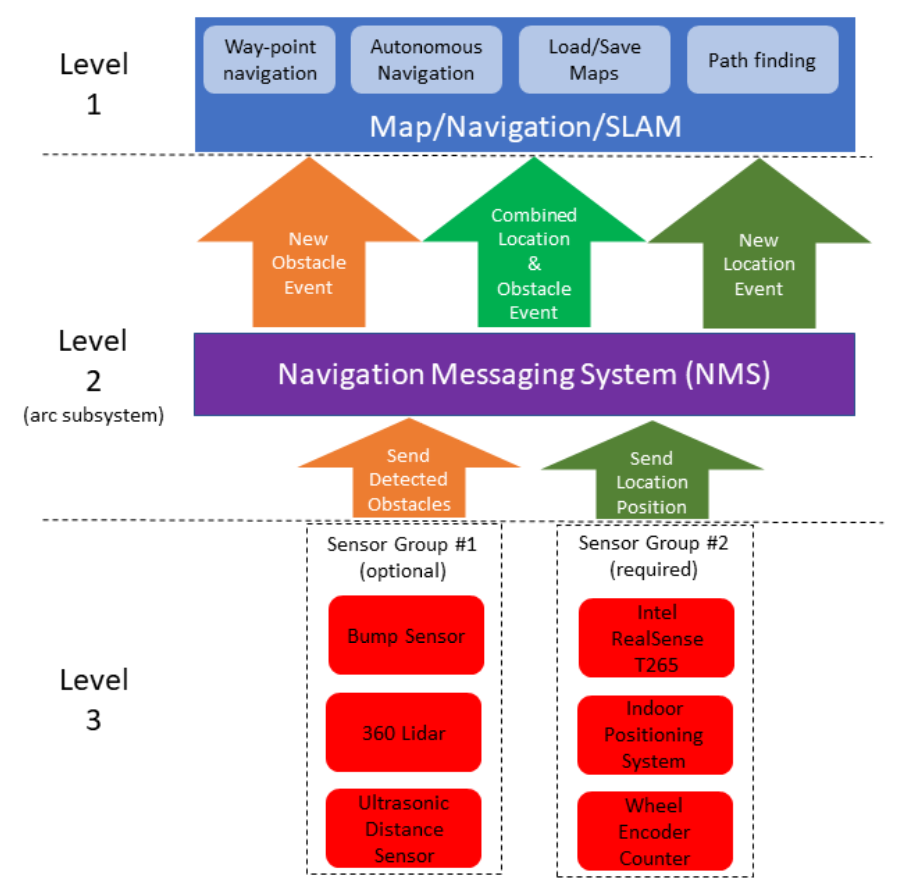

NMS Driver for the Hitachi-LG LDS 360-degree lidar. This robot skill connects to the Lidar and pushes the data into ARC's NMS (navigation messaging system) to be used with level 1 navigation viewers. To understand how this skill can be used, reference the NMS manual page.

Configuration

1) BaudRate value: The baud rate used to communicate with the USB serial adapter or Arduino. The lidar's default baud rate is 230400. Some USB serial converters use a different baud rate on the PC's USB side, but the baud rate between the lidar and the USB adapter must be 230400.

2) Offset Degrees value: Corrects for the angle at which the lidar is mounted.

3) Advanced communication parsing checkbox: Includes debug information in the log window. Enable this only if Synthiam asks you to while debugging a communication issue, because it uses a lot of resources.

4) Set variables with location data checkbox: Check this option if you're not using the NMS. When it is checked, global variables are created for the scan data.

5) Fake NMS Pose hint event checkbox: The Better Navigator can use the Hector SLAM pose hint, which does not require a pose sensor. Enable this option if you have The Better Navigator configured to use Hector as the pose hint. It sends a "fake" pose hint of 0,0 to the NMS so that the pose event runs after every lidar scan.

6) RTS Enabled checkbox: RTS (Ready To Send) is an optional signal line for UART communication. Some USB adapters, specifically some Arduinos, may require this to be enabled. If your USB serial adapter is not responding, you may need to enable or disable this option.

7) DTR Enabled checkbox: DTR (Data Terminal Ready) is an optional signal line for UART communication. Some USB adapters, specifically some Arduinos, may require this to be enabled. If your USB serial adapter is not responding, you may need to enable or disable this option.
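To illustrate what an Offset Degrees correction amounts to, here is a minimal sketch in plain C++. It is not the skill's actual implementation. The function names are hypothetical, and it assumes the offset is simply added to each raw reading's angle and the result wrapped back into the 0 to 360 range. The skill may use the opposite sign convention.

```cpp
#include <cmath>

// Hypothetical helper (not part of ARC): wrap any angle into [0, 360).
double normalizeDegrees(double degrees) {
    double wrapped = std::fmod(degrees, 360.0);
    if (wrapped < 0.0) {
        wrapped += 360.0;  // fmod keeps the sign of its first argument
    }
    return wrapped;
}

// Assumption: the mounting offset is added to the raw angle of each reading.
double applyMountingOffset(double rawDegrees, double offsetDegrees) {
    return normalizeDegrees(rawDegrees + offsetDegrees);
}
```

For example, under these assumptions a lidar mounted 20 degrees off would have a raw reading at 350 degrees reported at 10 degrees.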

NMS (Navigation Messaging System)

This skill operates in Level #3 Group #1 and publishes obstacle detection data to the NMS. While some SLAM systems will attempt to determine the robot's Cartesian position without telemetry, it is best to combine this skill with a Group #2 sensor. A recommended navigation robot skill is The Better Navigator, which uses the NMS data.

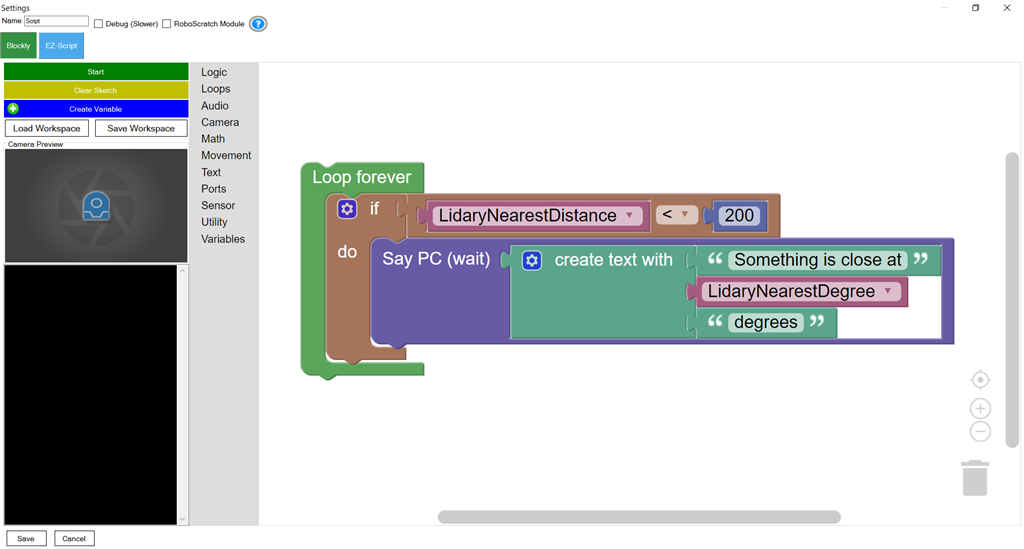

Program the LIDAR in Blockly

Like all Synthiam controls, this skill can be programmed with Blockly. In the example below, the robot speaks when an object has come close to it. It also speaks the angle, in degrees, at which the object was detected. To see this program in action, click HERE.

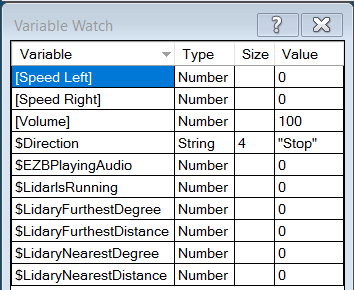
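The near-object logic described above can also be sketched in plain C++. This is an illustration only and does not use the skill's variables or any ARC API. The data layout is an assumption: one distance per degree, with 0 meaning no return. The names `NearestReading`, `findNearest`, and `isObjectClose` are hypothetical, and the distance units are whatever the scan source reports.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical result type: the closest valid reading in one scan.
struct NearestReading {
    bool found;           // false when the scan held no valid readings
    std::size_t degrees;  // index of the closest reading (assumed 1 per degree)
    int distance;         // distance of the closest reading
};

// Assumption: scan[i] is the distance at i degrees, and 0 means "no return".
NearestReading findNearest(const std::vector<int>& scan) {
    NearestReading nearest = {false, 0, 0};
    for (std::size_t deg = 0; deg < scan.size(); ++deg) {
        int d = scan[deg];
        if (d <= 0) {
            continue;  // skip invalid readings
        }
        if (!nearest.found || d < nearest.distance) {
            nearest.found = true;
            nearest.degrees = deg;
            nearest.distance = d;
        }
    }
    return nearest;
}

// True when the closest valid reading is nearer than the threshold.
bool isObjectClose(const std::vector<int>& scan, int threshold) {
    NearestReading nearest = findNearest(scan);
    return nearest.found && nearest.distance < threshold;
}
```

A program could call `isObjectClose` after each scan and, when it returns true, speak the `degrees` value from `findNearest`.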

Variables

You can view the variables this skill creates in the configuration menu of this control. The variables hold the most recent values of the lidar's minimum/maximum distance, degrees, and running status. Here is a list showing the variables using the Variable Watcher robot skill.

Arduino Sketch

There are two ways to connect to this lidar. You can use a standard USB<->UART converter, such as those on Amazon or eBay. Or you can use a 32u4-based Arduino board (e.g., Micro) to make your own USB<->UART converter. A 32u4-based board is required because it has native USB, so it can appear to the PC as a USB serial device while its hardware UART (Serial1) stays free for the lidar. Here is a simple sketch that can be used on a 32u4-based board:

    void setup() {
      Serial1.begin(230400); // Hardware serial port on pins 0 and 1 (to the lidar)
      Serial.begin(230400);  // USB serial port (to the PC)
    }

    void loop() {
      // Forward bytes from the PC to the lidar
      if (Serial.available())
        Serial1.write(Serial.read());

      // Forward bytes from the lidar to the PC
      if (Serial1.available())
        Serial.write(Serial1.read());
    }

Wiring

- GREY - Ground

- RED - +5V

- GREEN - RX (connects to TX on Arduino or USB converter)

- BROWN - TX (connects to RX on Arduino or USB converter)

Robotis USB <-> UART Converter

The Robotis version of this sensor may include their USB UART converter, which uses a CP210x chipset. The driver for Windows can be found by clicking here: CP210x_Windows_Drivers.zip

Videos

Real-time SLAM mapping

Room Mapping

Near Object Detection

Hardware Info

Source Code

Is this the same hardware? https://www.robotis.us/360-laser-distance-sensor-lds-01-lidar/

The hardware link above goes to a Hitachi-LG specs page, but there is no link to purchase the device, and searching Google I can't find it for sale anywhere under that specific name.

Alan

Yah - it's the same, but I don't think you can buy this easily. It's difficult to find, but it's the only lidar I currently have to test with. This was the lidar that we had partnered with Hitachi-LG to distribute back in the ez-robot days. Robotis uses it in their Waffle and Burger. There are a number of more cost-effective lidars at RobotShop, I think.

Since the NMS is so versatile, we can start adding more 360 degree lidars. If you guys decide on one that you think is a good fit, let me know and I'll add an NMS driver for it.

Neato has an affordable lidar ($80) ...you can get some used ones on ebay for about $40.

Guys,

I have the lidar; I'm using it in one of my bots with ROS. DJ wrote multiple times that they were working on releasing an EZ-Robot lidar, so I didn't want to create any competition between the Robotis lidar and the EZ-Robot lidar.

And I have that one also in another bot. David (Cochran Robotics) wrote a plugin, so I didn't want to create a duplicate plugin. It seems David never finished the plugin, and he left the forum, so maybe it makes sense to wrap up a plugin. I'll write a plugin for both lidars.

Hey wow PTP, DJ, we have more on lidar this year than anything last year. Even if 2020 was terrible, the start of 2021 is looking brighter already!

Wow, thanks @PTP. The Neato starts at around $36 on eBay plus about $10 shipping, so this meets the "wife doesn't need to know about it" price category. https://www.ebay.com/sch/i.html?_from=R40&_trksid=p2380057.m570.l1313&_nkw=neato+xv11+lidar&_sacat=0

Besides the Neato, you will need an Arduino Micro (Leonardo), which has a serial port, and a small H-bridge (<$10) to control the lidar RPM. I'll do a video and post the details.

PTP - Actually I'm modifying the lidar plugin to connect directly with the lidar via a USB <-> UART

I haven't done it yet - but it's what i'm working on next. Less hardware needed