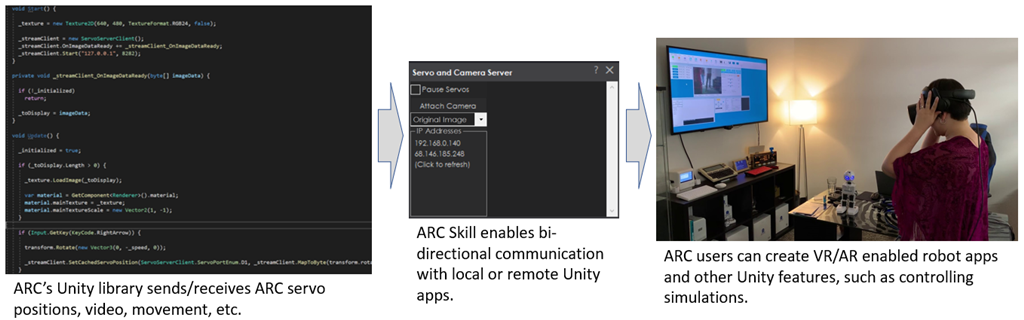

Remote camera & servo TCP server for ARC - streams video to clients and accepts servo positions from Unity/C# apps; includes example projects.

How to add the Single Camera Servo Server robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Virtual Reality category tab.

- Press the Single Camera Servo Server icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Single Camera Servo Server robot skill.

How to use the Single Camera Servo Server robot skill

This is a servo and camera video server that allows a remote client to move servos and receive the video stream from a camera device. It is especially useful for Unity apps that operate as clients to ARC, where the camera video stream can be received and servo positions can be sent. The camera must be streaming for the servo positions to be transmitted.

Demo #1: An overview of how this robot skill can integrate with a Unity scene using the Unity animation tool.

Demo #2: This example uses a block in Unity that controls an EZ-Robot JD shoulder. It is a simple example of how easy it is to control any servo in ARC. The block has gravity and a hinge, so as it swings, its angle is read and pushed into ARC to move the corresponding servo.

Demo #3: Mickey's demonstration of controlling the servos through Unity and joysticks.

How It Works

Code in the client (i.e., Unity in this case) connects to the Single Camera Servo Server skill over TCP. It streams servo positions to ARC and receives the camera view.
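To illustrate only the connection step, here is a minimal sketch, not taken from the Test App, that checks whether something is accepting TCP connections at the host and port used in the ServoCamera.cs example on this page (127.0.0.1, port 8282) before you start the client. It just opens and closes a socket; the skill's actual wire protocol is handled by the ServoServerClient class and is not reproduced here. The ServerProbe class is a hypothetical name.

```csharp
using System;
using System.Net.Sockets;
using System.Threading.Tasks;

// Hypothetical helper (not part of the Test App): reports whether a TCP server
// is accepting connections at host:port within the given timeout.
public static class ServerProbe
{
    public static bool CanConnect(string host, int port, int timeoutMs)
    {
        using (var client = new TcpClient())
        {
            try
            {
                Task connect = client.ConnectAsync(host, port);
                // Wait(int) returns false on timeout and throws if the connect attempt failed.
                return connect.Wait(timeoutMs) && client.Connected;
            }
            catch (AggregateException)
            {
                // Connection refused, host unreachable, etc.
                return false;
            }
            catch (SocketException)
            {
                return false;
            }
        }
    }
}
```

For example, `bool arcListening = ServerProbe.CanConnect("127.0.0.1", 8282, 1000);` could gate the call to `_streamClient.Start(...)` so the app can show a message instead of silently waiting when ARC is not running.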

Example Client App Source Code

Here is the source of an example test app that connects to localhost (127.0.0.1), moves a servo on port D2, and displays the camera video stream. The sample app is C# .NET source code and can be downloaded.

Download the C# .NET example source code: Test App.zip (2020/12/17)

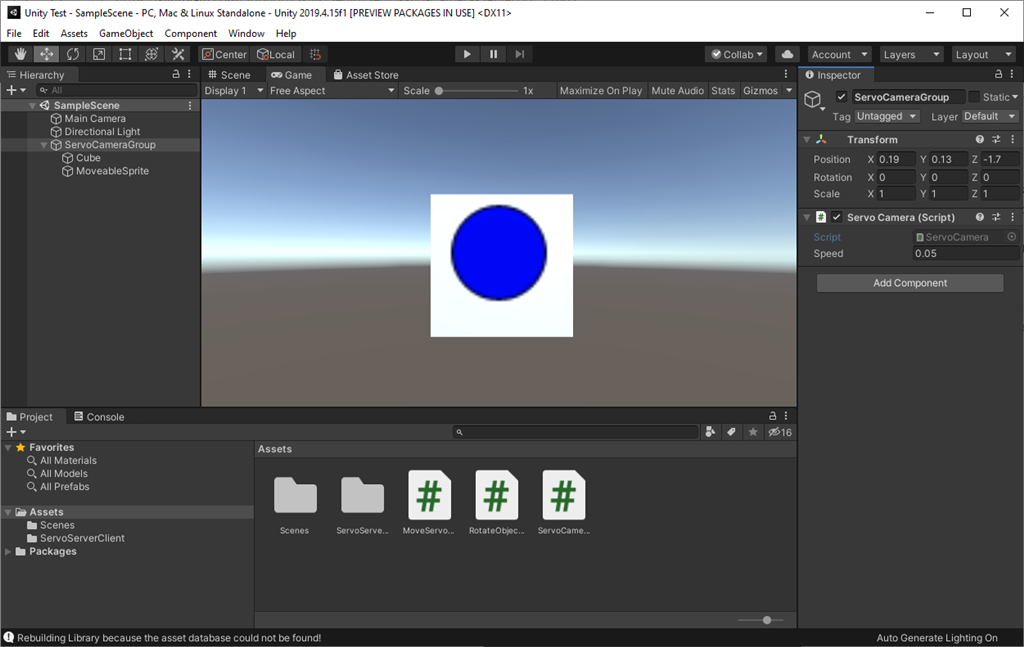

Test Unity Project

I have included a test Unity project for reference. The example rotates a cube on the screen using the arrow keys, and the camera stream is projected onto the cube as a texture. The arrow keys also move the servos connected to ports D0 and D1 relative to the cube's rotation.

You can download the project here: Unity Test.zip (2020/12/17)

Use In Unity

The stream client files in the "Test App" can be included in a Unity project to receive the video and move servos. The test app demonstrates how to move the servos using the client methods and how to display the video on Windows. To display the video in Unity, follow the steps below. The video works by loading each received image into a Texture2D, which can be applied to any material.

To use this in your Unity app, copy the Test App\ServoServerClient*.cs files into your Unity project.

Examine Test Project

The Unity project displays the ARC camera stream on a rotating cube, while a 2D sprite controls servos D0 and D1 with its X and Y position, respectively. Clicking in the scene moves the sprite, which also moves the servos.

The position, rotation, or other properties of any component within the group can be extracted and sent to ARC. If you have a 3D model of a robot, each joint's position or rotation can be sent to ARC.
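In the ServoCamera.cs script on this page, the sprite position is passed through the client's MapToByte method, whose exact scaling is defined in the Test App source and not shown here. As a hedged illustration of the same idea, the sketch below linearly maps a value from an input range you choose onto a servo position, assuming the usual 1 to 180 ARC servo range. ServoMapping, MapRangeToServo, and the -5 to 5 scene range mentioned below are hypothetical.

```csharp
using System;

// Hypothetical helper, not the client's MapToByte: linearly maps a scene value
// (such as a sprite's X position) onto a servo position.
public static class ServoMapping
{
    // servoMin/servoMax default to 1..180, assumed here as the usual ARC servo range.
    public static byte MapRangeToServo(float value, float inMin, float inMax,
                                       byte servoMin = 1, byte servoMax = 180)
    {
        if (inMax <= inMin)
            throw new ArgumentException("inMax must be greater than inMin");

        // Clamp so values outside the expected scene range stay within the servo range.
        float clamped = Math.Max(inMin, Math.Min(inMax, value));

        // Normalize to 0..1, then scale into servoMin..servoMax.
        float t = (clamped - inMin) / (inMax - inMin);
        return (byte)Math.Round(servoMin + t * (servoMax - servoMin));
    }
}
```

Assuming SetCachedServoPosition accepts the byte that MapToByte returns, a call such as `ServoMapping.MapRangeToServo(_sprite.position.x, -5f, 5f)` could replace the MapToByte call when you want explicit control over which part of the scene maps to the servo's full travel.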

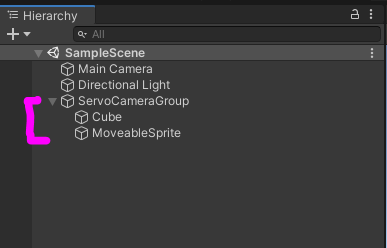

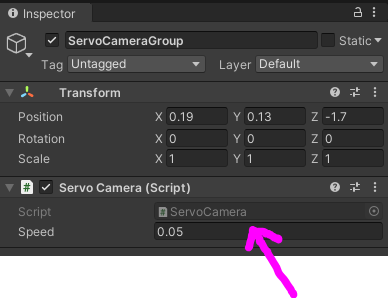

The most interesting thing to look at is the Scene object -> ServoCameraGroup. Notice it has child GameObjects. Those child GameObjects can be queried for their rotation, position, or any other property and sent to ARC as servo positions. Also, the camera image can be rendered to any material as a 2D texture.
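Rotation needs one extra step that position does not: Unity reports Euler angles (for example Transform.localEulerAngles) in the 0 to 360 range, so a joint tilted slightly backward reads as 350 rather than -10. A minimal sketch, assuming a joint that swings between -90 and +90 degrees and a servo centered at 90, wraps the angle to a signed range first and then offsets it into a servo position. The JointAngles class and its methods are hypothetical, not part of the client library.

```csharp
using System;

// Hypothetical helpers for turning a Unity joint rotation into a servo position.
public static class JointAngles
{
    // Unity reports Euler angles in 0..360; convert to the signed range (-180, 180].
    public static float WrapTo180(float degrees)
    {
        float wrapped = degrees % 360f;   // C# % keeps the sign of the dividend
        if (wrapped > 180f) wrapped -= 360f;
        else if (wrapped <= -180f) wrapped += 360f;
        return wrapped;
    }

    // Assumes the joint swings -90..+90 degrees and the servo is centered at 90.
    // The result is clamped to 1..180.
    public static byte JointAngleToServo(float eulerDegrees)
    {
        float signed = WrapTo180(eulerDegrees);
        float clamped = Math.Max(-90f, Math.Min(90f, signed));
        int position = (int)Math.Round(90f + clamped);
        return (byte)Math.Max(1, position);
    }
}
```

For a child such as the cube, `JointAngles.JointAngleToServo(_cube.localEulerAngles.x)` would yield 80 for a reading of 350 degrees, instead of pinning the servo at its maximum.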

Look at the ServoCameraGroup to see the script. The script ServoCamera.cs is responsible for the following.

Start

- create an instance of the StreamClient object

- have the StreamClient connect to ARC at an IP address (in this case, the local machine, 127.0.0.1)

- assign the child GameObjects to local variables used in Update (this avoids searching for them every frame, which keeps the CPU happy)

- connect to the ARC server

Update

- obtain rotation, position, or other data from the children and add it to the servo position cache (in this example, the sprite position)

- send the cache of servo positions

- display the incoming image on a material as a texture

Let's look at the code for ServoCamera.cs; the comments explain how it works.
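The "add to the cache, then send" step in Update can be illustrated without Unity. The sketch below is a hypothetical stand-in for the client's SetCachedServoPosition/SendCachedServoPositions pair, not its actual implementation: each port keeps only its latest value for the frame, and Flush hands back one batch to transmit. The Port enum and ServoPositionCache class are assumptions for illustration.

```csharp
using System.Collections.Generic;

// Hypothetical port names; the real client exposes ServoServerClient.ServoPortEnum.
public enum Port { D0, D1, D2 }

// Illustrative stand-in for a "cache, then send in bulk" design; not the client's code.
public sealed class ServoPositionCache
{
    private readonly Dictionary<Port, byte> _pending = new Dictionary<Port, byte>();

    // Record the desired position; a later call for the same port overwrites the earlier one.
    public void Set(Port port, byte position)
    {
        _pending[port] = position;
    }

    // Return every pending position as one batch and empty the cache for the next frame.
    public List<KeyValuePair<Port, byte>> Flush()
    {
        var batch = new List<KeyValuePair<Port, byte>>(_pending);
        _pending.Clear();
        return batch;
    }
}
```

Keying the cache by port means that if several scripts set D0 during the same frame, only the last value goes out in this sketch, so the number of positions sent per frame is bounded by the number of ports rather than the number of updates.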

```csharp
using EZ_Robot_Unity_DLL;
using UnityEngine;

public class ServoCamera : MonoBehaviour {

    ServoServerClient _streamClient;
    bool _initialized = false;
    Texture2D _texture;
    volatile byte[] _toDisplay = new byte[] { };
    Transform _cube;
    Transform _sprite;

    /// <summary>
    /// This script is attached to a parent GameObject that has children.
    /// From here we can access the children's rotation or position to set servo positions.
    /// In Update, we read the children and send their data to ARC.
    /// </summary>
    void Start() {

        // This is the texture that will hold the camera image from ARC
        // We apply this texture to a cube
        _texture = new Texture2D(640, 480, TextureFormat.RGB24, false);

        // Assign the children to local variables so we don't have to search for them on each frame (makes the CPU happy)
        _cube = gameObject.transform.Find("Cube");
        _sprite = gameObject.transform.Find("MoveableSprite");

        // Create a client that will connect to ARC at the specified IP address
        // Once connected, any available video data from the ARC camera will raise the OnImageDataReady event
        _streamClient = new ServoServerClient();
        _streamClient.OnImageDataReady += _streamClient_OnImageDataReady;
        _streamClient.Start("127.0.0.1", 8282);
    }

    /// <summary>
    /// This event is raised for every camera image received from the connected ARC server.
    /// We assign the image data to a volatile array that Update uses to refresh the texture with the latest image.
    /// </summary>
    private void _streamClient_OnImageDataReady(byte[] imageData) {

        if (!_initialized)
            return;

        _toDisplay = imageData;
    }

    void OnDisable() {

        // Guard against the object being disabled before Start has created the client
        if (_streamClient != null)
            _streamClient.Stop();
    }

    /// <summary>
    /// Unity runs this on every render frame.
    /// Pressing Escape quits the application.
    /// We map the sprite's X and Y position values to the D0 and D1 servo positions in ARC, respectively.
    /// </summary>
    void Update() {

        _initialized = true;

        if (Input.GetKey(KeyCode.Escape))
            Application.Quit();

        // Add the servo positions to the cache based on the location of the sprite
        // We set the positions in the cache in this loop rather than sending each position individually
        // That way we can send a bulk change, which uses much less bandwidth
        // So, add your servo positions to the cache and then send them all afterward
        _streamClient.SetCachedServoPosition(ServoServerClient.ServoPortEnum.D0, _streamClient.MapToByte(_sprite.position.x));
        _streamClient.SetCachedServoPosition(ServoServerClient.ServoPortEnum.D1, _streamClient.MapToByte(_sprite.position.y));

        // Send all the servo positions that have been cached
        _streamClient.SendCachedServoPositions();

        // Display the latest camera image by loading it into the texture and applying it to the cube's material
        if (_toDisplay.Length > 0) {

            _texture.LoadImage(_toDisplay);

            var material = _cube.GetComponent<Renderer>().material;
            material.mainTexture = _texture;
            material.mainTextureScale = new Vector2(1, -1);
        }
    }
}
```
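In ServoCamera.cs the image event replaces _toDisplay and Update loads whatever is there, so the same image is decoded again on every frame until a new one arrives. The event is presumably raised on the client's network thread (which is why the original marks the buffer volatile). One possible refinement, sketched here as an assumption rather than how the Test App works, is to swap the latest frame out atomically with Interlocked.Exchange so each received image is loaded into the texture at most once. LatestFrameBuffer is a hypothetical name.

```csharp
using System.Threading;

// Hypothetical helper: hands the newest camera frame from the network thread
// to Unity's main thread so each frame is consumed at most once.
public sealed class LatestFrameBuffer
{
    private byte[] _latest;

    // Network thread: store the newest frame; an unread older frame is dropped.
    public void Publish(byte[] frame)
    {
        Interlocked.Exchange(ref _latest, frame);
    }

    // Main thread (Update): take the newest frame and clear the slot,
    // returning null when nothing new has arrived since the last call.
    public byte[] TakeLatest()
    {
        return Interlocked.Exchange(ref _latest, null);
    }
}
```

With a hypothetical `_frames` field of this type, the event handler would call `_frames.Publish(imageData)`, and Update would use `var frame = _frames.TakeLatest(); if (frame != null) _texture.LoadImage(frame);` in place of the `_toDisplay.Length > 0` check. Dropping stale frames is deliberate: for a live view, only the newest image matters.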

That gets used a lot in universities, as it's cheaper to program a virtual robot than a real one. It was popular a number of years ago but slowly faded due to the limitations of physics engines. The trouble is that the game market is larger than the robot/physics market, so game engines simulate physics but don't use anything close to reality. It turns out that a semi-complicated VR robot doesn't work when connected to a real one.

But for some things it does work, like creating an animation. For navigating, or using real physics for walking or interacting with the world, it doesn't.

The best use case for AAA engines is what Will is using it for: creating animations with a digital twin.

This, of course, is the software version of using a real twin, as demonstrated here:

I loved your puppet hack, but yeah, that is physical to physical. But what if you took a virtual JD and ran him through something like Unity Simulation for robotics to complete a task? https://github.com/Unity-Technologies/Unity-Simulation-Docs

Remember the IK work @Mickey666maus, @FXRTST, and @PTP were doing two years ago.

This is one of the best collaborative build threads I have seen on here. https://synthiam.com/Community/Questions/Vr-Options-3693/comments

@DJSures

OMG!!! This is such a cool implementation!! It never even crossed my mind!!

@fxrtst I think I actually got you wrong!

I was trying to figure out why you are planning to move away from Unity, since it offers an Asset Store where you can buy skills like a REST client, and it also natively runs lightweight IoT solutions like WebRTC or gRPC. So we have all the tools we need for robot/IoT building, and ARC is already plug and play!

So my guess for why you were switching to a AAA game engine was that you are planning to lift some pretty heavy stuff... Unreal, for example, is known for the unbelievable performance of its rendering engine!! But of course this also creates the need for massive hardware resources... so I thought it might be a bit much for a robot running an SBC!

But it's not the first time you've amazed me with something I would never have imagined! So I am really curious what it will be!!

@DJ I have a fun project slated for 2021 using the digital twin idea... such a great hack to get feedback from a standard servo!

@Nink Thanks for the kudos on the concept. Team effort. I have resurrected some of those videos on my channel... and it got me thinking about some other ideas for exploring this stuff even further. I've been fascinated by getting AI to teach robots how to move, walk, and traverse. Google seems to be leading the way with this at the moment. I can see a future where you can build plug-and-play modules for robots, like a lower leg section, and the AI will calculate the loads for the upper torso and the arm length and weight, then calculate a biped walking gait!!! That is the future!

@mickey Yay! It's sometimes hard for me to type out my thoughts clearly. Glad you get it now. Unreal has an asset store as well, but I'm trying to put together as much free technology as I can, i.e., no plug-ins needed, etc. You can code with their visual programming tool called Blueprint, which is a lot like Blockly. Here is my setup for connecting to an Arduino and activating the onboard LED. Outside Blueprint, it's mostly a C++ environment for coding. Oh, and blueprints are all over the web... you can copy one and take it apart, so it's like active code.

Also, something I never realized... there is Git for Unity!!

https://unity.github.com/

The funny thing about node-based coding... it's more visual, but sadly not less complicated!!

It's funny that every package has adopted nodes in one form or another, be it animation, surface painting, or coding... I started getting familiar with them when LightWave moved over several years ago. As long as you understand the stack, it's fairly simple. But yeah, it's pretty to look at, but the complexity is still there.