Robot Skills for Synthiam ARC

Discover how Synthiam ARC Robot Skills give your robot advanced capabilities, including vision, navigation, speech recognition, hardware control, and more. Browse skill categories, watch the overview video, and learn how to add the right behaviors to your ARC project.

Robot apps are built from Robot Skill Controls. Each skill represents a specific behavior or capability for your robot, running as a process (or node). There are skills for controllers, cameras, sensors, navigation, speech recognition, and hundreds more.

Add Robot Skills to your ARC robot project from the Add Skill menu to quickly integrate powerful features without writing low-level code.

Browse Robot Skill Categories

ADC Robot Skills

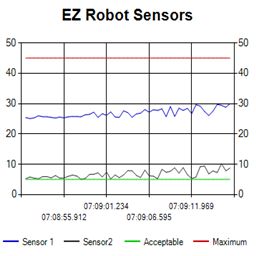

Historical ADC graph and numeric readout (0-255) for 0-3.3/5V inputs; configurable board/port, sample interval, color, and pause for EZ-B controllers.

ADC VU meter displaying 0-3.3/5V as a 0-255 linear meter; configurable board/port, sampling interval and color; pausable real-time readings.

Display ADC port voltage and 0-255 linear values (0-3.3/5V); configurable board/port, units, multiplier and sample interval.
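
The ADC skills above express each reading as a 0-255 linear value spanning the board's 0-3.3 V or 0-5 V input range. Below is a minimal Python sketch of that conversion. It assumes an 8-bit reading where 255 equals the full-scale reference voltage, and it treats the configurable multiplier as a plain scale factor. Both are assumptions for illustration and are not taken from ARC's source code.

```python
def adc_to_voltage(raw: int, vref: float = 5.0) -> float:
    """Convert an 8-bit ADC reading (0-255) to volts, assuming a linear scale."""
    if not 0 <= raw <= 255:
        raise ValueError("8-bit ADC readings must be in the range 0-255")
    return raw / 255 * vref


def scaled_reading(raw: int, vref: float = 5.0, multiplier: float = 1.0) -> float:
    """Apply a user multiplier (assumed meaning: e.g. undoing a voltage divider)."""
    return adc_to_voltage(raw, vref) * multiplier


if __name__ == "__main__":
    print(adc_to_voltage(255))                 # full scale on a 5 V board
    print(adc_to_voltage(128, vref=3.3))       # roughly mid-scale on a 3.3 V board
    print(scaled_reading(51, multiplier=3.0))  # 1.0 V behind a hypothetical 3:1 divider
```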

Placeholder ARCx development skill compatible with Windows, Linux, Raspberry Pi and macOS; provides interim functionality during the ARCx platform rollout.

Artificial Intelligence Robot Skills

Localized AIML chatbot with editable AIML files, EZ-Script support, session memory and a ControlCommand API; integrates with speech and other robot skills.

Autonomous AI for Synthiam ARC: conversational self-programming that interprets commands, generates code, and learns to improve robot tasks.

AIML2 chatbot client for ARC connecting robots to Bot Libre cloud for customizable, private chatbots and personalities.

Detects the positive-sentiment percentage of input text using cognitive ML; returns $SentimentPercentage and shows a face, graph and status for analysis.

Add Google DialogFlow NLP to ARC: conversational intents, parameter prompts, scripted responses, service-account setup and programmatic robot control.

ChatGPT conversational AI for ARC robots: configurable personality, memory, image description, script execution, speech and API integration.

Connect ARC to Pandorabots AIML2 to run AI-driven virtual personalities for human-like text or voice chat, using API keys and script hooks.

Connects ARC to Pandorabots for AI chat: sends text and receives replies (executes [EZ-Script]); integrates with speech recognition and custom bots.

Adds a Personality Forge chatbot to ARC, giving memory, emotions, AIScript dialogue, persistent conversation IDs and speech/chat integration via API.

Synbot plugin for ARC: local Bot Server chatbot integration with SIML dialogs, ControlCommand API, multi-language, learning and emotion support.

Translate text via Microsoft Translator (auto-detect); the EZ-B plugin stores the translated text and the detected language in variables. Requires an Azure key.

VADER sentiment analyzer for ARC returns positive, neutral, negative percents and a compound score from text; enables emotion-driven behaviors.
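
VADER's compound score condenses the summed word valences of a text into the range -1 to +1. The open-source vaderSentiment package performs this step with the normalization score / sqrt(score² + alpha), where alpha = 15. Its README also suggests ±0.05 thresholds for labeling text as positive or negative. The sketch below reproduces only that final step and assumes the valence sum has already been computed. How the ARC skill implements it internally is not described here.

```python
import math


def normalize_compound(valence_sum: float, alpha: float = 15.0) -> float:
    """Map an unbounded summed valence into [-1, 1], as vaderSentiment does."""
    score = valence_sum / math.sqrt(valence_sum * valence_sum + alpha)
    return max(-1.0, min(1.0, score))


def label(compound: float) -> str:
    """Classify a compound score using the thresholds from the vaderSentiment README."""
    if compound >= 0.05:
        return "positive"
    if compound <= -0.05:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for total in (-4.0, 0.0, 1.9, 7.5):
        c = normalize_compound(total)
        print(f"{total:+.1f} -> {c:+.3f} ({label(c)})")
```

A robot script could then, for example, choose a facial expression or servo pose based on the returned label.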

Valence-arousal emotion engine for ARC robots: register events, track mood/personality, output emotion variables to drive servos, displays & actions.

Audio Robot Skills

Advanced Azure-backed speech-to-text for ARC allowing custom Azure Cognitive Service keys, scripting hooks, and configurable output variables.

Advanced multilingual speech synthesis using Azure's natural voices for lifelike robot speech.

Enable UWP voices, change default audio devices, capture audio and route it to EZB controllers with session tracking and device control.

Azure speech recognition for ARC using your custom subscription key for speech-to-text, billed to your Azure account.

Azure TTS for ARC robots: generate natural, customizable neural voices for companion, educational, assistive, and entertainment applications.

English-only speech synthesis using a remote server to generate audio.

Accurate Bing cloud speech-to-text for ARC: wake word, programmable control, $BingSpeech output, Windows language support; headset compatible.

Voice menu tree to navigate options and run scripts on ARC robots. Multi-level, customizable prompts, speech I/O, timeout and repeat/back.

Detect audio frequencies via PC microphone (FFT), output a variable and drive servos within configurable min/max ranges with waveform feedback.
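
The frequency skill runs an FFT on microphone audio. To keep this illustration self-contained, the sketch below substitutes a naive discrete Fourier transform. It yields the same spectrum as an FFT but runs in O(n²), so it only suits short buffers. The code finds the dominant frequency and maps it linearly onto a servo range. The sample rate, frequency window and servo limits are hypothetical parameters, not the skill's actual settings.

```python
import cmath
import math


def dominant_frequency(samples: list[float], sample_rate: float) -> float:
    """Return the frequency (Hz) of the strongest DFT bin, ignoring DC."""
    n = len(samples)
    best_bin, best_mag = 0, -1.0
    for k in range(1, n // 2):  # skip bin 0 (DC) and stay below Nyquist
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate / n


def frequency_to_servo(freq: float, f_min: float, f_max: float,
                       servo_min: int, servo_max: int) -> int:
    """Linearly map a frequency window onto a servo range, clamping outside it."""
    freq = max(f_min, min(f_max, freq))
    ratio = (freq - f_min) / (f_max - f_min)
    return round(servo_min + ratio * (servo_max - servo_min))


if __name__ == "__main__":
    rate, n = 8000.0, 256  # hypothetical sample rate and buffer length
    tone = [math.sin(2 * math.pi * 1000.0 * t / rate) for t in range(n)]
    f = dominant_frequency(tone, rate)
    print(f, frequency_to_servo(f, 200.0, 2000.0, 10, 170))
```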

Google Speech for ARC: cloud speech recognition with waveform display, configurable response scripts and PandoraBot support.

Record audio from your PC mic, auto-trigger and edit sample rate/effects, then play or export recordings to an EZ-B v4 SoundBoard for robot playback.

MIDI I/O for ARC: send/receive notes, run per-note scripts, control PC or external instruments, with panic to stop stuck notes.

Serial MP3 Trigger for EZ-B: plays MP3s from mini-SD; configurable port/baud. Deprecated; replaced by EZ-B v4 streaming audio. Third-party.

Convert text to dynamic, real-time speech with nine expressive OpenAI voices for natural, varied, accessible robot communication.

Example ARC skill demonstrating converting, compressing and streaming MP3/WAV to an EZ-B speaker, with play/stop commands and TTS examples.

Stereo mic sound localization triggers left/right scripts to control robot movement, enabling directional responses (turn/move) to audio.

Trigger a script when EZB audio plays, exposing live Min, Max and Avg audio-level variables for looped monitoring; stops when audio ends.

Map EZ-B audio volume to servos; multi-servo mirroring, scalar range control, invert/min-max, waveform feedback to sync mouth motion.

Maps PC microphone volume to servo positions - control multiple servos (e.g., robotic mouth) with scalar, min/max and invert options.

Maps PC audio volume to servos in real time with scalar, min/max, invert and multi-servo options; ideal for syncing a robot mouth to sound.
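
The three volume-to-servo skills share one idea: scale a measured audio level, clamp it to a servo range, optionally invert it, and send the result to one or more servos. The sketch below is a hypothetical Python version of that mapping. The 0.0-1.0 level scale, the order of the scalar, clamp and invert steps, and the port names "D0"/"D1" are all assumptions, not ARC's documented behavior.

```python
def volume_to_servo(level: float, servo_min: int, servo_max: int,
                    scalar: float = 1.0, invert: bool = False) -> int:
    """Map a normalized audio level (0.0-1.0) to a servo position.

    Hypothetical mapping: amplify by `scalar`, clamp to 0-1, optionally
    flip the direction, then interpolate between servo_min and servo_max.
    """
    scaled = max(0.0, min(1.0, level * scalar))
    if invert:
        scaled = 1.0 - scaled
    return round(servo_min + scaled * (servo_max - servo_min))


def mirror(position: int, ports: list[str]) -> dict[str, int]:
    """Send the same position to several servos (multi-servo mirroring)."""
    return {port: position for port in ports}


if __name__ == "__main__":
    # Hypothetical mouth servos on ports D0 and D1, jaw travel 90-130
    print(mirror(volume_to_servo(0.5, 90, 130, scalar=1.5), ["D0", "D1"]))
```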

Play WAV/MP3 via EZ-B v4, manage tracks, add timed scripts for synced robot actions, control via ControlCommand(), volume and clipping indicators.

Play and manage MP3/WAV sound effects from a PC soundboard, load tracks, trigger or script playback (one file at a time), export and automate.

Play MP3/WAV via PC sound output with timeline scripts to trigger movements, auto-position actions, and optional looping for synced routines.

Run ARC scripts from any speech-to-text source for voice-controlled automation, command parsing and script triggering.

Windows Speech Recognition skill: detect custom phrases via PC mic, trigger configurable scripts/actions with adjustable confidence.

Run custom scripts when speech starts/ends to sync servos and LEDs to spoken $SpeechTxt, with loop support, stop button and logs.

Speak user-defined text via PC audio or EZ-B v4 speaker; configurable voices, effects and speed; uses Windows TTS; programmatically callable.

Configure Windows Audio.say()/Audio.sayEZB() TTS on EZB#0: voice, emphasis, rate, volume, speed/stretch and audio effects; copy control script.

Animate servos to simulate jaw/mouth with ARC text-to-speech; configurable vowel/consonant timing, start sync, multi-servo control, pause/stop.

Offline open-vocabulary speech recognition for Windows 10/11 - low-accuracy open-dictionary voice input with confidence & scripting, headset use.

Real-time voice activity detection for ARC robots: detects speech start/stop, runs customizable scripts, shows live audio visualization and offers tunable sensitivity.

Watson Speech-to-Text ARC plugin: cloud AI transcription with configurable models, selectable VAD (Windows/WebRTC), audio capture and visualization.

Human-like audio via IBM Watson Text-to-Speech: multi-language, selectable voices for accessibility and automated interactions. IBM Cloud required.

Microsoft Windows built-in speech synthesis and recognition for TTS and speech-to-text input in ARC robot projects.

Camera Robot Skills

Overlay PNG/JPG images in real-time onto detected objects, faces, colors or glyphs using ARC Camera tracking; attach, preview, detach.

Interactive camera control for ARC: click-to-center and edge hotspots to pan/tilt servos, adjustable fine-tune and multi-camera support.

Use PC or robot cameras for vision tracking: color, QR, glyph, face, object, motion; record video/data, control servos and movement, run scripts.

Displays trained object names as overlays on the Camera Device video stream using Camera Device's object-tracking (shows $CameraObjectName).

Save camera snapshots to 'My Robot Pictures' (Pictures folder), manually or on a timer (0-100s), and trigger them programmatically via ControlCommand().

Enable an EZB video camera as ARC's camera source for video recording, vision recognition and other robot vision features.

USB camera video source for recognition, recording and other vision tasks in ARC.

Overlay image packs onto camera feed and map images to control-variable ranges with auto/manual assignment, position and sizing options.

Uses Microsoft Cognitive Emotion cloud to analyze camera images, returning emotion descriptions and confidence for speech/output (requires internet).

Microsoft Cognitive Vision integration for ARC: real-time object, face, emotion detection, OCR, confidence data and script-triggered robot actions.

Tiny YOLOv3 CPU-only real-time object detection using a camera; offline detection, with scripts triggered on changes or results returned on demand with classes and scores.

Detects and tracks faces from any ARC video source, providing real-time face localization for robot applications.

Control robot servos via Xbox 360 Kinect body joints with per-joint servo mapping, automatic degree calculation, smoothing and upper/lower control.

Broadcast live audio and video from ARC camera to the web via HLS; cross-browser streaming. Requires router configuration for external access.

Play live web video and audio streams inside ARC from Chrome/Firefox, streaming directly to ARC camera control; network configuration may be needed.

Omron HVC-P ARC plugin: real-time body, hand, face, gaze, gender, age, expression and eye estimation; facial recognition via Omron software.

Omron HVC-P/HVC-P2 ARC plugin: Python-based camera integration for body, hand and face detection, gaze, age/gender, expressions and face recognition.

Windows ARC plugin for Omron HVC-P/HVC-P2: Python-based face/body/hand detection, gaze, age/gender, expressions and recognition; dual-camera support.

Generate and modify images with DALL·E 2 inside Synthiam ARC robots: create images from text or camera input via API and control commands.

Picture-in-picture camera overlay: superimpose one camera onto another; configure source/destination, position, size, border, processed frames, and swap.

Use camera-detected printed cue cards (direction, pause, start) to record and run stored movement sequences for ARC robots.

Create customizable QR codes for ARC, display/scan via Camera Control, trigger scripts on recognition and save decoded text to variables.

Capture a robot skill's display and stream selected area as video to a configured camera device with FPS and live preview crop.

Control a custom ARC robot to manipulate and solve a Rubik's Cube using calibrated arms and grippers; integrates with Thingiverse build.

Capture any screen area and stream video to a configured camera device; requires a Custom device and 100% display scaling.

Cloud-based detection of people and faces in robot camera video; returns locations, gender, age, pose, emotion, plus 68-point facial landmarks.

Stream video from any URI/protocol (RTMP, RTSP, HTTP, UDP, etc.) to a selected ARC camera device for real-time network feed playback.

Overlay translucent PNG targets onto a camera stream with loadable templates, attach/detach control, preview and status for visual alignment.

Overlay a variable on a processed camera image at X/Y coordinates; name the variable and attach to a specific or any available camera.

Real-time TinyYolo object detection for ARC Camera Device: tracks 20 classes, populates camera variables, triggers tracking scripts, 30+ FPS in HD.

Train camera vision objects via ControlCommand(): attach a camera, start learning, monitor progress, and return the object name for scripts.

Record any video source to a local file for playback, archiving, and processing.

Connect a Vuzix 920VR AR headset to ARC to map head movement to robot servos or drive, or to control camera pan/tilt. Deprecated; supports the 920VR only.

Communication Robot Skills

Displays local PC COM/Serial ports opened by scripts, showing open/closed status (no communication logs); not the EZ-B UART.

Configure EZ-B v4/2 Comm Expansion: switch between UART transparent proxy and USB direct PC link (avoids WiFi); EZ-B needs separate power.

Duplicate and synchronize commands from one master EZ-B to multiple slave EZ-Bs for simultaneous, mirrored robot actions.

Diagnose EZ-B Wi-Fi connection issues with verbose EZ-B communication logging, ping tests, log export and diagnostic reports for Synthiam support.

Integrate ARCx with microcontrollers running EZB firmware to enable communication and control of EZB-equipped hardware.

Connects EZ-B I/O controllers to ARC via COM or IP (up to 5 connections). Supports init scripts, battery monitoring, TCP/EZ-Script server and serial/I2C.

Add and manage up to 255 EZ-B I/O controller connections in ARC, select COM/IP ports or device addresses to connect or remove devices.

Local MQTT broker for ARC: host pub/sub messaging on TCP port 1883, relay topics between publisher and subscriber clients.

MQTT client for ARC: connect to brokers, publish/subscribe topics, map incoming messages to variables (incl. binary arrays) and run scripts.

Fetch RSS via GetRSS command; populates $RSSSuccess, $RSSErrorMsg and arrays $RSSTitles, $RSSDescriptions, $RSSLinks, $RSSDates for scripts.
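
The RSS skill fills parallel arrays ($RSSTitles, $RSSDescriptions, $RSSLinks, $RSSDates), one entry per feed item. The sketch below shows the same idea with Python's standard library. It assumes a standard RSS 2.0 feed in which each item carries title, description, link and pubDate elements. Network fetching is omitted so the parsing step can be demonstrated on an inline sample string.

```python
import xml.etree.ElementTree as ET


def parse_rss(xml_text: str) -> dict[str, list[str]]:
    """Split an RSS 2.0 document into parallel lists, one entry per <item>."""
    root = ET.fromstring(xml_text)
    result = {"titles": [], "descriptions": [], "links": [], "dates": []}
    for item in root.iter("item"):
        # Missing elements become empty strings so the lists stay aligned
        result["titles"].append(item.findtext("title", default=""))
        result["descriptions"].append(item.findtext("description", default=""))
        result["links"].append(item.findtext("link", default=""))
        result["dates"].append(item.findtext("pubDate", default=""))
    return result


SAMPLE = """<rss version="2.0"><channel><title>Demo</title>
<item><title>First</title><link>https://example.com/1</link>
<description>Hello</description><pubDate>Mon, 01 Jan 2024 00:00:00 GMT</pubDate></item>
<item><title>Second</title><link>https://example.com/2</link></item>
</channel></rss>"""

if __name__ == "__main__":
    feed = parse_rss(SAMPLE)
    for title, link in zip(feed["titles"], feed["links"]):
        print(title, link)
```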

Execute scripts on WiFi/network connect or disconnect; monitor adapter, store status in a variable and trigger custom scripts for headless SBC robots.

Send robot status alerts to iOS, Android and Windows via Pushover.net. Configure User/App keys to notify on low battery, stuck or task events.

PC and EZB serial terminals for ARC: connect, monitor and send text/ASCII to serial devices. Supports COM, flow control, baud, DTR/RTS, hex.

Send SMTP email (text or camera images) from ARC robots via ControlCommand; configure SMTP/auth and sender; saves credentials in project.
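
The email skill sends text or camera images over SMTP. The sketch below builds such a message with Python's standard email package. The addresses, filename and JPEG bytes are placeholders. The actual send step is commented out because it requires your own SMTP host, port and credentials.

```python
from email.message import EmailMessage


def build_snapshot_email(sender: str, recipient: str, subject: str,
                         body: str, jpeg_bytes: bytes) -> EmailMessage:
    """Compose a plain-text email with a camera snapshot attached as JPEG."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    msg.add_attachment(jpeg_bytes, maintype="image", subtype="jpeg",
                       filename="snapshot.jpg")
    return msg


if __name__ == "__main__":
    fake_jpeg = b"\xff\xd8\xff\xe0" + b"\x00" * 16  # placeholder, not a real image
    msg = build_snapshot_email("robot@example.com", "me@example.com",
                               "Motion detected", "See attached frame.", fake_jpeg)
    print(msg["Subject"], [p.get_filename() for p in msg.iter_attachments()])
    # Sending would look like this (host, port and login are your own settings):
    # import smtplib
    # with smtplib.SMTP("smtp.example.com", 587) as s:
    #     s.starttls()
    #     s.login("user", "password")
    #     s.send_message(msg)
```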

Telnet-like ASCII TCP client for ARC: connect to IP:port, send ASCII, view raw or HEX server responses with local echo.

Monitor incoming TCP Server connections when TCP Server is enabled under Connection > Config; view and track live connections.

TCP server for ARC accepting EZ-Script, JavaScript or Python commands, letting controllers send commands and receive line-terminated responses.

WebSocket client for ARC: open/send messages, store server responses in a variable, run a response script, and track connection status.

WebSocket server for ARC: accepts clients, stores messages, runs per-message scripts, tracks connection status and supports debugging; requires Windows administrator rights.

Digital Robot Skills

Read TTL digital input from an ARC I/O port; real-time red/green status for low (0V) or high (+3.3/5V), selectable board/port and read interval.

Toggle a digital I/O port between TTL low (0V) and high (3.3V/5V) in ARC; select board and port for simple on/off control (signal only).

Display Robot Skills

Configurable Chromium browser skill for ARC: navigate URLs, print, open in PC browser, maximize/restore, set page content to a global variable.

Full-screen popup display for ARC: show custom text with configurable timeout, font, text/background color, size and position via ControlCommand().

Fullscreen overlay video player controlled by ControlCommand: play/pause/resume/stop; ESC cancels playback and locks new playback until unpaused.

Games Robot Skills

Play Tic Tac Toe with your ARC robot: configurable turn, win and draw scripts, cheat commands and optional speech-recognition control.

General Robot Skills

Diagnose EZ-B connection speed and reliability; benchmark ADC read rates (4-70/sec), reveal flood-control effects and optimize data throttle.

Central ARC debug window routing logs; shows UTC date/time, Windows/ARC/skill/hardware info, version/settings, with copy and clear.

Collects and sends ARC diagnostic reports to Synthiam to help troubleshoot EZ-B and robot connection issues.

Configures and pushes WiFi SSID, password, system name and channel to EZ-Robot EZ-B v4 from ARC; saves settings with project for quick redeploy.

Manage EZ-B hardware settings: edit Bluetooth name and apply updates to restore altered configurations.

Displays EZ-B v4 internal temperature and battery voltage, shows built-in battery monitor and LiPo protection settings in Connection Control.

Discover EZB WiFi controllers by name, auto-bind connections, avoid IP management; live discovery and multi-EZB support for ARC.

Stress-tests EZ-B controllers (UART, ADC, voltage, temp, audio, digital I/O) to detect disconnects, corruption and stability issues.

Displays files currently open for reading by scripts, shows only read-opened files, helps diagnose read/write locks and file access errors.

Notepad for ARC: store project notes and connection details in a themed window.

Record your screen to WMV1/WMV2/H263P files with selectable bitrate, save-folder and start/stop controls, creating tutorial or demo video snippets.

Shortcut Creator relocated to the ARC Options menu; new manual page provides updated Shortcut Creator documentation.

Tutorial slide plugin for ARC: create, format and embed text and images per .ezb project; navigate/update slides via ControlCommand; auto-resize images.

GPS Robot Skills

Deprecated: reads latitude, longitude, UTC time and speed from ublox NEO-6M GPS; auto-inits UART0@9600, shows processed and raw data.

Graphs Robot Skills

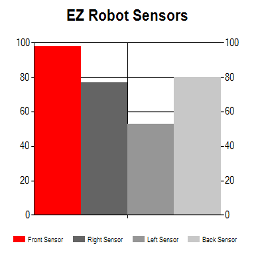

4-series bar chart for real-time sensor comparison in ARC; set titles, values, RGB bar colors and conditional coloring via EZ-Script.

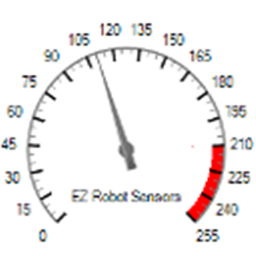

Dashboard-style configurable gauge chart with red-zone indicator, adjustable range/arc, scale ticks, title and live value updates.

4-series historical line chart to log and compare robot sensor values over time with customizable titles, colors, time axis and EZ-Script control.

Plot live data with ControlCommand scripts: multi-series charts, many chart types, legend toggle, add/remove series, export graph data to CSV.

Real-time 3D servo visualization with motion trails, interactive camera controls and clear trail for ARC debugging and analysis.

I2C Robot Skills

EZ-Robot 3-in-1 IMU driver: accelerometer, gyroscope and compass support for motion sensing, orientation tracking and heading/navigation.

EZB v4-Arduino I2C bridge for ARC: supports custom C# code and reads gamepad or Wii controller inputs for robot control.

BlinkM I2C multi-color LED control with slide dials for custom colors; supports SendI2C for custom I2C peripherals. Third-party device.

Adds IR sensor support and button mapping for USB joysticks in ARC, enabling remote control functions; third-party hardware supported.

Dual-axis I2C magnetic sensor for EZ-B robots enabling magnetic direction sensing; requires short (2-3 in) wiring. Third-party hardware.

ESP32/Arduino EZB firmware with MPU6050 support: provides accelerometer angles and Yaw/Pitch/Roll gimbal servo values for stabilization and scripting.

Reads an HMC5883 3-axis compass via I2C and updates EZ-Script variables on demand; the polled control provides periodic heading data from the connected sensor.
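
A 3-axis magnetometer such as the HMC5883 is commonly converted to a compass heading by applying atan2 to the horizontal X and Y field components. The minimal sketch below assumes the sensor is mounted level, the raw axis values have already been read, and an optional magnetic declination (in degrees) is supplied by the user. Whether the ARC skill applies declination or tilt compensation is not stated here, and axis signs depend on how the sensor is mounted.

```python
import math


def heading_degrees(x: float, y: float, declination_deg: float = 0.0) -> float:
    """Compass heading in [0, 360) from level-mounted magnetometer X/Y readings.

    Assumes a level sensor; the declination correction is optional and user-supplied.
    """
    heading = math.degrees(math.atan2(y, x)) + declination_deg
    return heading % 360.0


if __name__ == "__main__":
    for x, y in [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]:
        print(x, y, round(heading_degrees(x, y), 1))
```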

Control for Adafruit 8x8 LED Matrix (HT16K33) via EZ-B v4 I2C; creates animations, supports module initialization and EZ-Script control.

Lidar-Lite laser rangefinder control for ARC: reads distance, velocity and signal strength (0-40 m+) via EZ-B v4 I2C and sets EZ-Script variables.

I2C accelerometer for EZ-B robots; supplies IMU/acceleration data over the I2C interface.

Reads MPU6050 gyro, accelerometer and temperature via I2C, initializes and returns data on demand to EZ-Script variables using ControlCommand RunOnce.

Flash Arduino Uno firmware to read DHT11 temp/humidity and MPU6050 IMU simultaneously; provides $AngleX, $AngleY and $AngleZ variables.

MPU9150 ARC control reads compass, gyro, accelerometer and temperature via I2C, initializes and sets EZ-Script variables on demand.

Create, edit and play animated frames on an RGB 8x8 LED matrix via I2C with looping, adjustable pauses and scriptable EZ-Script canvas control.

Create custom animations for JD Humanoid's 18 RGB Eyes LEDs via I2C - per-LED color frames, transitions, pauses, scripting and real-time preview.

Infrared Distance Robot Skills

Stops movement panels when a Sharp IR sensor on EZB ADC detects objects within a set range; displays ADC voltage, VU meter and 0-255 distance value.

Radar-style Sharp IR scanner for EZB ADC: sweeps sensor, displays distance dots, logs ADC voltage, and can steer/avoid obstacles via Movement Panel.

Misc Robot Skills

Map Kinect, Kinect One or Asus Xtion Pro skeletal joints to EZ-Script variables and control servos with customizable angle calculations.

Read DHT11 temperature and humidity on Arduino (signal pin 4) using EZ-Genuino_DTH11_Uno firmware; exposes $Temp and $Humid variables.

Map TI eZ430-Chronos watch accelerometer X/Y tilt to ARC servos for intuitive, Wii-Remote-style control of servo positions.

Send web-triggered IFTTT events from ARC/EZ-B: configure Maker Webhooks key and trigger applets to control SmartThings, WeMo and other services.

Receive iPhone Sensor Stream data in ARC, map accelerometer, gyro and compass to variables for EZ-Script control and visualization.

ARC utilities: DataQuery for SQL/ODBC/OLEDB/Excel with parameterized queries returning array variables; Ticks/Millis timing functions.
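
DataQuery runs parameterized queries and returns each result column as an array variable. The sketch below shows the same pattern with Python's built-in sqlite3 module. An in-memory SQLite database stands in for the SQL/ODBC/OLEDB/Excel sources the skill supports, and the table and column names are hypothetical.

```python
import sqlite3


def query_columns(conn: sqlite3.Connection, sql: str, params: tuple) -> dict[str, list]:
    """Run a parameterized query and return one list per result column."""
    cur = conn.execute(sql, params)  # '?' placeholders keep values out of the SQL text
    names = [d[0] for d in cur.description]
    columns = {name: [] for name in names}
    for row in cur.fetchall():
        for name, value in zip(names, row):
            columns[name].append(value)
    return columns


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE faces (name TEXT, visits INTEGER)")  # hypothetical table
    conn.executemany("INSERT INTO faces VALUES (?, ?)",
                     [("Ada", 3), ("Grace", 5), ("Linus", 1)])
    print(query_columns(conn,
                        "SELECT name, visits FROM faces WHERE visits >= ? ORDER BY name",
                        (2,)))
```

Passing values as parameters rather than concatenating them into the SQL string is what makes the query safe to drive from user or speech input.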

DIY NeoPixel Blaster for ARC - open-source controller for up to 8×80 LEDs (640), port‑0 onboard RGB, color control via ControlCommand.

ARC UI for onboard computers to control NeoPixel rings via serial: test/run patterns, preview and copy Arduino serial commands, includes sample code.

Scriptable Remote Mouse for ARC: control PC cursor and ARC window via ControlCommand, using voice, camera or I/O inputs; shows cursor coordinates.

Rotate and position camera or object with roll/pitch/yaw controls; save camera settings; bind or monitor a 3-value EZ-Script array for live rotation.

EZB UART parser and Serial-TCP bridge to connect EZ-Robot cameras via Teensy USB, enabling ARC camera control and USB-to-serial bridging.

Display robot image with real-time servo position readouts and basic servo management, including servo deletion.

Movement Panels Robot Skills

Control Parrot AR Drone v1/v2 via ARC: keyboard, joystick, speech, Wii, web; camera feed, face/color/motion/glyph tracking.

Create servo frames/actions to animate humanoid, hexapod or custom gaits with smooth transitions, SW ramping and directional control.

Control brushless motor controllers (hoverboard-style) via EZ-B: configure direction, speed and optional brake ports for DIY robot movement.

Movement panel using two or more continuous servos to control robot motion via movement commands.

Control two continuous servos for bidirectional robot movement with speed sliders, configurable ports/stop values, testing and brake/coast options.

Custom Movement Panel maps directional commands to scripts, adjusts per-wheel speed (0-255), and integrates with joysticks for unsupported motors.

Custom Movement V2 panel for ARC: scripts for F/R/L, Reverse, roll left/right, up/down with speed mapping to PWM for drones, mecanum & custom drives.

Control DJI Tello from ARC with live camera feed for computer vision tracking; fly via scripts, speech, joysticks, Python, or Exosphere.

Two-channel Dual H-Bridge movement panel to control two DC motors (on/off) for forward, reverse, left, right via EZ-B digital ports; no PWM speed.

Dual H-Bridge w/PWM movement panel: control two DC motors' direction and speed via EZ-B PWM for responsive forward/reverse/turning.

Balance Sainsmart v3 robots via ARC and custom EZB firmware: PID tuning (Kp/Ki/Kd), angle offset, movement controls, realtime diagnostics.
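
The balancing skill exposes Kp, Ki and Kd gains along with an angle offset. The textbook discrete PID loop below is provided for orientation only. The gains, time step and the use of the offset as the setpoint are assumptions. The custom EZB firmware may structure its controller differently, for example by adding integral limits or filtering.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    setpoint: float = 0.0  # target tilt angle; assumed to include any angle offset
    integral: float = field(default=0.0, init=False)
    prev_error: Optional[float] = field(default=None, init=False)

    def update(self, measurement: float, dt: float) -> float:
        """Return the control output for one time step of length dt (seconds)."""
        error = self.setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    pid = PID(kp=15.0, ki=0.5, kd=0.8, setpoint=2.0)  # hypothetical gains and offset
    for angle in (5.0, 3.5, 2.5, 1.8):
        print(round(pid.update(angle, dt=0.01), 3))
```

On a real robot, the output would drive motor speed and direction, and its sign depends on how the IMU and motors are wired.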

Legacy iRobot Discovery and 4xxx control via EZB software serial (D0); supports Arduino/EZ-Robot and an adjustable baud rate; not for hardware UART/USB.

Control Roomba/Create via ARC: drive, stream sensors, read encoders, configure COM/HW UART, and send pose to NMS for mapping/navigation.

Connects Ohmnilabs Telepresence robot's UP Board COM to ARC, enabling drive and servo control (neck V0) and integration with ARC cameras.

Deprecated Roomba control; replaced by the iRobot Roomba Movement Panel for updated movement control.

Movement panel enabling Kangaroo & Sabertooth encoder-based speed/velocity control, UART/PC serial options, tuning and encoder setup.

Sabertooth movement panel: control two motors via Simplified Serial with variable speed sliders, movement buttons, and serial port options.

Control Sphero via Bluetooth in ARC. Detects two COM ports (one connects); requires deleting and re-pairing after disconnects or reboots.

Bluetooth control for UBTech Alpha1 servos via ARC: map Vx to servo IDs, enable servos, and integrate with ARC movement & LEDs.

UART-based EZ-B/ESP32 skill to control WowWee MIP, enabling ESP32-Cam camera support and flexible battery-powered integration.

Hack a WowWee MIP via UART to control it from ARC with EZB/ESP32 devices (ESP32-Cam preferred). Supports UART or SoftwareSerial at 9600/115200 baud.

Movement panel integrating WowWee Rovio with Synthiam ARC for remote drive, live camera, audio and docking control. Under development.

Power Robot Skills

Prevents a PC from entering sleep mode via a tiny, resizable control that keeps the system awake while using minimal UI space.

PWM Robot Skills

Slider to set EZ-B digital PWM for motor speed or LED brightness; shows duty-cycle, stop button, and board/port selection.

Rafiki Robot Skills

Rafiki front bumper plugin: reads 4 proximity sensors via serial, updates ARC variables, blocks motor movement toward detected obstacles for SLAM.

Remote Control Robot Skills

3D Avatar JD simulator with bidirectional servo sync, action sequencing, $SIM_ scripting, EZ-B emulator support and pose/movement visualization.

Remote telepresence and control for any robot: human-assisted autonomy, live audio/video, task marketplace to improve safety, efficiency and AI.

Draws a live floor map of robot movement using Movement Panel, logging direction, distance and heading; configurable speed and turn settings.
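
Drawing a floor map from Movement Panel activity is a form of dead reckoning, where each segment's heading and distance advance an (x, y) position. The minimal sketch below assumes headings are given in degrees measured clockwise from north, a common map convention that is not necessarily the skill's. It also assumes each distance has already been estimated, for example from speed and elapsed time. Because no sensor corrects the estimate, errors accumulate with every segment.

```python
import math


def dead_reckon(segments: list[tuple[float, float]],
                start: tuple[float, float] = (0.0, 0.0)) -> list[tuple[float, float]]:
    """Accumulate (heading_deg, distance) segments into (x, y) waypoints.

    Assumed convention: heading 0 = north (+y), 90 = east (+x), increasing clockwise.
    """
    x, y = start
    path = [(x, y)]
    for heading_deg, distance in segments:
        rad = math.radians(heading_deg)
        x += distance * math.sin(rad)
        y += distance * math.cos(rad)
        path.append((x, y))
    return path


if __name__ == "__main__":
    # Hypothetical drive: 10 units north, 5 east, then 10 south
    for px, py in dead_reckon([(0, 10), (90, 5), (180, 10)]):
        print(round(px, 2), round(py, 2))
```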

Remote web control of ARC desktop: live screen interaction, movement control, camera list, EZ-Script/ControlCommand console with remote execution.

HTTP server for ARC serving HTML/CSS/PNG/JPG from ARC/HTTP Server Root; supports AJAX tags (ez-move, ez-script, ez-camera) to control the robot.

Create touchscreen robot control panels with buttons, joysticks, sliders, camera views and scripts; multi-page fullscreen UIs for PC and mobile.

DirectInput joystick control for ARC: drive movement panels, control servos, assign button scripts, variable speed and rumble feedback.

XInput joystick skill for ARC: maps Xbox controllers to movement, servos, triggers, vibration, variables; supports analog inputs and scripts.

Assign scripts to any keyboard key (press/release), map arrow keys to movement controls; activates when the control has focus (green).

Analog joystick UI to control any ARC movement panel via mouse/touch, with configurable dead zone, max speed and center reset for smooth robot motion.

On-screen joystick to control any movement panel in ARC projects, providing intuitive real-time movement control.

Map Myo armband gestures and accelerometer to servos and ARC scripts for arm-mimic control; supports multiple armbands.

PC Remote UI Client for ARC enables remote robot control via customizable multi-page interfaces from other ARC instances.

Sketch paths with finger or mouse and set turn/move speeds; interactive, educational demo of timing-based, sensorless robot navigation and limits.

Control servos, movement panels and scripts via Wii Remote or mobile accelerometer; D-pad, buttons and Home-enabled accelerometer tracking.

Scripting Robot Skills

Real-time EZ-Script console for ARC: enter and execute single-line commands, view output, clear display, and recall history with UP/DOWN.

Adds the GetLineContainingFromArray() EZ-Script function, which returns the first array item that contains the specified text, simplifying substring searches.

Trigger direction-specific scripts when ARC movement panels change; assign scripts per direction/stop, access direction and speed (JS/Python/EZ).

Run scripts automatically when specified ARC variables change; define variable-to-script pairs, monitor status, and trigger actions.

Background randomizer that executes timed scripts to add lifelike actions (movement, servos, camera/control) and unique personality to your robot.

Record and replay EZB communications (servo & digital commands) with forward/reverse playback, save recordings and trigger them from scripts.

Multi-language ARC Script: build, run and debug Blockly, JavaScript, EZ-Script or Python with Intellisense, run/save/load and Roboscratch support.

Run JavaScript or Python scripts to automate processes and control other ARC robot skills.

Manage and execute multiple scripts in a single Script Manager using ControlCommand(), with Control Details showing available commands.

Monitor and diagnose running ARC scripts: view active script labels, statuses, and stop long-running or background scripts easily.

Bind scripts to servo moves (V1-V99); triggers on position/speed/accel changes and provides ports, positions and speeds arrays for custom control.

Script-driven Sketch Pad for ARC: draw shapes, text, lines and faces via ControlCommand() to visualize object locations, paths and robot data.

Adjust a numeric variable via slider (0-1000) with customizable min/max, center button and scripts triggered on value change or bonus button press.

Efficient ARC Variable Watcher - lower CPU for large projects; slow initial array render but faster updates; shows name, type, value, length.

Replaced by Sound Script (Speech Script) robot skill; see Synthiam's Speech Script support page.

Split complex robot tasks into sequential scripts, synchronizing asynchronous skills via NEXT/CANCEL commands for staged automation.

Add custom EZ-Script functions in C# or JS; intercept unknown calls, parse parameters and return values via AdditionalFunctionEvent.

Persist and auto-load specified global variables between ARC sessions for consistent, personalized robot behavior and quick state restoration.

Live view of script variables, types, sizes and values for debugging; auto-refresh, hex view, pause/clear; may affect program performance.

Servo Robot Skills

Create servo frames and actions to animate gaits and gestures with automatic motion planning, software ramping, movement panel, import/export.

Auto-release servos after inactivity: set EZB board/ports, choose 1-60s delay, add/remove ports, pause and view status.

Control a 3-wire continuous 360-degree servo in ARC: adjustable forward/reverse speeds, start/stop buttons, board/port selection and test controls.

Control up to 99 servos via Vx virtual ports over serial (Arduino/custom firmware), integrating with ARC for scalable servo management.

Control Robotis Dynamixel XL-320/AX-12/XL430 via ARC virtual servos; supports position, speed, velocity, acceleration, bidirectional UART.
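
The AX-12 uses Dynamixel Protocol 1.0. In this protocol, a goal-position command is a short packet consisting of two 0xFF header bytes, the servo ID, a length byte, the instruction, parameters and a checksum. The sketch below builds a WRITE_DATA (0x03) packet for the AX-12 goal-position register at address 30. The XL-320 and XL430 use Protocol 2.0, which has a different header and a CRC-16 checksum, so this framing does not apply to them. Check the Robotis e-Manual for your specific model.

```python
def ax12_goal_position_packet(servo_id: int, position: int) -> bytes:
    """Dynamixel Protocol 1.0 WRITE_DATA packet setting AX-12 goal position (0-1023)."""
    if not 0 <= position <= 1023:
        raise ValueError("AX-12 goal position is 0-1023")
    instruction = 0x03  # WRITE_DATA
    # Start address 30 (Goal Position), then the value as little-endian low/high bytes
    params = [30, position & 0xFF, (position >> 8) & 0xFF]
    length = len(params) + 2  # parameters + instruction byte + checksum byte
    checksum = (~(servo_id + length + instruction + sum(params))) & 0xFF
    return bytes([0xFF, 0xFF, servo_id, length, instruction, *params, checksum])


if __name__ == "__main__":
    print(ax12_goal_position_packet(1, 512).hex(" "))  # roughly the center position
```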

Control Feetech serial-bus servos via EZB UART or PC COM; map ARC virtual ports to IDs; supports position, speed, acceleration, release.

Control Feetech SC series serial-bus servos via EZB UART or PC COM port; map virtual Vx IDs, set position, speed, acceleration, and release in ARC.

Mouse-drag horizontal servo control with configurable min/max limits, center/release functions, multi-servo mirroring and direction invert.

Customizable inverse/forward kinematics editor for robot arms: add joints/bones, map XYZ in cm, auto-calc joint angles for precise 3D positioning.

Control Kondo KRS ICS2/3.5 servos via EZ-B UART; experimental, untested. Supports chaining, virtual servo ports, Release() and Servo() commands.

Control LewanSoul LX-16A servos via ARC: assign virtual ports, read positions, switch servo/continuous modes, and set motion speed/baud.

Compact Lynxmotion Smart Servos with position feedback, safety features and UART TX/RX for Arduino/EZ-B v4; scriptable temp, load, load-dir and ping.

USB control for Pololu Maestro (6/12/18/24) via ARC Vx ports. Maps Vx to Maestro channels; supports position, speed, acceleration, release.
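
Pololu documents a compact serial protocol for the Maestro. In it, a channel target is set with the command byte 0x84, followed by the channel number and the target split into two 7-bit bytes. The target is expressed in quarter-microseconds. The sketch below shows that encoding. How ARC maps its servo positions onto pulse widths is not described here, so the function takes the pulse width directly.

```python
def maestro_set_target(channel: int, pulse_us: float) -> bytes:
    """Encode a Maestro 'Set Target' command (compact protocol) for one channel."""
    target = round(pulse_us * 4)  # Maestro targets are in 0.25 us units
    if not 0 <= target <= 0x3FFF:
        raise ValueError("target must fit in 14 bits")
    # Low 7 bits first, then the next 7 bits
    return bytes([0x84, channel, target & 0x7F, (target >> 7) & 0x7F])


if __name__ == "__main__":
    print(maestro_set_target(0, 1500).hex(" "))  # center a servo on channel 0
```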

Add ADC positional feedback to hobby PWM servos via a minor mod to EZ-B/Arduino; enables real-time position readout, calibration and puppet mirroring.

Real-time servo position input via Arduino ADC over I2C; supports Nano (6 channels) or Mega (16), configurable I2C address and scalable chaining.

Synchronize servos by designating a master; slave servos mirror scaled (decimal/negative) positions with min/max limits and pause control.

Inverse kinematics for Robotis OpenManipulatorX: compute joint angles and MoveTo 3D (cm) positions, supports camera-to-CM mapping for pick-and-place.
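
Inverse kinematics skills like these solve for the joint angles that place an end effector at a target point. The core idea is easiest to see in the two-link planar case, which can be solved with the law of cosines. The link lengths below are hypothetical. The real OpenManipulatorX solver also handles additional joints, base rotation and joint limits. A forward-kinematics function is included to verify the result.

```python
import math


def two_link_ik(x: float, y: float, l1: float, l2: float) -> tuple[float, float]:
    """Return (shoulder, elbow) angles in radians that reach (x, y).

    Picks the solution with a positive elbow angle; the mirrored solution also exists.
    """
    d2 = x * x + y * y
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def two_link_fk(shoulder: float, elbow: float, l1: float, l2: float) -> tuple[float, float]:
    """Forward kinematics, used to check an IK solution."""
    return (l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow),
            l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow))


if __name__ == "__main__":
    s, e = two_link_ik(10.0, 5.0, 8.0, 6.0)  # target and link lengths in cm
    print(round(math.degrees(s), 1), round(math.degrees(e), 1))
```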

Control a servo with an on-screen mouth widget for interactive positioning and testing.

Drag mouse or finger as a virtual joystick to control pan/tilt x- and y-axis servos with configurable ports, limits, inversion, and backgrounds.

Record and replay named servo movements with adjustable speed/direction (-5 to +5), multiple recordings, ControlCommand triggers and a status variable.

Adjust servo/PWM speed (0-20) between two positions to quickly experiment and tune motion; select board/port; settings aren't saved.

All-in-one servo view showing and editing servo positions and speeds; add servos and resize the control to fit.

ARC integration for SSC-32 servo controllers via PC COM or EZ-B/Arduino UART; maps V0-V31, configurable baud, enables servos in ARC skills.
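
The SSC-32 accepts ASCII commands of the form `#<channel> P<pulse width> T<time>` terminated by a carriage return, with pulse widths in microseconds. The sketch below builds such a command for one of the V0-V31 channels. The linear mapping from a 1-180 position to 500-2500 µs is an assumption for illustration and not ARC's documented calibration.

```python
from typing import Optional


def position_to_pulse(position: int, pos_min: int = 1, pos_max: int = 180,
                      pulse_min: int = 500, pulse_max: int = 2500) -> int:
    """Hypothetical linear map from a servo position to a pulse width in microseconds."""
    position = max(pos_min, min(pos_max, position))
    ratio = (position - pos_min) / (pos_max - pos_min)
    return round(pulse_min + ratio * (pulse_max - pulse_min))


def ssc32_move(channel: int, pulse_us: int, time_ms: Optional[int] = None) -> str:
    """Build an SSC-32 move command such as '#5 P1500 T1000' plus a carriage return."""
    if not 0 <= channel <= 31:
        raise ValueError("SSC-32 channels are 0-31")
    cmd = f"#{channel} P{pulse_us}"
    if time_ms is not None:
        cmd += f" T{time_ms}"  # optional move duration in milliseconds
    return cmd + "\r"


if __name__ == "__main__":
    print(repr(ssc32_move(5, position_to_pulse(90), time_ms=1000)))
```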

Control up to 127 stepper motors via Arduinos mapped to ARC servos; supports any stepper driver, serial network, home calibration, speed/accel.

Control UBTECH Alpha UBT-12HC smart servos via ARC using EZ-B v4/IoTiny UART, assign virtual ports, set baud, custom bits and position mapping.

Drag vertically to control a servo within set min/max limits; center, release, invert, and mirror to other servos. For 3-wire GVS servos.

Control Waveshare servos (TTL/RS485): set position & speed, release torque, read position. RTS option for converters; reverse-engineered protocol.

Ultrasonic Robot Skills

Add HC-SR04 ultrasonic sensors with an EZ-B to a Parrot AR Drone v1/v2 for collision detection and avoidance.

Stops robot movement (no steering) when an EZB-connected ultrasonic sensor detects an object within a set range; integrates with scripting and paused polling.

Ultrasonic sensor triggers custom script on object detection; configurable interval & min distance, with optional forward-only trigger for navigation.

Displays HC-SR04 ultrasonic distance readings in ARC; scriptable via GetPing(), pausable, sets a variable with a multiplier, optional NMS output.
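
An HC-SR04 reports distance as the width of its echo pulse. Because sound travels to the object and back, the distance equals half the round-trip time multiplied by the speed of sound (about 343 m/s in air at 20 °C). The sketch below shows that arithmetic along with a multiplier like the one the skill applies to its variable. The multiplier's exact semantics are assumed here. The sketch illustrates only the physics, not how the EZ-B measures the pulse.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 degrees C


def echo_to_cm(echo_us: float, multiplier: float = 1.0) -> float:
    """Convert an HC-SR04 echo pulse width (microseconds) to centimetres.

    `multiplier` is an assumed user scale factor applied to the final distance.
    """
    one_way_s = (echo_us / 1_000_000) / 2  # round trip -> one way
    return one_way_s * SPEED_OF_SOUND_M_S * 100 * multiplier


if __name__ == "__main__":
    print(round(echo_to_cm(1000), 2))  # a 1 ms echo is roughly 17 cm
```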

Ultrasonic on a servo sweeps 180°; radar shows distances and obstacles, integrates with movement panels for automatic avoidance, scriptable GetPing.

Virtual Reality Robot Skills

Dual-camera server with servo control for stereo VR; includes C# test app and Unity (Oculus Quest 2) bindings for headset, hands and controllers.

Immersive Meta Quest VR control for Synthiam ARC robots: stereoscopic camera view, map servos to hands/controllers, plus built-in remote desktop.

Remote camera & servo TCP server for ARC - streams video to clients and accepts servo positions from Unity/C# apps; includes example projects.

Stream robot camera to VR and control servos with headset pitch/yaw; supports SteamVR and Cardboard (iOS/Android) for immersive teleoperation.