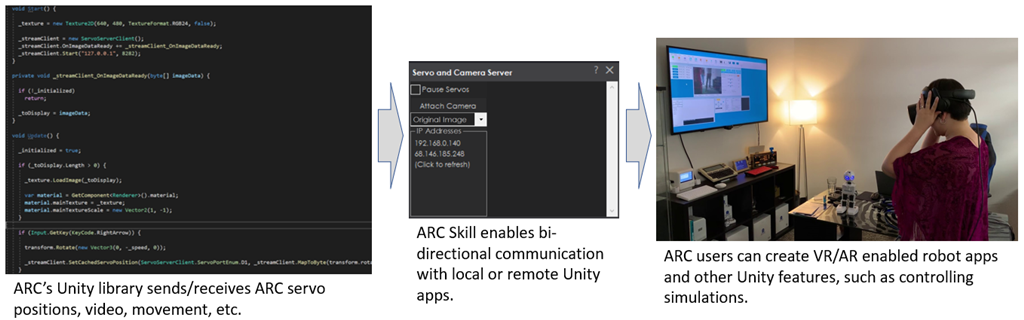

Remote camera & servo TCP server for ARC - streams video to clients and accepts servo positions from Unity/C# apps; includes example projects.

How to add the Single Camera Servo Server robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Virtual Reality category tab.

- Press the Single Camera Servo Server icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Single Camera Servo Server robot skill.

How to use the Single Camera Servo Server robot skill

This is a servo and camera video server that lets a remote client move servos and receive a video stream from a camera device. It is especially useful for Unity apps that operate as a client to ARC: the app receives the camera video stream and sends servo positions. The camera must be streaming for the servo positions to transmit.

Demo #1 This is an overview of how this robot skill can integrate with a Unity scene using the Unity animation tool.

Demo #2 This example uses a block in Unity that controls an EZ-Robot JD shoulder. It's a very simple example of how powerful and easy it is to control any servo in ARC. The block has gravity and a hinge, so as it swings, the angle is read and pushed into ARC to move the respective servo.

Demo #3 This is Mickey's demonstration of controlling the servos through Unity and joysticks.

How It Works

Code from the client (Unity, in this case) connects to the Single Camera Servo Server skill over TCP. The client streams servo positions to ARC and receives the camera view in return.

Example Client App Source Code

Here is an example test app that connects to localhost (127.0.0.1), moves a servo on port D2, and displays the camera video stream. The sample app is C# .NET source code and can be downloaded.

Download C# .Net Example Source code: Test App.zip (2020/12/17)

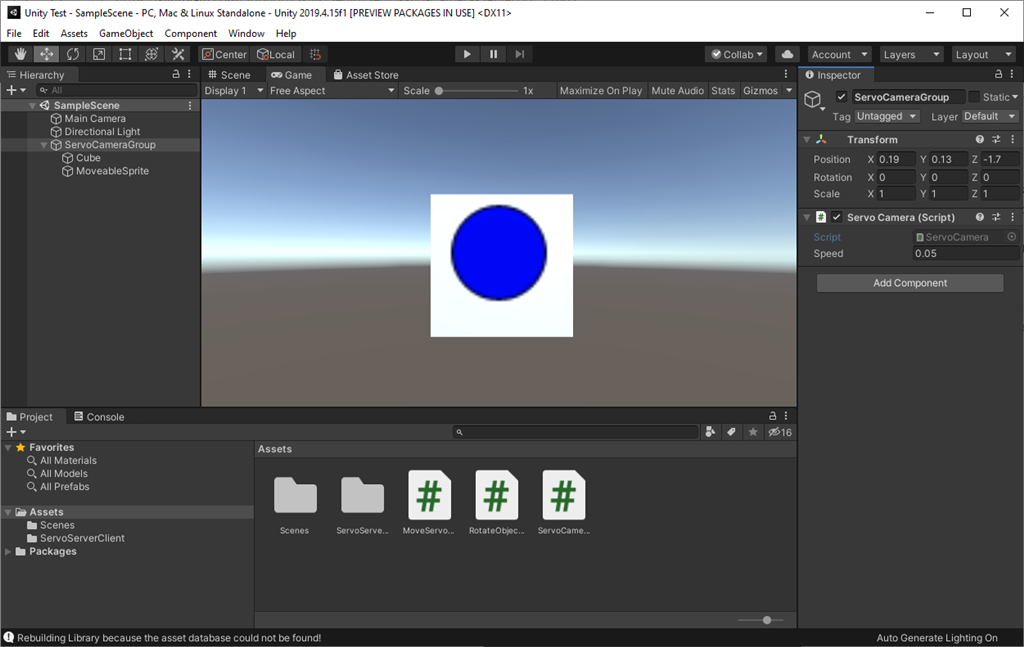

Test Unity Project

I have included a test Unity project for reference. The example rotates a cube on the screen using the ARROW keys. The cube projects the texture from the camera stream onto it. The arrow keys will also move the servos connected to ports D0 and D1 relative to the rotation of the cube.

You can download the project here: Unity Test.zip (2020/12/17)

Use In Unity

The stream client files in the "Test App" can be included in a Unity project to receive the video and move servos. The test app demonstrates how to move the servos using the client's methods and how to display the video on Windows. To display the video in Unity, follow the steps below. The video is delivered as a Texture2D that can be applied to any material.

To use this in your Unity App, copy the files from the Test App\ServoServerClient*.cs into your Unity project.

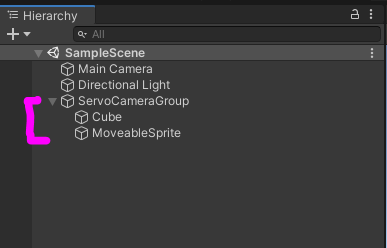

Examine Test Project

The Unity project displays the ARC camera stream on a rotating cube while allowing the 2D sprite to control servos D0 and D1 by its X and Y position, respectively. Clicking on the scene moves the sprite and also moves the servos.

Any component within the group can have its position, rotation, etc. extracted and sent to ARC. If you have a 3D model of a robot, each joint position/rotation can be sent to ARC.
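As a rough illustration of turning a joint value into a servo position, the sketch below linearly maps a float from one range onto a servo degree range. `ServoMath`, `MapToServoDegrees`, and the default output range of 1 to 180 are assumptions made for this example and are not part of the ServoServerClient files; the client in the Test App has its own mapping helpers (such as `MapToByte`, used in the code further down).

```csharp
using System;

// Hypothetical helper for illustration only; not part of the ServoServerClient library.
public static class ServoMath
{
    // Linearly maps value from [inMin, inMax] to [outMin, outMax].
    // Inputs outside the input range are clamped, and the result is
    // rounded to the nearest whole degree.
    public static int MapToServoDegrees(float value, float inMin, float inMax,
                                        int outMin = 1, int outMax = 180)
    {
        if (inMax <= inMin)
            throw new ArgumentException("inMax must be greater than inMin");

        float clamped = Math.Max(inMin, Math.Min(inMax, value));
        float normalized = (clamped - inMin) / (inMax - inMin); // 0..1
        double degrees = outMin + normalized * (outMax - outMin);

        return (int)Math.Round(degrees, MidpointRounding.AwayFromZero);
    }
}
```

For example, with an input range of -1 to +1, `ServoMath.MapToServoDegrees(-1f, -1f, 1f)` gives 1 and `ServoMath.MapToServoDegrees(1f, -1f, 1f)` gives 180. Clamping keeps an out-of-range joint value from producing an invalid servo position.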

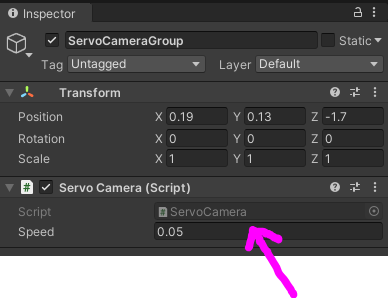

The most interesting thing to look at is the Scene object -> ServoCameraGroup. Notice it has child GameObjects. Those child GameObjects can be queried for their rotation, position, or whatever else is desired, and the values sent to ARC as servo positions. Also, the camera image can be rendered to any material as a 2D texture.

Look at the ServoCameraGroup to see the script

The script ServoCamera.cs is responsible for the following:

Start

- create an instance of the StreamClient object

- have the StreamClient connect to ARC at an IP address (in this case, the local machine at 127.0.0.1)

- assign child GameObjects to local variables that will be used in Update (this avoids searching for them on every frame)

- connect to the ARC server

Update

- obtain rotation/position/whatever data from the children and add it to the servo position cache (in this example, a sprite position)

- send the cache of servo positions

- display the incoming image on a material as a texture

Let's take a look at the code for ServoCamera.cs and read the comments to see how it works:

```csharp
using EZ_Robot_Unity_DLL;
using UnityEngine;

public class ServoCamera : MonoBehaviour {

  ServoServerClient _streamClient;
  bool _initialized = false;
  Texture2D _texture;
  volatile byte[] _toDisplay = new byte[] { };
  Transform _cube;
  Transform _sprite;

  /// <summary>
  /// We have this script added to a parent that has children.
  /// Because of that, we can access the children's transformation or position from here to set servo positions.
  /// In the update, we'll just grab the children and use their data to send to ARC
  /// </summary>
  void Start() {

    // This is the texture that will hold the camera image from ARC
    // We apply this texture to a cube
    _texture = new Texture2D(640, 480, TextureFormat.RGB24, false);

    // Assign a local variable to the children so we don't have to search for them on each frame
    _cube = gameObject.transform.Find("Cube");
    _sprite = gameObject.transform.Find("MoveableSprite");

    // Create a client that will connect to ARC at the specified IP address
    // Once connected, any available video data from the ARC camera will raise the OnImageDataReady event
    _streamClient = new ServoServerClient();
    _streamClient.OnImageDataReady += _streamClient_OnImageDataReady;
    _streamClient.Start("127.0.0.1", 8282);
  }

  /// <summary>
  /// This event is raised for every camera image that is received from the connected ARC server.
  /// We assign the image data to a volatile array that Update uses to refresh the texture with the latest image
  /// </summary>
  private void _streamClient_OnImageDataReady(byte[] imageData) {

    if (!_initialized)
      return;

    _toDisplay = imageData;
  }

  void OnDisable() {

    _streamClient.Stop();
  }

  /// <summary>
  /// Unity runs this on every render frame.
  /// We map the sprite's X and Y position values to D0 and D1 servo positions in ARC, respectively,
  /// and render the latest camera image onto the cube
  /// </summary>
  void Update() {

    _initialized = true;

    if (Input.GetKey(KeyCode.Escape))
      Application.Quit();

    // Add the position of the servos to the cache based on the location of the sprite
    // We set the positions to cache in this loop rather than trying to send a position each time
    // That way we can send a bulk change, which is much faster on bandwidth
    // So, add your servo positions to the cache and then send them all after
    _streamClient.SetCachedServoPosition(ServoServerClient.ServoPortEnum.D0, _streamClient.MapToByte(_sprite.transform.position.x));
    _streamClient.SetCachedServoPosition(ServoServerClient.ServoPortEnum.D1, _streamClient.MapToByte(_sprite.transform.position.y));

    // Send all the servo positions that have been cached
    _streamClient.SendCachedServoPositions();

    // Display the latest camera image by rendering it to the texture and applying it to the cube's material
    if (_toDisplay.Length > 0) {

      _texture.LoadImage(_toDisplay);

      var material = _cube.GetComponent<Renderer>().material;
      material.mainTexture = _texture;
      material.mainTextureScale = new Vector2(1, -1);
    }
  }
}
```
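The comments in Update describe caching servo positions and then sending them in bulk. The sketch below shows one way such a "cache, then flush" pattern could look in isolation. `ServoPositionCache`, its `Set` and `Flush` methods, and the `send` callback are hypothetical names for this illustration; the actual internals and wire format of ServoServerClient are not described here and may differ.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of a "cache, then flush" pattern.
// This is not the ServoServerClient implementation.
public class ServoPositionCache
{
    private readonly Dictionary<string, byte> _pending = new Dictionary<string, byte>();
    private readonly Action<IReadOnlyDictionary<string, byte>> _send;

    // send is called once per flush with every pending position (assumed transport hook).
    public ServoPositionCache(Action<IReadOnlyDictionary<string, byte>> send)
    {
        _send = send ?? throw new ArgumentNullException(nameof(send));
    }

    // Stores the latest position for a port; repeated calls overwrite earlier ones.
    public void Set(string port, byte position)
    {
        _pending[port] = position;
    }

    // Sends all pending positions in a single call, then clears the cache.
    // Returns the number of positions that were sent.
    public int Flush()
    {
        if (_pending.Count == 0)
            return 0;

        var batch = new Dictionary<string, byte>(_pending);
        _pending.Clear();
        _send(batch);
        return batch.Count;
    }
}
```

With this shape, setting D0 twice and D1 once before a flush results in a single send containing two positions, which is the bandwidth saving the comments in Update refer to.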

@DJSures I just dug out the app we once made for JD, it already talks to ARC. So with the EZ-Pi Server on the Raspberry Pi, I should be able to drive my robot with Unity and ARC once you got the plugin for the breakout board done...This would be awesome!! My arm setup has one additional joint, but it should be fairly easy to run him like a JD for testing!!

I will make an input field for the IP address, so anyone can easily connect to their ARC's HTTP Server...

@Mickey, this plugin will do what you're looking for. There's example code as well to demonstrate how to get a video image from the camera to Unity + how to set servo positions from Unity. There are Map() functions in the ServoServerStream as well, which will help map from Unity floats of -1 to +1 into servo degrees.

The thing is, you will need a camera connected for it to work. The Unity ServoServerClient assumes you have a camera connected and streaming for the servo positions to send.

This plugin is WAY faster and better than using the http server - because it'll be much friendlier on resources as well... Doubly so if you're using it for a VR headset or something requiring fast efficient processing.

@DJSures You are a wizard!!! This is great!!! I will have to go to work now, but will look into it this evening! I already noticed this is not a sample scene, so I will have to figure out how to set it all up... Would you mind providing a sample scene for Unity? I know they are quite heavy, so a Dropbox link or something would be OK!

If there is no sample scene, I guess I will figure it out myself, great progress!!!

Ok now I know I will be revisiting my Unity this week. I prolly will have 1001 questions as I work my way through this plug. (I know hard to believe..LOL)

I’m interested to know will this stream servo positions live from unity to ARC with this plug in?

Just connected or actually streaming from the camera for the servos to work?

See the video and example Unity application in the description above

...video is worth a thousand words. Thank you. I will play around!

Great video btw!

Would I be able to do this, but connected to ARC by streaming this data out using this plug in, then data to EZB..if yes would this be close to real time?