Real-time TinyYolo object detection for ARC Camera Device: tracks 20 classes, populates camera variables, triggers tracking scripts, 30+ FPS in HD.

How to add the Tiny Yolo2 robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Camera category tab.

- Press the Tiny Yolo2 icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Tiny Yolo2 robot skill.

How to use the Tiny Yolo2 robot skill

Object detection is a fundamental computer vision task: recognizing the objects in the robot camera's image and where they are located in it. This robot skill attaches to the Camera Device robot skill to obtain the video feed for detection.

Directions

- Add a Camera Device robot skill to the project.

- Add this robot skill to the project. Check the robot skill's log view to ensure the robot skill has loaded the model correctly.

- START the Camera Device robot skill so it displays a video stream.

- By default, the TinyYolo skill will not detect objects actively. Check the "Active" checkbox to begin processing the camera video data stream.

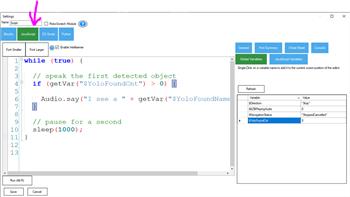

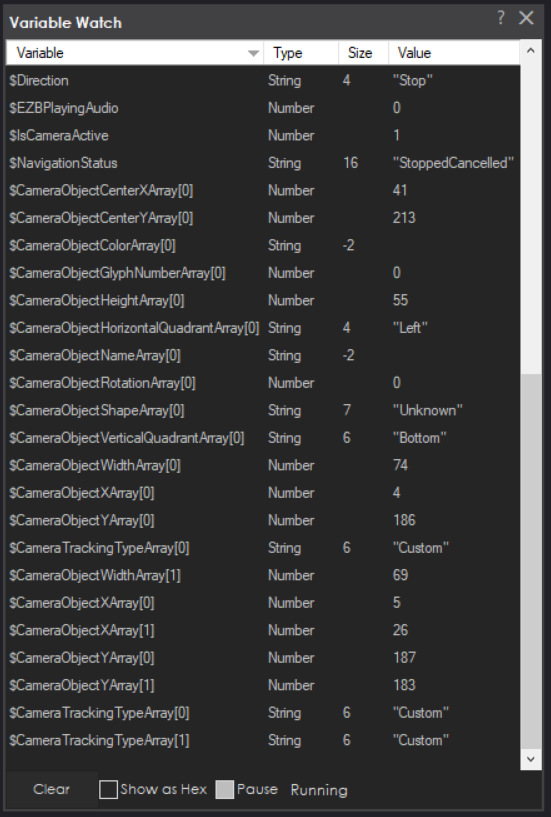

Detected objects use the Camera Device robot skill's tracking features. The On Tracking Start script will execute when objects are detected, and the $CameraObject_____ variables will be populated. Check the Camera Device robot skill page for a list of camera variables.
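A script that reacts to detections usually needs to pair each detected name with its confidence and ignore weak matches. The following is a minimal Python sketch of that step, under stated assumptions: the function name `filter_detections`, the 0.5 threshold, and the sample lists are all hypothetical. It does not show how the values are read from the $CameraObject_____ variables; the Camera Device robot skill page lists the actual variable names.

```python
from typing import List, Tuple


def filter_detections(
    names: List[str],
    confidences: List[float],
    min_confidence: float = 0.5,  # hypothetical threshold, tune for your scene
) -> List[Tuple[str, float]]:
    """Pair names with confidences, drop weak matches, sort strongest first."""
    if len(names) != len(confidences):
        raise ValueError("names and confidences must have the same length")
    kept = [(n, c) for n, c in zip(names, confidences) if c >= min_confidence]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Hypothetical sample values standing in for the arrays that the
# camera robot skill populates when objects are detected.
sample_names = ["person", "chair", "dog"]
sample_confidences = [0.91, 0.32, 0.67]

print(filter_detections(sample_names, sample_confidences))
# prints [('person', 0.91), ('dog', 0.67)]
```

Sorting by confidence means the first entry is the most likely object, which is a convenient choice when the script only acts on a single detection.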

Camera Device Integration

This robot skill integrates with the camera device by using the tracking features. If the servo tracking is enabled, this robot skill will move the servos. This is an extension of the camera robot skill. The On Tracking Start script will execute, and camera device variables will be populated when tracking objects.

Performance

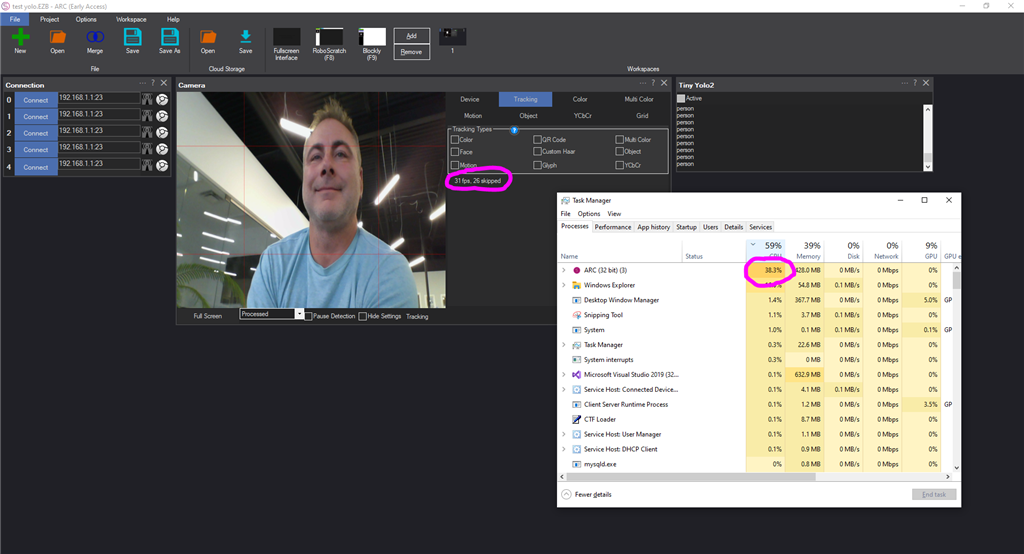

At HD webcam resolution, Tiny Yolo processes 30+ FPS at 38% CPU usage, sometimes more, depending on the processor of your PC.

Variables

The detected objects are stored in global variables in the array provided by the camera robot skill. The number of detected objects determines the size of the array. The detected object's location, confidence, and name are all stored in variables. Check the Camera Device robot skill page for a list of camera variables.

Trained Objects

The Tiny Yolo robot skill includes an ONNX model with 20 trained objects. They are: "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor".

The ImageNetData is trained with an image resolution of 416x416 because it uses the TINY model. Regular-sized models are trained at 608x608.
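To make the 20 classes and the 416x416 resolution concrete, here is a small Python sketch of how a class index maps to a label and how large the raw model output would be. It assumes the bundled ONNX model follows the commonly published Tiny YOLOv2 layout (a stride of 32 and 5 anchor boxes per grid cell); the source does not confirm this, and the names `output_shape` and `label_for` are hypothetical.

```python
# The 20 trained object names, in the order listed above.
LABELS = [
    "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow",
    "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

INPUT_SIZE = 416       # training resolution stated above
STRIDE = 32            # assumption: standard Tiny YOLOv2 downsampling factor
ANCHORS_PER_CELL = 5   # assumption: standard Tiny YOLOv2 anchor count
BOX_FIELDS = 5         # x, y, width, height, objectness


def output_shape(input_size: int = INPUT_SIZE) -> tuple:
    """Return (channels, grid_height, grid_width) of the raw output."""
    grid = input_size // STRIDE
    channels = ANCHORS_PER_CELL * (BOX_FIELDS + len(LABELS))
    return (channels, grid, grid)


def label_for(class_index: int) -> str:
    """Translate a class index from the model output into its name."""
    return LABELS[class_index]


print(output_shape())   # prints (125, 13, 13)
print(label_for(14))    # prints person
```

Under these assumptions, each of the 13x13 grid cells proposes 5 boxes, and each box carries 5 geometry and objectness values plus 20 class scores.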

ToDo

- control commands for starting, stopping

- ability to specify custom training model files

Related Questions

True Autonomous Find, Pick Up And Movement

Using Variables For TY2 And Camera

It'll be easier if we modify the robot skill to allow loading of custom files. that way you can test it and have full control of your environment.

Any chance of support for YOLO NAS? It is incredible!

Looks like the trained model for YOLO NAS is for non-commercial use only. I wonder if that means Synthiam would have to license it or provide users a way to import the trained model into Synthiam after the plugin was installed.

It wouldn't matter about the license because robot skills are not part of the ARC software - there is no commercial use of robot skills. They redistribute technology, not a product - which is why we have "plugin" robot skills, because they're add-ons and not part of the ARC software. If they were included in ARC's installation and/or required a subscription, then it would fall under commercial use.

*edit: I looked around a bit for info on yolo-nas, and there's not a lot about it yet. It looks to be specific to OpenCV, and we looked into an OpenCV wrapper for ARC before, but I don't think it'll be very useful to anyone without advanced programming skills. I'd have to put some thought into how to make that available to people who don't want to be writing a bunch of single-use code. Hmmm.

Was this one made available? "It'll be easier if we modify the robot skill to allow loading of custom files. that way you can test it and have full control of your environment." Then I will test it

You can load custom files with this one. Give it a shot. Is the yolo-nas just a different data file?

I jumped too fast at posting. Watched a video, and it is VERY new. You can play around with training models in Google Colab and develop in RoboFlow. I think it's an early release, but it's really good at object detection. A portion of Yolo is used in Tesla cars.

Tiny Yolo doesn't like me today. Blank project with Camera, IoTiny, and Tiny Yolo. See the error below. Darknet Yolo is not playing very nicely either. @athena any ideas?

AI is growing exponentially, but I feel vision is being left in the dark. We were supposed to have self-driving cars, maps that could geolocate from a single photo, self-checkouts that could scan unbarcoded items like fruits and vegetables, and thousands of other vision recognition use cases. There seems to have been a lot of focus on invasive privacy detection, where we can recognize a person wearing a mask or just by their gait when walking, but for other applications I don't see the tools emerging. Microsoft Cognitive vision still can't recognize lots of basic objects (has it improved at all in 5 years?). Yolo may be fast and look impressive, but it has a very limited library of objects it can recognize. Based on other advancements in AI, vision recognition should be at the stage where we can identify an image from just a small section of that image and then generate a complete image from that small amount of data. Sadly, we still can't even identify a lot of basic items.

Loading models: C:\ProgramData\ARC\Plugins\19a75b67-c593-406c-9789-464aa3ba998b\models\TinyYolo2_model.onnx... Done.
System.InvalidOperationException: Splitter/consolidator worker encountered exception while consuming source data ---> Microsoft.ML.OnnxRuntime.OnnxRuntimeException: [ErrorCode:Fail] bad allocation
at Microsoft.ML.OnnxRuntime.NativeApiStatus.VerifySuccess(IntPtr nativeStatus)
at Microsoft.ML.OnnxRuntime.InferenceSession.RunImpl(RunOptions options, IntPtr[] inputNames, IntPtr[] inputValues, IntPtr[] outputNames, DisposableList1 cleanupList)
at Microsoft.ML.OnnxRuntime.InferenceSession.Run(IReadOnlyCollection1 inputs, IReadOnlyCollection1 outputNames, RunOptions options)
at Microsoft.ML.OnnxRuntime.InferenceSession.Run(IReadOnlyCollection1 inputs, IReadOnlyCollection1 outputNames)
at Microsoft.ML.OnnxRuntime.InferenceSession.Run(IReadOnlyCollection1 inputs)
at Microsoft.ML.Transforms.Onnx.OnnxTransformer.Mapper.UpdateCacheIfNeeded(Int64 position, INamedOnnxValueGetter[] srcNamedOnnxValueGetters, String[] activeOutputColNames, OnnxRuntimeOutputCacher outputCache)
at Microsoft.ML.Transforms.Onnx.OnnxTransformer.Mapper.<>c__DisplayClass12_01.b__0(VBuffer1& dst)
at Microsoft.ML.Data.DataViewUtils.Splitter.InPipe.Impl1.Fill()
at Microsoft.ML.Data.DataViewUtils.Splitter.<>c__DisplayClass7_1.b__2()
--- End of inner exception stack trace ---
at Microsoft.ML.Data.DataViewUtils.Splitter.Batch.SetAll(OutPipe[] pipes)
at Microsoft.ML.Data.DataViewUtils.Splitter.Cursor.MoveNextCore()
at Microsoft.ML.Data.RootCursorBase.MoveNext()
at Microsoft.ML.Data.ColumnCursorExtensions.d__41.MoveNext()
at System.Linq.Enumerable.WhereSelectEnumerableIterator2.MoveNext()
at System.Linq.Enumerable.ElementAt[TSource](IEnumerable1 source, Int32 index)
at Tiny_Yolo2.yolo.yoloService.processThread() in C:\My Documents\SVN\Developer - Controls\In Production\Tiny Yolo2\MY_PROJECT_NAME\yolo\yoloService.cs:line 125