Real-time TinyYolo object detection for ARC Camera Device: tracks 20 classes, populates camera variables, triggers tracking scripts, 30+ FPS in HD.

How to add the Tiny Yolo2 robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Camera category tab.

- Press the Tiny Yolo2 icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Tiny Yolo2 robot skill.

How to use the Tiny Yolo2 robot skill

Object detection is fundamental to computer vision: it recognizes the objects in the robot camera's view and determines where they are in the image. This robot skill attaches to the Camera Device robot skill to obtain the video feed for detection.
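A detection pairs a recognized label with a location in the frame. The minimal sketch below models one result as a Python dataclass so the idea of "what" plus "where" is concrete. The `Detection` type and its fields are hypothetical illustrations, not the skill's internal types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical record for one detected object (not the skill's real type)."""
    label: str         # class name, e.g. "person"
    confidence: float  # score in the range 0.0 to 1.0
    x: int             # left edge of the bounding box, in pixels
    y: int             # top edge of the bounding box, in pixels
    width: int         # box width, in pixels
    height: int        # box height, in pixels

    def center(self) -> tuple[int, int]:
        """Return the box center, the point a tracker would aim at."""
        return (self.x + self.width // 2, self.y + self.height // 2)


if __name__ == "__main__":
    person = Detection("person", 0.87, x=400, y=120, width=200, height=480)
    print(person.label, person.center())  # person (500, 360)
```

Using the box center as the tracking point is one common choice; a tracker could just as well use the top of the box, for example to aim at a person's face.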


Directions

- Add a Camera Device robot skill to the project.

- Add this robot skill to the project. Check the robot skill's log view to ensure it has loaded the model correctly.

- Start the Camera Device robot skill so that it displays a video stream.

- By default, the Tiny Yolo2 robot skill does not actively detect objects. Check the "Active" checkbox to begin processing the camera's video stream.

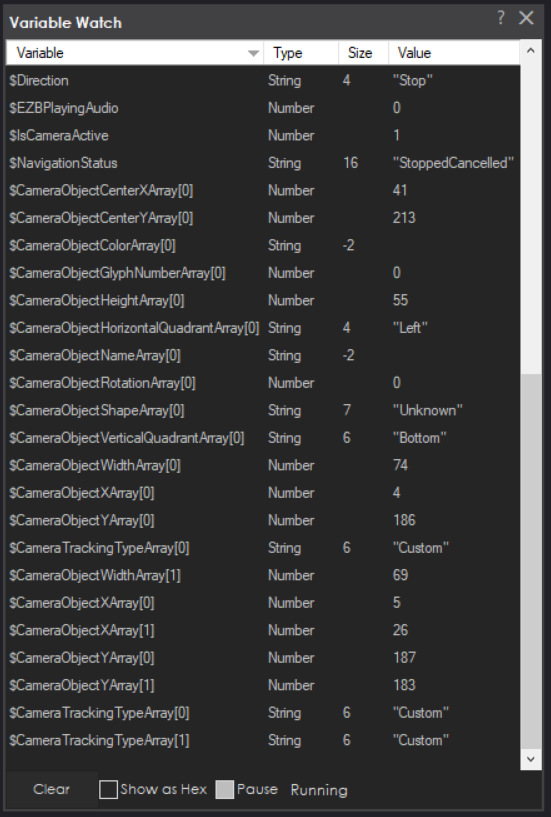

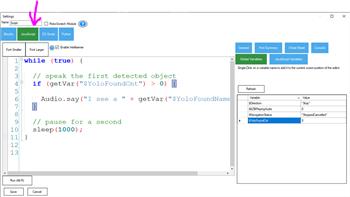

Detected objects use the Camera Device robot skill features. When objects are detected, the On Tracking Start script executes and the $CameraObject_____ variables are populated. Check the Camera Device robot skill page for a list of camera variables.
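The flow described above can be imitated in plain Python as a rough mental model. This minimal sketch assumes the On Tracking Start script fires once when tracking begins (the transition from no objects to at least one) rather than on every frame, and it stores one array entry per detected object, because the number of detected objects determines the array size. `TrackingState`, `update`, and the dictionary keys are hypothetical; they are not ARC APIs or the real $CameraObject_____ variable names.

```python
from typing import Callable

# Hypothetical raw detection: (label, confidence, center_x, center_y).
RawDetection = tuple[str, float, int, int]


class TrackingState:
    """Hypothetical imitation of how detections feed camera variables."""

    def __init__(self, on_tracking_start: Callable[[], None]) -> None:
        self.on_tracking_start = on_tracking_start
        self.variables: dict = {}
        self.tracking = False

    def update(self, detections: list[RawDetection]) -> None:
        # One array entry per detected object, so the arrays grow and
        # shrink with the number of detections in this frame.
        self.variables = {
            "names": [d[0] for d in detections],
            "confidences": [d[1] for d in detections],
            "center_x": [d[2] for d in detections],
            "center_y": [d[3] for d in detections],
            "count": len(detections),
        }
        found = len(detections) > 0
        # Assumption: the start script runs once, when tracking begins,
        # not on every frame that contains an object.
        if found and not self.tracking:
            self.on_tracking_start()
        self.tracking = found


if __name__ == "__main__":
    starts = []
    state = TrackingState(lambda: starts.append("start"))
    state.update([("person", 0.91, 640, 360)])
    state.update([("person", 0.90, 650, 355), ("dog", 0.75, 200, 500)])
    state.update([])
    state.update([("cat", 0.66, 300, 300)])
    print(state.variables["names"], len(starts))  # ['cat'] 2
```

Triggering on the transition, rather than on every frame, keeps a script from being re-run 30 times a second while the same object stays in view.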

Camera Device Integration

This robot skill extends the Camera Device robot skill by using its tracking features. If servo tracking is enabled, this robot skill will move the servos. When objects are tracked, the On Tracking Start script executes and the camera device variables are populated.

Performance

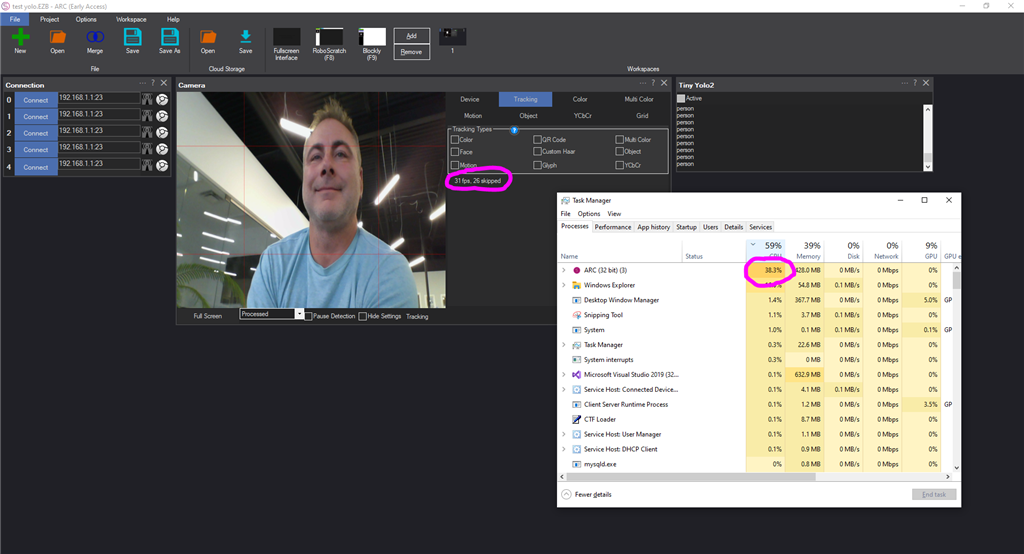

At HD webcam resolution, Tiny Yolo processes 30+ FPS using 38% CPU, sometimes more, depending on your PC's processor.

Variables

The detected objects are stored in global variable arrays provided by the Camera Device robot skill. The number of detected objects determines the size of each array. Each detected object's name, location, and confidence are stored in these variables.

Trained Objects

The Tiny Yolo2 robot skill includes an ONNX model trained on 20 object classes: "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor".

The model is trained at an image resolution of 416x416 because it is the Tiny variant. Regular-sized models are trained at 608x608.
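The class list and the 416x416 input size are what a caller needs to turn raw model output into usable results. The minimal sketch below keeps the 20 class names in list order and rescales a box from 416x416 model space back to an HD camera frame. It assumes the model's class indices follow the order listed here, that frames are stretched to 416x416 without letterboxing, and that the model uses the standard Tiny YOLOv2 layout (a 32-pixel stride and 5 anchor boxes per grid cell). `output_shape` and `scale_box` are hypothetical helpers, not the skill's own code.

```python
# The 20 class names, assumed to be in the same order as the model's outputs.
LABELS = [
    "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car",
    "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike",
    "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

MODEL_SIZE = 416  # Tiny model input width and height, in pixels
STRIDE = 32       # assumed downsampling factor of Tiny YOLOv2
ANCHORS = 5       # assumed number of anchor boxes per grid cell


def output_shape() -> tuple[int, int, int]:
    """Channels and grid size implied by the assumptions above."""
    grid = MODEL_SIZE // STRIDE                # 416 / 32 = 13 cells per side
    channels = ANCHORS * (5 + len(LABELS))     # x, y, w, h, objectness + 20 class scores
    return channels, grid, grid


def scale_box(box: tuple[float, float, float, float],
              frame_width: int, frame_height: int) -> tuple[int, int, int, int]:
    """Map an (x, y, w, h) box from 416x416 model space to the camera frame.

    Assumes the frame was stretched to 416x416 (no letterboxing), so each
    axis is scaled independently.
    """
    sx = frame_width / MODEL_SIZE
    sy = frame_height / MODEL_SIZE
    x, y, w, h = box
    return (round(x * sx), round(y * sy), round(w * sx), round(h * sy))


if __name__ == "__main__":
    print(output_shape())                              # (125, 13, 13)
    print(LABELS.index("person"))                      # 14
    print(scale_box((104, 52, 208, 104), 1280, 720))   # (320, 90, 640, 180)
```

Because an HD frame is wider than it is tall, stretching it to a square 416x416 input distorts the image; the independent x and y scale factors undo that distortion when mapping boxes back.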

ToDo

- Control commands for starting and stopping

- Ability to specify custom trained model files

It sees a person, but other objects like a cup, glass, or glasses aren't detected.

Will try again.

Interesting. I handle that error in the latest release, but I don't know what is causing it yet.

Wait wha...???? That is so fast! Will it eventually have bounding boxes to show which object it's capturing?

Ya, there's a list in the ToDo section.

Haha, I see it now... doh.

I do prefer ptp's solution, though. His is more elegant so far, I think.

When I open it now, it says this in the Tiny Yolo2 skill:

Loading models: C:\ProgramData\ARC\Plugins\19a75b67-c593-406c-9789-464aa3ba998b\models\TinyYolo2_model.onnx

Set Configuration: Error initializing model: Microsoft.ML.OnnxRuntime.OnnxRuntimeException: [ErrorCode:RuntimeException] Exception during initialization: bad allocation at Microsoft.ML.OnnxRuntime.NativeApiStatus.VerifySuccess(IntPtr nativeStatus) at Microsoft.ML.OnnxRuntime.InferenceSession.Init(String modelPath, SessionOptions options) at Microsoft.ML.OnnxRuntime.InferenceSession..ctor(String modelPath) at Microsoft.ML.Transforms.Onnx.OnnxModel..ctor(String modelFile, Nullable\`1 gpuDeviceId, Boolean fallbackToCpu, Boolean ownModelFile, IDictionary\`2 shapeDictionary) at Microsoft.ML.Transforms.Onnx.OnnxTransformer..ctor(IHostEnvironment env, Options options, Byte[] modelBytes)

Looks like your computer ran out of memory when loading and parsing the model. Try rebooting and using the skill again.