Real-time TinyYolo object detection for ARC Camera Device: tracks 20 classes, populates camera variables, triggers tracking scripts, 30+ FPS in HD.

How to add the Tiny Yolo2 robot skill

- Load the most recent release of ARC (Get ARC).

- Press the Project tab from the top menu bar in ARC.

- Press Add Robot Skill from the button ribbon bar in ARC.

- Choose the Camera category tab.

- Press the Tiny Yolo2 icon to add the robot skill to your project.

Don't have a robot yet?

Follow the Getting Started Guide to build a robot and use the Tiny Yolo2 robot skill.

How to use the Tiny Yolo2 robot skill

Object detection is fundamental to computer vision: it recognizes the objects in the robot camera's image and where they are located in that image. This robot skill attaches to the Camera Device robot skill to obtain the video feed for detection.

Directions

- Add a Camera Device robot skill to the project.

- Add this robot skill to the project. Check the robot skill's log view to ensure the robot skill has loaded the model correctly.

- START the Camera Device robot skill so it displays a video stream.

- By default, the TinyYolo skill will not detect objects actively. Check the "Active" checkbox to begin processing the camera video data stream.

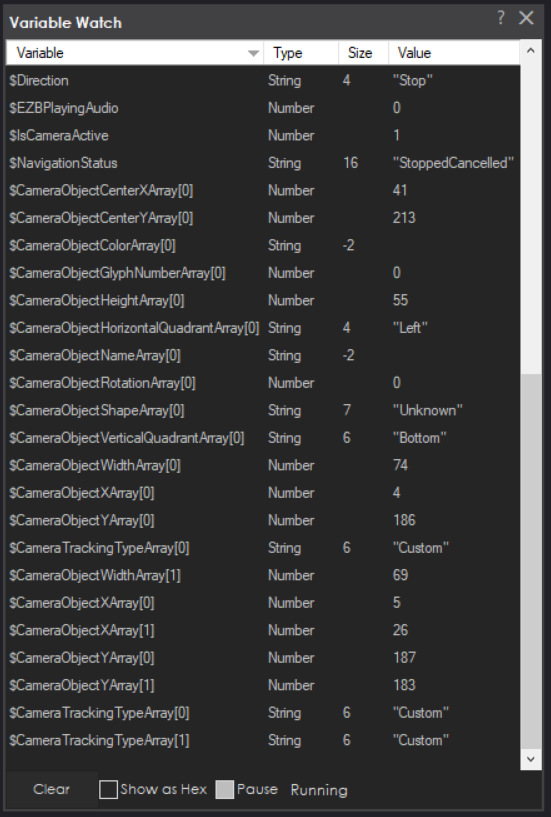

Detected objects use the Camera Device robot skill's tracking features: its On Tracking Start script executes when objects are detected, and the $CameraObject_____ variables are populated. Check the Camera Device robot skill page for a list of camera variables.
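As a rough illustration of how a script might consume these values, the sketch below assumes each detected object's name, confidence, and position arrive as parallel arrays, as the Variables section of this page describes. The array names, the `Detection` record, and the sample numbers are all hypothetical; the real variable names are listed on the Camera Device robot skill page and are not reproduced here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    name: str
    confidence: float  # assumed to range from 0.0 to 1.0
    x: int             # horizontal position in the image, in pixels
    y: int             # vertical position in the image, in pixels


def to_detections(names, confidences, xs, ys) -> List[Detection]:
    """Combine hypothetical parallel arrays into one record per object."""
    return [Detection(n, c, x, y) for n, c, x, y in zip(names, confidences, xs, ys)]


def best_detection(detections: List[Detection],
                   min_confidence: float = 0.5) -> Optional[Detection]:
    """Return the most confident detection at or above the threshold, if any."""
    candidates = [d for d in detections if d.confidence >= min_confidence]
    return max(candidates, key=lambda d: d.confidence) if candidates else None


# Made-up sample values standing in for one frame's populated variables.
names = ["person", "dog", "chair"]
confidences = [0.91, 0.62, 0.30]
xs = [320, 110, 500]
ys = [240, 300, 410]

detections = to_detections(names, confidences, xs, ys)
best = best_detection(detections)
print(len(detections), best.name if best else "nothing")
```

Because the array size follows the number of detected objects, a script along these lines should also handle the empty case, which `best_detection` does by returning `None`.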

Camera Device Integration

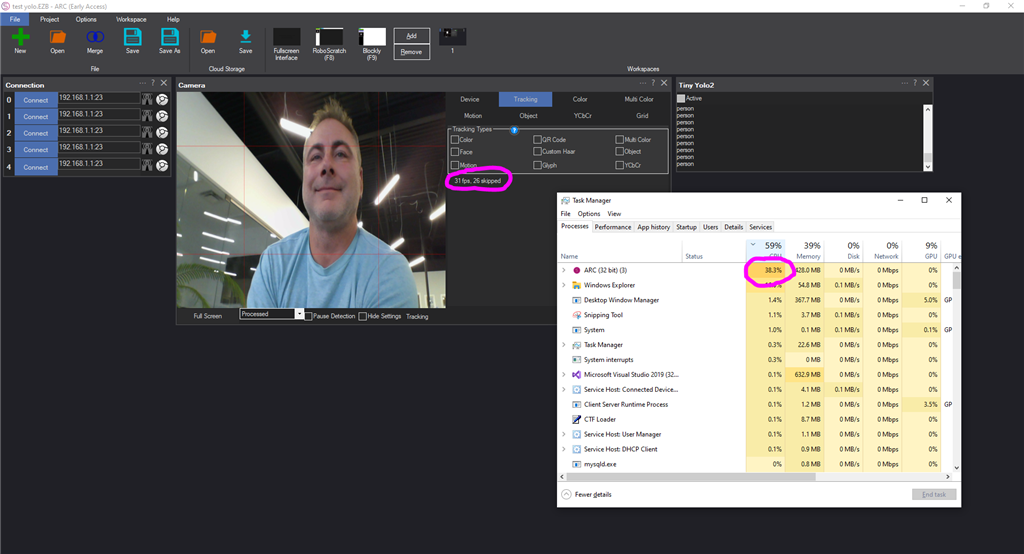

This robot skill integrates with the camera device by using the tracking features. If the servo tracking is enabled, this robot skill will move the servos. This is an extension of the camera robot skill. The On Tracking Start script will execute, and camera device variables will be populated when tracking objects.

Performance

At HD webcam resolution, Tiny Yolo processes 30+ FPS at 38% CPU usage, sometimes more, depending on your PC's processor.

Variables

The detected objects are stored in global variables in the array provided by the camera robot skill. The number of detected objects determines the size of the array. Each detected object's location, confidence, and name are stored in variables. Check the Camera Device robot skill page for a list of camera variables.

Trained Objects

The Tiny Yolo robot skill includes an ONNX model with 20 trained objects. They are: "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor".

The ImageNetData is trained with an image resolution of 416x416 because it uses the TINY model. Regular-sized models are trained at 608x608.
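To make the numbers above concrete, here is a small sketch of the arithmetic behind a model with these 20 labels and a 416x416 input. The stride of 32 and the 5 anchor boxes per grid cell are assumptions taken from the commonly published Tiny YOLOv2 layout; this page does not confirm the exact configuration of the bundled model.

```python
# The 20 class labels, in the order listed on this page.
LABELS = [
    "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow",
    "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

STRIDE = 32   # assumption: total downsampling factor of the network
ANCHORS = 5   # assumption: anchor boxes predicted per grid cell


def grid_size(input_size: int, stride: int = STRIDE) -> int:
    """Number of grid cells along one side of the output feature map."""
    return input_size // stride


def output_channels(num_classes: int, anchors: int = ANCHORS) -> int:
    """Each anchor predicts x, y, w, h, objectness, plus one score per class."""
    return anchors * (5 + num_classes)


print(grid_size(416))                # 13 cells per side for the tiny model
print(grid_size(608))                # 19 cells per side at 608x608
print(output_channels(len(LABELS)))  # 125 values per grid cell
```

Under these assumptions, the smaller input leaves fewer grid cells to evaluate per frame, which is one reason the tiny model trades accuracy for speed.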

ToDo

- control commands for starting, stopping

- ability to specify custom training model files

Related Questions

True Autonomous Find, Pick Up And Movemovement

Using Variables For TY2 And Camera

Thanks DJ. While you have the cover off, are they not up to Yolo V7? I do know I need to use a local model, as I don't want to be sending stuff to the cloud every couple of seconds just to see if a 5-year-old is standing in front of the robot holding up a book.

There are a few models here: https://github.com/AlturosDestinations/Alturos.Yolo

Let me think about how to add configs so those models can be loaded dynamically. They won't work right now because the weights and names are currently compiled into the robot skill.

works well,

Also, thanks for the update

New version works perfectly. Thanks DJ. Yeah, that model was not trained on books, but if someone holds up a cow we will be the first to know.

I took a look at the pre-trained data sets. Yolo9000 is amazing: it has 9000 classes of objects that it recognizes. Not sure how hard it would be to upgrade the Yolo2 to Yolo9000 or how it performs, but such a large set of classes should meet most object recognition needs (even someone looking for a book :-) https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/9k.names

@Athena Al

How can I use audio to hear what the camera is seeing?

To use audio to hear what the camera is seeing, you can utilize the Speech Synthesis feature in ARC to announce the names of the objects that the camera detects. Here's how you can set it up:

Add a Camera Device to your project: If you haven't already, make sure you have a camera device added to your ARC project.

Add the Object Detection Skill: Add an object detection skill, such as the Tiny Yolo robot skill, to your project. Ensure it's configured and active.

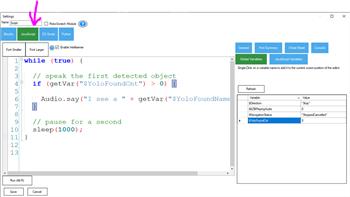

Create a Script: Add a new EZ-Script to your project that will check the variables set by the object detection skill and use the Say or SayEZB command to announce the detected objects.

Use Variables: The object detection skill will store the detected objects in global variables. You can access these variables in your script to determine which objects have been detected.

Write the Script: In the script, you can write a loop or a conditional statement that checks the object detection variables and then uses the Say command to announce the objects. For example:

Remember to adjust the script according to the specific variables and conditions used by the object detection skill you are using. The example provided is a basic template and may need to be modified to work with your setup.
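The announcing logic described in the steps above might look something like the following sketch. It is written in plain Python so it can run on its own; it is not ARC's scripting API. The `Announcer` class and the `say` callback are hypothetical stand-ins: in a real project the callback would be replaced by the Say or SayEZB command, and the list of names would come from the camera variables populated by the detection skill.

```python
class Announcer:
    """Speaks the detected object names, but only when the set changes."""

    def __init__(self, say):
        self._say = say    # hypothetical callback standing in for Say / SayEZB
        self._last = None  # names announced (or seen) on the previous update

    def update(self, names):
        # Sort the unique names so frame-to-frame ordering changes are ignored.
        unique = sorted(set(names))
        if unique == self._last:
            return None    # nothing new to announce
        self._last = unique
        if not unique:
            return None    # nothing detected, stay quiet
        sentence = "I see " + ", ".join(unique)
        self._say(sentence)
        return sentence


# Usage with a list standing in for the speech output.
spoken = []
announcer = Announcer(spoken.append)
announcer.update(["person", "dog", "person"])  # announces both objects once
announcer.update(["dog", "person"])            # same set, so it stays silent
announcer.update(["cow"])                      # the set changed, so it speaks
print(spoken)
```

Only announcing on a change keeps the robot from repeating itself on every processed frame, which matters when detection runs many times per second.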

For more detailed instructions and options for speech synthesis, you can refer to the Speech Synthesis robot skill documentation on the Synthiam website: https://synthiam.com/Support/Skills/Audio/Speech-Synthesis?id=16123

If you need to add the Speech Synthesis skill to your project, you can find it in the ARC Skill Store and add it just like any other skill.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

@Athena Ai

Using TinyYolo and the Camera, is there a way to add a script to hear what the camera is seeing?