8. Vision

Let's choose your robot's vision system! If your robot does not have a camera, skip to the next step.

A camera gives your robot navigation, tracking, and interaction capabilities. Synthiam ARC aims to make robot programming easy, and that includes computer vision tracking. ARC includes a camera device robot skill that connects to WiFi, USB, or video capture devices. Its tracking types cover objects, colors, motion, glyphs, faces, and more. You can add further tracking types and computer vision modules from the skill store.

Choose the Camera Type

A USB camera connects directly to a computer with a USB cable. You can only use this type of camera in an embedded computer configuration, because the USB cable tethers the camera to the PC. You can use any USB camera in ARC. Advantages of USB cameras include high resolution and a higher framerate.

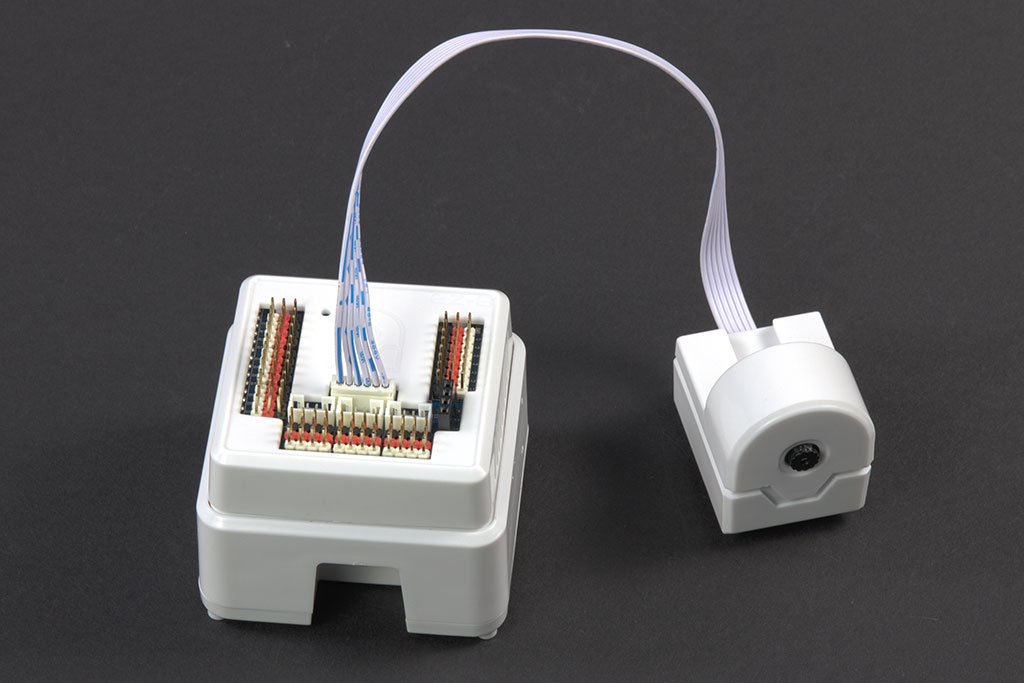

A wireless camera connects to a PC/SBC over a WiFi connection. Generally, this approach is only used in remote computer configurations. Few I/O controllers support wireless camera transmission, because latency limits the usable resolution and radio interference can make the link unreliable. For a wireless camera application, the most popular I/O controllers are the EZ-Robot EZ-B v4 and IoTiny.
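To see why a wireless link tends to push resolution down, it can help to estimate how much data a video stream carries. The following Python sketch is illustrative only: it assumes raw, uncompressed 24-bit frames, and the function name `raw_video_mbps` is made up for this example. Real cameras compress their video, so actual figures are typically much lower, but bandwidth still scales with resolution and framerate in the same way.

```python
def raw_video_mbps(width, height, fps, bytes_per_pixel=3):
    """Return the bandwidth of uncompressed video in megabits per second.

    Assumption: every frame is sent raw at `bytes_per_pixel` bytes per pixel.
    """
    bits_per_second = width * height * bytes_per_pixel * 8 * fps
    return bits_per_second / 1_000_000


# Compare a few common resolutions at 30 frames per second.
for width, height in [(320, 240), (640, 480), (1280, 720)]:
    mbps = raw_video_mbps(width, height, fps=30)
    print(f"{width}x{height} @ 30 fps: {mbps:.1f} Mbit/s")
```

Doubling both the width and the height quadruples the data per frame, which is one reason a tethered USB camera can comfortably run at higher resolutions than a camera sharing a WiFi link.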

Add Camera Device Robot Skill

Whichever camera type you choose, the robot skill needed to connect to the camera is the Camera Device Robot Skill. Add this robot skill to connect to the camera and begin viewing the video feed. The manual for this robot skill describes its many options for tracking objects.

Additional Computer Vision Robot Skills

Now that you have the camera working on your robot, you may wish to add more computer vision robot skills. Computer vision is a general term for extracting and processing information from images. The computer vision robot skills use artificial intelligence and machine learning to track objects, detect colors, and recognize faces. There are even robot skills that detect facial expressions to determine your mood.
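As a concrete picture of what "extracting information from an image" means, here is a minimal Python sketch of color tracking. It is not how ARC implements tracking; the function name `color_centroid`, the tolerance value, and the tiny hand-made frame are all assumptions chosen for illustration. The idea is simply to find every pixel close to a target color and report the center of those pixels.

```python
def color_centroid(frame, target, tolerance=40):
    """Return the (x, y) center of pixels close to `target`, or None.

    frame  -- list of rows, each row a list of (r, g, b) tuples
    target -- the (r, g, b) color to look for
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            # A pixel matches when every channel is within the tolerance.
            if all(abs(c - t) <= tolerance for c, t in zip(pixel, target)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # nothing in the frame matched the target color
    return sum(xs) / len(xs), sum(ys) / len(ys)


BLACK, RED = (0, 0, 0), (250, 10, 10)
frame = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, BLACK, RED, RED],
    [BLACK, BLACK, RED, RED],
]
print(color_centroid(frame, target=(255, 0, 0)))  # (2.5, 1.5)
```

A tracking skill would typically repeat this kind of step on every video frame and use the resulting position to drive servos or movement.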

Overlay PNG/JPG images in real-time onto detected objects, faces, colors or glyphs using ARC Camera tracking; attach, preview, detach.

Interactive camera control for ARC: click-to-center and edge hotspots to pan/tilt servos, adjustable fine-tune and multi-camera support.

Use PC or robot cameras for vision tracking: color, QR, glyph, face, object, motion; record video/data, control servos and movement, run scripts.

Displays trained object names as overlays on the Camera Device video stream using Camera Device's object-tracking (shows $CameraObjectName).

Save camera snapshots to 'My Robot Pictures' (Pictures folder), manual or timed (0-100s), and trigger programmatically via controlCommand.

Enable an EZB video camera as ARC's camera source for video recording, vision recognition and other robot vision features.

USB camera video source for recognition, recording and other vision tasks in ARC.

Overlay image packs onto camera feed and map images to control-variable ranges with auto/manual assignment, position and sizing options.

Uses Microsoft Cognitive Emotion cloud to analyze camera images, returning emotion descriptions and confidence for speech/output (requires internet).

Microsoft Cognitive Vision integration for ARC: real-time object, face, emotion detection, OCR, confidence data and script-triggered robot actions.

Tiny YOLOv3 CPU-only real-time object detection using a camera; offline detection, script-triggered on-changes or on-demand results with class/scores.

Detects and tracks faces from any ARC video source, providing real-time face localization for robot applications.

Control robot servos via Xbox 360 Kinect body joints with per-joint servo mapping, automatic degree calculation, smoothing and upper/lower control.

Broadcast live audio and video from ARC camera to the web via HLS; cross-browser streaming. Requires router configuration for external access.

Play live web video and audio streams inside ARC from Chrome/Firefox, streaming directly to ARC camera control; network configuration may be needed.

Omron HVC-P ARC plugin: real-time body, hand, face, gaze, gender, age, expression and eye estimation; facial recognition via Omron software.

Omron HVC-P/HVC-P2 ARC plugin: Python-based camera integration for body, hand and face detection, gaze, age/gender, expressions and face recognition.

Windows ARC plugin for Omron HVC-P/HVC-P2: Python-based face/body/hand detection, gaze, age/gender, expressions and recognition; dual-camera support.

Generate and modify images with DALL·E 2 inside Synthiam ARC robots: create images from text or camera input via API and control commands.

Picture-in-picture camera overlay: superimpose one camera onto another; configure source/destination, position, size, border, processed frames, and swap.

Use camera-detected printed cue cards (direction, pause, start) to record and run stored movement sequences for ARC robots.

Create customizable QR codes for ARC, display/scan via Camera Control, trigger scripts on recognition and save decoded text to variables.

Capture a robot skill's display and stream selected area as video to a configured camera device with FPS and live preview crop.

Control a custom ARC robot to manipulate and solve a Rubik's Cube using calibrated arms and grippers; integrates with Thingiverse build.

Capture any screen area and stream video to a configured camera device; requires a Custom device and 100% display scaling.

Cloud-based detection of people and faces in robot camera video; returns locations, gender, age, pose, emotion, plus 68-point facial landmarks.

Stream video from any URI/protocol (RTMP, RTSP, HTTP, UDP, etc.) to a selected ARC camera device for real-time network feed playback.

Overlay translucent PNG targets onto a camera stream with loadable templates, attach/detach control, preview and status for visual alignment.

Overlay a variable on a processed camera image at X/Y coordinates; name the variable and attach to a specific or any available camera.

Real-time TinyYolo object detection for ARC Camera Device: tracks 20 classes, populates camera variables, triggers tracking scripts, 30+ FPS in HD.

Train camera vision objects via controlcommand(), attach camera, start learning, monitor progress, and return object name for scripts.

Record any video source to a local file for playback, archiving, and processing.

Connect Vuzix 920VR AR headset to ARC to map head movement to robot servos or drive, control camera pan/tilt; deprecated 920VR support only.
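Several of the skills listed above turn a position in the camera image into a pan/tilt servo move, for example click-to-center camera control. The Python sketch below shows one simple way such a mapping could work. It is an assumption-laden illustration, not ARC's implementation: the function name `click_to_center`, the field-of-view defaults, the linear pixel-to-degree mapping, and the 1 to 180 servo range are all hypothetical, and the sign of each axis depends on how the servos are mounted.

```python
def click_to_center(click_x, click_y, width, height, pan, tilt,
                    fov_h=60.0, fov_v=40.0, limits=(1, 180)):
    """Return new (pan, tilt) positions that aim the camera at a clicked pixel.

    Assumes the image spans `fov_h` by `fov_v` degrees and that pixels map
    linearly to degrees, which is only a rough approximation.
    """
    # Offset of the click from the image center, scaled to the range -1..1.
    dx = (click_x - width / 2) / (width / 2)
    dy = (click_y - height / 2) / (height / 2)

    # A click at the edge of the frame is half the field of view off-center.
    new_pan = pan + dx * fov_h / 2
    new_tilt = tilt + dy * fov_v / 2

    def clamp(value):
        # Keep the result inside the assumed servo range.
        return max(limits[0], min(limits[1], round(value)))

    return clamp(new_pan), clamp(new_tilt)


# A click on the right edge of a 640x480 frame, with both servos at 90.
print(click_to_center(640, 240, 640, 480, pan=90, tilt=90))  # (120, 90)
```

A click in the exact center leaves both servos where they are, and the clamp keeps a click near the edge from driving a servo past its limit.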