9. Audio

Let's plan how your robot will speak and hear!

Audio makes a robot far more interactive. ARC includes robot skills that work with WiFi, Bluetooth, and USB audio input and output devices, along with speech recognition, text-to-speech synthesis, and skills for playing music and sound effects!

Choose an Audio Input Device

Wired Microphone

Connects directly to the computer, either with a USB cable or through an existing sound card's input port. This option applies only to an embedded robot configuration.

Wireless Microphone

Connects wirelessly to a computer with an RF (radio frequency) or Bluetooth connection.

Choose an Audio Output Device Type

Wired Speaker

Connects directly to the computer, either with an audio cable to an existing sound card or with a USB connection. This option applies only to an embedded robot configuration.

Wireless Speaker

Connects wirelessly to a computer over Bluetooth or WiFi.

EZB Speaker

Use an EZB that supports audio output (e.g., EZ-Robot IoTiny or EZ-B v4). With this option, the EZB connected on index #0 is preferred. This option is also required if you wish to use the Audio Effects robot skill.

Add Generic Speech Recognition

Once you have selected the type of microphone to use, the next step is to experiment with speech recognition. Speech recognition robot skills are available for services from Google, Microsoft, and IBM Watson. However, the most popular skill for getting started is the generic speech recognition robot skill, which uses the speech recognition system built into Microsoft Windows. Because it is built in, it is easy to configure, and you can have it running with little effort.

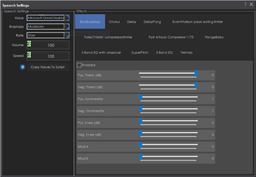

This skill uses the Microsoft Windows Speech Recognition Engine to listen for known phrases through your computer's default audio input device. You configure the phrases manually in the Settings menu and assign each phrase a custom action (a script).
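To make the phrase-to-action idea concrete, here is a minimal Python sketch of a phrase table with a confidence cutoff. It is not ARC's implementation: the phrase table, `handle_recognition`, and the 0.6 threshold are hypothetical, and plain functions stand in for the scripts you would assign in the Settings menu.

```python
from typing import Callable, Dict, Optional

# Hypothetical phrase table: each known phrase maps to an action.
# In ARC this mapping lives in the skill's Settings menu; here the
# "scripts" are plain Python functions that return a description.
PHRASE_ACTIONS: Dict[str, Callable[[], str]] = {
    "robot move forward": lambda: "forward",
    "robot stop": lambda: "stop",
    "robot wave": lambda: "wave",
}

# Assumed minimum recognition confidence (0.0 to 1.0) before acting.
MIN_CONFIDENCE = 0.6


def handle_recognition(phrase: str, confidence: float) -> Optional[str]:
    """Run the action for a recognized phrase, or return None to ignore it."""
    if confidence < MIN_CONFIDENCE:
        return None  # too uncertain: ignore rather than act on a guess
    action = PHRASE_ACTIONS.get(phrase.strip().lower())
    return action() if action else None


print(handle_recognition("Robot Stop", 0.82))  # stop
print(handle_recognition("robot wave", 0.41))  # None
```

Raising the threshold makes the robot ignore more misheard phrases at the cost of occasionally ignoring real ones.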

Audio Robot Skills

Now that you have selected your robot's audio devices, consider which audio robot skills to add. They range from speech recognition to audio players, and you can add as many as your robot needs to achieve its goals.

Advanced Azure-backed speech-to-text for ARC allowing custom Azure Cognitive Services keys, scripting hooks, and configurable output variables.

Advanced multilingual speech synthesis using Azure's natural voices for lifelike robot speech.

Enable UWP voices, change default audio devices, capture audio and route it to EZB controllers with session tracking and device control.

Azure speech recognition for ARC using your custom subscription key for speech-to-text, billed to your Azure account.

Azure TTS for ARC robots: generate natural, customizable neural voices for companion, educational, assistive, and entertainment applications.

English-only speech synthesis using a remote server to generate audio.

Accurate Bing cloud speech-to-text for ARC: wake word, programmable control, $BingSpeech output, Windows language support, and headset compatibility.

Voice menu tree to navigate options and run scripts on ARC robots. Multi-level, customizable prompts, speech I/O, timeout and repeat/back.
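A multi-level voice menu is essentially a tree walked one recognized word at a time. The Python sketch below illustrates that idea under stated assumptions; `MenuNode`, `VoiceMenu`, the reserved words "repeat" and "back", and the choice to return to the top menu after a leaf runs are all hypothetical, not the skill's actual behavior.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class MenuNode:
    """One menu level: a spoken prompt plus named choices (a leaf has a script)."""
    prompt: str
    children: Dict[str, "MenuNode"] = field(default_factory=dict)
    script: Optional[str] = None  # hypothetical stand-in for an ARC script name


class VoiceMenu:
    """Walks a MenuNode tree one recognized word at a time."""

    def __init__(self, root: MenuNode) -> None:
        self.stack: List[MenuNode] = [root]  # path from the root to the current level

    @property
    def current(self) -> MenuNode:
        return self.stack[-1]

    def hear(self, word: Optional[str]) -> str:
        """Handle one word (None means listening timed out); return what to say or do."""
        if word is None or word == "repeat":
            return self.current.prompt  # timeout or "repeat": prompt again
        if word == "back":
            if len(self.stack) > 1:
                self.stack.pop()  # go up one level, never past the root
            return self.current.prompt
        child = self.current.children.get(word)
        if child is None:
            return "Please say one of: " + ", ".join(self.current.children)
        if child.script is not None:
            del self.stack[1:]  # leaf: run it, then return to the top menu
            return "run " + child.script
        self.stack.append(child)
        return child.prompt


menu = VoiceMenu(MenuNode("Say move or dance.", {
    "move": MenuNode("Say forward or turn.", {
        "forward": MenuNode("", script="MoveForward"),
        "turn": MenuNode("", script="TurnLeft"),
    }),
    "dance": MenuNode("", script="Dance"),
}))
print(menu.hear("move"))     # Say forward or turn.
print(menu.hear(None))       # Say forward or turn.
print(menu.hear("forward"))  # run MoveForward
```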

Detect audio frequencies via PC microphone (FFT), output a variable and drive servos within configurable min/max ranges with waveform feedback.
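The frequency-detection idea can be sketched in two steps: find the strongest frequency in a block of samples, then scale it into a servo range. This Python sketch is an illustration only; it uses a naive DFT instead of an FFT library so it has no dependencies, and the function names, the 200 to 2000 Hz input range, and the 1 to 180 servo range are assumptions.

```python
import math
from typing import List


def dominant_frequency(samples: List[float], sample_rate: int) -> float:
    """Return the frequency (Hz) of the strongest DFT bin, ignoring DC.

    A naive O(n^2) DFT keeps the sketch dependency-free; a real skill would
    use an FFT on much larger buffers.
    """
    n = len(samples)
    best_bin, best_power = 0, -1.0
    for k in range(1, n // 2):  # bins 1..n/2-1 cover 0 < f < Nyquist
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate / n  # bin index -> Hz


def frequency_to_servo(freq: float, f_lo: float, f_hi: float,
                       servo_min: int, servo_max: int) -> int:
    """Linearly map a frequency range onto a servo range, clamping the ends."""
    t = (freq - f_lo) / (f_hi - f_lo)
    t = min(1.0, max(0.0, t))
    return round(servo_min + t * (servo_max - servo_min))


rate, n = 8000, 256
tone = [math.sin(2 * math.pi * 1000 * i / rate) for i in range(n)]  # 1 kHz test tone
freq = dominant_frequency(tone, rate)
print(freq)                                      # 1000.0
print(frequency_to_servo(freq, 200, 2000, 1, 180))  # 81
```

The bin width here is 8000 / 256 = 31.25 Hz, so the test tone falls exactly on bin 32; real audio usually lands between bins, which is one reason larger buffers help.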

Google Speech for ARC: cloud speech recognition with a waveform display, configurable response scripts, and PandoraBot support.

Record audio from your PC mic, auto-trigger and edit sample rate/effects, then play or export recordings to an EZ-B v4 SoundBoard for robot playback.

MIDI I/O for ARC: send/receive notes, run per-note scripts, control PC or external instruments, with panic to stop stuck notes.
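A MIDI "panic" works by releasing every note that is still sounding. The Python sketch below builds raw MIDI 1.0 messages (Note On 0x90, Note Off 0x80, and Control Change 0xB0 with controller 123, All Notes Off) and tracks held notes so a panic can release them. `NoteTracker` is a hypothetical helper for illustration, not the skill's code, and it does not validate channel (0 to 15) or note (0 to 127) ranges.

```python
from typing import List, Set, Tuple

NOTE_OFF, NOTE_ON, CONTROL_CHANGE = 0x80, 0x90, 0xB0
ALL_NOTES_OFF = 123  # MIDI channel-mode controller number


class NoteTracker:
    """Builds raw 3-byte MIDI messages and remembers which notes are held."""

    def __init__(self) -> None:
        self.held: Set[Tuple[int, int]] = set()  # (channel, note) pairs

    def note_on(self, channel: int, note: int, velocity: int = 100) -> bytes:
        self.held.add((channel, note))
        return bytes([NOTE_ON | channel, note, velocity])

    def note_off(self, channel: int, note: int) -> bytes:
        self.held.discard((channel, note))
        return bytes([NOTE_OFF | channel, note, 0])

    def panic(self) -> List[bytes]:
        """Release every held note, then send All Notes Off on each channel used."""
        channels = sorted({ch for ch, _ in self.held})
        messages = [self.note_off(ch, n) for ch, n in sorted(self.held)]
        messages += [bytes([CONTROL_CHANGE | ch, ALL_NOTES_OFF, 0]) for ch in channels]
        return messages


tracker = NoteTracker()
tracker.note_on(0, 60)  # middle C pressed
tracker.note_on(0, 64)
tracker.note_off(0, 60)
for msg in tracker.panic():
    print(msg.hex())  # 804000, then b07b00
```

Sending explicit Note Off messages as well as All Notes Off covers instruments that ignore the channel-mode message.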

Serial MP3 Trigger for EZ-B: plays MP3s from a mini-SD card, with a configurable port and baud rate. Deprecated; replaced by EZB v4 streaming audio. Third-party.

Convert text to dynamic, real-time speech with nine expressive OpenAI voices for natural, varied, and accessible robot communication.

Example ARC skill demonstrating converting, compressing and streaming MP3/WAV to an EZ-B speaker, with play/stop commands and TTS examples.

Stereo mic sound localization triggers left/right scripts to control robot movement, enabling directional responses (turn/move) to audio.
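One simple way to decide left or right from a stereo microphone is to compare the loudness of the two channels. The Python sketch below uses that level-difference technique; it is an assumption, not necessarily how the skill localizes sound, and `locate`, `threshold`, and `margin` are hypothetical names with illustrative defaults.

```python
import math
from typing import List, Optional


def rms(samples: List[float]) -> float:
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0


def locate(left: List[float], right: List[float],
           threshold: float = 0.05, margin: float = 1.5) -> Optional[str]:
    """Return "left", "right", or None for one block of stereo audio.

    The louder channel wins only if the block is above `threshold` and that
    channel is at least `margin` times louder than the other.
    """
    l, r = rms(left), rms(right)
    if max(l, r) < threshold:
        return None  # too quiet: nothing to localize
    if l >= r * margin:
        return "left"  # e.g., run the "turn left" script
    if r >= l * margin:
        return "right"  # e.g., run the "turn right" script
    return None  # roughly centered: ambiguous


left = [0.4, -0.4] * 100
right = [0.1, -0.1] * 100
print(locate(left, right))   # left
print(locate(right, right))  # None
```

The `margin` keeps the robot from twitching back and forth when a sound comes from roughly straight ahead.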

Trigger a script when EZB audio plays, exposing live Min, Max and Avg audio-level variables for looped monitoring; stops when audio ends.
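Min, max, and average levels are computed per block of audio. This Python sketch shows one way to do that, assuming 16-bit signed little-endian mono PCM; the sample format, the 0 to 100 scale, and `audio_levels` are assumptions, and the dictionary keys only stand in for the skill's actual variables.

```python
import struct
from typing import Dict


def audio_levels(pcm: bytes) -> Dict[str, int]:
    """Min, max and average level (0-100) of 16-bit little-endian mono PCM.

    Each level is the absolute sample value as a percentage of full scale.
    A trailing odd byte, if any, is ignored.
    """
    count = len(pcm) // 2
    if count == 0:
        return {"min": 0, "max": 0, "avg": 0}
    samples = struct.unpack("<%dh" % count, pcm[: count * 2])
    levels = [abs(s) * 100 // 32768 for s in samples]
    return {"min": min(levels), "max": max(levels), "avg": sum(levels) // count}


pcm = struct.pack("<4h", 0, 16384, -32768, 3277)
print(audio_levels(pcm))  # {'min': 0, 'max': 100, 'avg': 40}
```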

Map EZ-B audio volume to servos; multi-servo mirroring, scalar range control, invert/min-max, waveform feedback to sync mouth motion.

Maps PC microphone volume to servo positions; control multiple servos (e.g., a robotic mouth) with scalar, min/max, and invert options.

Maps PC audio volume to servos in real time with scalar, min/max, invert, and multi-servo options; ideal for syncing a robot's mouth to sound.
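The volume-to-servo skills above share the same options: a scalar, a min/max range, invert, and several servos following one level. The Python sketch below shows how those options could combine; it assumes a 0.0 to 1.0 audio level, and `volume_to_servo`, `mirror`, and the D0/D1 port settings are hypothetical examples rather than the skills' own code.

```python
from typing import Dict


def volume_to_servo(level: float, scalar: float, servo_min: int, servo_max: int,
                    invert: bool = False) -> int:
    """Map an audio level (0.0-1.0) to a servo position.

    `scalar` amplifies quiet audio; the result is clamped to [servo_min, servo_max].
    `invert` flips the direction, e.g., for a servo mounted the other way round.
    """
    t = min(1.0, max(0.0, level * scalar))
    if invert:
        t = 1.0 - t
    return round(servo_min + t * (servo_max - servo_min))


def mirror(level: float, servos: Dict[str, dict]) -> Dict[str, int]:
    """Drive several servos from the same level, each with its own settings."""
    return {port: volume_to_servo(level, **cfg) for port, cfg in servos.items()}


jaw = {"D0": {"scalar": 2.0, "servo_min": 90, "servo_max": 130},
       "D1": {"scalar": 2.0, "servo_min": 50, "servo_max": 90, "invert": True}}
print(mirror(0.25, jaw))  # {'D0': 110, 'D1': 70}
```

Clamping after the scalar is applied means a loud sound can never drive the jaw past its safe limits.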

Play WAV/MP3 via EZ-B v4, manage tracks, add timed scripts for synced robot actions, control via ControlCommand(), volume and clipping indicators.

Play and manage MP3/WAV sound effects from a PC soundboard, load tracks, trigger or script playback (one file at a time), export and automate.

Play MP3/WAV via PC sound output with timeline scripts to trigger movements, auto-position actions, and optional looping for synced routines.
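Timed or timeline scripts fire actions as playback passes set points in a track. The Python sketch below shows one way to do that with a sorted event list and a "last position" marker so each action fires once per pass. `TimelinePlayer` and the millisecond event format are hypothetical, not the skills' internal design.

```python
import bisect
from typing import List, Tuple

# Hypothetical timeline format: (time in ms from track start, action name).
Timeline = List[Tuple[int, str]]


class TimelinePlayer:
    """Fires each timeline action once as the playback position passes it."""

    def __init__(self, timeline: Timeline) -> None:
        self.events = sorted(timeline)
        self.times = [t for t, _ in self.events]
        self.last_ms = -1  # nothing fired yet

    def tick(self, position_ms: int) -> List[str]:
        """Return actions whose time falls in (last position, current position]."""
        start = bisect.bisect_right(self.times, self.last_ms)
        end = bisect.bisect_right(self.times, position_ms)
        self.last_ms = position_ms
        return [action for _, action in self.events[start:end]]

    def rewind(self) -> None:
        """Call when the track loops so the actions can fire again."""
        self.last_ms = -1


player = TimelinePlayer([(0, "raise arms"), (1500, "turn head"), (3000, "bow")])
print(player.tick(100))   # ['raise arms']
print(player.tick(1600))  # ['turn head']
print(player.tick(3200))  # ['bow']
```

Checking a range rather than an exact timestamp matters because playback position is only sampled periodically and will rarely land exactly on an event time.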

Run ARC scripts from any speech-to-text source for voice-controlled automation, command parsing and script triggering.

Windows Speech Recognition skill: detect custom phrases via PC mic, trigger configurable scripts/actions with adjustable confidence.

Run custom scripts when speech starts/ends to sync servos and LEDs to spoken $SpeechTxt, with loop support, stop button and logs.

Speak user-defined text via PC audio or EZ-B v4 speaker; configurable voices, effects and speed; uses Windows TTS; programmatically callable.

Configure Windows Audio.say()/Audio.sayEZB() TTS on EZB#0: voice, emphasis, rate, volume, speed/stretch and audio effects; copy control script.

Animate servos to simulate jaw/mouth with ARC text-to-speech; configurable vowel/consonant timing, start sync, multi-servo control, pause/stop.
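Vowel/consonant timing can be pictured as turning the spoken text into a list of jaw positions and durations. This Python sketch is illustrative only: the timings, the 90/110/130 positions, and `mouth_frames` are assumptions, and the skill exposes its own configurable values.

```python
from typing import List, Tuple

VOWELS = set("aeiou")

# Assumed timings (ms) and jaw servo positions for illustration.
VOWEL_MS, CONSONANT_MS, PAUSE_MS = 120, 60, 100
OPEN, HALF, CLOSED = 130, 110, 90


def mouth_frames(text: str) -> List[Tuple[int, int]]:
    """Turn text into (servo position, duration ms) frames for a jaw servo."""
    frames: List[Tuple[int, int]] = []
    for ch in text.lower():
        if ch in VOWELS:
            frames.append((OPEN, VOWEL_MS))  # vowels open the mouth widest
        elif ch.isalpha():
            frames.append((HALF, CONSONANT_MS))  # consonants: shorter, half open
        elif ch.isspace() or ch in ".,!?":
            frames.append((CLOSED, PAUSE_MS))  # close briefly between words
    return frames


frames = mouth_frames("Hi there")
print(frames[:3])                   # [(110, 60), (130, 120), (90, 100)]
print(sum(ms for _, ms in frames))  # 700
```

Summing the durations gives a rough length for the animation, which is what has to line up with the text-to-speech audio.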

Offline open-vocabulary speech recognition for Windows 10/11: low-accuracy open-dictionary voice input with confidence values and scripting, intended for headset use.

Real-time voice activity detection for ARC robots: detects speech start/stop, with customizable scripts, live audio visualization, and tunable sensitivity.
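A common, simple way to detect speech start and stop is an energy threshold combined with a short "hangover", so brief pauses between words do not end the utterance. The Python sketch below shows that technique; it is an assumption, not necessarily how the skill detects speech, and `EnergyVad`, `threshold`, and `hangover` are hypothetical names.

```python
import math
from typing import List, Optional


class EnergyVad:
    """Minimal energy-threshold voice activity detector.

    `threshold` is the RMS level treated as speech (lower = more sensitive);
    `hangover` is how many quiet frames must pass before speech "stops".
    """

    def __init__(self, threshold: float = 0.02, hangover: int = 3) -> None:
        self.threshold = threshold
        self.hangover = hangover
        self.speaking = False
        self.quiet_frames = 0

    def process(self, frame: List[float]) -> Optional[str]:
        """Feed one frame; return "start", "stop", or None when nothing changes."""
        level = math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0
        if level >= self.threshold:
            self.quiet_frames = 0
            if not self.speaking:
                self.speaking = True
                return "start"  # e.g., run the speech-start script
        elif self.speaking:
            self.quiet_frames += 1
            if self.quiet_frames >= self.hangover:
                self.speaking = False
                self.quiet_frames = 0
                return "stop"  # e.g., run the speech-end script
        return None


vad = EnergyVad(threshold=0.1, hangover=2)
loud, quiet = [0.5] * 160, [0.0] * 160
print([vad.process(f) for f in [quiet, loud, loud, quiet, loud, quiet, quiet, quiet]])
# [None, 'start', None, None, None, None, 'stop', None]
```

Note that the single quiet frame between the loud ones does not trigger "stop"; only two consecutive quiet frames do.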

Watson Speech-to-Text ARC plugin: cloud AI transcription with configurable models, selectable VAD (Windows/WebRTC), audio capture and visualization.

Human-like audio via IBM Watson Text-to-Speech: multi-language, selectable voices for accessibility and automated interactions. IBM Cloud required.

Microsoft Windows built-in speech synthesis and recognition for TTS and speech-to-text input in ARC robot projects.