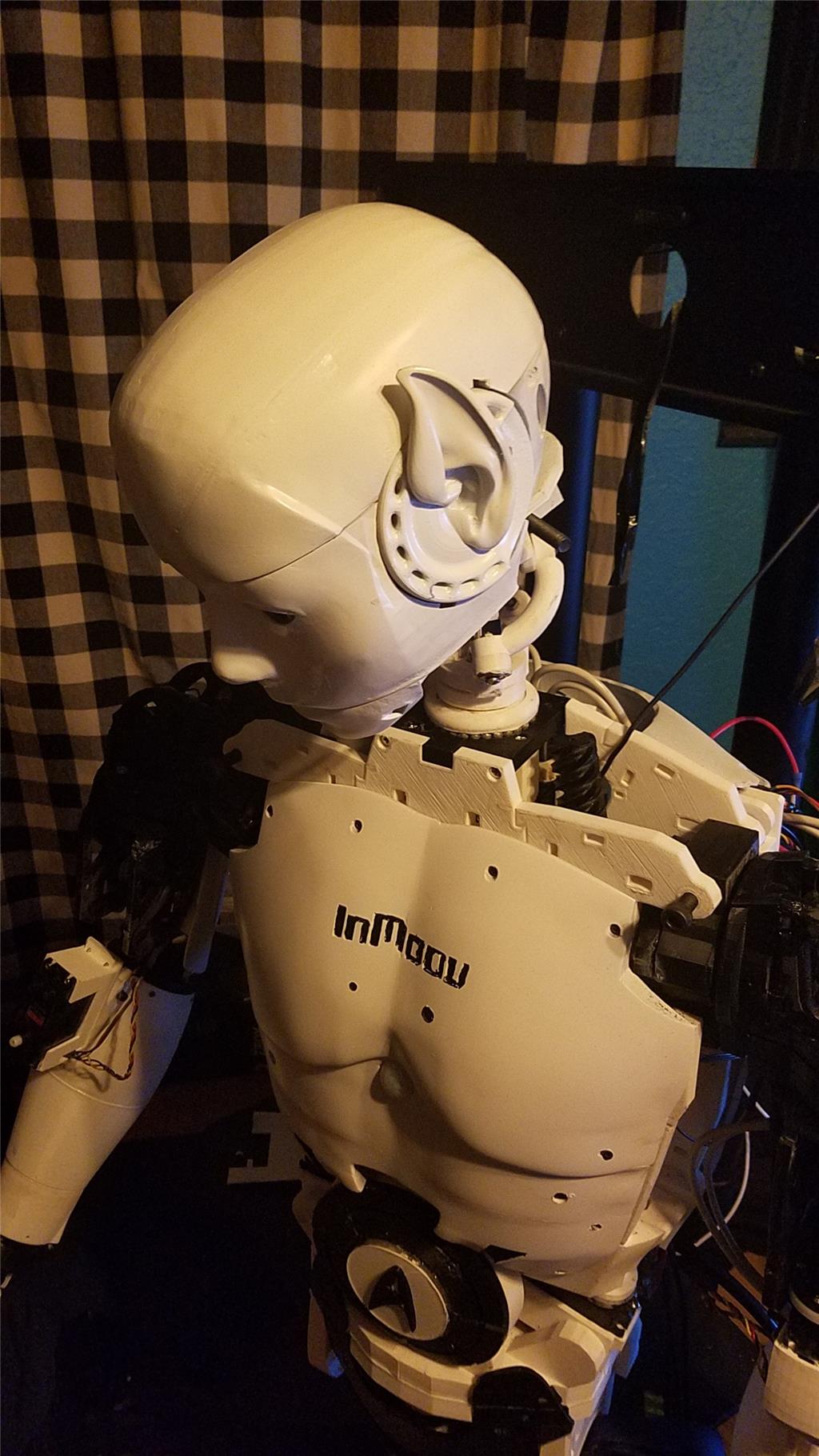

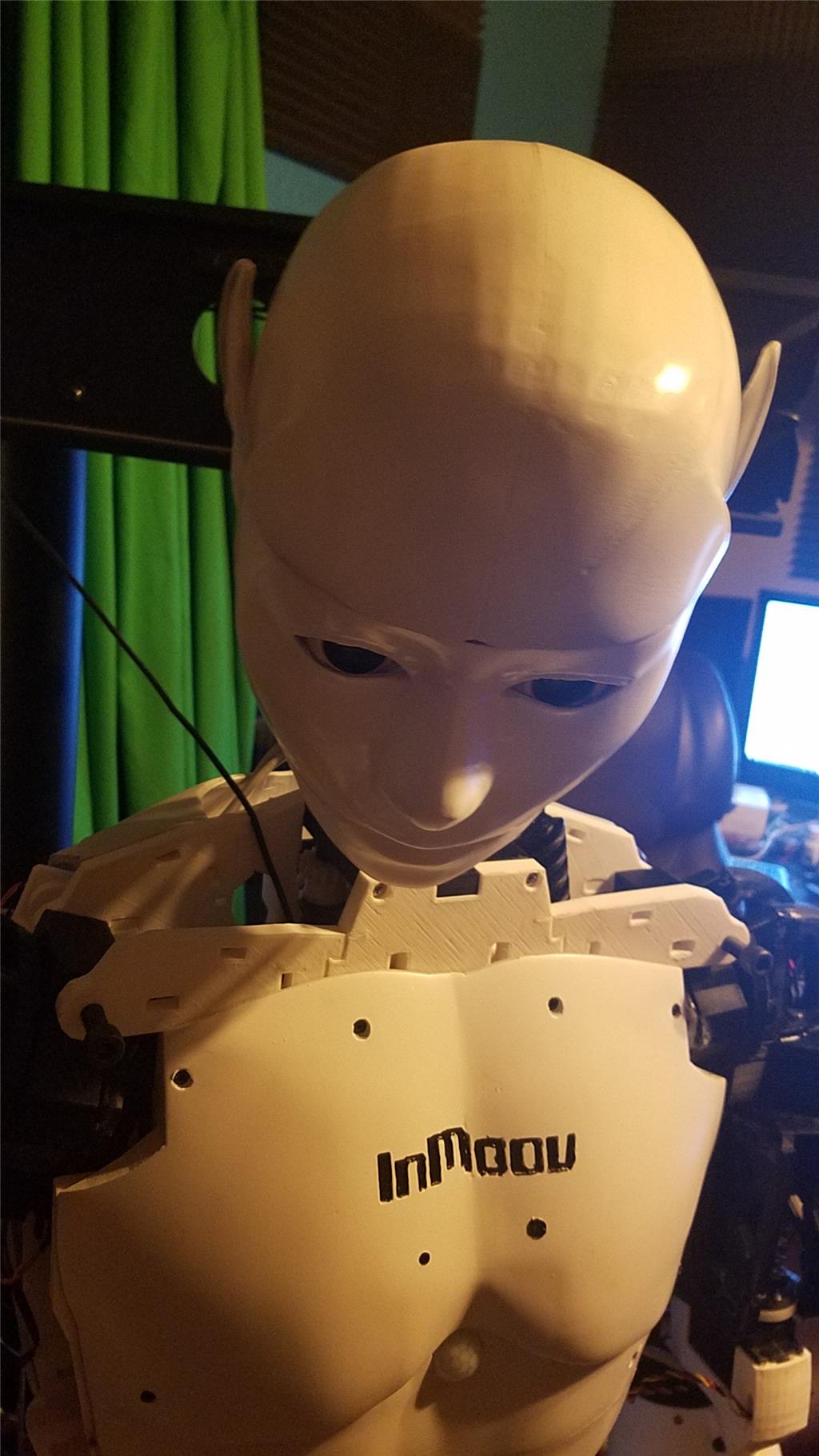

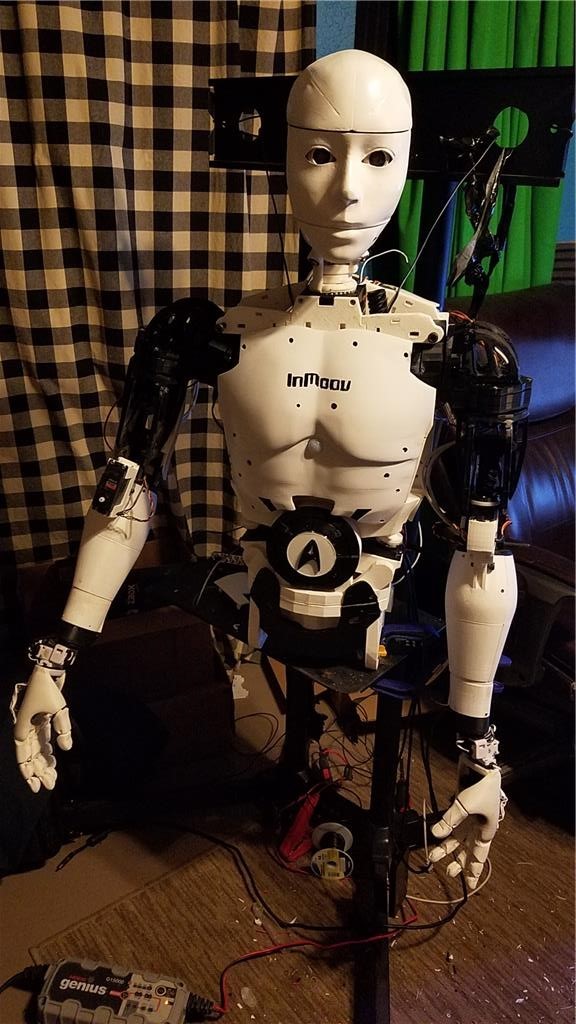

I have decided to start my InMoov project. I think I will call him Spock out of respect for Leonard Nimoy, who passed away on the day that I started this project.

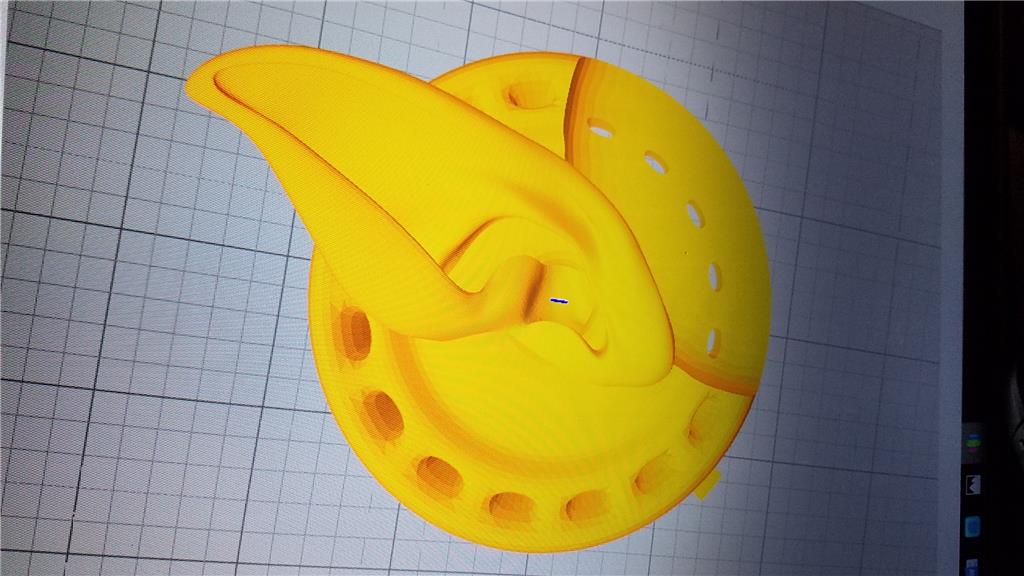

I am editing this post so that it reflects the current configuration and doesn't confuse people. I continue to update it with the latest photos. If you are reading this for the first time, don't be confused: there have been a lot of changes to the InMoov over the past couple of years, including starting over.

Post 203 at https://synthiam.com/Community/Questions/7398&page=21 starts the rebuild of the InMoov.

I have decided to use an onboard computer. I chose the Latte Panda because it has an onboard Arduino Leonardo and uses little power.

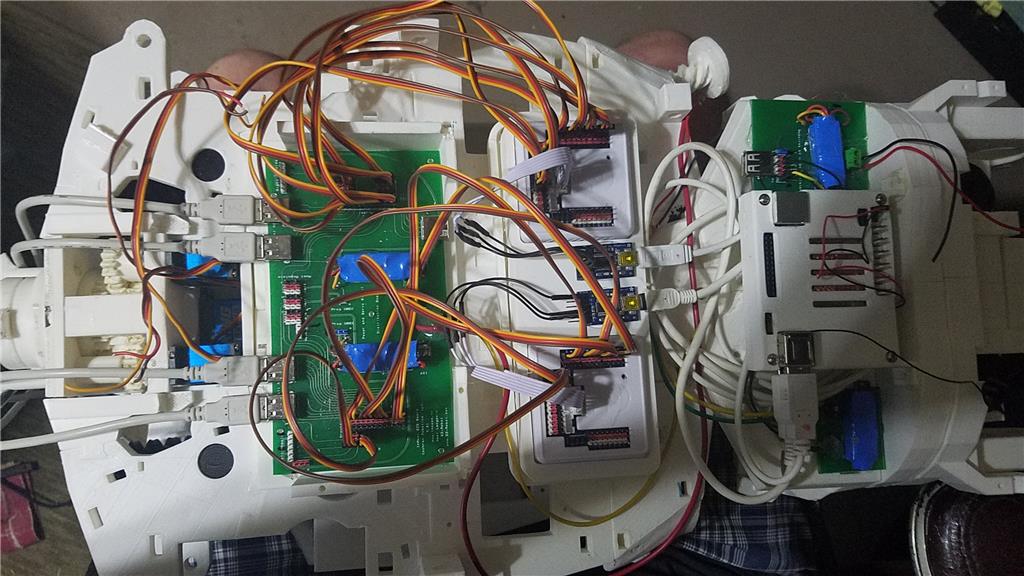

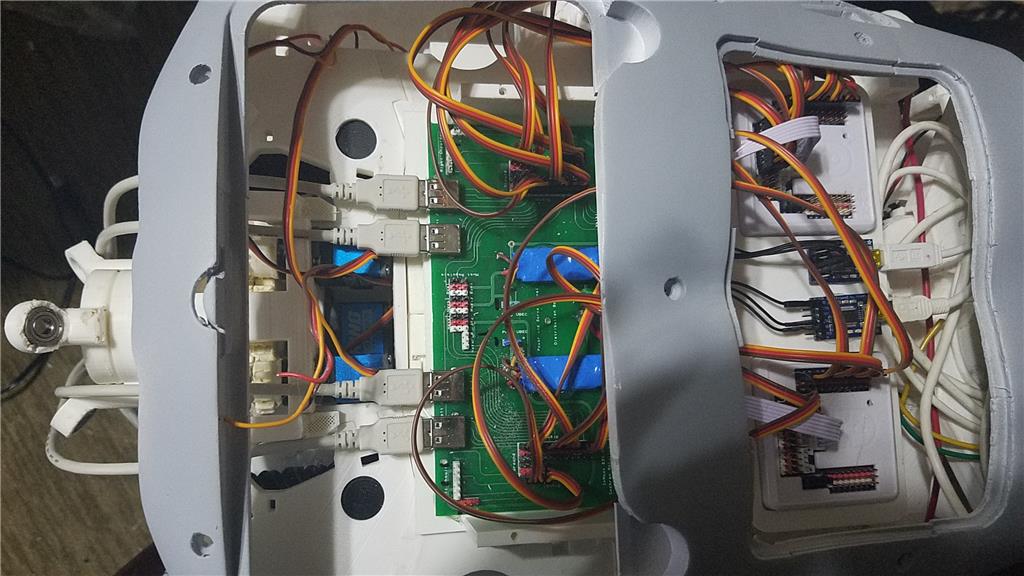

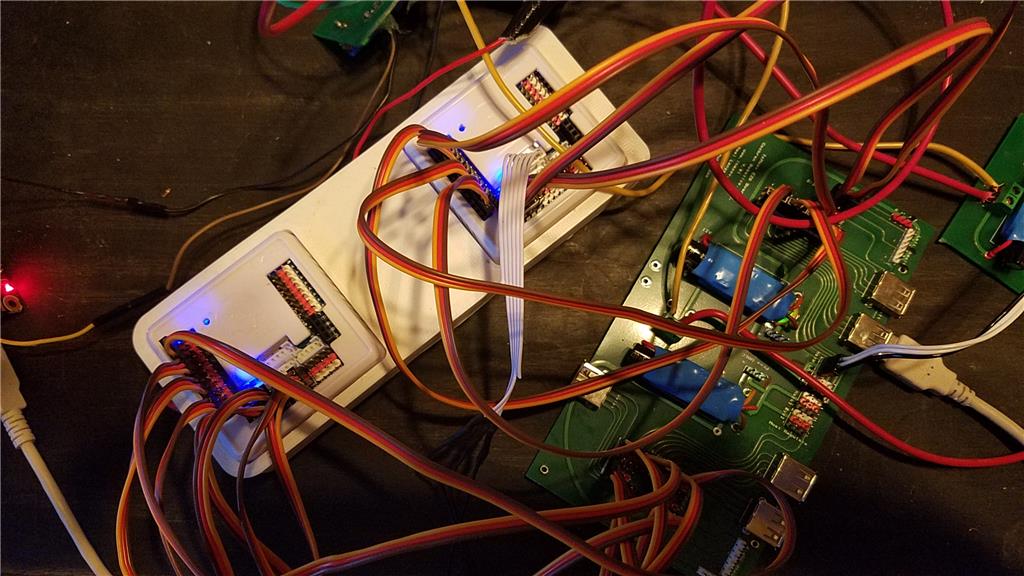

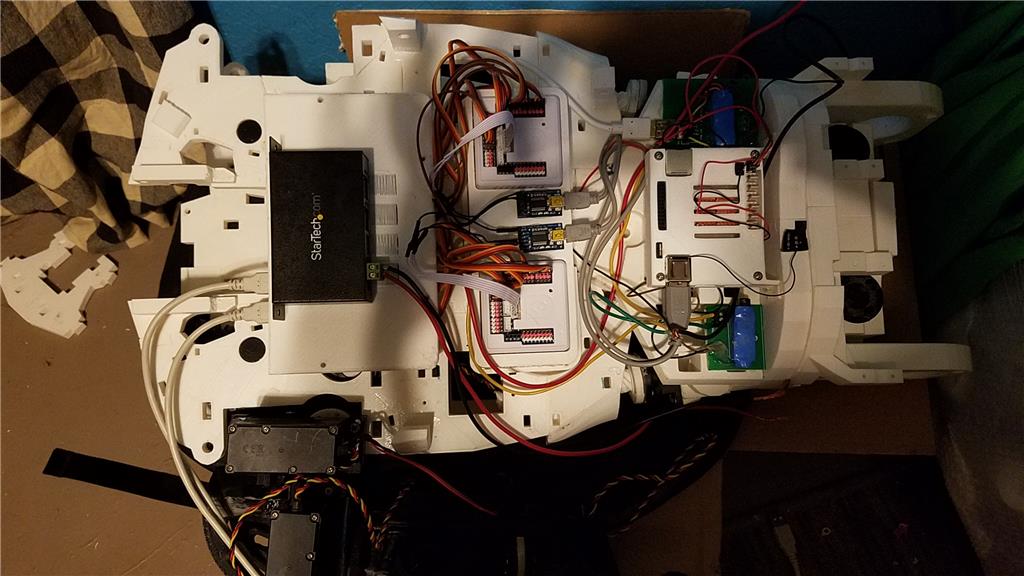

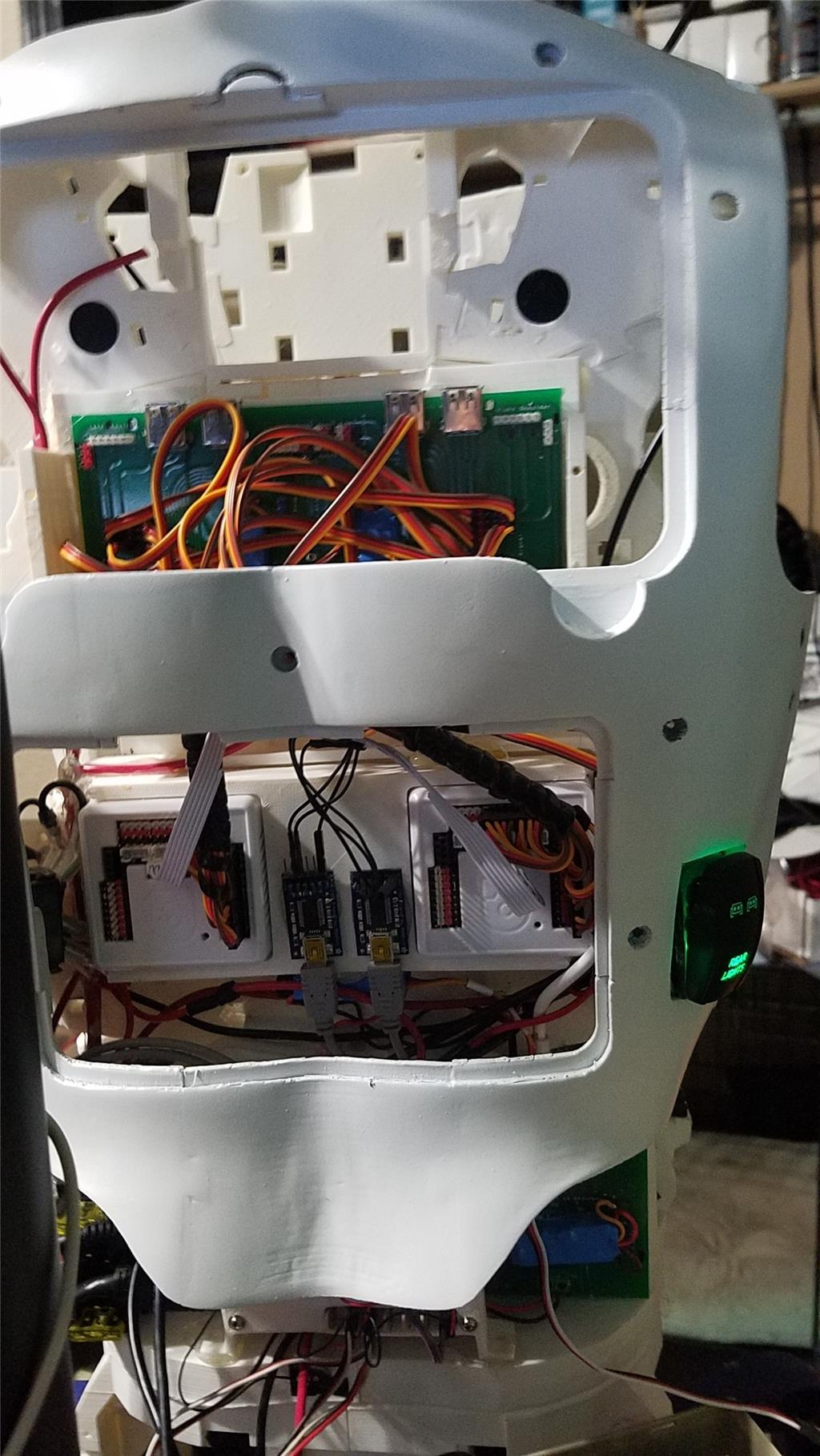

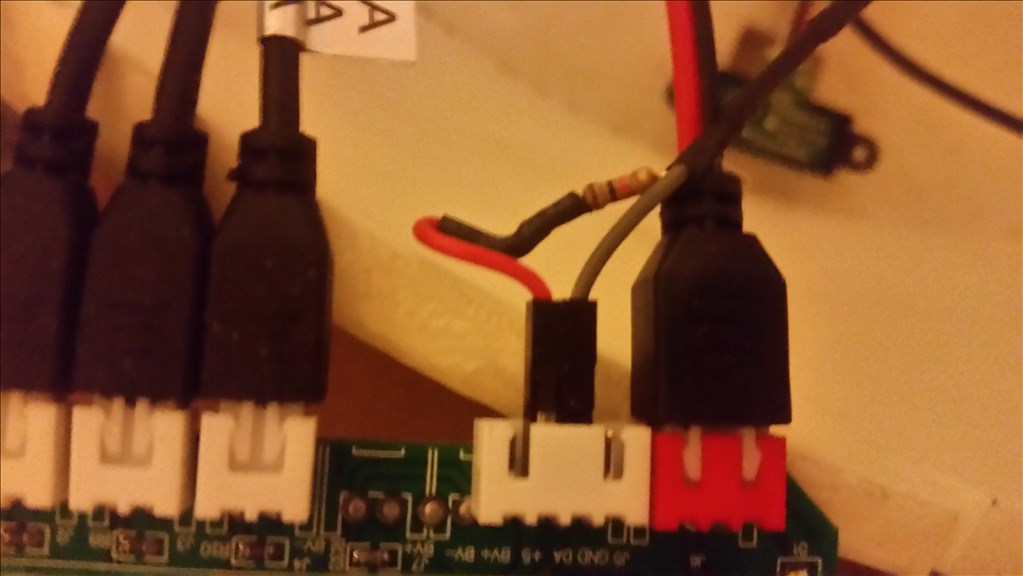

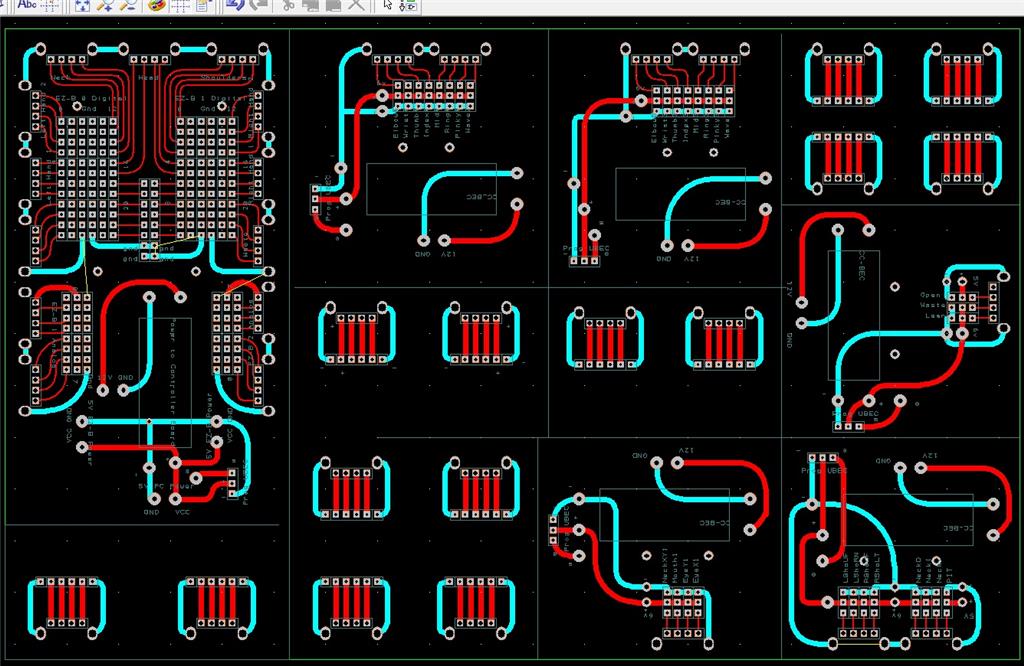

I used two EZ-B controllers, each connected via its camera port to an Adafruit FTDI Friend board. This gives the Latte Panda a connection to the EZ-Bs that doesn't depend on WiFi. I use a powered USB hub connected to the USB 3 port on the Latte Panda to attach other devices.

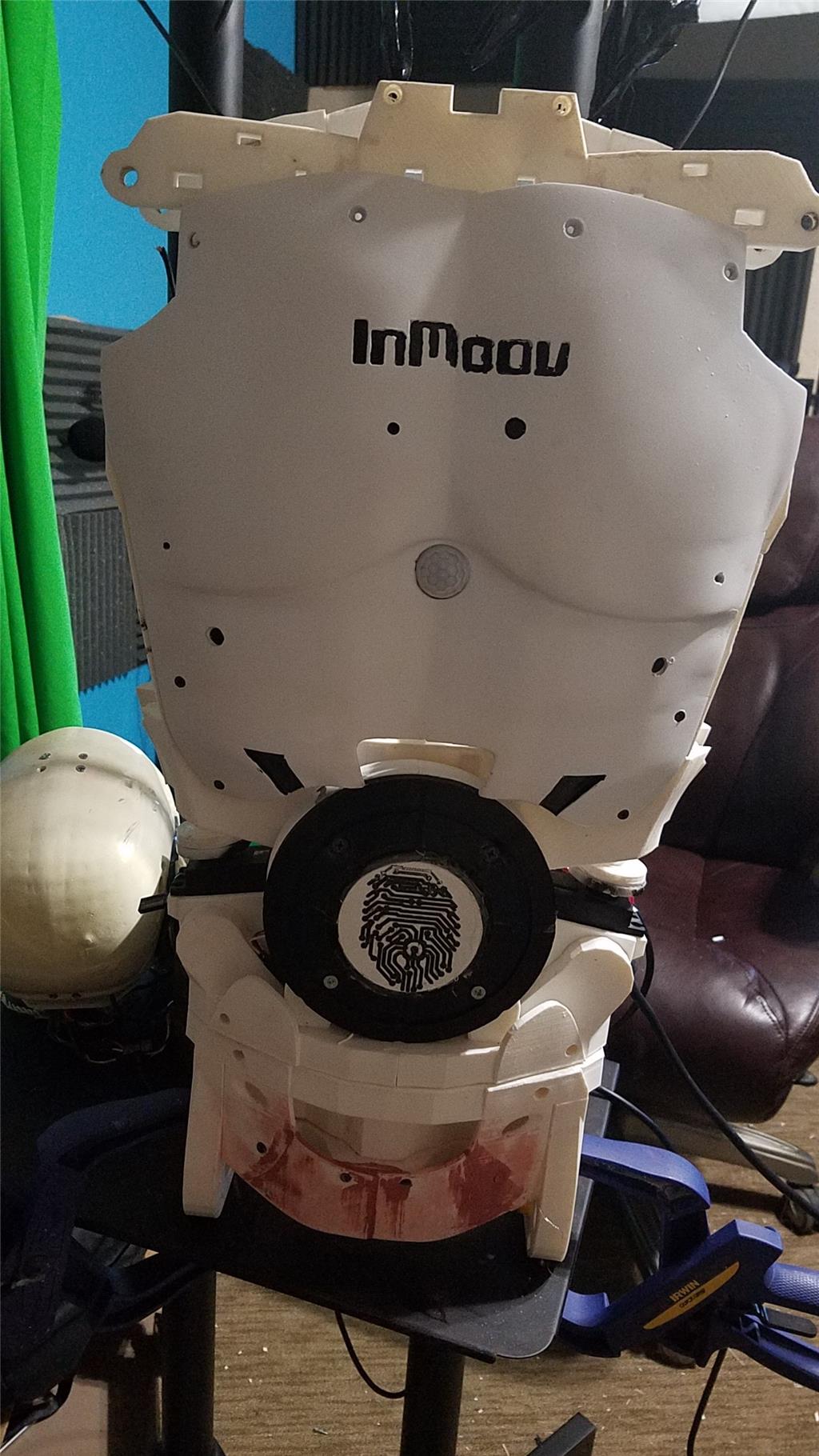

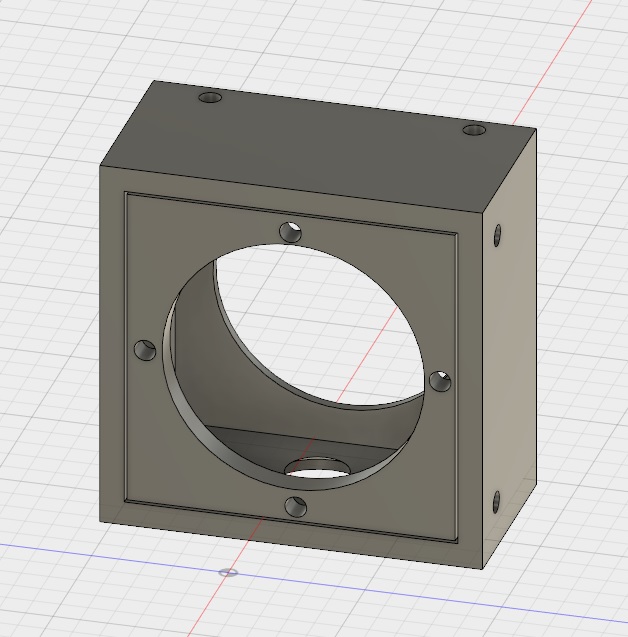

The Omron HVC-P is used to recognize people, emotions, human bodies, hands, age, and gender. It is mounted in the chest of the InMoov and attached to the Latte Panda via an FTDI Friend, which is connected to the powered USB hub. I also use an MXL AC-404 three-element microphone. It is disassembled, and the board and microphone elements are mounted in the chest of the InMoov. The mic board connects to the Latte Panda via a USB cable attached to the powered USB hub. A USB camera in the eye of the InMoov is also connected to the Latte Panda through the powered USB hub.

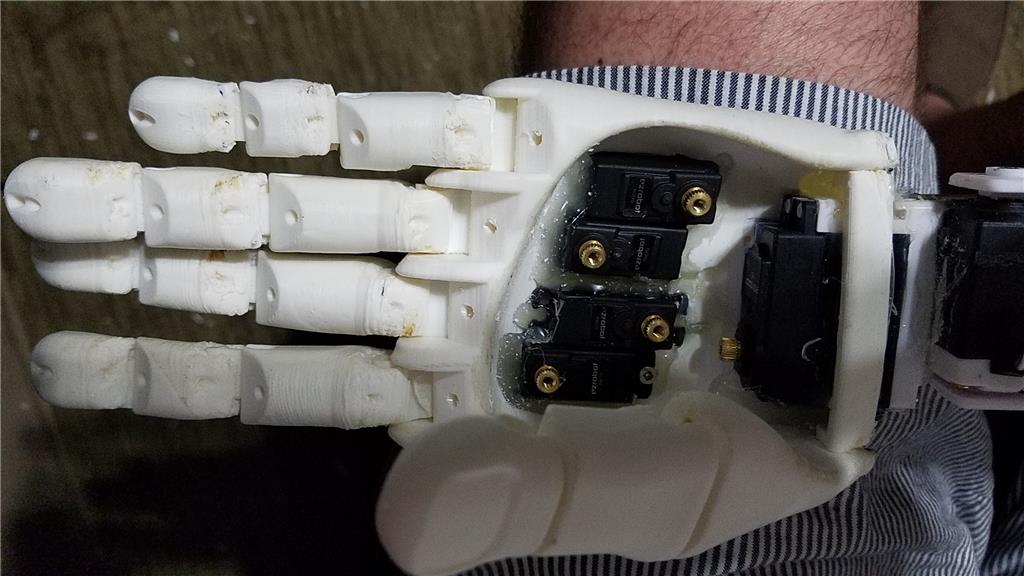

I chose to use the Flexy hand with the InMoov. The design is far more rugged than the original hand and works very well. There are four EZ-Robot Micro Servos in the palm of each hand, which control the main fingers. The thumb is controlled by an EZ-Robot HD servo. The wrist wave motion also uses an EZ-Robot HD servo. I use the standard rotational wrist.

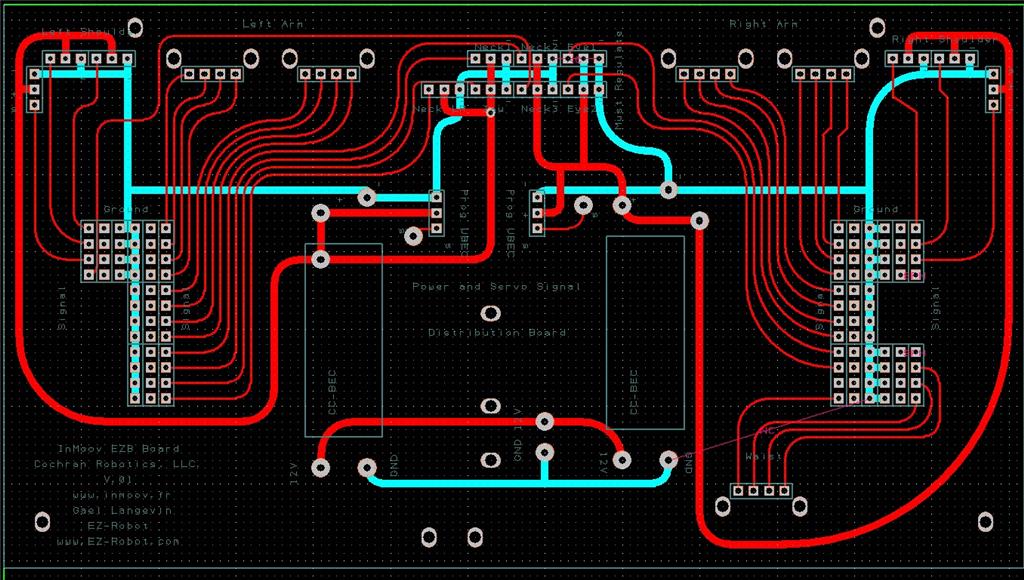

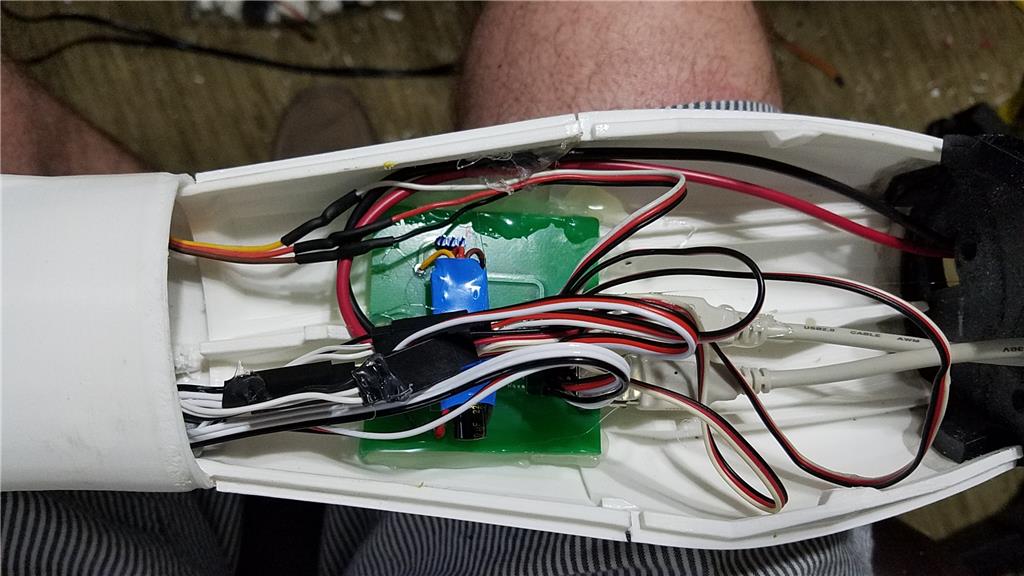

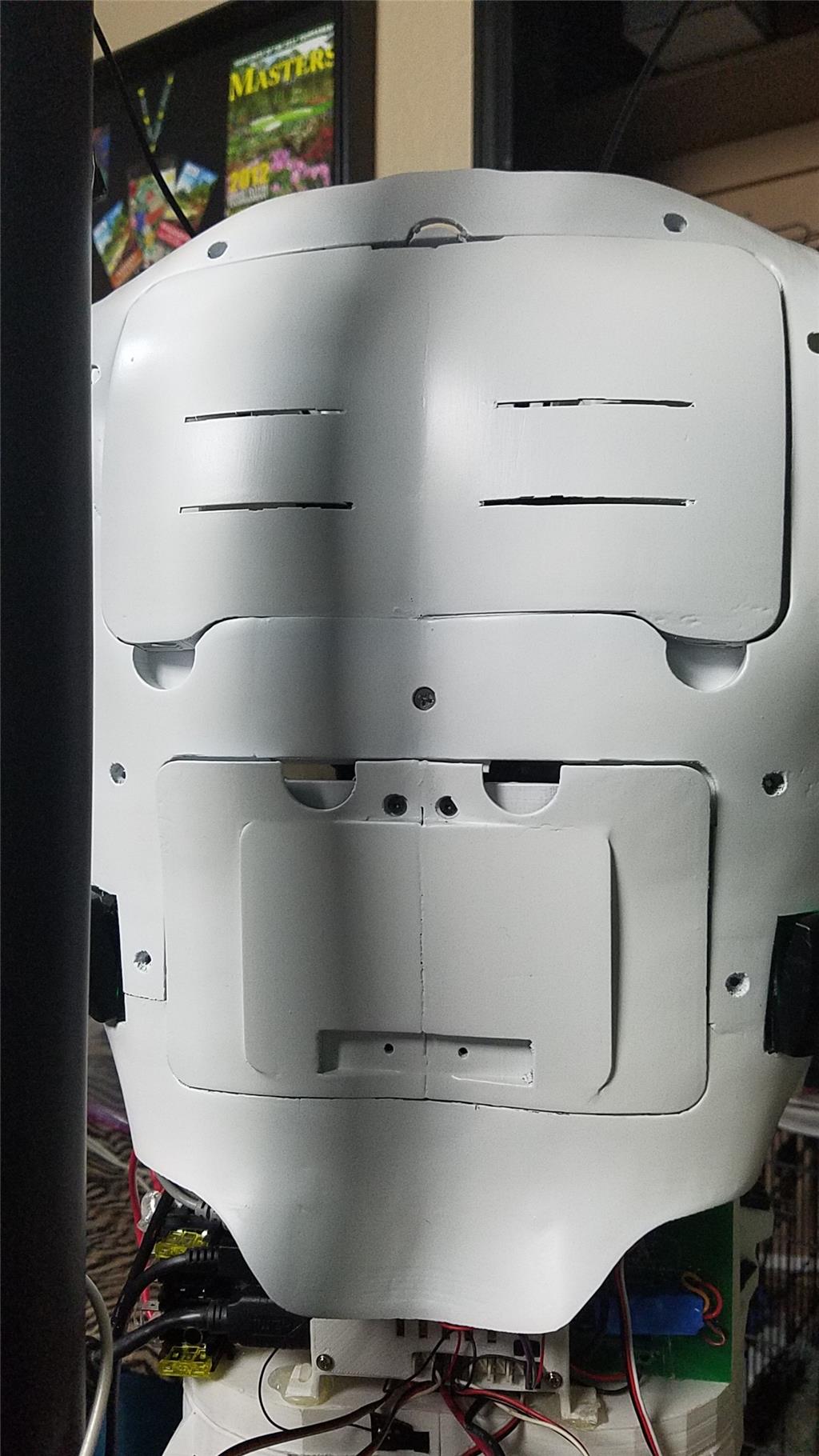

I have Castle BECs for power in the following locations, set to the following voltages:

- Forearms: 6.2 V, powering the fingers, wrists, and elbows.
- Custom power distribution boards (2): 6.2 V, powering the head, neck, and shoulder servos.
- EZ-Bs: 6.1 V. This BEC is mounted on the controller mounting plate and connects to the EZ-B fused power boards from a power base.
- Latte Panda: 5.1 V, mounted on the EZ-B controller mounting plate.
- Waist: 6.2 V, mounted in the lower right side of the back. This provides power to the lean and pivot waist motors.

There are some custom power and signal distribution boards, located in the forearms, the lower back, and the upper back. The upper back (main) board connects to the other distribution points via USB cables, which carry the servo signals to those boards. The main board also has servo connector pins for the neck, head, and shoulders. This lets the power be distributed between multiple BECs and keeps the servo signal cables shorter and better protected inside the USB cables.

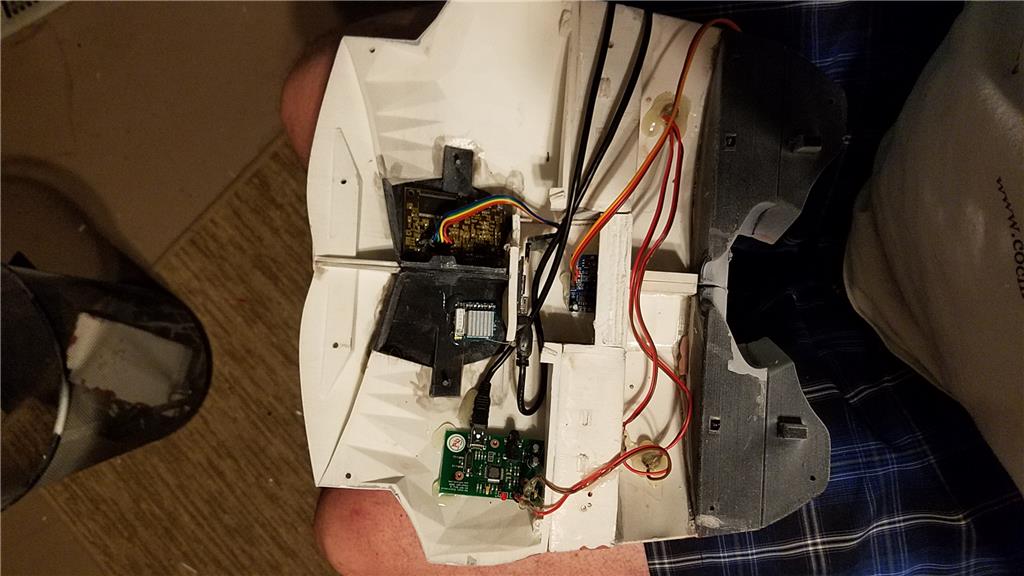

For power I use a LiFePO4 battery rated at 30 amps. It has a balance-charging circuit and a low-voltage shutoff built into the battery. This protects the battery and allows it to be charged with standard car chargers.

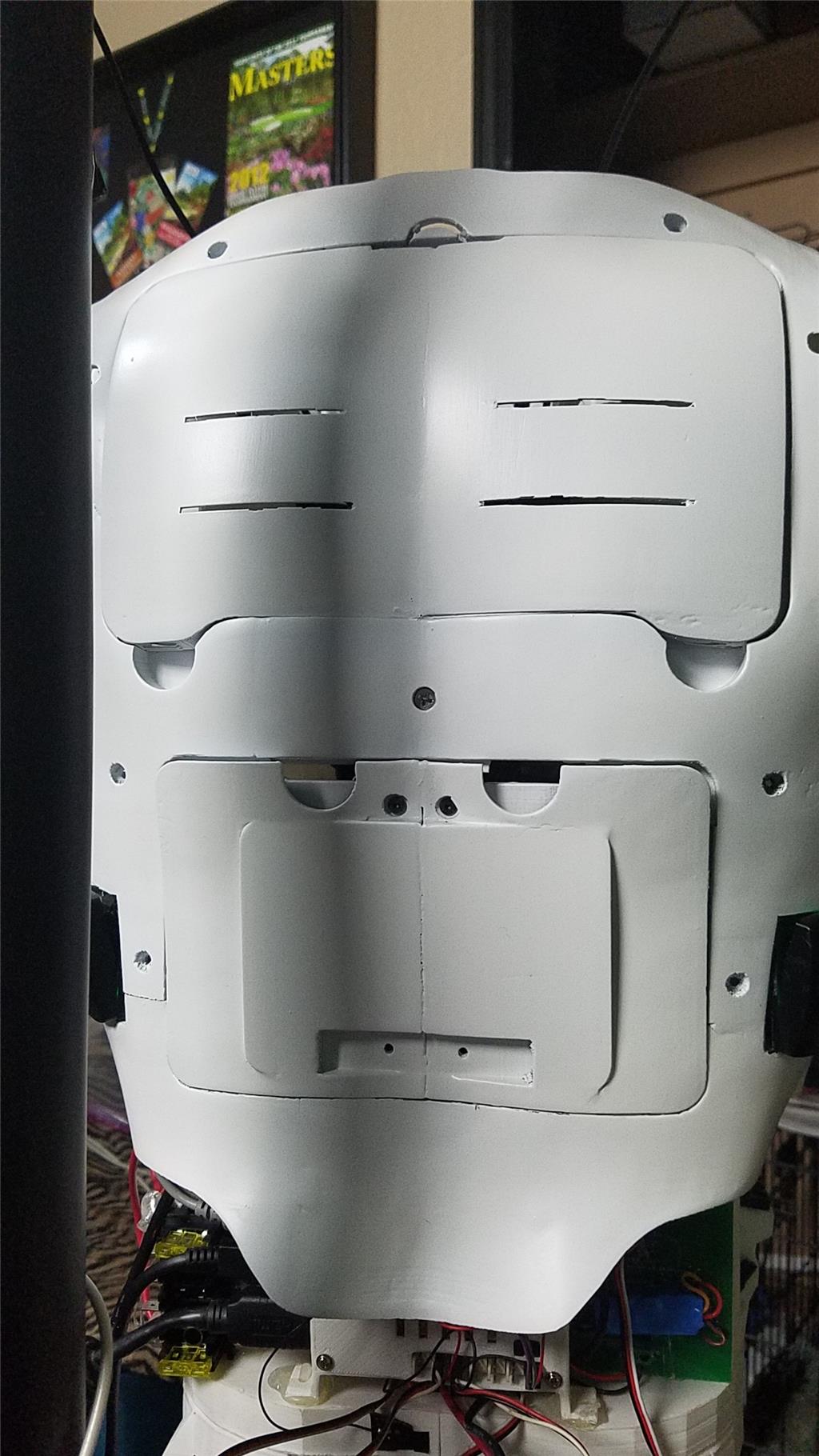

I put switches on the back of the InMoov, rated at 20 amps at 12 volts. These are rocker switches that let you pretty much slap the switch to turn it off. There are two of them: one powers the servos for the elbows and fingers, and the other powers the Latte Panda, neck, shoulders, EZ-Bs, waist motors, and some lighting.

I also added a fuse block, which puts 20 amp fuses in line to help protect things. The switches above feed the fuses for each of the motors listed in that section.


Let me put it this way: EZ-AI won't be a part of this, but it was what got the majority of his attention. Another app will be developed that uses some of the same components. I will include some of the things I do in this app in EZ-AI, but I don't see a lot of people using it in their robots.

EZ-Robot controllers can be used for things not necessarily considered robots. The controller is great for many uses, and the abilities that some really lightweight computers offer with Windows 10 and UniversalBot open up a lot of possibilities in a lot of areas.

Great! It should be paid software, because it's just great and you've put so much time into it.

Sounds cool. I look forward to hearing about it one day!

@d.cochran

Have you had a chance to play around with your Kinect sensor yet? I have seen the thread about making it compatible with the EZ-B, and I'm sure you have seen it as well. I was curious whether you've had any time to try it out. I was proposed a senior project idea for a robot that follows a person, and I believe the Kinect would be perfect if it plays nicely with the software.

Video of a Kinect following a human:

ez-robot forum link

Aaron

I haven't messed with it yet. I still need to buy the adapter that supplies power and converts the connector to USB. I have looked at the C# objects for it, and there are some APIs that have been made for it. I just haven't gotten to it yet.

As far as following someone, I think that if you knew the size of the person's head or some other body part, a normal camera and the ARC software could do this. For example, if the head appears smaller than it does at a distance of x, move forward. If it is in the left of the frame, turn that direction until the head is in the center of the frame, and so on. I haven't tried this yet, but I don't see a reason why it wouldn't work. Distance to an object of a known size is just a calculation, even with one camera. Distance to an object of unknown size could be found with two or more cameras, and this is where the Kinect could be beneficial. It builds a depth map of the objects it sees using infrared light rather than sonar. I did read that diffusing the sensor produces a better image from the depth map, and each point in that map carries a distance value.

It seems to me that using a single camera would be easier to code against if the size of the person's head is known. You would also have to train the person's head at multiple angles so that you know which direction the person is facing, which could give you a better chance of predicting which direction that person will move. This prediction could make the robot smarter and more responsive, at least until the person realizes that walking backwards is possible. Moving backwards is much slower than moving forwards, though, so I think the robot would have time to compensate for someone deciding to walk backwards. Someone can run forwards, so predicting that they will move in the direction they are facing gives you a better chance of following them.
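The known-size idea above is the pinhole-camera relation D = f * W / w, where f is the focal length in pixels, W the real width of the head, and w its width in pixels; the two-camera case is Z = f * B / d, with B the distance between the cameras and d the disparity in pixels. Below is a minimal Python sketch of both plus a simple follow decision. It is not ARC's API or a tested follower: the function names, the command strings, the 0.15 m head width, and the tolerances are hypothetical placeholders. The focal length is calibrated once by measuring the head's pixel width at a known distance.

```python
from dataclasses import dataclass


@dataclass
class HeadDetection:
    """A detected head in one camera frame (pixel units)."""
    center_x: float  # horizontal centre of the bounding box
    width_px: float  # bounding-box width


def calibrate_focal_length(width_px: float, known_distance_m: float, real_width_m: float) -> float:
    """Focal length in pixels from one reference frame: f = w * D / W."""
    return width_px * known_distance_m / real_width_m


def distance_from_known_width(focal_length_px: float, real_width_m: float, width_px: float) -> float:
    """Pinhole-camera distance for an object of known width: D = f * W / w."""
    if width_px <= 0:
        raise ValueError("width_px must be positive")
    return focal_length_px * real_width_m / width_px


def stereo_depth(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Two-camera depth for an object of unknown size: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity_px must be positive")
    return focal_length_px * baseline_m / disparity_px


def follow_command(
    head: HeadDetection,
    frame_width_px: float,
    focal_length_px: float,
    head_width_m: float,
    target_distance_m: float,
    distance_tolerance_m: float = 0.2,
    center_tolerance: float = 0.15,  # fraction of frame width treated as "centred"
) -> str:
    """Hypothetical drive command: turn toward the head first, then close the distance."""
    offset_px = head.center_x - frame_width_px / 2
    if offset_px < -center_tolerance * frame_width_px:
        return "turn_left"
    if offset_px > center_tolerance * frame_width_px:
        return "turn_right"
    distance_m = distance_from_known_width(focal_length_px, head_width_m, head.width_px)
    if distance_m > target_distance_m + distance_tolerance_m:
        return "forward"  # head looks smaller than it would at the target distance
    if distance_m < target_distance_m - distance_tolerance_m:
        return "stop"  # too close; stopping instead of reversing is a design choice
    return "hold"


if __name__ == "__main__":
    # Calibrate: a 0.15 m wide head measured 90 px wide at 1.0 m.
    f = calibrate_focal_length(width_px=90, known_distance_m=1.0, real_width_m=0.15)
    head = HeadDetection(center_x=320, width_px=45)  # centred, but about 2 m away
    print(follow_command(head, frame_width_px=640, focal_length_px=f,
                         head_width_m=0.15, target_distance_m=1.0))  # prints "forward"
```

Turning before driving keeps the head near the centre of the frame, where the width measurement is least affected by lens distortion and partial cropping at the edges.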

Another option is to use car-bumper-style parking sensors to know whether the person moved left or right within 9 feet of your robot. This would require a separate controller, such as an Arduino Pro Mini, to handle just the monitoring of this signal. The Arduino could communicate back to the EZ-B via serial. The sensor kit outputs bit-banged serial, which can be decoded to get the distance between the person and each of the four sonar sensors.
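Once the kit's bit-banged output has been decoded (the frame format varies between parking-sensor kits and is not shown here), turning four distances into a left/right decision is small. This is a sketch in Python for readability, assuming the readings arrive already decoded in feet, one per sensor, with None for no echo; the left-to-right mounting order, the direction labels, and the function name are hypothetical. The same logic could be ported to the Arduino.

```python
from typing import Optional, Sequence

MAX_RANGE_FT = 9.0  # detection range mentioned in the post

# Hypothetical mounting order, left to right across the robot's front.
SENSOR_DIRECTIONS = ("left", "ahead", "ahead", "right")


def direction_of_person(
    distances_ft: Sequence[Optional[float]],
    max_range_ft: float = MAX_RANGE_FT,
) -> Optional[str]:
    """Direction of the nearest echo within range, or None if nothing is seen.

    distances_ft holds one already-decoded reading per sensor; None means no echo.
    """
    if len(distances_ft) != len(SENSOR_DIRECTIONS):
        raise ValueError(
            f"expected {len(SENSOR_DIRECTIONS)} readings, got {len(distances_ft)}"
        )
    in_range = [
        (distance, index)
        for index, distance in enumerate(distances_ft)
        if distance is not None and distance <= max_range_ft
    ]
    if not in_range:
        return None
    _, nearest_index = min(in_range)  # ties resolve to the leftmost sensor
    return SENSOR_DIRECTIONS[nearest_index]


if __name__ == "__main__":
    print(direction_of_person([None, None, 8.5, 1.5]))  # prints "right"
```

Returning None when nothing is within range leaves room for the camera to take over, which fits the idea of combining these sensors with a single camera.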

I would think that using this with a single camera could get you pretty far. Just my thoughts on it. I would be very interested in where you take it. If you need anything or have other questions, or just want to bounce ideas off of people, throw your project out here and you will get advice from a lot of really cool people.

Also, when I get to that part of the project (the Kinect), I'll be sure to post where it goes.

Good luck with your project.

You might check out RoboRealm for using the Kinect. It can connect to the Kinect and get this data. This is the route I will probably go down first when using this sensor.

You beat me to it. I was just going to say RoboRealm.

@David.

Your InMoov really is coming along great. I hope you get your second wind and get back to it soon. Can't wait to see how you go about doing the base for it. Awesome work so far.

@Dave S.

I completely agree with you about a next generation of power cells or a "Super Battery" as you called it. This really would be a game changer, not just for robotics, but for anything that needs a mobile power source. We can only hope something like that isn't too far away.