Hello all, I wanted to start my own thread to discuss my InMoov and its conversion to EZ-Robot. I appreciate all the work by the MRL guys, but I struggle with MRL. I am indebted to them for the help they gave me, but I needed something a little further along in development, with some documentation.

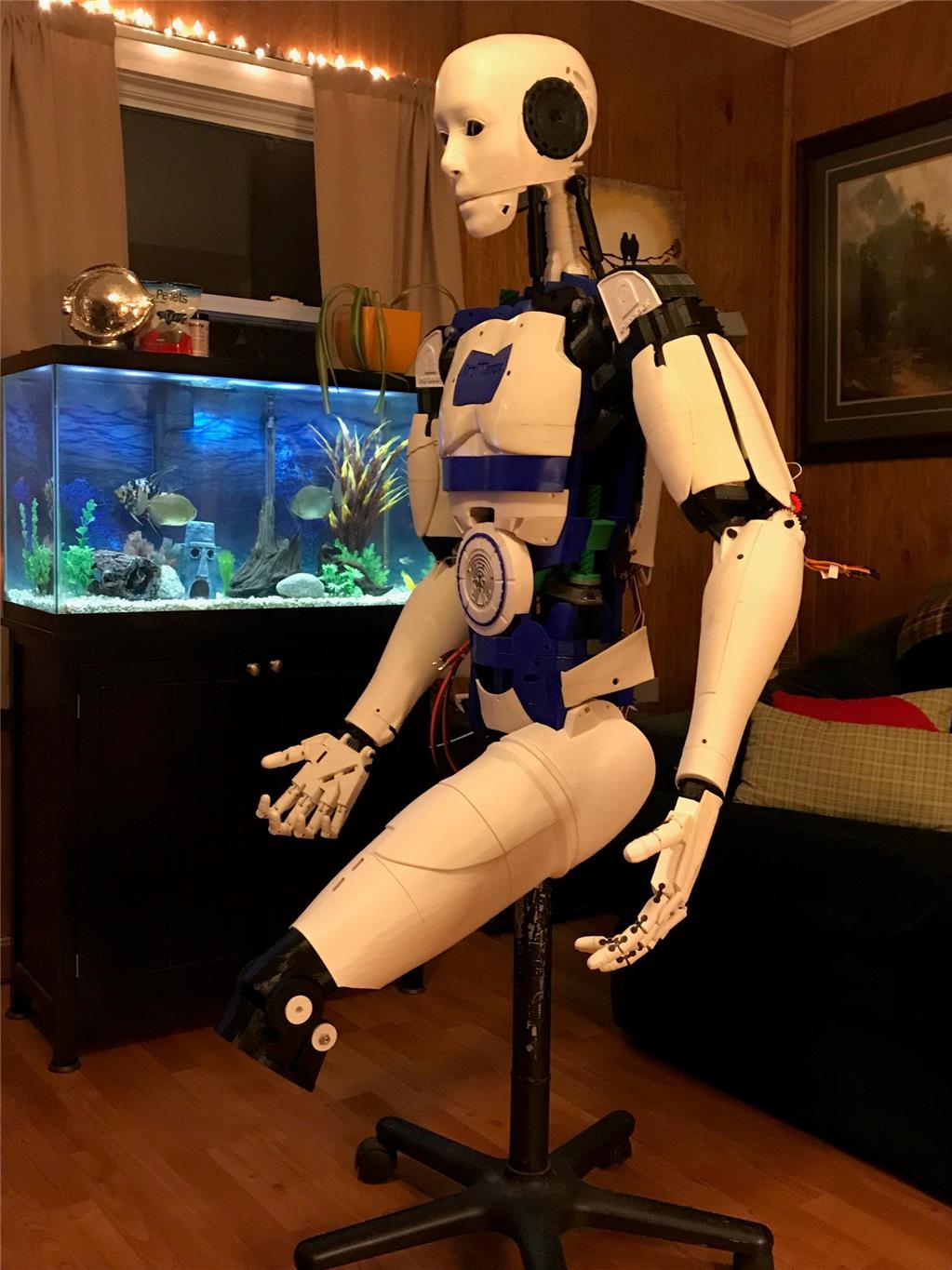

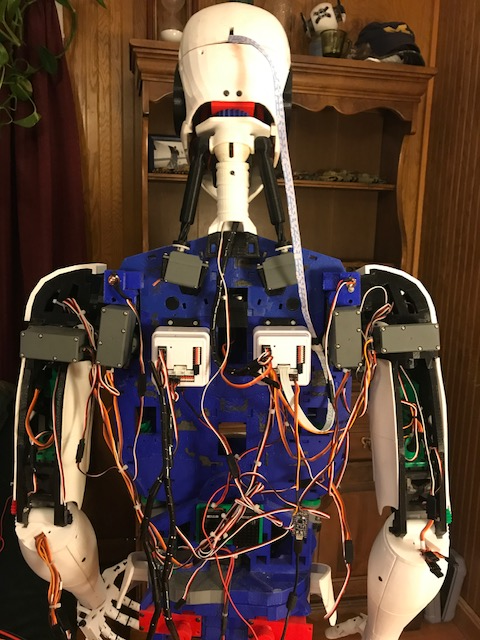

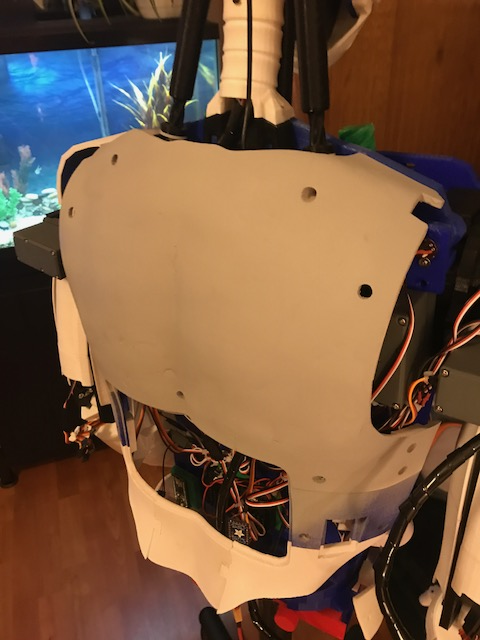

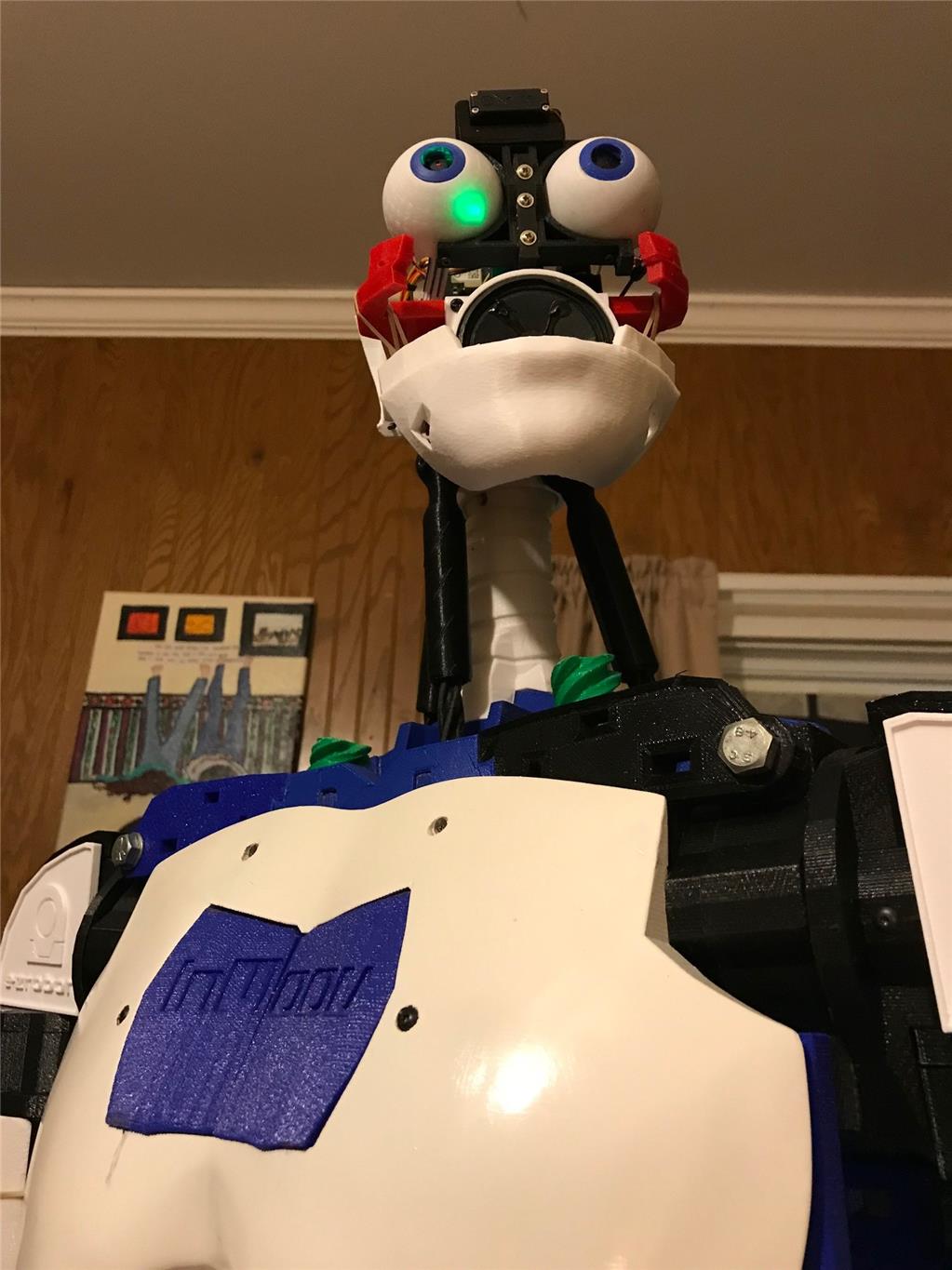

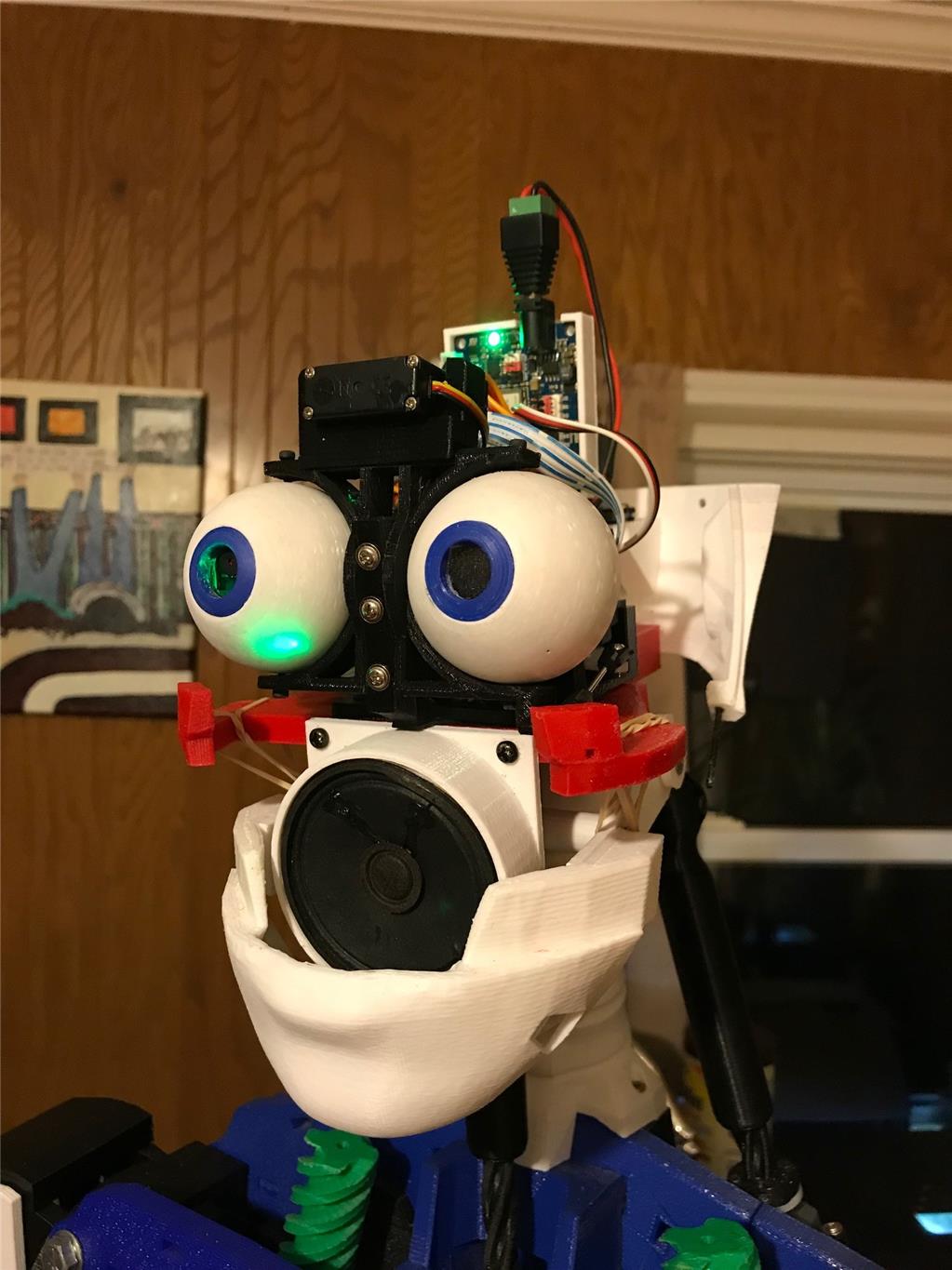

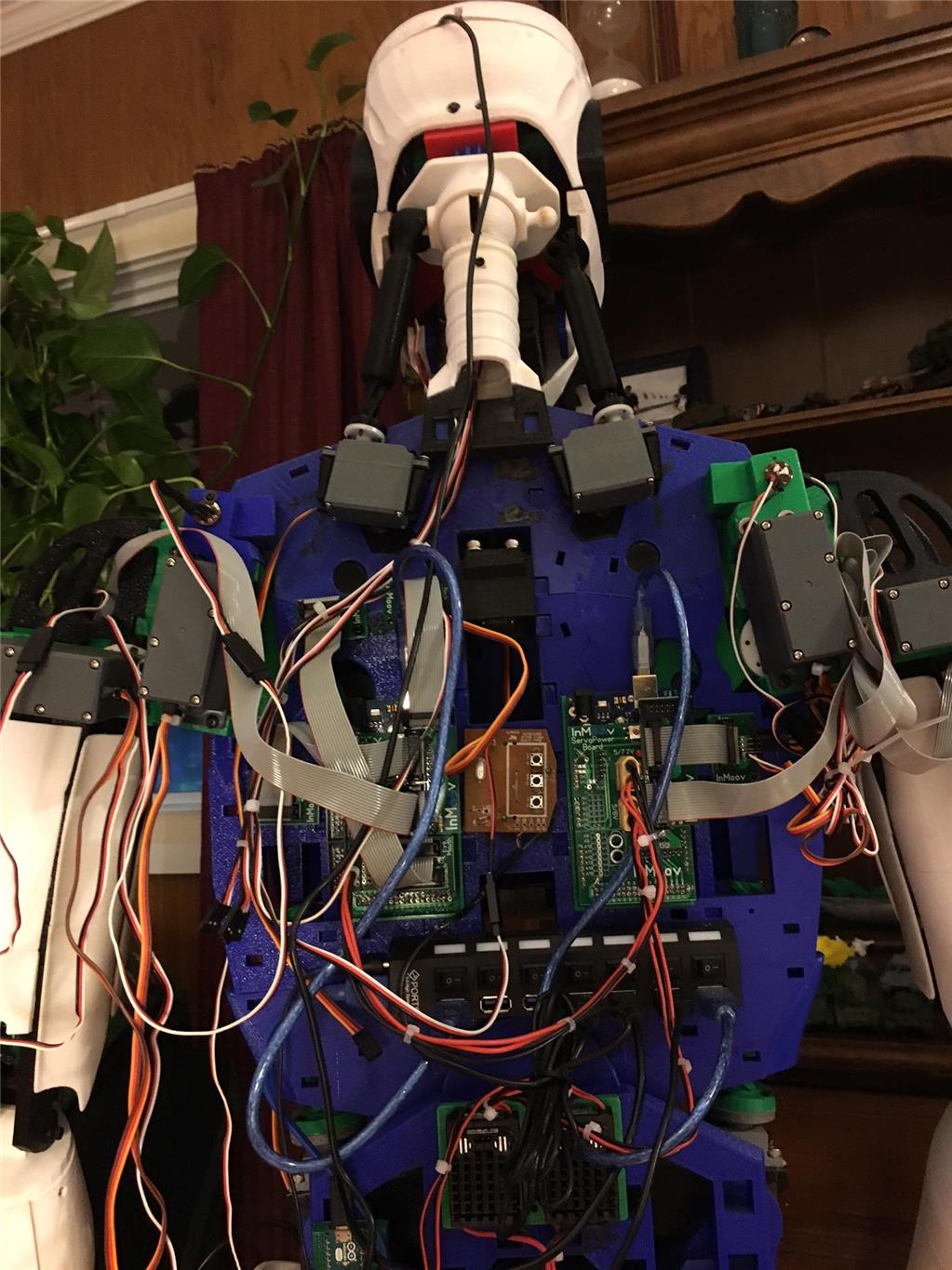

So here is my guy. It's a pretty standard build as far as InMoovs go.

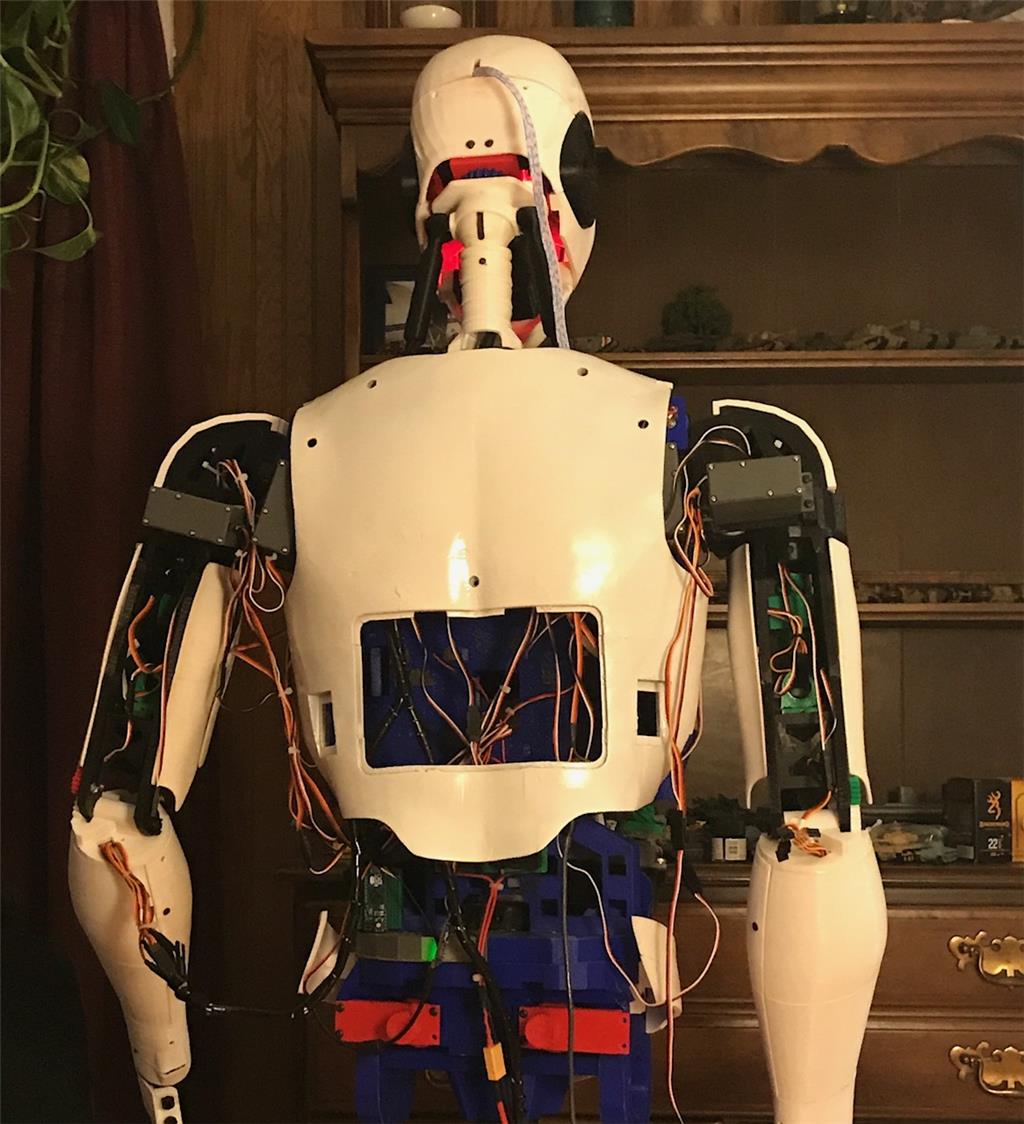

Here's the back, with all the standard InMoov components: dual Arduino Mega 2560s, Nervo boards, a USB hub, power supplies, etc.

So the teardown begins. I need to clean up that wiring too. What a rat's nest!

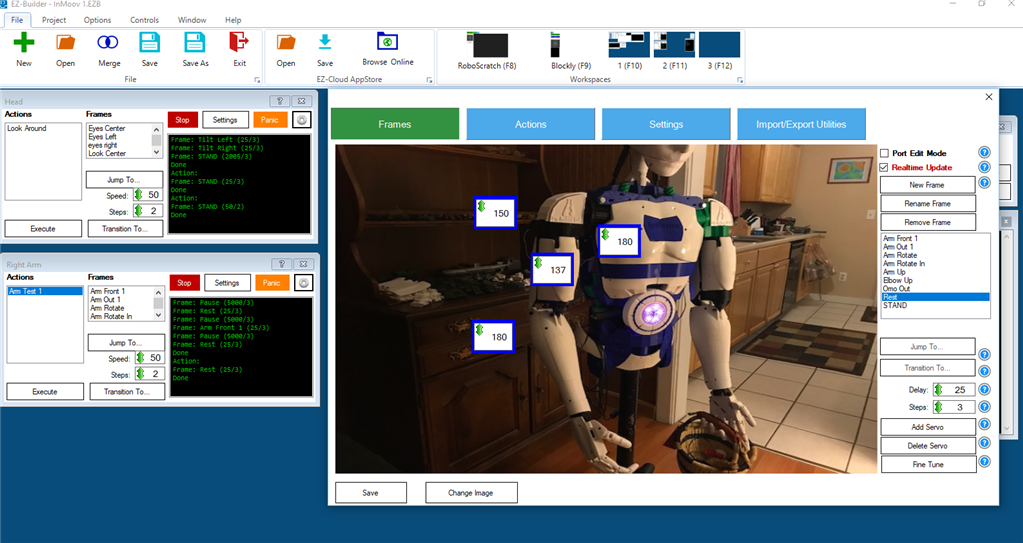

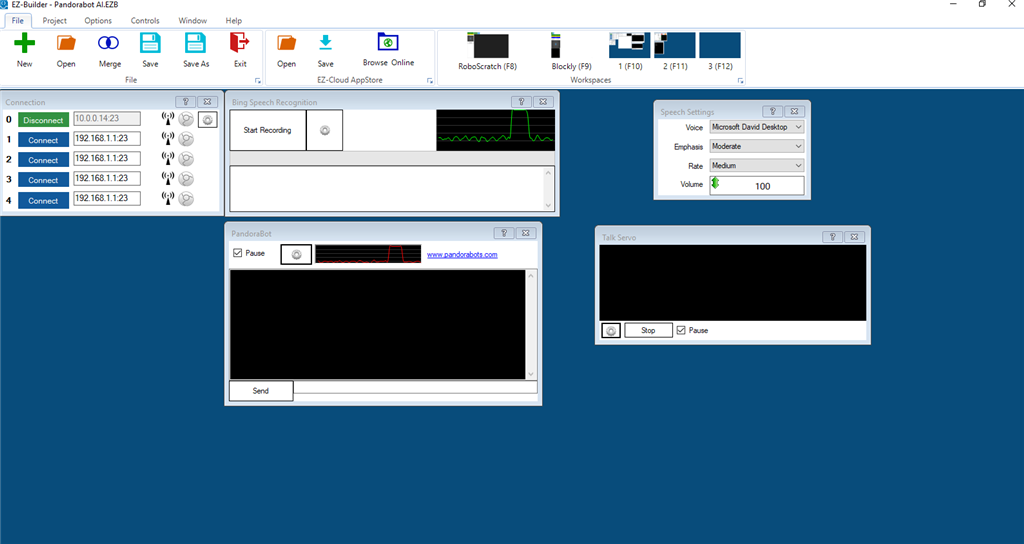

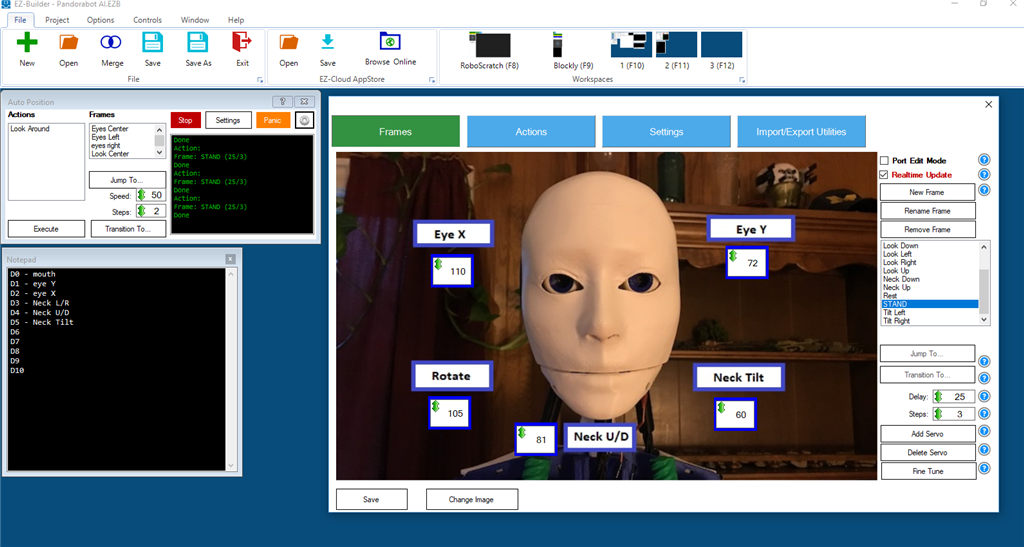

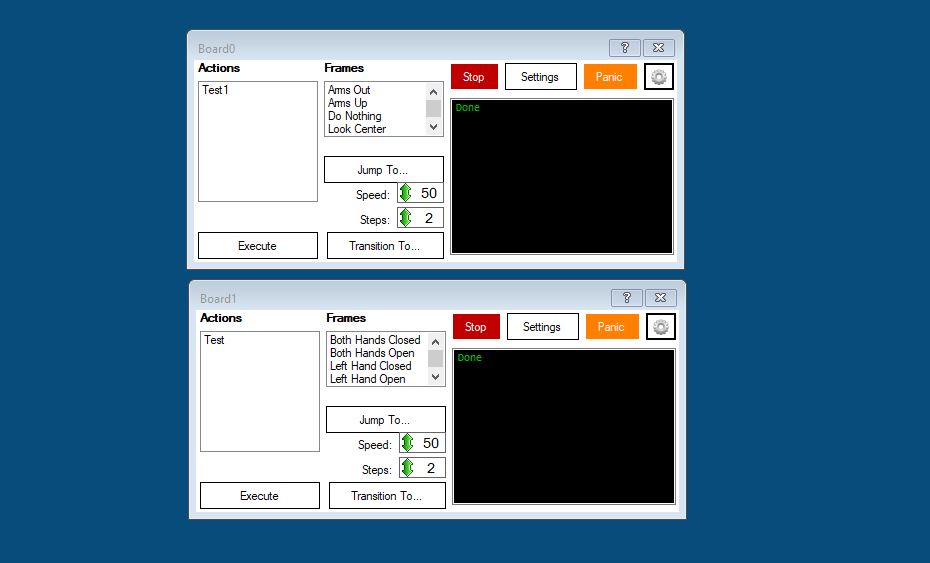

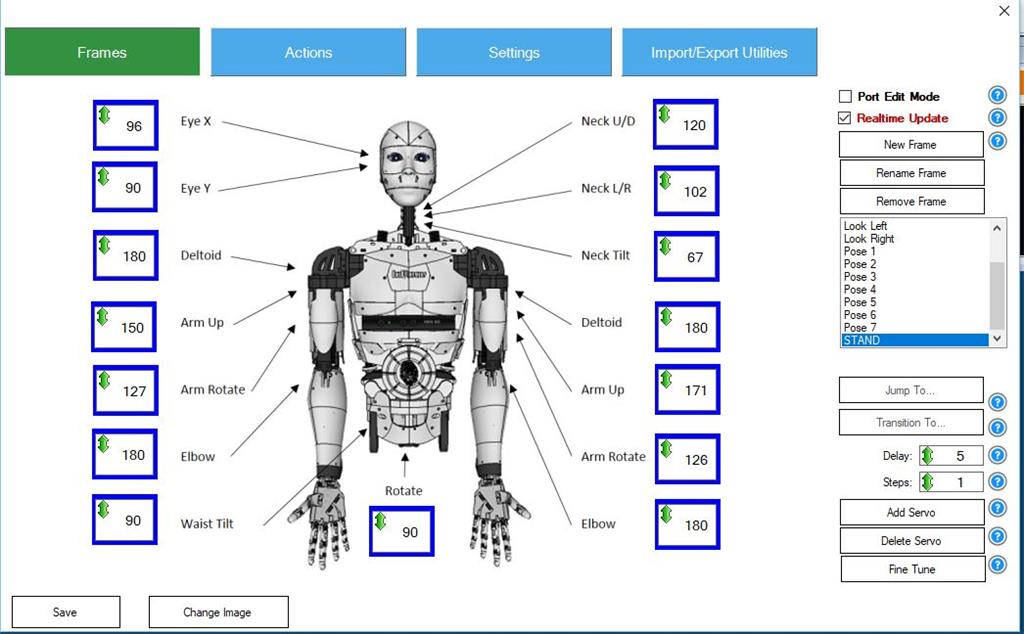

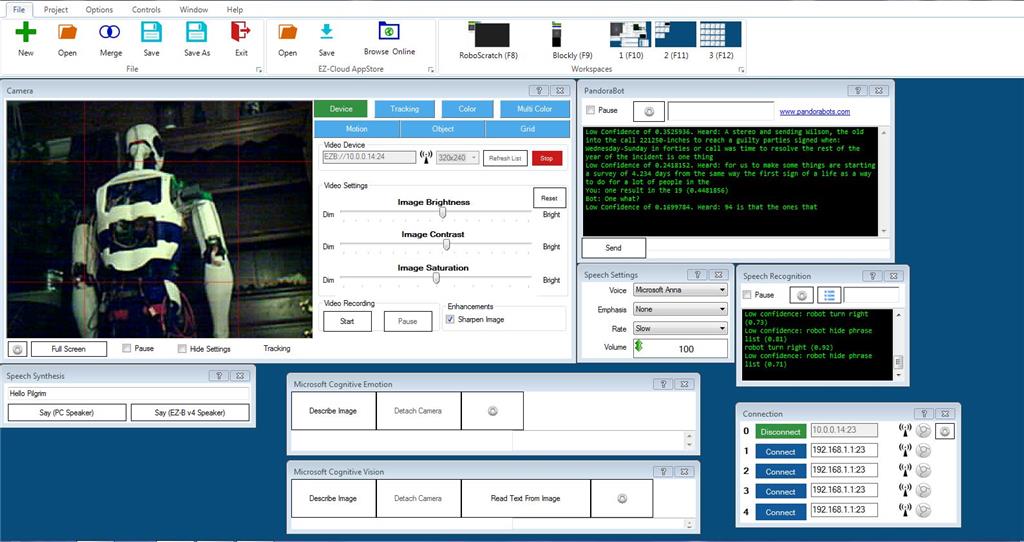

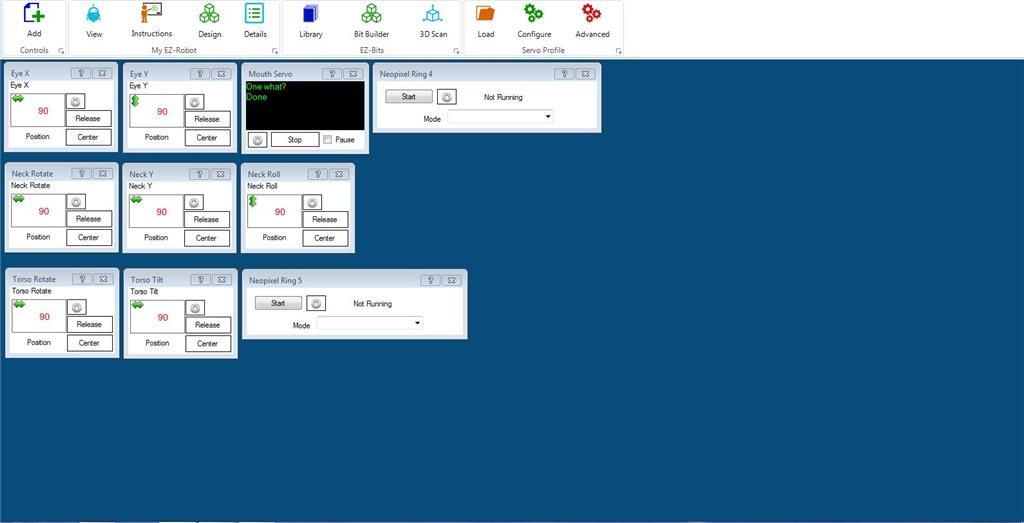

I just received my EZR controller and camera, so I have no idea how to program them yet. I figured I would at least try to set up a GUI that lets me move the servos manually, like the MRL Swing GUI. Five or six hours and a few tutorials later, I have this three-screen layout. I was easily able to add far more than basic servo control.

Here is the main control screen. It contains the face tracking and speech functions, a custom Pandorabot for AI, and some Microsoft Cognitive Services features as well.

Here is the second screen: head functions (a mouth control servo and the neck), the torso, and the two NeoPixel rings I have.

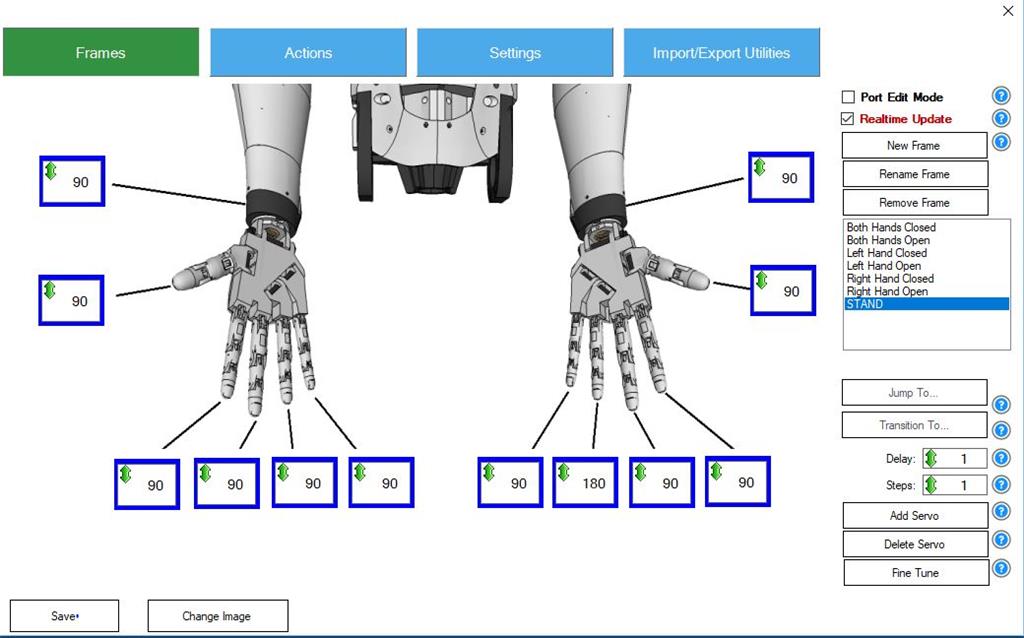

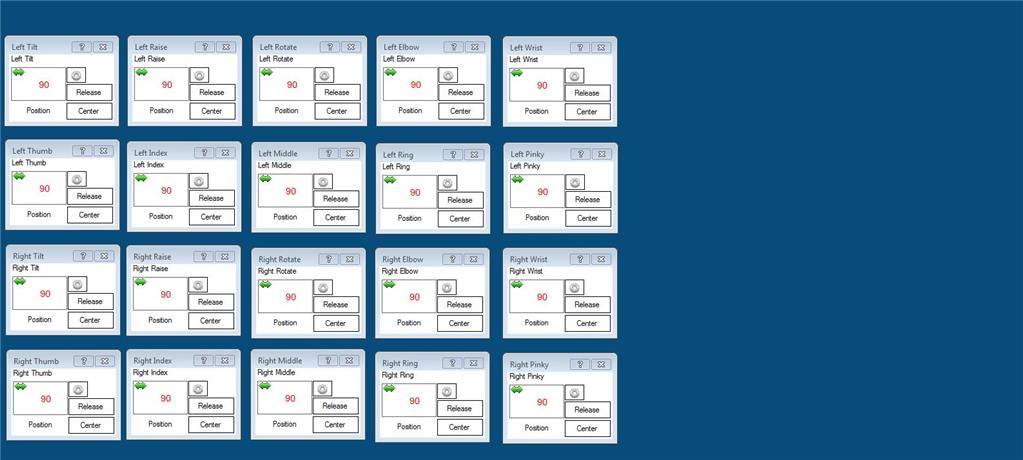

The third screen is for the arms and hands.

So now I have pretty much the same functionality I had in MRL, give or take. I am pretty sure I am not doing this entirely correctly, but it will come with time. Next I need to get into scripting; I gather there are some tutorials to watch. So far my experience has been pretty good, and in one day I eclipsed my MRL progress of the last six months.

I'll update this thread with my progress and appreciate any feedback.

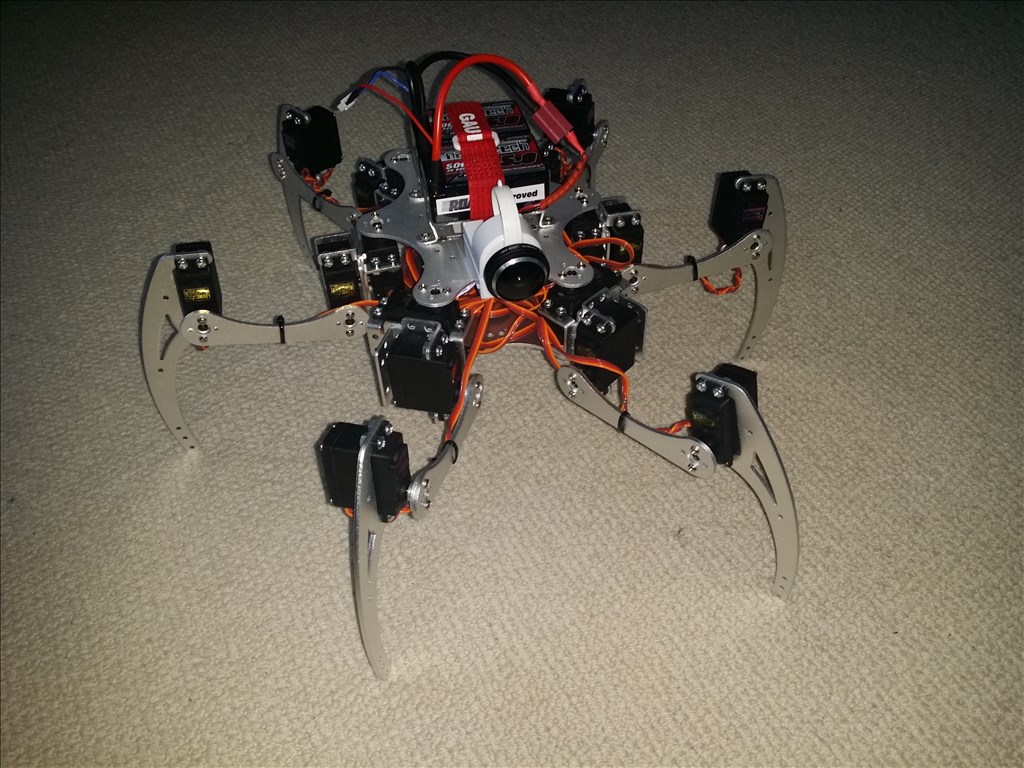

I thought he'd be more into electronic music...

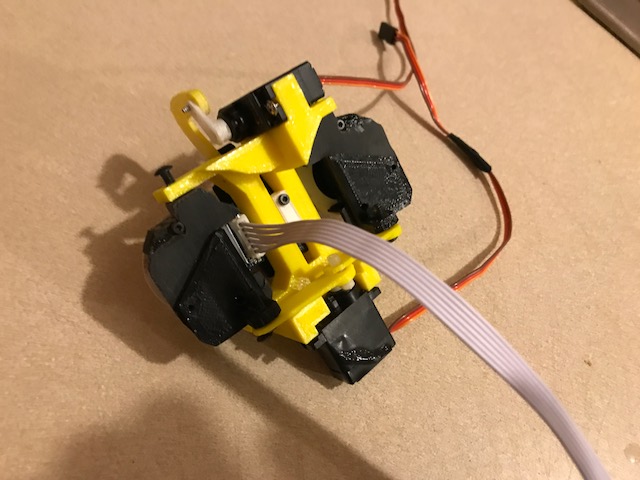

@mayaway I really like that neck. I have thought about trying it out. The operation looks a bit ratchety, though. Are you happy with it? Any progress on the walking?

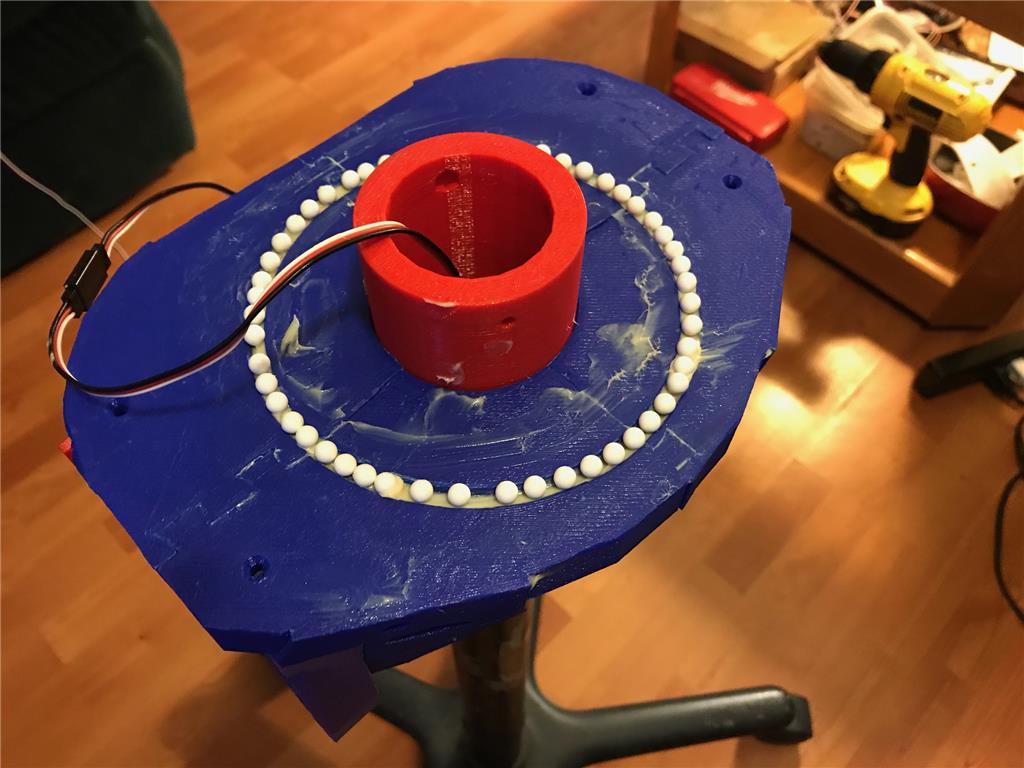

@Perry_S, I love the new neck! I think I need to adjust the timings; I'm using 5,1,1 right now. Installing the new front piece of the torso was fairly easy. For the back part, which comes in four pieces (upper and lower), I only needed the upper two sections, and on those I wound up sawing off the two bars that protrude toward the middle. These skateboard bearings on Amazon work like a charm: https://www.amazon.com/gp/product/B00ZI60WKY/ref=oh_aui_detailpage_o01_s00?ie=UTF8&psc=1

As for progress on walking: first, I got forward and reverse control of each joint (hip flexion/extension, hip abduction/adduction, and knee flexion/extension). I wrote movement scripts for each joint and direction so I could position the joints as desired, firing each motor for 200 ms per script run. This gave me a chance to test the 10K feedback pots, which, as installed, turned out not to be sensitive enough to give me the data I need to accurately register or predict a joint's position. So I'm thinking of installing them directly over the point of rotation, or perhaps using higher-precision pots. I also had one IBT_2 controller firing on only one channel, so I ordered a replacement, which is now installed.
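To put rough numbers on why pot placement matters, here is a minimal Python sketch of the resolution arithmetic. It assumes a 10-bit ADC (1024 counts) spanning the pot's full electrical travel and a nominal 270° pot; both figures are assumptions, not measurements from this build, and the function name is hypothetical.

```python
def degrees_per_count(joint_range_deg, pot_travel_deg, pot_total_deg=270.0, adc_counts=1024):
    """Joint degrees represented by one ADC count (hypothetical helper).

    joint_range_deg: how far the joint itself moves end to end.
    pot_travel_deg: how far the pot shaft turns over that same joint range.
    pot_total_deg: full electrical rotation of the pot (assumed 270 degrees).
    adc_counts: ADC resolution (assumed 10-bit, i.e. 1024 counts).
    """
    # Only this many ADC counts are ever used while the joint sweeps its range.
    used_counts = adc_counts * (pot_travel_deg / pot_total_deg)
    return joint_range_deg / used_counts


# Hypothetical example: a 90-degree knee whose linkage turns the pot only 30 degrees,
# versus a pot mounted on the rotation axis that turns 1:1 with the joint.
geared_down = degrees_per_count(joint_range_deg=90, pot_travel_deg=30)
direct = degrees_per_count(joint_range_deg=90, pot_travel_deg=90)
print(f"{geared_down:.2f} deg/count vs {direct:.2f} deg/count")
```

With these assumed numbers, mounting the pot on the rotation axis gives about a threefold improvement (0.26 vs. 0.79 degrees per count). Electrical noise, pot linearity, and slop in the linkage will also limit what you can actually resolve.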

Another potential way to monitor joint angle could involve multiple MPUs, but I think I'd need to run them through an Arduino, since it seems you can only have one MPU-9150 control in EZ-Builder.
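For the multiple-MPU idea, one common approach is to mount one IMU on each segment (for example, thigh and shank) and take the difference of their tilt angles. The sketch below uses only accelerometer readings, so it assumes the leg is static or moving slowly enough that gravity dominates. It does not use any EZ-Builder API, and the axis convention (sensor X axis along the limb) is an assumption that depends on how each MPU is mounted.

```python
import math


def pitch_from_accel(ax, ay, az):
    """Angle (degrees) of the sensor's X axis above horizontal, from accel readings in g.

    Valid only when gravity dominates (static or slow motion); assumes the
    sensor's X axis points along the limb segment.
    """
    return math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))


def knee_angle(thigh_accel, shank_accel):
    """Relative angle between two segments = difference of their tilts."""
    return pitch_from_accel(*shank_accel) - pitch_from_accel(*thigh_accel)


# Hypothetical readings: thigh level, shank tilted about 45 degrees.
thigh = (0.0, 0.0, 1.0)
shank = (0.7071, 0.0, 0.7071)
print(round(knee_angle(thigh, shank), 1))
```

The MPU-9150 also has a gyro, so fusing gyro and accelerometer data (for example, with a complementary filter) would cope better with faster motion than this accelerometer-only version.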

In my initial static joint-position tests, as I moved each joint, the whole torso of the InMoov would move OVER the legs. Next, I built a script to oscillate a series of movements, including weight shifting of the torso; some additional weight shifting can be achieved by abducting one leg and adducting the other. I messed with that until my brain cramped, then Thanksgiving happened, whereupon I had too much of everything for three days, followed by the requisite bout of gastroenteritis.
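The oscillating weight shift described above can be thought of as a few joints driven by one slow sine wave: the torso leans to one side while the hips move in antiphase (one abducts as the other adducts), then everything reverses. Below is a minimal Python sketch that only generates setpoints; the joint names, amplitudes, period, and sign conventions are hypothetical placeholders, and sending the values to the IBT_2 drivers is left out.

```python
import math


def weight_shift_setpoints(t, period_s=4.0, torso_amp_deg=8.0, hip_amp_deg=5.0):
    """Setpoints (degrees from neutral) for a side-to-side weight shift at time t.

    Hypothetical conventions: positive torso lean = toward the left leg;
    positive hip value = abduction, negative = adduction.
    """
    phase = math.sin(2 * math.pi * t / period_s)
    return {
        "torso_lean": torso_amp_deg * phase,
        # The hips are driven in antiphase: one abducts while the other adducts.
        # Which sign goes with which lean direction must be checked on the robot.
        "left_hip_abduction": -hip_amp_deg * phase,
        "right_hip_abduction": hip_amp_deg * phase,
    }


# Sample one period at quarter-period steps.
for t in (0.0, 1.0, 2.0, 3.0):
    sp = weight_shift_setpoints(t)
    print(t, {k: round(v, 1) for k, v in sp.items()})
```

Keeping all joints on one shared phase variable makes it easy to slow the whole pattern down (a longer `period_s`) while you find how far the torso can shift before the robot tips.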

Then I got excited about this neck and just decided to go ahead with it. Now I'm getting back on track to explore walking some more. The InMoov needs to be able to completely shift its weight to one leg long enough to move the other leg forward, if only in a shuffle. When we walk, we spend a full 60% of the time in single-limb support, in a continual process of falling forward and then stopping our fall.

@mayaway, nice work on that neck; it moves pretty well. I know a few InMoov builders are using that design. I know you have a plan for tracking with three servos, since ARC can only control two servos for tracking purposes. Can you post your ideas? Also, you should start a separate thread with your ideas, designs, and the progress you are making on getting your InMoov to walk. I know a lot of people would be interested in it.

@bhouston Thanks, Bob! I may not need to pursue my plan, as Drupp's code (posted below) from the EZ app store project 'New Neck for Inmoov' may do the trick. At a glance, it looks like he uses the eyes to track, keeps track of the neck servo positions, and nudges the head (within tested limits) to follow the eyes. If the tracked object goes out of range, everything returns to center. Pretty clever! You can load his EZB file and look everything over.
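As I read the description above, the logic is roughly: if the eyes are off center by more than a dead band, step the neck a little in that direction (clamped to its tested limits) so the head gradually follows the eyes; if the target is lost, return to center. Here is a minimal Python sketch of that idea for one axis. It is not Drupp's actual code, and the positions, limits, dead band, and step size are hypothetical values.

```python
# Hypothetical values; substitute the positions and limits tested on your own neck.
CENTER = 90                   # servo center position
NECK_MIN, NECK_MAX = 60, 120  # tested neck limits
DEAD_BAND = 5                 # how far the eyes may drift before the neck moves
STEP = 2                      # neck nudge per update


def update_neck(eye_pos, neck_pos, target_visible):
    """Return the new neck position for one axis after one control update."""
    if not target_visible:
        # Target lost: send the neck back to center.
        return CENTER
    offset = eye_pos - CENTER
    if offset > DEAD_BAND:
        neck_pos += STEP
    elif offset < -DEAD_BAND:
        neck_pos -= STEP
    # Never go past the tested mechanical limits.
    return max(NECK_MIN, min(NECK_MAX, neck_pos))


# Eyes held 20 positions right of center: the neck creeps toward them.
neck = CENTER
for _ in range(10):
    neck = update_neck(eye_pos=110, neck_pos=neck, target_visible=True)
print(neck)  # prints 110
print(update_neck(eye_pos=110, neck_pos=neck, target_visible=False))  # prints 90
```

On the real robot, the eye tracking would pull the eyes back toward center as the head turns, which is what would stop the nudging; that feedback is not simulated here.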

My idea was to read the X/Y outputs from the tracking control, then write equations to convert them into positions for the three Parloma neck servos that achieve the same X/Y result. I did see this code for the Penguin.cpp shoulder:

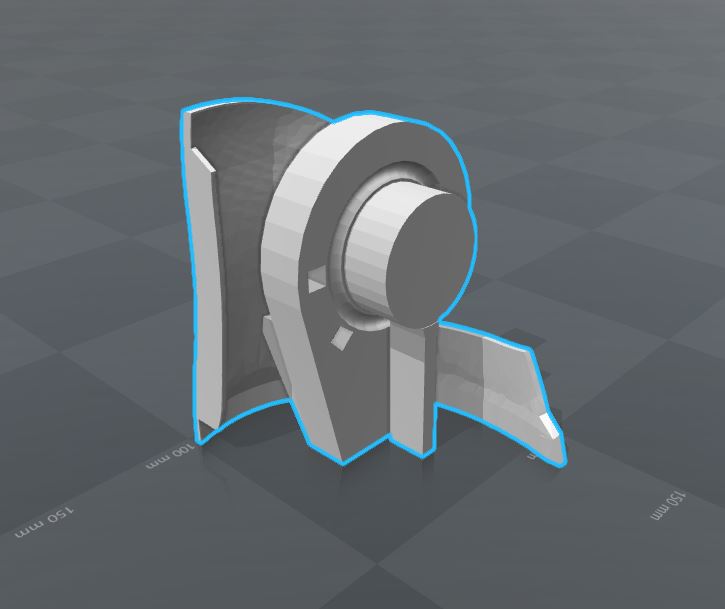

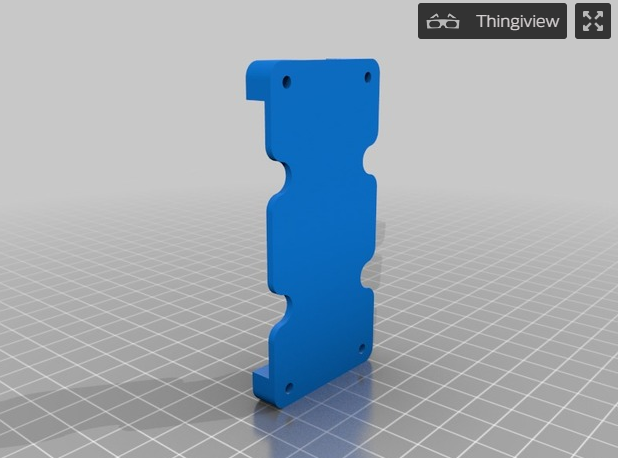

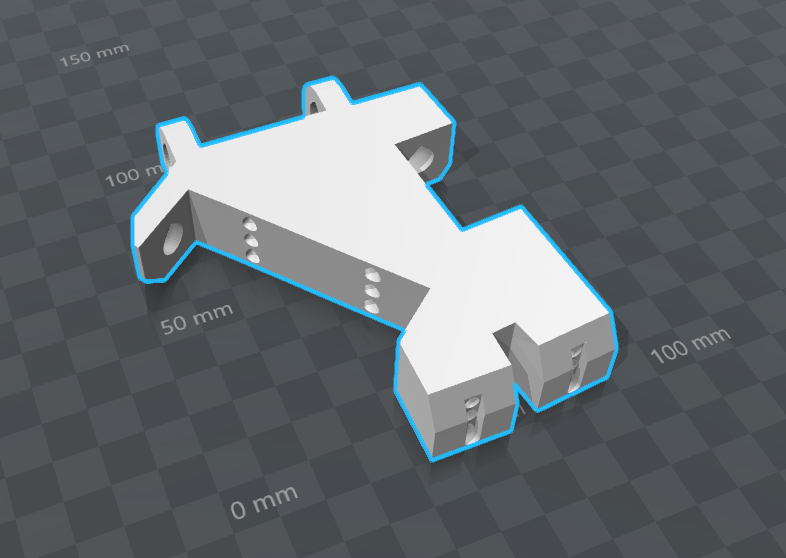

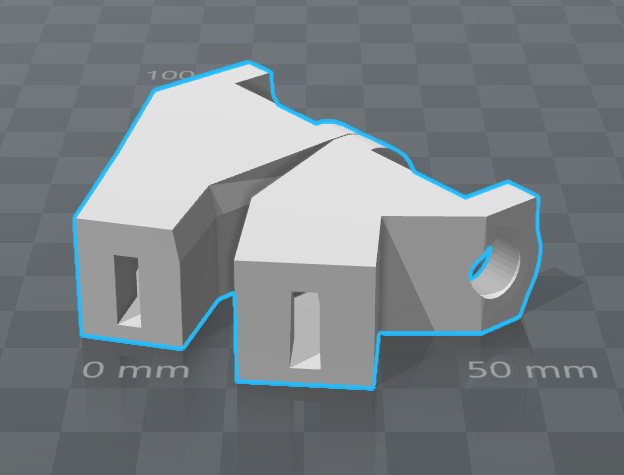

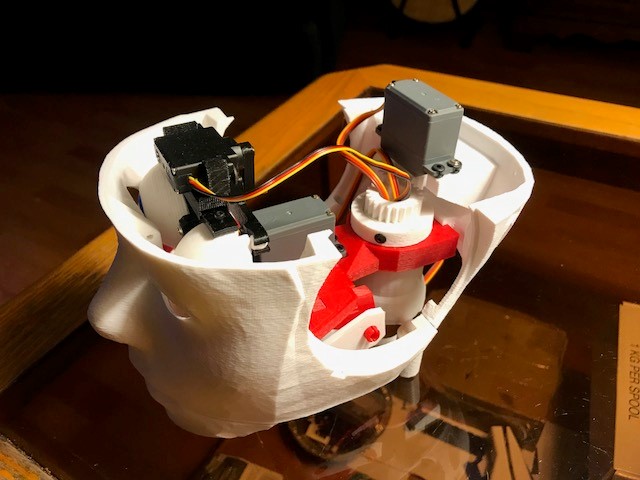

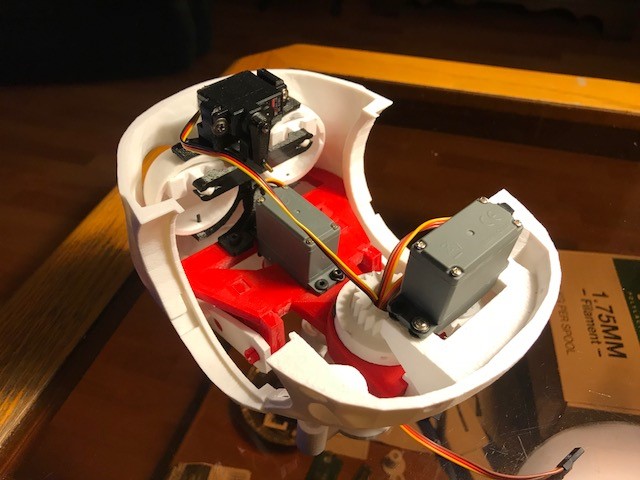

This image shows the positions of Parloma servos D0, D1, and D2 in the mounting frame.
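One way to write the X/Y-to-three-servo equations, under a simplifying assumption: treat the neck as a platform tilted by three servos spaced evenly at 120° around it, and use a small-angle linear mix in which each servo's offset from center is the projection of the desired tilt onto that servo's direction. The 120° spacing, the angles assigned to D0, D1, and D2, the gain, and how the tracking X/Y values map onto tilt are all assumptions for illustration; the real Parloma geometry may need different angles or a full inverse-kinematics solution.

```python
import math

# Hypothetical mounting angles (degrees around the neck axis) for D0, D1, D2.
SERVO_ANGLES_DEG = {"D0": 90.0, "D1": 210.0, "D2": 330.0}
CENTER = 90.0  # hypothetical servo center position
GAIN = 1.0     # servo degrees per unit of tilt command; tune on the robot


def mix_tilt(x, y):
    """Convert a tilt command (x, y), e.g. derived from tracking output,
    into three servo positions using a small-angle linear mix."""
    positions = {}
    for name, angle_deg in SERVO_ANGLES_DEG.items():
        a = math.radians(angle_deg)
        # Projection of the tilt vector onto this servo's direction.
        offset = GAIN * (x * math.cos(a) + y * math.sin(a))
        positions[name] = CENTER + offset
    return positions


print({k: round(v, 1) for k, v in mix_tilt(0.0, 10.0).items()})
```

A side effect of the even spacing is that the three offsets always sum to zero, so the platform tilts about its center rather than rising or sinking as a whole.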

I've updated my project if you guys want to take a look. It might be helpful to see how I laid it out, with an emphasis on chatting using Pandorabot.

Hi @Perry_S, I have the Pandorabot in place too, and created and uploaded an automation.html that calls scripts and custom Movement Panel events based on recognized commands, in addition to just chatting... You must have something similar. Best, Stephen
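A command layer like that can be as simple as checking the recognized phrase against a table of known commands and falling back to chat otherwise. Here is a minimal Python sketch of the pattern. The phrases and handler functions are hypothetical stand-ins for the scripts and Movement Panel events, it does not use any Pandorabots or ARC API, and it is not Stephen's automation.html, just an illustration of the same dispatch idea.

```python
def wave():
    # Hypothetical stand-in for starting a gesture script.
    return "running wave script"


def walk_forward():
    # Hypothetical stand-in for a custom Movement Panel event.
    return "movement panel: forward"


def chat(text):
    # Hypothetical stand-in for sending the text to the chatbot.
    return f"chat: {text}"


COMMANDS = {
    "wave": wave,
    "walk forward": walk_forward,
}


def handle(utterance):
    """Run a matching command, otherwise pass the text through to chat."""
    key = utterance.strip().lower()
    action = COMMANDS.get(key)
    if action is not None:
        return action()
    return chat(utterance)


print(handle("Wave"))
print(handle("How are you?"))
```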

@Perry, thanks for posting this. I gave it a try, but the Pandorabot is very slow to respond, something like 1-2 minutes. I have very slow internet, so I am thinking it's that. Do you get slow responses?