jp15sil24

Wheel Slip Impact On Navigation

I am experimenting with navigation using my WheelBot and am considering modifying it into a telepresence robot. Currently, I'm utilizing a combination of the following ARC skills: Continuous servo Movement Panel, Better Navigator, Joystick, Camera Device, and RPLIDAR (Model C1). I've enabled the RPLIDAR skill parameters: use NMS and fake NMS pose hint event, and I'm using the pose hint source with Hector in the Better Navigator skill.

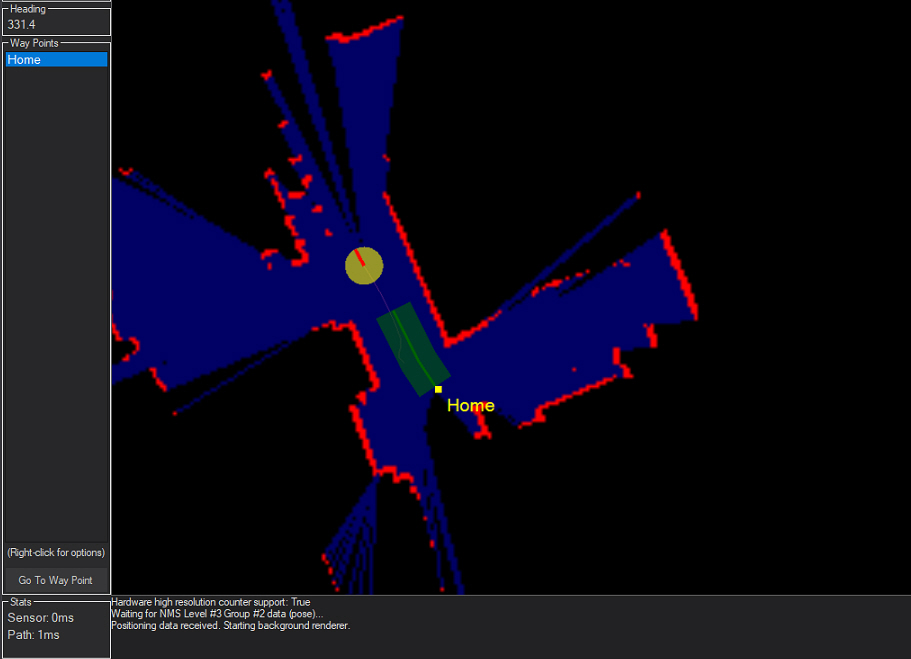

In one of my tests, the robot successfully navigated a 1.5-meter course and returned to its "home" position. However, during a second test, I noticed only half of the return path was visible. Normally, the entire return path should be displayed in green (refer to the LIDAR image below).

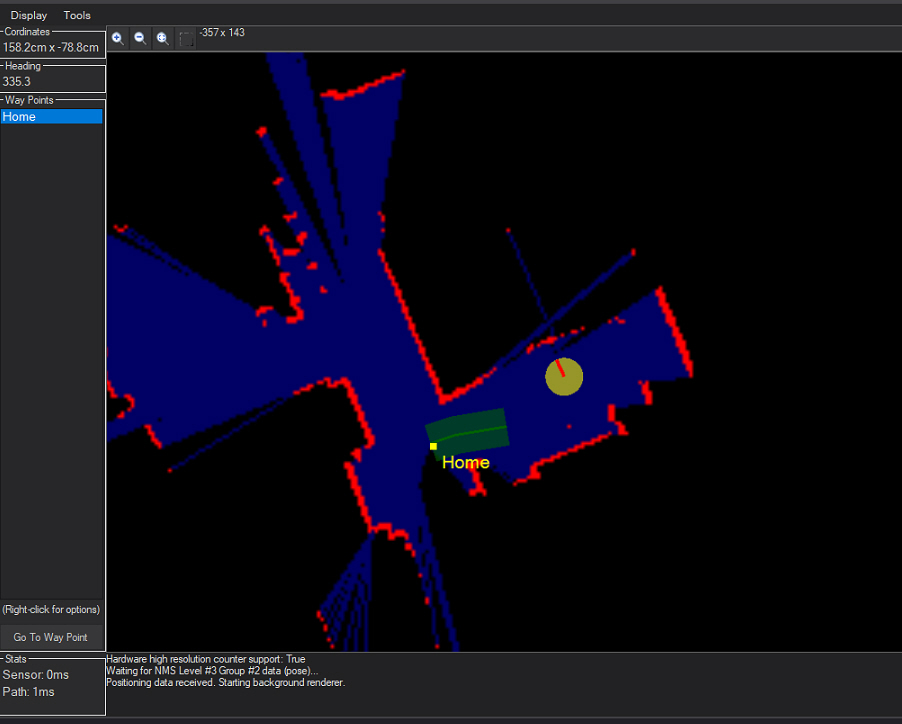

It appears that some information was lost during navigation. In another test, the yellow dot representing the robot was correctly positioned but then suddenly shifted to another location, as shown in the second LIDAR image.

The original position was similar to the photo above (LIDAR Image 1).

I'm not entirely sure if my observations are helpful in explaining this behavior, but I wanted to share them. My floor is tiled, and I've noticed the robot’s wheels sometimes slip, slightly spinning in place. I do not have encoders on the wheels. Could this affect navigation accuracy?

If wheel slippage is influencing navigation, could someone explain how wheels without encoders affect navigation?

If it's not related to the wheels, could you please provide some guidance on how to address this issue? I'm considering adding spikes to the wheels if this might help. Thank you!

Here's why having the lidar mounted in the center and having your drive wheels aligned symmetrically is so important for SLAM and overall navigation:

Consistent Rotation Center:

When the lidar is exactly at the robot’s center of rotation, every time the robot turns, the sensor pivots around its own position. This makes the math behind the SLAM algorithms much simpler because the relative positions of obstacles are measured around a fixed, known point. If the sensor is off-center, it swings through an arc as the robot rotates, so the measured points shift between scans; unless that offset is compensated precisely, the shift shows up as errors in the produced map.

Accurate Pose Estimation:

SLAM algorithms rely heavily on accurate pose estimation (i.e., knowing exactly where the robot is and how it's oriented). When your wheels are perfectly centered front-to-back, the robot rotates on the spot without any translational drift. This means that the lidar data accurately reflects the environment. With an off-center mounting, rotational motion inadvertently introduces translation, causing the map to become distorted or "shifted" - the kind of errors you saw as incomplete tracking.
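To put a rough number on that effect, here is a minimal Python sketch. It is not part of ARC or the Better Navigator skill; the function name `sensor_shift` and its parameters are hypothetical and used only for illustration. It assumes the robot spins perfectly in place and computes how far a sensor mounted away from the rotation center moves during the turn.

```python
import math

def sensor_shift(offset_x, offset_y, turn_deg):
    """Displacement of an off-center sensor when the robot spins in place.

    offset_x, offset_y: sensor position relative to the rotation center (meters).
    turn_deg: how far the robot rotates, counter-clockwise, in degrees.
    Returns (dx, dy, distance) of the sensor's movement in meters.
    """
    t = math.radians(turn_deg)
    # Rotate the mounting offset by the turn angle (standard 2D rotation).
    new_x = offset_x * math.cos(t) - offset_y * math.sin(t)
    new_y = offset_x * math.sin(t) + offset_y * math.cos(t)
    dx = new_x - offset_x
    dy = new_y - offset_y
    return dx, dy, math.hypot(dx, dy)

if __name__ == "__main__":
    # Hypothetical mounting offsets, for illustration only.
    for offset in (0.0, 0.05):
        for turn in (45, 90, 180):
            _, _, dist = sensor_shift(offset, 0.0, turn)
            print(f"offset={offset:.2f} m, turn={turn:3d} deg -> sensor moved {dist:.3f} m")
```

A centered sensor (offset 0) does not move at all, while a sensor mounted 5 cm off-center ends up 10 cm from where it started after a half turn. That is translation the SLAM algorithm has to account for even though the robot never left the spot.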

Simplified Data Processing:

With a centered lidar, you eliminate the need for compensating math in your SLAM algorithm. When the sensor is not centered, the algorithm must incorporate complex calculations to adjust the scan data based on the offset, which can lead to inaccuracies if not precisely calibrated. This is why many robot vacuums and TurtleBot-style platforms position their sensors at the center.
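The compensating math looks roughly like the following Python sketch. This is an illustration under stated assumptions, not how Better Navigator or Hector SLAM is implemented: the function `scan_point_to_world` and all of its parameters are hypothetical, and it assumes the lidar's 0-degree beam points along the robot's forward direction.

```python
import math

def scan_point_to_world(robot_x, robot_y, heading_deg,
                        mount_x, mount_y, beam_deg, range_m):
    """Convert one lidar reading into world coordinates.

    robot_x, robot_y, heading_deg: robot pose in the world (meters, degrees).
    mount_x, mount_y: lidar position relative to the rotation center,
                      in the robot's own frame (x forward, y left), meters.
    beam_deg, range_m: beam angle in the lidar frame and measured distance.
    """
    h = math.radians(heading_deg)
    # Step 1: where is the sensor in the world? Rotate the mounting
    # offset by the robot heading and add it to the robot position.
    sensor_x = robot_x + mount_x * math.cos(h) - mount_y * math.sin(h)
    sensor_y = robot_y + mount_x * math.sin(h) + mount_y * math.cos(h)
    # Step 2: project the beam outward from the sensor position.
    a = h + math.radians(beam_deg)
    return (sensor_x + range_m * math.cos(a),
            sensor_y + range_m * math.sin(a))

if __name__ == "__main__":
    # Same robot pose and same reading, with and without a mounting offset.
    centered = scan_point_to_world(0.0, 0.0, 90.0, 0.0, 0.0, 0.0, 1.0)
    offset = scan_point_to_world(0.0, 0.0, 90.0, 0.10, 0.0, 0.0, 1.0)
    print("centered lidar:", centered)
    print("lidar 10 cm forward of center:", offset)
```

With a centered lidar, `mount_x` and `mount_y` are zero and step 1 disappears. With an offset, any error in the measured mounting position or in the heading estimate feeds directly into every mapped point.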

Reliable Return-to-Home and Navigation:

When the geometric relationships between the robot’s chassis, drive wheels, and lidar are accurately maintained, navigation functions such as return-to-home become much more reliable. Any misalignment can manifest as unexpected zigzagging or erratic movement because the mapping of real-world coordinates to your robot’s SLAM-derived positions is off.

In summary, centering the lidar and ensuring that your wheels allow for pure rotation benefits the SLAM process by keeping scan data consistent and minimizing the transformation errors the algorithms must correct. This leads to better maps, more accurate navigation, and more reliable autonomous behavior.

Does this clarify the importance of sensor and wheel alignment for your platform? Let me know if you’d like more details or have additional questions on optimizing your robot’s configuration!

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

Thanks for the explanation. I learn something every day.

I like hearing that! Means Synthiam's working!

I just got back from a school with grade 6 students. Boy, they surprised me so much. Before I even started talking and the students saw the robots, they had their hands up. They asked questions like, "Do those robots have speech recognition?" and "What do you program in?" - all sorts of technical questions! I was blown away. The stuff that adults are learning today, kids already know.

Yes, and they learn fast. There is a huge amount to learn with Synthiam. We're not stuck in a daily routine.

I modified the design; it looks like the TurtleBot 3 now. It can turn on the spot, almost perfectly. The T265 is placed above the LIDAR. What’s strange is that the RPLIDAR skill doesn’t display anything, and the real-time values are all at 4000. I remember seeing in previous tests that these values would normally change - I must have forgotten something again, but I can’t figure out what. The navigator displays the room. Here is a picture. I’ll keep running tests, but maybe I have a problem with the RPLIDAR!

Version 25 of the RPLIDAR robot skill has a fix for the UI render. The previous version appeared to have a bug when enabling the UI render. The UI render can be disabled to save CPU time; it is usually left disabled and used only for debugging purposes.

Hi Synthiam Team,

Unfortunately, I still have no good news - I'm continuing to experience issues with navigation.

My first design was completely off, so I rebuilt the system using a TurtleBot 3 chassis with two 360 servos, an RPLIDAR placed in the middle, and a T265 camera mounted 20 cm higher and 5 cm behind the center. The robot moves well mechanically, but the navigation is very poor.

I tried many combinations:

- RPLIDAR + T265
- RPLIDAR only
- Navigator set to Hector, External, Fused, Dynamic
- Disabling the Navigator and reinstalling it

With short, straight movements, the robot can return to its starting point. But with longer paths (e.g. 3 meters), it fails. It might turn left or right randomly, stop after 10-30 cm, or display a return path that’s completely outside the room.

Since I couldn’t get it working, I decided to switch to a Roomba 600 chassis. I bought a used one in good condition and modified it using the SCI cable and H/W UART. I followed the Synthiam video on connecting Roomba to EZB via H/W UART 1.

Using the iRobot Roomba Movement Panel skill, I can control the robot fine: sending init, powering on/off, and receiving sensor streaming data. I also installed a camera, and color/object tracking works well. I decided to try using the Better Navigator this time.

I followed the procedure described in the Roomba Movement Panel skill exactly:

1. Enable "Send position to NMS" in the skill config
2. Connect the Roomba and enable sensor streaming (data is received)
3. Press reset/power off (to reset wheel encoders and set home position)
4. Place the Roomba on the ground (this is position 0,0)
5. Press the clean button (light comes on)
6. Press init and enable sensor streaming again

Up to this point, everything looks good.

Then I started the T265 and the RPLIDAR. Here’s where things get strange:

If I use pose hints like External, Average, Fused, or Dynamic, the yellow dot (representing the robot position) becomes unstable - it either spins on the spot or jumps to random positions. It only looks somewhat better when I select "Hector" and "Fake NMS" in the RPLIDAR skill.

I tried a 1.5-meter run and then asked the robot to return home. It couldn’t - it stayed in place, without showing any error. Sometimes I see the message: "Busy... Skipping location scan event (if unsure, ask about this on the forum)"

I also tested with just the RPLIDAR (without T265), and it wasn’t any better. Manual control works fine. Mapping looks good, and obstacles appear in the right places, so the RPLIDAR seems to be working. I tested the T265 using Intel RealSense Viewer, and it looks fine as well.

This is disappointing for my telepresence robot project. I was quite confident that the Roomba setup would finally work.

Maybe I’m missing something, but after many hours of testing and trying different configurations, I’m out of ideas.

Any help or suggestions would be greatly appreciated. Here are 3 pictures.

Do you have a photo of the robot so I can see the configuration?

Also, turn on the lidar UI render to confirm that the lidar is facing the same direction as the robot's heading. The first photo looks great, but I can't quite figure out what's happening with the others, specifically the last.

Is the robot moving very slowly with these settings...

Also verify, by enabling the UI render, that the lidar render is facing the correct direction.