jp15sil24

Wheel Slip Impact On Navigation

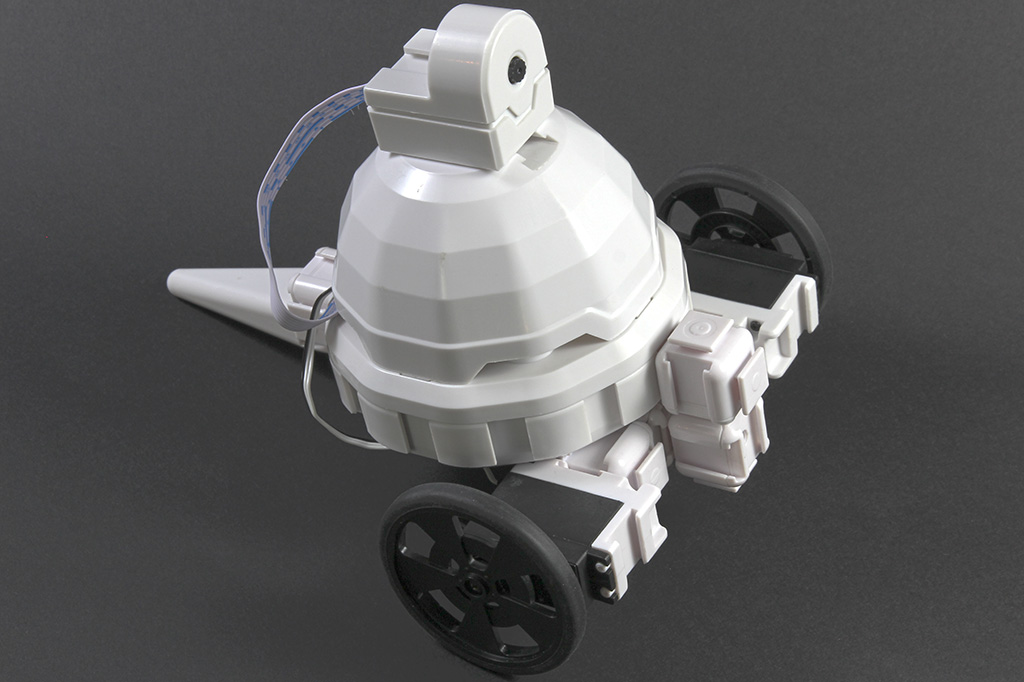

I am experimenting with navigation using my WheelBot and am considering turning it into a telepresence robot. Currently, I'm using a combination of the following ARC skills: Continuous Servo Movement Panel, Better Navigator, Joystick, Camera Device, and RPLIDAR (Model C1). In the RPLIDAR skill I've enabled the "use NMS" and "fake NMS pose hint event" parameters, and in the Better Navigator skill I've set the pose hint source to Hector.

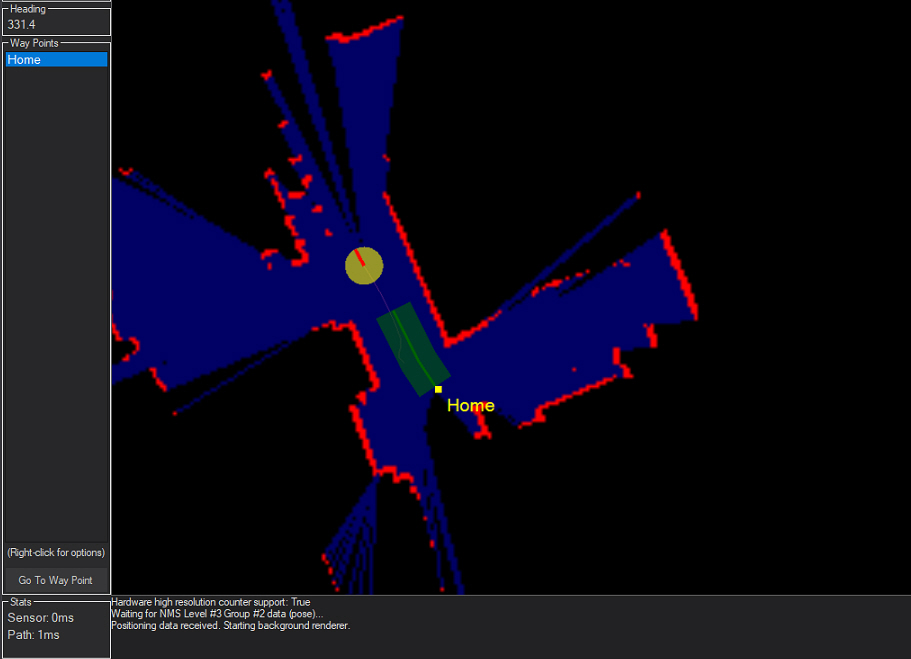

In one of my tests, the robot successfully navigated a 1.5-meter course and returned to its "home" position. However, during a second test, I noticed only half of the return path was visible. Normally, the entire return path should be displayed in green (refer to the LIDAR image below).

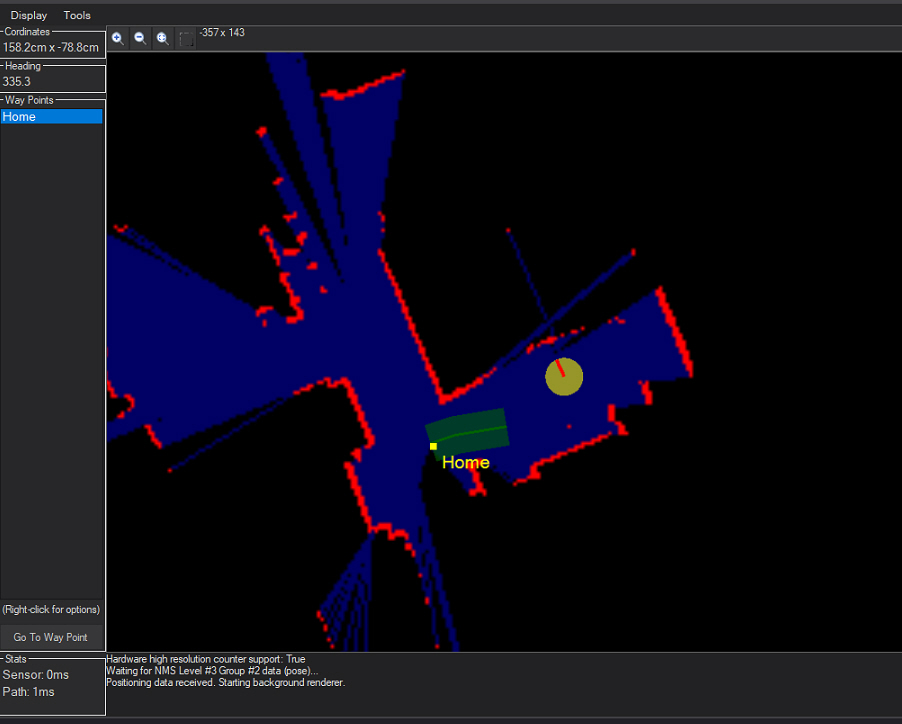

It appears that some information was lost during navigation. In another test, the yellow dot representing the robot was correctly positioned but then suddenly shifted to another location, as shown in the second LIDAR image.

The original position was similar to the photo above (LIDAR Image 1).

I'm not entirely sure if my observations are helpful in explaining this behavior, but I wanted to share them. My floor is tiled, and I've noticed the robot’s wheels sometimes slip, slightly spinning in place. I do not have encoders on the wheels. Could this affect navigation accuracy?

If wheel slippage is influencing navigation, could someone explain how wheels without encoders affect navigation?

If it's not related to the wheels, could you please provide some guidance on how to address this issue? I'm considering adding spikes to the wheels if this might help. Thank you!


Below is an explanation and some suggestions regarding wheel slip and how it can affect navigation accuracy in your ARC project.

How Wheel Slip Affects Navigation

Lack of Accurate Odometry Feedback

Without wheel encoders, the robot cannot accurately measure how much each wheel has rotated. In many navigation systems, the odometry (dead-reckoning data) is used either directly or as a pose hint to refine the robot’s position. When your wheels occasionally slip on the tiled floor, the expected movement does not match the actual travel distance. This results in incorrect pose estimates.
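To illustrate how odometry without encoders drifts, here is a minimal Python sketch. It assumes time-based dead reckoning (the estimate simply integrates the commanded speed), a robot driving straight at 0.10 m/s for 15 s, and a constant 20% loss of motion to wheel slip. All names and numbers are hypothetical; this is not taken from ARC.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians


def integrate(pose: Pose, v: float, omega: float, dt: float) -> Pose:
    """Advance a differential-drive pose by one time step (simple Euler integration)."""
    return Pose(
        x=pose.x + v * math.cos(pose.theta) * dt,
        y=pose.y + v * math.sin(pose.theta) * dt,
        theta=pose.theta + omega * dt,
    )


def simulate(commanded_v: float, duration: float, dt: float, slip_fraction: float):
    """Drive straight and return (estimated, actual) poses.

    The estimate assumes the commanded speed is always achieved (no encoders);
    the actual pose loses `slip_fraction` of the motion to wheel spin.
    """
    estimated, actual = Pose(), Pose()
    steps = int(round(duration / dt))
    for _ in range(steps):
        estimated = integrate(estimated, commanded_v, 0.0, dt)
        actual = integrate(actual, commanded_v * (1.0 - slip_fraction), 0.0, dt)
    return estimated, actual


est, act = simulate(commanded_v=0.10, duration=15.0, dt=0.1, slip_fraction=0.2)
print(f"estimated x = {est.x:.2f} m, actual x = {act.x:.2f} m, error = {est.x - act.x:.2f} m")
```

Under these assumptions the estimate reports 1.5 m while the robot actually covered 1.2 m, and the error keeps growing with distance travelled, which is why an uncorrected odometry estimate is a poor pose hint when wheels slip.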

Impact on Pose Estimation and SLAM

In your setup, you are using the RPLIDAR with the "fake NMS pose hint event" and are relying on the Hector SLAM data as the source for the pose hint in The Better Navigator. When there is wheel slippage, the expected movement of the robot may not be clearly represented in the LiDAR scan matching. This can manifest as the symptoms you described: an incomplete return path and sudden jumps in the robot's position.

Using Fake Pose Hint with Hector SLAM

Enabling the "fake NMS pose hint event" allows Hector SLAM to generate pose hints in environments where there is no external odometry. However, if your robot is experiencing wheel slippage, even Hector SLAM may struggle to create a continuous or reliable map because the actual movement isn’t being reported accurately.

Suggestions to Improve Navigation Accuracy

Improve Traction

Enhance Odometry

Tune Your Navigation Parameters

Next Steps

Do these suggestions align with what you were thinking, or would you like further details on any of the adjustments? Feel free to ask if you need more clarification or step-by-step guidance on configuring any of these features.

For more details on The Better Navigator or general navigation tips, please visit:

The Better Navigator Manual

And for hardware support on AdventureBot, see:

AdventureBot Hardware Support

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

The image looks like the robot is moving too quickly. The lidar spins and grabs frames, and the SLAM requires several frames to build confidence in its map. The robot must move very slowly; it's not a race. Try again moving super slowly, and you'll see it work flawlessly.


Wheel slippage has nothing to do with this if you're not using wheel encoders. With the fake hint, the SLAM system uses the map to identify its position.

If the robot is skipping to a different location on the map, it's because the map is incomplete. If the map is incomplete, it's because the SLAM did not get enough frames. If the SLAM did not get enough frames, it's because the robot is moving too quickly.
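The chain of reasoning above comes down to how far the robot moves between consecutive lidar scans. The Python sketch below does only that arithmetic. It assumes a scan rate of about 10 Hz (check the datasheet for your lidar model) and two hypothetical speed settings; it does not model Hector SLAM or The Better Navigator.

```python
SCAN_RATE_HZ = 10.0  # assumption: typical RPLIDAR scan rate; verify for your model


def motion_per_scan(linear_speed_m_s: float, angular_speed_deg_s: float, scan_rate_hz: float):
    """Return (translation in cm, rotation in degrees) between consecutive scans."""
    dt = 1.0 / scan_rate_hz
    return linear_speed_m_s * 100.0 * dt, angular_speed_deg_s * dt


def scans_over(distance_m: float, linear_speed_m_s: float, scan_rate_hz: float) -> float:
    """Approximate number of scans captured while covering `distance_m` at constant speed."""
    travel_time_s = distance_m / linear_speed_m_s
    return travel_time_s * scan_rate_hz


# Hypothetical "slow" and "fast" settings: (linear m/s, turning deg/s)
for v, w in [(0.05, 15.0), (0.30, 90.0)]:
    cm, deg = motion_per_scan(v, w, SCAN_RATE_HZ)
    n = scans_over(1.5, v, SCAN_RATE_HZ)
    print(f"v={v:.2f} m/s, w={w:.0f} deg/s -> {cm:.1f} cm and {deg:.1f} deg per scan, "
          f"about {n:.0f} scans over 1.5 m")
```

At the slow setting the robot moves about 0.5 cm and 1.5 degrees between scans and collects roughly 300 scans over 1.5 m; at the fast setting each scan is 3 cm and 9 degrees further along, with only about 50 scans over the same distance. As a rough rule, less motion per scan means more overlap between consecutive scans for the scan matcher to work with.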

Below is an explanation that expands on DJ_Sures’ observations and some additional suggestions for your setup:

Key Points Raised by DJ_Sures:

Robot Speed and Frame Capture:

The behavior you’re observing (such as the incomplete return path and sudden jumps in position) appears to be caused by the robot moving too quickly. The RPLIDAR needs to capture several frames for the SLAM system (using Hector via the fake pose hint) to build a reliable map. When the robot moves too fast, the LIDAR doesn’t capture enough data, resulting in an incomplete map or abrupt shifts in the robot’s perceived position.

Wheel Encoders and Fake Pose Hint:

When using fake pose hints, the system relies solely on the SLAM scans to update the map. Without wheel encoders or another form of external odometry, any discrepancy is due to frame capture issues (i.e., the robot’s speed) rather than wheel slip.

Additional Suggestions to Address the Issue:

Reduce Your Robot's Speed:

Monitor LIDAR Scanning Performance:

Review and Tune SLAM Settings:

Test Incrementally:

Conclusion:

Based on DJ_Sures’ input and your own observations, the inconsistent mapping is most likely due to the robot moving too quickly for the SLAM algorithm to gather sufficient frame data. Working at a slower pace should help produce a more continuous and reliable map. Wheel slippage isn’t a contributing factor here if you're not using wheel encoders.

Please let me know if you’d like assistance with adjusting any specific ARC settings or if you have any further questions regarding your navigation setup. For detailed guidance, you might also review the following resources:

Feel free to ask if you need further clarification!


Great, and thanks for the explanations. Even if wheel slip is not the problem, I have to improve the traction, maybe with bigger wheels or a different material. I will lower the speed as much as possible and check the parameters.

I modified my Wheelbot by placing the drive wheels at the front instead of the back. I thought this would improve traction. TurtleBot uses this type of drive. There is a bit less slipping, but it's still not optimal. I really need to change something, maybe a lighter battery or different wheel materials. I'll have to see.

I noticed something: if the robot moves normally, without jerks due to slipping, the return-to-home tracking in Better Navigator is correct. However, when the robot moves, slips, moves again, and slips again, the tracking is incomplete: part of the green tracking line is missing. The movement speed is very slow, about 20 cm in 3.5 seconds.
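For reference, the speed quoted above works out as follows. The scan rate f_scan of about 10 Hz is an assumption for illustration, not a value measured on this setup, and the result is an average over the 3.5 s that does not capture the momentary jerks described above.

```latex
\begin{aligned}
v &= \frac{20\ \text{cm}}{3.5\ \text{s}} \approx 5.7\ \text{cm/s} \\
\Delta d_{\text{scan}} &= \frac{v}{f_{\text{scan}}} \approx \frac{5.7\ \text{cm/s}}{10\ \text{Hz}} \approx 0.57\ \text{cm per scan}
\end{aligned}
```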

Another observation: two or three times, when I requested a return to the home position, the robot started zigzagging, moving very little to the left and right before stopping. I was testing with small movements, around 1.5 meters.

I’m using my Wheelbot as a prototype to develop a telepresence robot. I want to make sure everything works correctly before creating a finalized product, and since navigation is quite important for a telepresence robot, I need it to be reliable.

I have the same setup as DJ in his video "Add Lidar Navigation to a Robot," except that I'm using the RPLIDAR C1 model and an SBC running Windows 11.

Is your lidar directly in the center of the robot chassis? Also, the wheels must be positioned so the robot rotates exactly on the spot, meaning the wheels are centered between the front and back of the robot chassis.

If you look at a TurtleBot or a robot vacuum, you’ll see what I mean. The wheel position and lidar centering are really important.

Oh dear, no! The wheels are at the front of the robot, and the lidar is 7 cm behind, not at the center of the chassis. So, everything is wrong in my design. I got a TurtleBot chassis, so I’m going to use it.

Ah yes, that'll do it. There's a bunch of math involved in why the lidar must stay centered on the robot's axis of rotation. That is why you commonly see that configuration on robot platforms with lidars, specifically robot vacuums. Robot vacuums are probably the leading use case for lidar on robots because they're the most common mass-consumer product that uses a lidar. @Athena would better explain why having the lidar located in the center and the wheels centered between the front and back is essential for SLAM.
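One piece of that math can be made concrete with plain geometry. Assume the robot pivots about the midpoint between its drive wheels when it turns on the spot, and (as an assumption) that the 7 cm offset described in this thread is measured from that pivot. A centered lidar then only rotates, while an offset lidar also travels along a circle of radius r, ending up a chord of length 2·r·sin(θ/2) from where it started. With a fake pose hint, the scan matcher has to recover that extra translation from the scans alone. The sketch below only computes this geometry; it does not model Hector SLAM.

```python
import math


def lidar_displacement_cm(offset_cm: float, rotation_deg: float) -> float:
    """Straight-line distance the lidar moves when the robot rotates in place.

    The lidar sits `offset_cm` from the pivot, so it moves along a circle and
    ends up a chord of length 2 * r * sin(theta / 2) away from its start.
    """
    theta = math.radians(rotation_deg)
    return 2.0 * offset_cm * math.sin(theta / 2.0)


def lidar_arc_cm(offset_cm: float, rotation_deg: float) -> float:
    """Path length of the lidar along its circle: r * theta."""
    return offset_cm * math.radians(rotation_deg)


for offset in (0.0, 7.0):  # 0 cm = centered lidar; 7 cm = hypothetical offset from the pivot
    for angle in (10.0, 90.0, 180.0):
        print(f"offset {offset:.0f} cm, turn {angle:.0f} deg -> "
              f"moved {lidar_displacement_cm(offset, angle):.1f} cm "
              f"(arc {lidar_arc_cm(offset, angle):.1f} cm)")
```

Under these assumptions, a 90 degree turn on the spot moves a 7 cm offset lidar about 9.9 cm, and a 180 degree turn moves it 14 cm, even though the robot itself has not travelled anywhere; a centered lidar moves 0 cm in every case.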