Our Microsoft team recently picked up a few of the EZ Robot Developer kits as part of an innovation project exercise led by one of our MACH Program (Microsoft Academy College Hires) new hires, and has been busy bringing ideas to life, innovating, and working with the local robotics community to strengthen ideas within our own products. Shortly after getting started, the team had an idea to see what might be possible by combining some of our recent AI services with ARC. We had a chance to talk through some ideas with DJ, and before we knew it EZ Robot had rolled out plug-ins integrating Microsoft Cognitive Services.

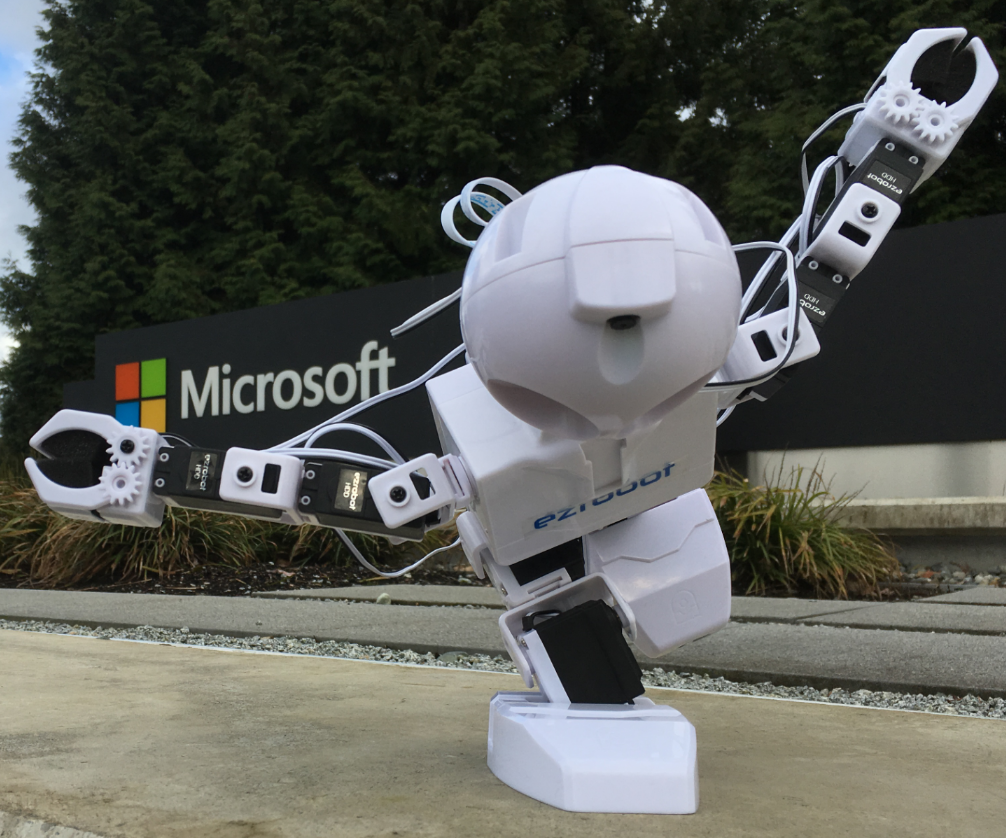

I first saw DJ's thread featuring some of the new Cognitive Services plug-ins on Feb 24, and was extremely impressed. EZ Robot has taken some of Microsoft's most sophisticated and powerful technology - and found a use that truly democratizes AI for everyone - making JD one of the world's most intelligent robots. After seeing the video, we had to have one for Main Campus.

I wanted to post a shout out to DJ and the team for some truly inspirational work - your product is amazing, extremely easy to use, and is helping us to really excite and energize new hires with the potential of what platforms such as ARC can do with Microsoft AI technologies (Chatbot, Cognitive Services, Cortana Intelligence Suite). Awesome stuff.

At this point we've picked up a number of sets and have an internal project running with our new hires to see just how creative folks can be in both physical form factor and the scenarios these technologies can make possible. Among our team is a 10-year-old 'junior' colleague, helping us to program some of the conversational aspects and to demonstrate just how truly powerful this combination is - accessible to young and old and requiring only imagination (and a battery) to power it.

The JD bot himself has been busy in presentations, as one of the world's smartest and most capable robots, helping us to share the excitement internally around just how powerful the new services can be - in a mobile form factor that can do handstands - and possibly manage a call center.

We can't post any confidential material, but as an extremely satisfied and excited customer of EZ Robot - I'm looking forward to sharing what I can about JD's ongoing adventures on campus - and to highlighting some of the exciting things he's up to. We'll also share what we can about the ongoing project in a separate post, and we certainly welcome ideas for the project's development.

DJ & team - truly awesome innovation. Great to see so much creativity from a Microsoft Partner leveraging our development stack!

Other robots from Synthiam community

Kashyyyk's R2-Q5 Droid Build With Ez-B4

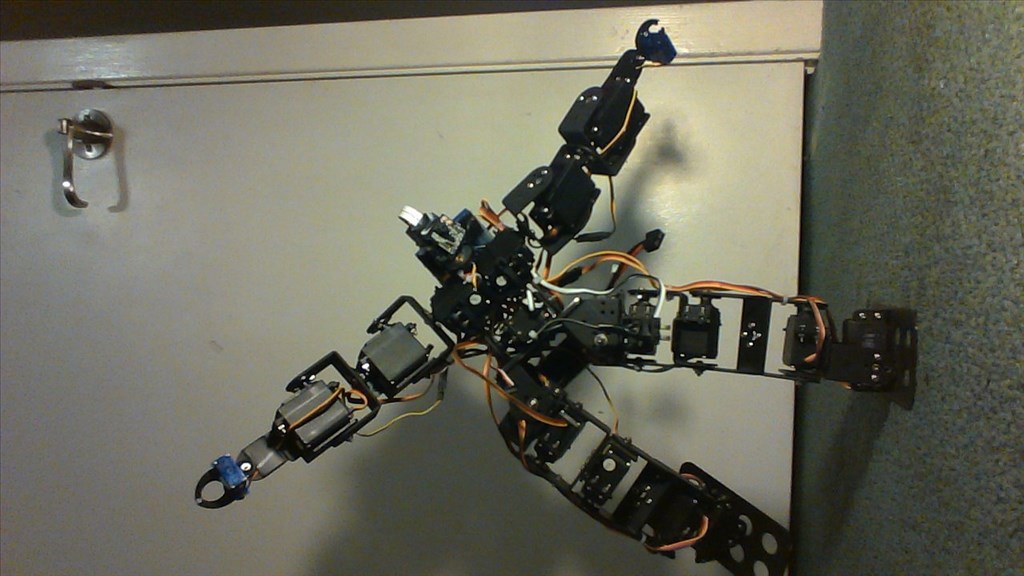

Cliffordkoperski's F.K.Q. Robot

You will never be able to get 3D coordinates from a single camera - depth perception requires 2 cameras. For example, put a patch on your eye and try to operate throughout the day... you'll never be able to pick anything up.

You "can" pretend to know the 3D depth of an object if you already know the size of the object, however. You do this by comparing the width/height of the detected object against a reference size that was measured at a known distance.
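As a rough illustration of that idea, here is a minimal Python sketch. It assumes a simple pinhole-style camera, where the apparent width of an object in pixels is inversely proportional to its distance. The function name and parameters are made up for this example and are not part of ARC.

```python
def estimate_distance(observed_width_px, reference_width_px, reference_distance_cm):
    """Estimate distance to an object of known size.

    Assumes apparent width (in pixels) is inversely proportional to
    distance, so one reference measurement at a known distance is enough.
    All names here are hypothetical, not an ARC API.
    """
    if observed_width_px <= 0:
        raise ValueError("observed width must be positive")
    return reference_distance_cm * reference_width_px / observed_width_px


# The object measured 100 px wide at 30 cm; it now appears 50 px wide,
# so it is estimated to be twice as far away.
print(estimate_distance(50, 100, 30))
```

This only gives depth along the camera's line of sight for an object whose real size is fixed, which is why it is "pretending" rather than true depth perception.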

I would start by checking out the robot program. We have a good episode on a similar technique here: https://synthiam.com/Community/Tutorials/111

Thanks DJ!

Put a glyph on your sodas so the robot can get you a drink! Multi-million-dollar robots use glyphs too.

Technically, using a glyph is a proven methodology for identifying objects. Still, I want to explore new and uncharted territories.

@Mcsdaver

Yes, the robot will navigate (crudely) towards the glyph. However, without the ability to know where it is (the arm and the robot itself) in 3-dimensional space, the robot may find the can but will have issues reaching out to grab it... This is why your robot will need a 3D camera or 2 cameras. It needs to know where the can is in x, y and z coordinates... Not to mention you need to compute servo positions (4 or more, depending on how many servos the arm has) to send to the arm servos in order to position it correctly. The end of the arm (gripper) needs to know where the object is in order to grab it... x, y and z... I think it is doable. Do you remember the Roomba beer-fetching ezrobot?
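To give a feel for the "compute servo positions" step, here is a minimal inverse kinematics sketch in Python. It is a deliberately simplified case: a 2-link arm moving in a flat plane (x and y only), not the 4+ servo arm described above. The function names and link lengths are assumptions for illustration only.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) joint angles in radians for a planar
    2-link arm with link lengths l1 and l2 whose tip should reach (x, y).
    Hypothetical helper; a real arm adds more joints and a z axis."""
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward(shoulder, elbow, l1, l2):
    """Forward kinematics, used here only to check the IK result."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


shoulder, elbow = two_link_ik(10, 5, 10, 8)
print(math.degrees(shoulder), math.degrees(elbow))
```

The angles would still need to be mapped to each servo's own range and zero position, which depends on how the arm is built.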

If the robot moved into a position that was a specific distance from the glyph, a pre-programmed AutoPosition action can move the arm and pick up the item - assuming it’s always in the same place.

Using the robot program tutorial I posted earlier, you can have the robot move to an exact distance from the glyph based on the size of the glyph in the camera image.
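The approach logic could look something like the Python sketch below. It assumes the camera tracking reports the glyph's width in pixels (or nothing when the glyph is not visible). The function name, command strings, and tolerance value are placeholders for this example, not ARC commands.

```python
def approach_command(glyph_width_px, target_width_px, tolerance_px=5):
    """Pick a movement command from the glyph's apparent width.

    A glyph that looks smaller than the target width is too far away;
    one that looks larger is too close. Hypothetical names throughout.
    """
    if glyph_width_px is None:
        return "search"  # glyph not visible in the current frame
    error = target_width_px - glyph_width_px
    if abs(error) <= tolerance_px:
        return "stop"  # close enough to the calibrated distance
    return "forward" if error > 0 else "reverse"


# Glyph appears 60 px wide, but should be 100 px wide at pickup distance.
print(approach_command(60, 100))
```

The target width would be measured once by placing the robot at the desired pickup distance and reading the glyph size there.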

Once the robot is in position, the can is at a known location and is easy to pick up.

The only trouble would be ensuring the can is in the correct position every time. This might mean a can dispenser in the fridge.

True. Still, I want to be able to easily find any item. Of course, that is one of the many goals of AI.

On the topic of robot depth perception, I remember when you added multi-camera support to ARC over a decade ago. You had this one red humanoid robot with two wireless web cameras for eyes and there were plans for 3D games.