A little background. Besides makeup effects, I started teaching myself computer animation about 20 years ago. About 8 years ago, I bought a motion capture suit to add motion to my CGI characters. I pondered the possibility of taking that captured motion into the real world, and today that concept includes robots. As my Alan robot develops into a full-body project, I again wondered about moving him with motion capture.

I found a company in China that created a new motion capture suit that uses IMUs at the joints, along with some pretty nifty software. In the past you were restricted to a capture volume defined by cameras that tracked position using reflective balls on your joints. This new system can capture a person in any space doing almost anything, and the price is about a tenth of the old type of suit.

Anyway, I approached our very own member, PTP. We worked on another project early last year. When I say we worked together, I mean I had an idea and this brilliant man executed it. We are very lucky to have him here on this forum. He has contributed much and has the same passion for robots as we all do. So I approached him with the idea of the Perception Neuron motion capture suit controlling servos, and he was interested. Instead of buying the suit at $1400, I bought the $100 glove that uses the same technology and software and sent that to him.

In the video you can watch PTP set up and use the glove and software to control servos. I've edited the video from 14 min down to 4 min, so I'll add here that part of what I cut was his warning that there is a lot of black magic involved in getting this to work: the software and hardware return odd, unpredictable behaviors to the plugin. But it's amazing what he has been able to produce so far in this demo. I'm sure he will add more to this thread.

Other robots from Synthiam community

Steve's Mini 6 Fabricated Robot

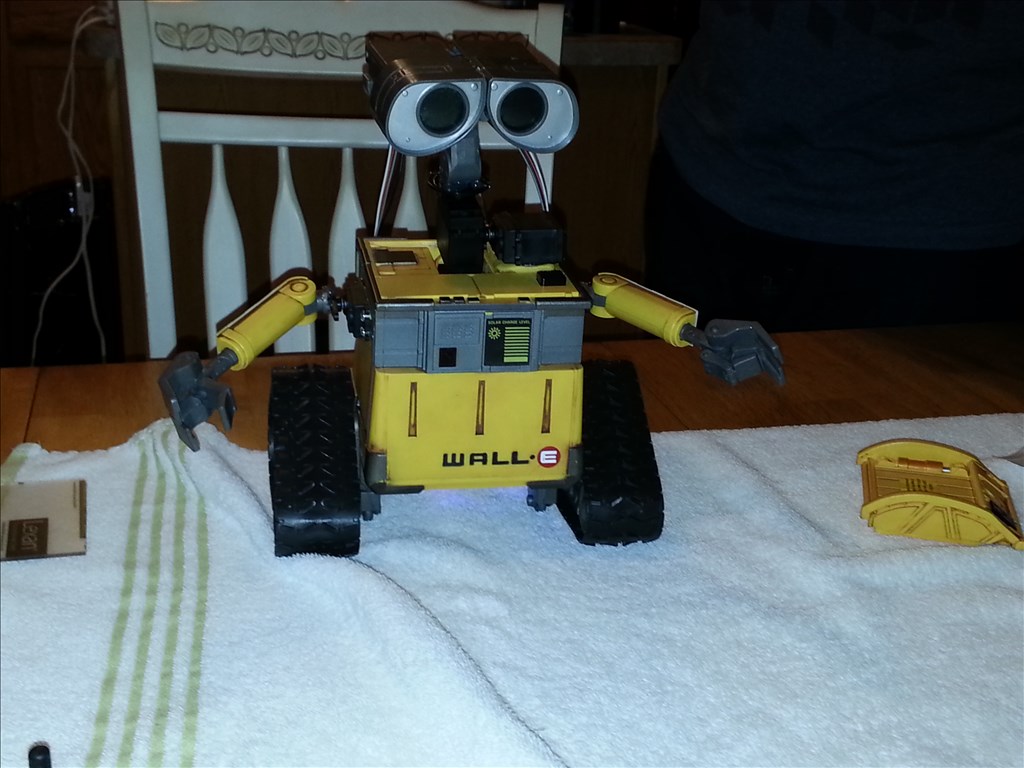

Slim6072's My Wall-E

ptp never ceases to amaze me!

Will, DJ: Thanks for the kind words.

Will, thanks for editing the video. I've been on antihistamine medication for a few days; during the video I felt slow and sleepy... the original video had a lot of repetitions and "ahh" moments.

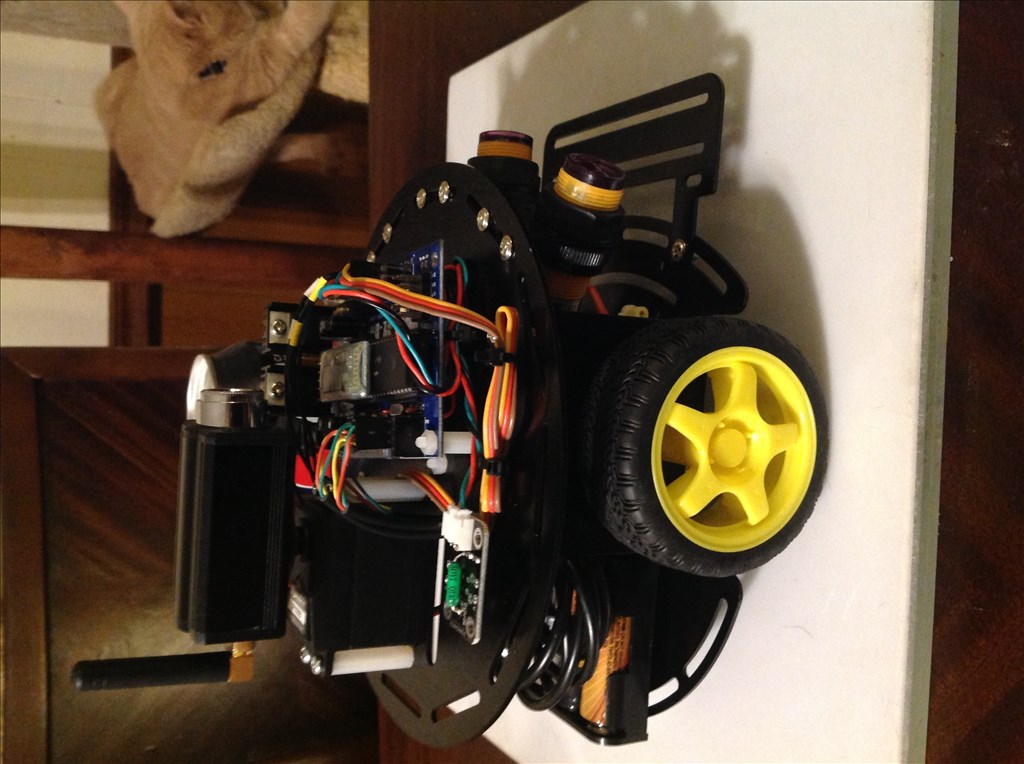

The tracking concept is based on IMU sensors similar to:

https://synthiam.com/Shop/AccessoriesDetails.aspx?prevCat=104&productNumber=1359

The Neuron mocap suit can track up to 58 joints.

It's possible to reproduce the idea using cheap, readily available IMUs, although I don't think you need one IMU per joint.

Similar to the Kinect and other 3D sensors, some positions and rotations are inferred from other joints and body physics.
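As a rough illustration of why you don't need a sensor everywhere: once you know each bone's rotation and length, the joint positions follow from forward kinematics. The sketch below is a minimal 2D version under stated assumptions (a planar chain, hypothetical bone lengths and angles); a real suit works in 3D and uses its own proprietary solver.

```python
import math


def planar_chain_positions(bone_lengths, joint_angles_deg):
    """Forward kinematics for a planar (2D) chain of bones.

    bone_lengths: length of each bone, root first (e.g. upper arm, forearm).
    joint_angles_deg: rotation of each joint relative to the previous bone, in degrees.
    Returns the (x, y) position of the root followed by the end of every bone.
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles_deg):
        heading += math.radians(angle)  # relative joint angles accumulate down the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


# Hypothetical arm: 30 cm upper arm, 25 cm forearm, shoulder at 90 deg, elbow at -90 deg.
shoulder, elbow, wrist = planar_chain_positions([30.0, 25.0], [90.0, -90.0])
```

Here the wrist position (about 25, 30) is never measured; it is inferred from two joint angles and two bone lengths.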

A quick video on IMU tracking and why it is hard:

https://www.youtube.com/watch?v=o-tGwkzKRcg

To summarize, beyond the hardware, the software makes the difference: using specific, proprietary algorithms (this is what I call the black magic), you can obtain really good results.

All IMUs share similar problems: noise and gyro drift. Some IMUs use a magnetometer to help with the drift, but the magnetometer is sensitive to magnetic interference... so you add another variable, and you have another problem to solve.
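One textbook way to trade off noise against drift is a complementary filter: integrate the gyro for smooth short-term motion, and let the accelerometer's gravity reading slowly pull the estimate back. This is a generic technique, not the Neuron algorithm (which is proprietary); the axis convention in `accel_pitch_deg`, the 0.98 blend factor, and the gyro bias are assumptions for illustration. A magnetometer would be blended in the same way to correct yaw, which gravity cannot observe; that part is not shown.

```python
import math


def accel_pitch_deg(ax, ay, az):
    """Pitch from the accelerometer's gravity vector: noisy, but it does not drift."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(angle_deg, gyro_rate_dps, accel_angle_deg, dt, alpha=0.98):
    """One filter step: trust the integrated gyro short-term, the accelerometer long-term."""
    return alpha * (angle_deg + gyro_rate_dps * dt) + (1.0 - alpha) * accel_angle_deg


def simulate_stationary(steps=1000, dt=0.01, gyro_bias_dps=0.5):
    """A sensor lying flat and still: true pitch is 0, but the gyro has a constant bias."""
    gyro_only = 0.0
    fused = 0.0
    for _ in range(steps):
        gyro_only += gyro_bias_dps * dt  # pure integration: the bias accumulates forever
        fused = complementary_filter(fused, gyro_bias_dps, accel_pitch_deg(0.0, 0.0, 1.0), dt)
    return gyro_only, fused


gyro_only, fused = simulate_stationary()
```

With these hypothetical numbers, the gyro-only estimate drifts to about 5 degrees after 10 seconds, while the fused estimate settles at roughly a quarter of a degree and stays there.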

Not until I watched the video for the first time did I understand how the motion is applied to the bones inside the Neuron software. What I had done in the past was take a BVH file, apply it to the skeleton inside my 3D animation software (LightWave and Maya), and map the rotational values of the joints inside those programs to a servo using an Arduino. All the black magic had already been solved inside the 3D animation program. Neuron, and the way IMUs work, seems to calculate the angle between two bones rather than measuring an actual joint rotation.
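To make the "angle between two bones" idea concrete, here is a minimal sketch assuming you already have each bone's direction as a 3D vector. It computes the unsigned angle between them and maps it linearly onto a servo range. The 0 to 180 output range and the helper names are hypothetical; adjust them to your controller. Note that a single angle like this cannot tell which way the joint bent or how much it twisted, which is one reason it differs from a full joint rotation.

```python
import math


def angle_between_deg(u, v):
    """Unsigned angle, in degrees, between two 3D bone direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))  # guard acos against rounding
    return math.degrees(math.acos(cos_theta))


def to_servo_position(angle_deg, in_min=0.0, in_max=180.0, out_min=0, out_max=180):
    """Clamp a joint angle to [in_min, in_max] and map it linearly onto a servo range."""
    angle_deg = max(in_min, min(in_max, angle_deg))
    scaled = out_min + (angle_deg - in_min) * (out_max - out_min) / (in_max - in_min)
    return int(round(scaled))


# Hypothetical elbow: upper arm points down, forearm points forward.
upper_arm = (0.0, -1.0, 0.0)
forearm = (1.0, 0.0, 0.0)
bend = angle_between_deg(upper_arm, forearm)
position = to_servo_position(bend)
```

The same mapping works for pulse-width style outputs, e.g. `to_servo_position(angle, 0, 90, 1000, 2000)` for a joint that only uses 90 degrees of travel.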

I had thrown around the idea of building a telemetry suit, with a potentiometer at each joint, but could not figure out how to make it wireless. Just another idea if this one becomes erratic.

Hey guys, good to see you are working on a solution getting this done!

As you might remember, I was working on a similar issue with @WBS00001a a while ago, but the project kind of ground to a halt. The EZ-B, being controlled by a task-based OS, was not really suited to handling the incoming data stream... we tried live control and reading the data out of a file, and both caused erratic movement. So how do you guys handle this? The servo movement seems to be super smooth!
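This is not necessarily how PTP's plugin does it, but one common, generic way to tame a jittery or bursty stream of target angles is to filter them before they reach the servo: an exponential moving average removes jitter, and a per-update step limit stops the servo from jumping when samples arrive late or in bursts. The class name and the `alpha` and `max_step_deg` values below are hypothetical and would need tuning; sending the result to the actual controller is not shown.

```python
class ServoSmoother:
    """Smooth a stream of target angles (degrees) before sending them to a servo."""

    def __init__(self, initial_deg, alpha=0.3, max_step_deg=5.0):
        self.value = float(initial_deg)  # last position actually commanded
        self.alpha = alpha  # 0..1: higher follows the input faster but passes more jitter
        self.max_step = max_step_deg  # largest change allowed per update

    def update(self, target_deg):
        # Exponential moving average toward the new target.
        smoothed = self.value + self.alpha * (target_deg - self.value)
        # Rate limit: clamp the change so bursts of data cannot cause sudden jumps.
        step = max(-self.max_step, min(self.max_step, smoothed - self.value))
        self.value += step
        return self.value
```

For example, a servo resting at 90 that suddenly receives 180 moves to 95 on the first update and then ramps in steps of at most 5 degrees, easing in as it nears the target.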

I am working on a solution to build helper objects inside 3ds Max that are easy to place and link, to extract the rotational values for each corresponding servo. But if I got it correctly that your plugin supports .bvh, this would be the solution to my problem... all the joints can be set up to extract the rotation correctly! This would kill it, @ptp
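For anyone going the BVH route: a BVH file has a HIERARCHY section, where each ROOT/JOINT declares its CHANNELS, followed by a MOTION section with one line of numbers per frame, in the same order as the channels were declared. The sketch below is a minimal reader under that assumption, enough to pull one rotation channel per servo; it is not a complete BVH parser, and the joint names in the sample are hypothetical, not real capture data.

```python
def parse_bvh(text):
    """Minimal BVH reader: returns (channels, frames).

    channels: ordered list of (joint_name, channel_name) pairs, matching the order
    of the numbers on every MOTION frame line.
    frames: list of frames, each a list of floats.
    """
    channels = []
    frames = []
    current_joint = None
    in_motion = False
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if in_motion:
            if tokens[0] in ("Frames:", "Frame"):  # "Frames: N" and "Frame Time: t" headers
                continue
            frames.append([float(t) for t in tokens])
        elif tokens[0] in ("ROOT", "JOINT"):
            current_joint = tokens[1]
        elif tokens[0] == "CHANNELS":
            count = int(tokens[1])
            channels.extend((current_joint, name) for name in tokens[2:2 + count])
        elif tokens[0] == "MOTION":
            in_motion = True
    return channels, frames


def channel_value(channels, frame, joint, channel):
    """Value of one channel, e.g. ('RightForeArm', 'Zrotation'), in one frame."""
    return frame[channels.index((joint, channel))]


# Tiny hand-written example (not real capture data; joint names are hypothetical).
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0 0 0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT RightForeArm
  {
    OFFSET 0 -20 0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0 -25 0
    }
  }
}
MOTION
Frames: 2
Frame Time: 0.033333
0 90 0 0 0 0 45 10 0
0 90 0 0 0 0 50 12 0
"""

channels, frames = parse_bvh(SAMPLE_BVH)
elbow_z = [channel_value(channels, f, "RightForeArm", "Zrotation") for f in frames]
```

Each value in `elbow_z` (45, then 50 here) is a rotation in degrees that could be scaled onto one servo; a 3-DOF joint simply yields three such channels.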

For the full suit it would be 58 joints with 3 DOF each, which is a lot of servos to keep track of... not counting hands and facial expressions!

Good to see you guys are on it; I hope I can also show some progress soon... but radians, Euler angles, and rotation matrix stuff drives me crazy!
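A small sketch that touches all three: BVH-style files store Euler angles in degrees, Python's trig functions expect radians, and the order in which the per-axis rotations are multiplied changes the result. It assumes the common BVH convention where the order of the rotation channels (often Zrotation Xrotation Yrotation) gives the multiplication order left to right, with matrices acting on column vectors; check that against your own exporter before relying on it. The helper names are hypothetical.

```python
import math


def rot_x(deg):
    """Rotation matrix about X; converts degrees to radians first."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(deg):
    """Rotation matrix about Y."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(deg):
    """Rotation matrix about Z."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def euler_to_matrix(order, angles_deg):
    """Compose per-axis rotations left to right in the given order, e.g. order='ZXY'."""
    builders = {"X": rot_x, "Y": rot_y, "Z": rot_z}
    m = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for axis, angle in zip(order, angles_deg):
        m = matmul(m, builders[axis](angle))
    return m


def apply(m, v):
    """Rotate a 3D column vector v by matrix m."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))
```

For instance, `euler_to_matrix("XY", [90, 90])` and `euler_to_matrix("YX", [90, 90])` give different matrices, which is why the channel order in the file matters as much as the numbers.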

I have great respect for those plugins you pulled out of your hat @ptp

And ALAN goes FULL BODY! You are awesomely insane, @fxrtst

@ptp are you Portuguese? Sounds like it. Cool.