ChatGPT Skill Response Delay Issue

Asked by PEEK.CHRIS (USA)

I have a question regarding the OpenAI ChatGPT robot skill in Synthiam ARC.

When I use Bing Speech Recognition in conjunction with the ChatGPT skill, activated by a wake word, the responses seem to be consistently one question behind.

Why is there a delay or silence when I ask the first question?

Additionally, why does it respond to the first question only after I have asked a second question?

How can I resolve this issue so that the system answers the correct question in real-time?

Related Hardware

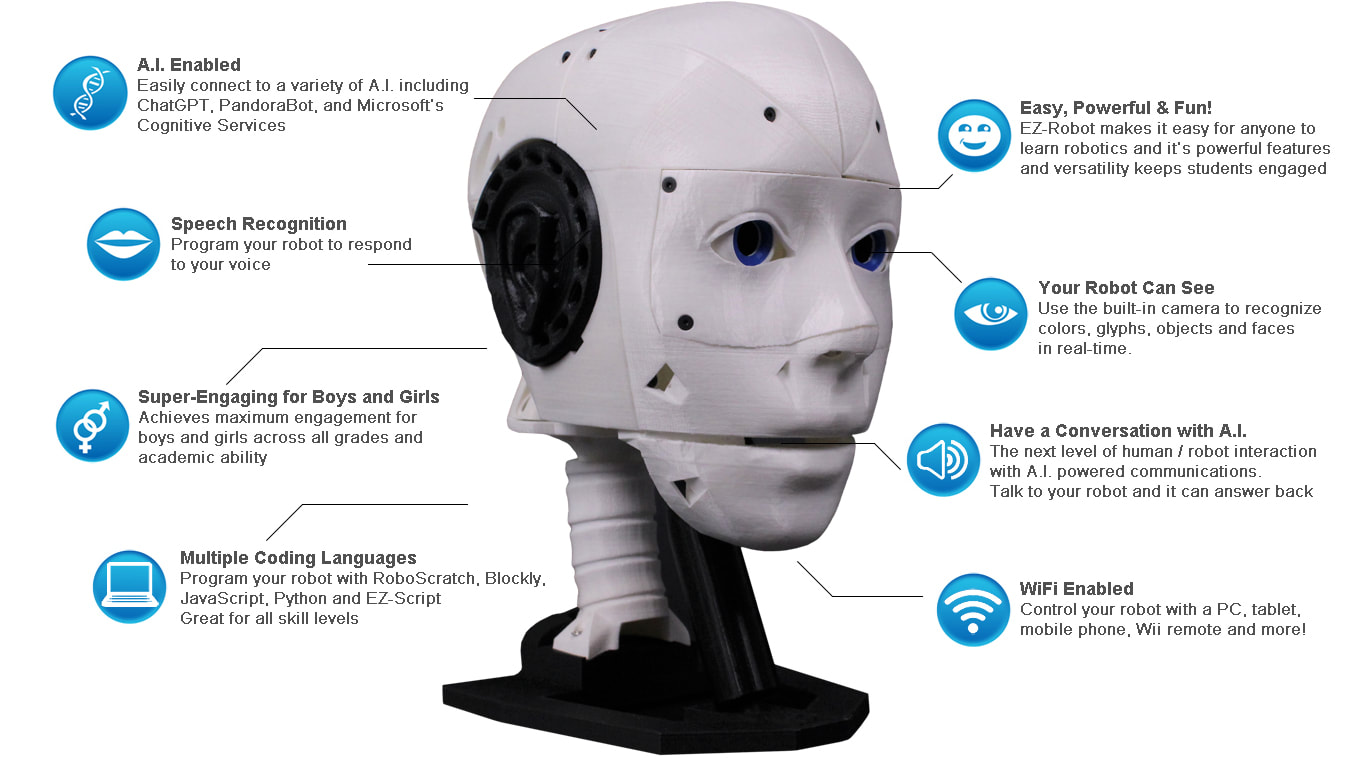

EZ-InMoov Robot Head by EZ-Robot & Gael Langevin

EZ-InMoov robot head: DIY educational humanoid kit powered by Synthiam ARC for vision tracking, speech, chatbot integration and mobile control.

Wi-Fi | Servos: 8 | Camera | Audio | UART: ✕ | I2C | ADC: 2 | Digital: 8

Related Robot Skills

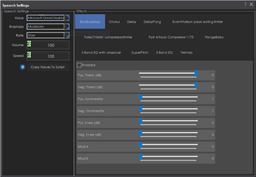

Speech Synthesis Settings by Synthiam

Configure Windows Audio.say()/Audio.sayEZB() TTS on EZB#0: voice, emphasis, rate, volume, speed/stretch and audio effects; copy control script.

OpenAI ChatGPT by OpenAI

ChatGPT conversational AI for ARC robots: configurable personality, memory, image description, script execution, speech and API integration.

Requires ARC v35

Some things aren't adding up with these details lol, I'm confused. The code you posted above is...

Your project has the following robot skills.

At some point, you added two OpenAI ChatGPT skills, removed one, and kept the one with the "2" on the end. But the ControlCommand in the code you posted references 'OpenAI ChatGPT', not 'OpenAI ChatGPT 2'. I'm not sure how what you have even works at all. Haha.

@Synthiam_support, we need an ARC update that displays the version of each robot skill plugin in the debug output, so it's clearer what users have going on. Right now we don't know which version of a robot skill someone is running or whether it's the newest. I'm guessing it isn't, but I also can't understand how this project works at all.

@Chris, with what you have posted, I don't see how it can work at all, let alone partially. Can you share your project? That's the easiest way, and then I can tell you how to fix it. My first suggestion is to remove 'OpenAI ChatGPT 2' and add a new ChatGPT skill so it has the correct name, 'OpenAI ChatGPT', which is the name your ControlCommand scripts use.

But again, I just can't understand how it works at all when the robot skill named in the ControlCommand is something entirely different. Haha, is it April 1st?
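Why the name mismatch matters can be shown with a toy model. This is a minimal Python sketch, assuming that a ControlCommand-style call finds its target by the skill's exact title; the `Project` and `Skill` classes, `control_command`, and the "Send" command string are hypothetical stand-ins for illustration, not ARC's actual API or implementation.

```python
# Toy model of a ControlCommand-style call (hypothetical, not ARC's API).
# Assumption: the target is looked up by the skill's exact title, so
# "OpenAI ChatGPT" and "OpenAI ChatGPT 2" are two different names.

class Skill:
    def __init__(self, title):
        self.title = title
        self.received = []  # commands this skill has been sent

    def handle(self, command, *args):
        # Record what was sent so the example can show who received it.
        self.received.append((command, args))


class Project:
    def __init__(self):
        self.skills = {}  # title -> Skill

    def add_skill(self, title):
        skill = Skill(title)
        self.skills[title] = skill
        return skill

    def control_command(self, title, command, *args):
        # Exact, case-sensitive match on the title; no fuzzy matching.
        skill = self.skills.get(title)
        if skill is None:
            return False  # in this model the command simply goes nowhere
        skill.handle(command, *args)
        return True


project = Project()
chat = project.add_skill("OpenAI ChatGPT 2")  # the only copy left after add/remove

# The script still targets the original title, so nothing receives the text.
print(project.control_command("OpenAI ChatGPT", "Send", "Hello robot"))    # False
print(project.control_command("OpenAI ChatGPT 2", "Send", "Hello robot"))  # True
print(chat.received)  # [('Send', ('Hello robot',))]
```

Renaming the skill (or re-adding it so it gets the original title) is what makes the first call succeed in this model; the script itself doesn't need to change.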

@Chris, can you post the project file either by saving it to the cloud and making it public or uploading it here using the FILE option in the editor?

If you do not want your project shared, you can create a new project that demonstrates the same behavior and share it using either method.

That is a pretty fair assessment. I really appreciate the help and responsiveness. I definitely got excited and dove in headfirst, and I've added and removed a ton of skills over and over again. To my detriment, I tend to get my hands dirty and learn by trial and error instead of reading the documentation and going through the proper tutorials, so it's no surprise that everything is a bit of a mess.

I think what I will do is scrap this version of the project, build a new one from scratch with the lessons learned, and see if that resolves things.

Completely unrelated question: I am using an EZ-B IoTiny in client mode. When I use the EZ-B connection skill to scan the network, it never finds the IoTiny, so I look up its IP address on my router and enter it manually. The scan goes right through the address range but doesn't see it. Shouldn't it be broadcasting its presence? I can't find any option on its setup page that explicitly says so, so I'm not sure whether the problem is the EZ-B not broadcasting or the skill not picking it up.

I saved the project to the Synthiam cloud as "AI_TESTBED_Chris".

I don't see the project AI_TESTBED_Chris. Is it saved as public? Due to privacy restrictions, we can't see apps that aren't marked as public. They're not accessible by anyone unless they're public.

I think it is public now. It just has one tag: "incomplete".

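Oh, this is easy. Your script is in the "Start Listening" script for Bing Speech Recognition, which is the wrong place.

That script runs when listening begins, before your new question has been recognized, so it sends whatever was recognized the previous time. That's why the first question gets silence and every answer is one question behind. Move the script to the one that runs after a phrase has been recognized.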
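The one-question lag is an ordering problem, and a toy simulation makes it visible. This is a sketch, not ARC code: `Recognizer`, `listen`, and the two hook names are hypothetical, and the model assumes the recognizer updates its recognized-text variable only after the start-listening hook has already run.

```python
# Toy timeline of a speech recognizer with two script hooks.
# Hypothetical names, not ARC's API. Assumption: the recognized-text
# variable is updated AFTER the start-listening hook runs.

class Recognizer:
    def __init__(self, hook_on_start=None, hook_on_recognized=None):
        self.last_phrase = ""  # stands in for the shared "recognized text" variable
        self.hook_on_start = hook_on_start
        self.hook_on_recognized = hook_on_recognized

    def listen(self, spoken):
        # 1. Listening starts: the variable still holds the PREVIOUS phrase.
        if self.hook_on_start:
            self.hook_on_start(self.last_phrase)
        # 2. Speech is recognized and the variable is updated.
        self.last_phrase = spoken
        # 3. Recognition finished: the variable now holds the CURRENT phrase.
        if self.hook_on_recognized:
            self.hook_on_recognized(self.last_phrase)


def run(place_script_in_start):
    sent = []  # what would have been forwarded to the chatbot

    def send_to_chatbot(text):
        sent.append(text)

    if place_script_in_start:
        rec = Recognizer(hook_on_start=send_to_chatbot)
    else:
        rec = Recognizer(hook_on_recognized=send_to_chatbot)
    for question in ["What time is it?", "Tell me a joke"]:
        rec.listen(question)
    return sent


print(run(place_script_in_start=True))   # ['', 'What time is it?']
print(run(place_script_in_start=False))  # ['What time is it?', 'Tell me a joke']
```

With the send in the start hook, the first question forwards an empty string (the silence) and the second forwards the first question, which matches the symptom described in the original question. Moving the send to the after-recognition hook is the whole fix in this model.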

I'll add a few tips for ya as well.

You have the ChatGPT robot skill configured to use Azure Text To Speech, which is great. But you also have the Speech Synthesis Settings robot skill added. Just know that those speech settings only affect the internal "robotic" Windows voice that you get with the Audio.say() and Audio.sayEZB() commands; they don't change the Azure voice. https://synthiam.com/Support/Skills/Audio/Speech-Synthesis-Settings?id=16054

In the Auto Position skill, a script can run for each action, and the action's button is red when a script has been added to it. Your scripts currently use Audio.say() commands, so the voice used by Auto Position and the voice used by ChatGPT will be different. If you want them to sound the same, edit those scripts and replace the Audio.say() calls with the ControlCommand for Azure Text To Speech.