PEEK.CHRIS

USA

Asked

ChatGPT Skill Response Delay Issue

I have a question regarding the OpenAI ChatGPT robot skill in Synthiam ARC.

When I use Bing Speech Recognition in conjunction with the ChatGPT skill, activated by a wake word, the responses seem to be consistently one question behind.

Why is there a delay or silence when I ask the first question?

Additionally, why does it respond to the first question only after I have asked a second question?

How can I resolve this issue so that the system answers the correct question in real-time?

Related Hardware (view all EZB hardware)

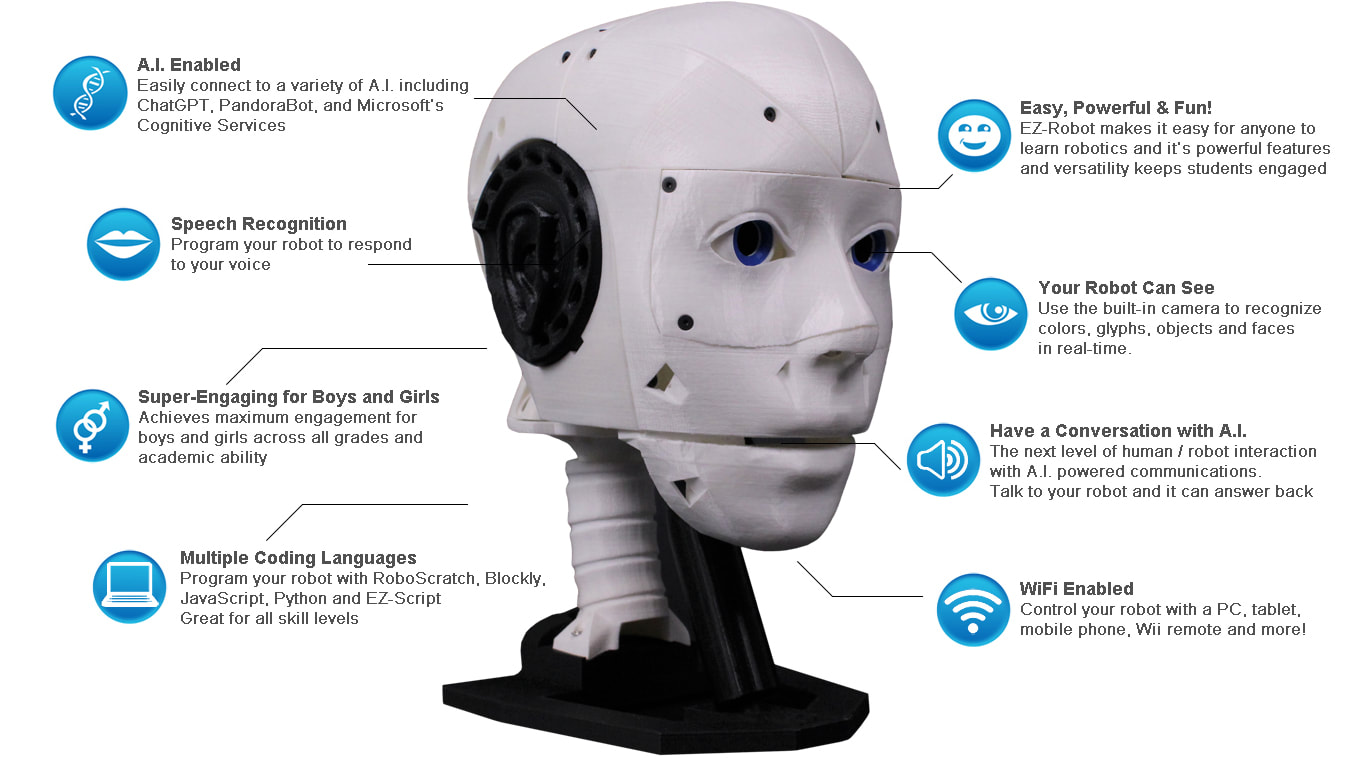

EZ-Inmoov Robot Head

by EZ-Robot & Gael Langevin

EZ-InMoov robot head-DIY educational humanoid kit powered by Synthiam ARC for vision tracking, speech, chatbot integration and mobile control

Wi-Fi

Servos

8

Camera

Audio

UART

✕

I2C

ADC

2

Digital

8

Related Robot Skills (view all robot skills)

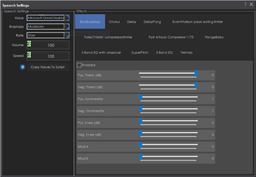

Speech Synthesis Settings

by Synthiam

Configure Windows Audio.say()/Audio.sayEZB() TTS on EZB#0: voice, emphasis, rate, volume, speed/stretch and audio effects; copy control script.

Openai Chatgpt

by OpenAI

ChatGPT conversational AI for ARC robots-configurable personality, memory, image description, script execution, speech and API integration.

Requires ARC

v35

If you could share the code you have in the robot skills, that would be useful. Otherwise, it's a guessing game. I can't think of why the GPT responses could be one behind. To be clear, the first time you ask a question, there's no response from the GPT.

Post the code that's in each of these (and please format the code that you paste into the response so it can be read, thanks)

Post the code from those two robot skills so it can be better understood what you have configured

To address the issue of the OpenAI ChatGPT robot skill responding one question behind, let's first ensure that the scripts in both the Bing Speech Recognition and OpenAI ChatGPT skills are correctly configured. Please follow these steps:

1. Bing Speech Recognition - All Recognized Script
2. OpenAI ChatGPT - Response Script
3. Check for Delays
4. Test the Configuration
5. Debugging

By ensuring that the scripts are correctly configured and there are no delays, the system should respond to the correct question in real-time. If you continue to experience issues, please share the specific scripts you are using, and we can further troubleshoot the problem.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

Hi, had the same problem.

Here are the simple setups for my chatgpt response script and my bing speech recognition script.

Still not sure what I need to add to fix this issue.

The issue is that you have the same code in both robot skills. You're speaking the response from OpenAI in both robot skills.

In the Bing Speech Recognition robot skill, you should be pushing the recognized text to the GPT robot skill.

In the GPT robot skill, you should be pushing the response text to the Azure Text to Speech robot skill.

Look at it this way...

You need to LISTEN before you can THINK before you can SPEAK. Make sense?

LISTEN = Bing Speech Recognition
THINK = GPT
SPEAK = Azure Text to Speech
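To see why speaking the response from the listen step ends up one question behind, here is a toy simulation in plain Python. It is not ARC script and uses no ARC commands: `think`, `variables`, `run_misconfigured`, and `run_chained` are made-up names for illustration, and the misconfigured case assumes the question still reaches the GPT stage while the listen handler speaks whatever response is already stored.

```python
def think(question: str) -> str:
    """Stand-in for the GPT stage: returns a canned reply (hypothetical)."""
    return f"answer to: {question}"


def run_misconfigured(questions):
    """Listen handler speaks the stored response before it is refreshed."""
    variables = {"response": ""}  # hypothetical shared variable store
    spoken = []
    for q in questions:
        # LISTEN handler (wrong): speaks whatever response is already stored,
        # which is empty the first time and stale every time after that.
        spoken.append(variables["response"])
        # THINK finishes afterwards and only now updates the stored response.
        variables["response"] = think(q)
    return spoken


def run_chained(questions):
    """Each stage only hands its output to the next stage."""
    spoken = []

    def on_response(text):    # THINK -> SPEAK
        spoken.append(text)

    def on_recognized(text):  # LISTEN -> THINK
        on_response(think(text))

    for q in questions:
        on_recognized(q)
    return spoken


questions = ["what is your name", "how old are you"]
print(run_misconfigured(questions))  # silence first, then the previous answer
print(run_chained(questions))        # each question gets its own answer
```

In the misconfigured run, the first question produces silence and the second question produces the answer to the first, which matches the symptom described at the top of the thread. In the chained run, each skill does one job and passes its output along, so the spoken answer always belongs to the question that was just asked.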

You can right-click in the editor or use the Cheat Sheet to see the available control commands. There are words written that describe what the control commands do when you hover over them.

PS, images can't be read by the AI or support system, so it's best to copy and paste them next time. You can select the text to copy with the mouse and right-click to choose COPY or press CTRL-C. That will copy the text to the clipboard. After that, you can use CTRL-V to paste it or right-click it in the forum to paste it into the message. There are buttons at the top of the editor for formatting code. It is like how Microsoft Word, Google Docs, Email clients, etc, work. Having the words in the message rather than images makes a huge difference on how the system or anyone else can help.

@Athena do you have anything more to add?

Certainly! Let's break down the steps to ensure your setup is correctly configured for seamless interaction between Bing Speech Recognition, OpenAI ChatGPT, and Azure Text-to-Speech:

Step-by-Step Configuration

1. Bing Speech Recognition
2. OpenAI ChatGPT
3. Azure Text-to-Speech

Debugging Tips

- Check for Delays
- Test the Configuration
- Debugging

Additional Considerations

- Ensure Correct Order
- Control Commands

By following these steps, you should be able to resolve the issue of delayed responses and ensure that your system answers the correct question in real-time. If you have any further questions or need additional assistance, feel free to ask!

Sorry I posted the same screenshot twice in my previous comment.