PEEK.CHRIS

USA

Asked

ChatGPT Skill Response Delay Issue

I have a question regarding the OpenAI ChatGPT robot skill in Synthiam ARC.

When I use Bing Speech Recognition in conjunction with the ChatGPT skill, activated by a wake word, the responses seem to be consistently one question behind.

Why is there a delay or silence when I ask the first question?

Additionally, why does it respond to the first question only after I have asked a second question?

How can I resolve this issue so that the system answers the correct question in real-time?

Related Hardware (view all EZB hardware)

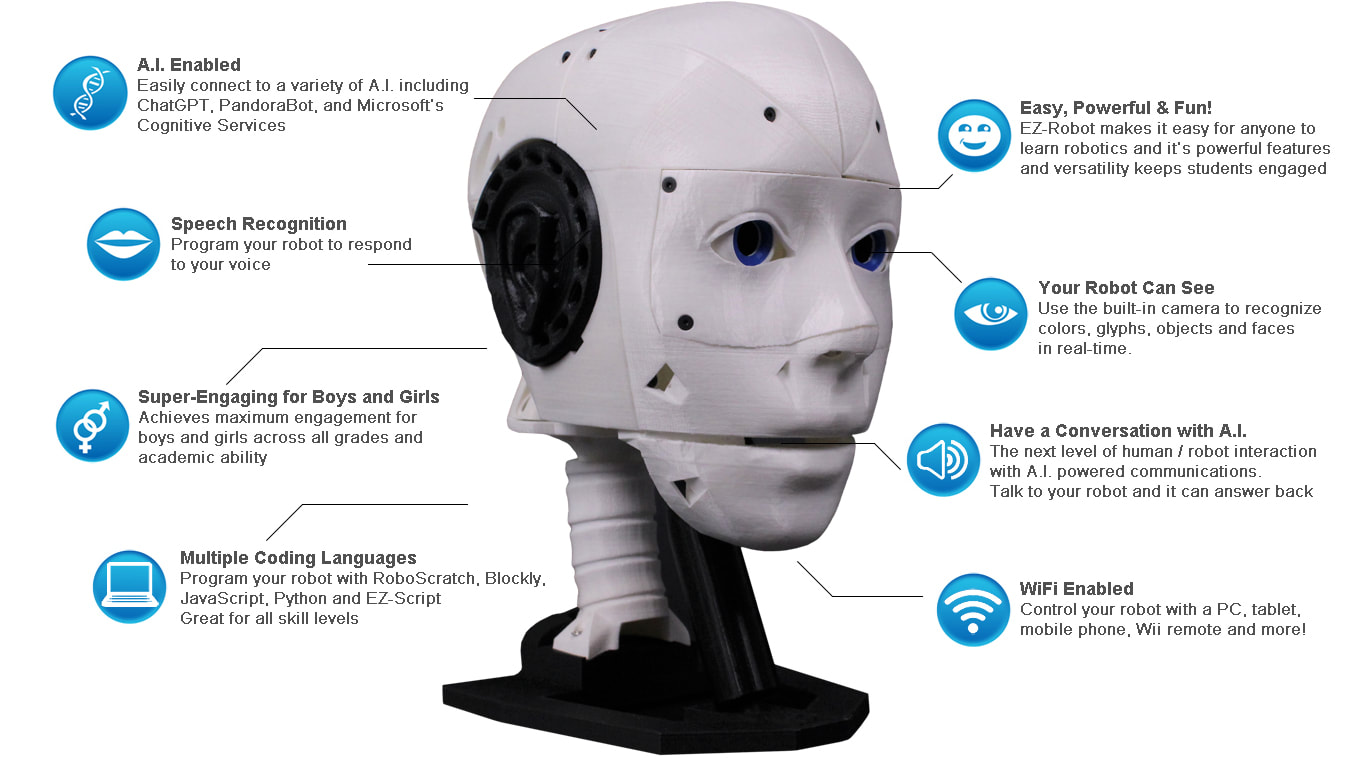

EZ-InMoov Robot Head

by EZ-Robot & Gael Langevin

EZ-InMoov robot head: DIY educational humanoid kit powered by Synthiam ARC for vision tracking, speech, chatbot integration and mobile control

Wi-Fi, Servos: 8, Camera, Audio, UART: ✕, I2C, ADC: 2, Digital: 8

Related Robot Skills

Speech Synthesis Settings

by Synthiam

Configure Windows Audio.say()/Audio.sayEZB() TTS on EZB#0: voice, emphasis, rate, volume, speed/stretch and audio effects; copy control script.

OpenAI ChatGPT

by OpenAI

ChatGPT conversational AI for ARC robots: configurable personality, memory, image description, script execution, speech and API integration.

Requires ARC v35

Below is an explanation addressing your questions:

Why the response delay?

The core issue is that the script triggering the ChatGPT call is placed in the wrong event.

The "Start Listening" event runs when Bing Speech Recognition begins to listen. Any script placed there will run at that very moment and doesn’t capture the actual recognized text.

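When that script reads the speech variable, the variable still holds the phrase from the previous recognition, or nothing at all on the first attempt. As a result, the ChatGPT robot skill receives the previous question rather than the current one. This explains both the silence after the first question and the one-question lag afterward.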
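The one-question lag can be reproduced outside ARC with a small simulation. The sketch below is plain JavaScript rather than ARC script: `simulateConversation`, `speechVar`, and the event labels are hypothetical stand-ins for the `$BingSpeech` variable and the two events, and it assumes the variable is updated only once recognition finishes.

```javascript
// Hypothetical simulation of the event order; none of these names are ARC APIs.
// Assumption: the speech variable is updated only after recognition completes.
function simulateConversation(questions, sendOnEvent) {
  let speechVar = "";        // stands in for $BingSpeech
  const sentToChatGpt = [];  // what the chatbot would receive, in order

  for (const question of questions) {
    // 1. "Start Listening" fires before anything has been recognized.
    if (sendOnEvent === "startListening") {
      sentToChatGpt.push(speechVar);
    }
    // 2. Recognition completes and the variable is updated.
    speechVar = question;
    // 3. "Any Recognized" fires with the fresh text available.
    if (sendOnEvent === "anyRecognized") {
      sentToChatGpt.push(speechVar);
    }
  }
  return sentToChatGpt;
}

const questions = ["What is your name?", "How old are you?"];
const lagging = simulateConversation(questions, "startListening");
const correct = simulateConversation(questions, "anyRecognized");

console.log(lagging); // empty text first, then the first question
console.log(correct); // each question sent in the turn it was asked
```

With the send in "Start Listening", the first turn forwards an empty string (the silence) and the second turn forwards the first question. With the send in "Any Recognized", each turn forwards the question that was just asked.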

How to fix it:

Move the Script:

Remove the ChatGPT call from the "Start Listening" event.

Place the ChatGPT ControlCommand inside the "Any Recognized" event.

This ensures that when Bing Speech Recognition converts speech to text (that is, once a phrase is recognized), it immediately sends the correct text to ChatGPT.
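As a minimal sketch of what the "Any Recognized" script could look like, the snippet below wraps the send in a small guard so that an empty recognition result is not forwarded. The skill title "OpenAI ChatGPT", the "Send" command, and the `$BingSpeech` variable are taken from this thread. The helper name `forwardRecognizedText` and the way the ARC functions are passed in are hypothetical, used only so the logic can also be exercised outside ARC. Check the skill title in your own project, since it may differ if the skill was renamed.

```javascript
// Sketch for the "Any Recognized" script. The helper name is hypothetical;
// "OpenAI ChatGPT", "Send", and $BingSpeech are taken from this thread.
function forwardRecognizedText(api) {
  // Read the phrase that Bing Speech Recognition just stored.
  const text = String(api.getVar("$BingSpeech") || "").trim();

  // Skip empty results so ChatGPT is not sent a blank prompt.
  if (text.length === 0) {
    return false;
  }

  api.ControlCommand("OpenAI ChatGPT", "Send", text);
  return true;
}

// Inside ARC, getVar and ControlCommand are assumed to be script globals.
// The typeof guard lets the same file load outside ARC without errors.
if (typeof getVar === "function" && typeof ControlCommand === "function") {
  forwardRecognizedText({ getVar: getVar, ControlCommand: ControlCommand });
}
```

The guard is optional. A single ControlCommand line in the "Any Recognized" script is enough to fix the lag; the wrapper only avoids sending blank text.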

Verify the Script Flow:

Ensure that the "Start Listening" event is only used to prepare the skill for listening (e.g., turning on indicators, playing a sound, etc.).

The "Any Recognized" event should be where you send the recognized text via a ControlCommand, for example:

ControlCommand("OpenAI ChatGPT", "Send", getVar("$BingSpeech"));

This way the ChatGPT skill receives the actual spoken text.

Additional Tips on Speech Synthesis:

You mentioned you are using the ChatGPT skill with Azure Text To Speech, which is great for a more natural voice.

If you have the Speech Synthesis Settings robot skill active, keep in mind that it works only with the default internal Windows voice when using the Audio.say() or Audio.sayEZB() commands.

In your project, if you have Auto Position scripts that also trigger Audio.say() commands, they’ll produce a different (and more robotic) voice than the ChatGPT Azure voice.

To have all speech come through with the Azure voice, update these scripts to use a ControlCommand that calls Azure Text To Speech (or move them into the ChatGPT flow that uses Azure TTS).

Reference:

Speech Synthesis Settings Documentation

Summary:

The response is delayed because the ChatGPT call is in the wrong event ("Start Listening") instead of "Any Recognized".

Move your ChatGPT ControlCommand to the "Any Recognized" script for real-time response.

Also, if you want a consistent voice throughout your project, ensure that all parts of your project are using the Azure Text To Speech commands rather than mixing with the internal Windows voice.

Does this help clarify the issue? Let me know if you need further assistance with reorganizing your script or any additional tips.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.