Doombot

Repost :

@Everyone

I am designing a charging dock for my bot, and a lot of what @Rich said I'm already doing in the background. I'm already in touch with a few great programmers willing to help, compensated of course. However, I think we need to approach this problem from a different angle. If sensors or coding are so much work, why not do it from the engineering/mechanical perspective? I have a few ideas I'm drawing up. I will post them on the forum so we can all dissect them.

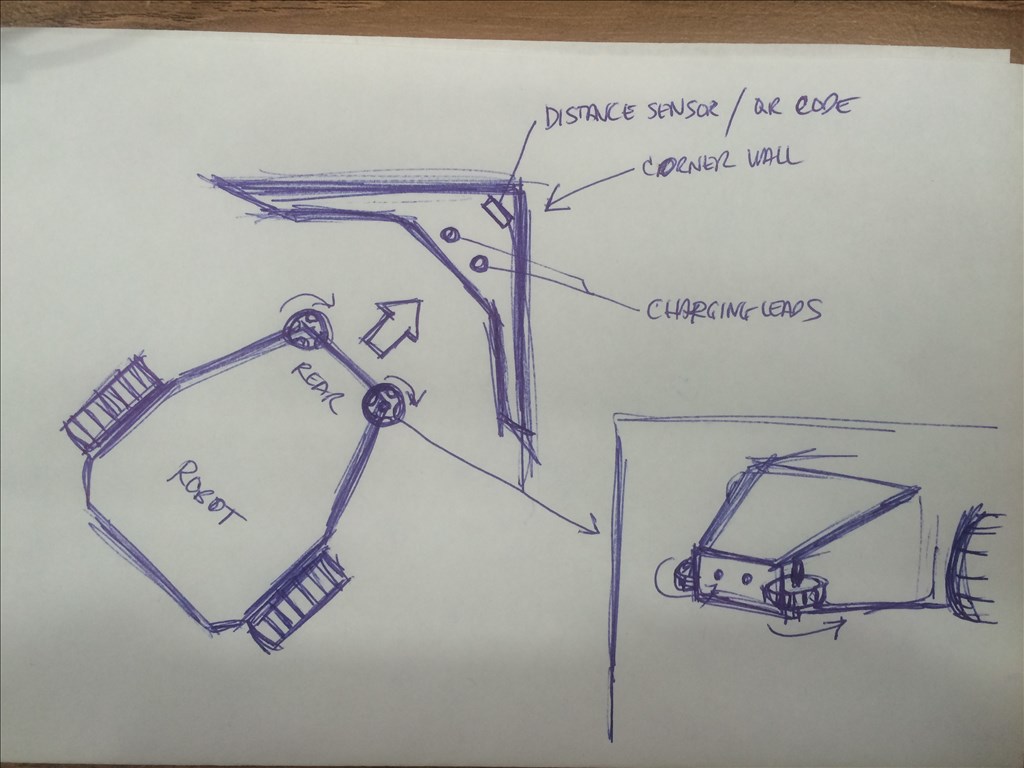

Here's one of them (rough sketch of course, be kind):

I was thinking of omni wheels on the back of the bot, mounted sideways, so all it needs to do is find the QR code or use the distance sensor, and the wheels would pretty much "force" the robot into the corner of the wall, where the charging leads could touch. We can make it so the charging leads are hidden or have a cover that pops open (using the distance sensor) when the bot is in the vicinity. Any thoughts? This seems doable to me.

You could use a mechanical lever to open/expose the charging connectors, similar to how MK Electrical do with their UK plug sockets to keep live parts inaccessible until safe (in this case, when the earth pin is pushed in it drops the covers, opening up the live and neutral "holes"; no earth pin means you cannot push anything into the live connectors).

A spring-loaded lever which the weight of the robot could hold down could open up similar holes for the connections to go into. That would be very safe, with no need for the charger to ever be isolated from the mains power supply.

@Rich... That's right, I work with Euro Plugs at my job all the time. Brilliant.

With a QR code posted in the charging nest, when the robot enters the charging nest and sees the QR code, have a servo on the robot open a door exposing the charging plug, pin, etc.

@rgordon

Yep that's in my original plan...not only a QR Code but you can also use Glyphs or the distance sensor too...you can even use color...if you can get JD to pick up a red ball why not approach a red charging dock, then the guide omni wheels can do the rest...

However, I do like @Rich's idea. For these things you need something simpler and foolproof, like an actual physical switch or something to mechanically actuate the cover to expose the hot charging leads...That's why I wanted to use something like an Arduino just for that purpose...plus it doesn't need to depend on ARC or another EZb to work.
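A rough sketch of that kind of interlock logic, written in Python just for readability (on an Arduino it would be the same few lines in C++). The names `cover_should_open`, `dock_switch_pressed`, `distance_cm` and the 10 cm threshold are all made up for illustration and are not part of ARC or any EZ-Robot API. The idea is that the physical switch is the main gate and the distance reading is only a second check, so a bad sensor reading keeps the cover closed.

```python
from typing import Optional

# Hypothetical threshold: how close the bot must be before the cover may open.
NEAR_DOCK_CM = 10.0


def cover_should_open(dock_switch_pressed: bool,
                      distance_cm: Optional[float],
                      near_cm: float = NEAR_DOCK_CM) -> bool:
    """Return True only when it looks safe to expose the charging leads."""
    # The physical switch is the primary gate: no contact, no exposed leads.
    if not dock_switch_pressed:
        return False
    # A missing sensor reading is treated as "not safe" (fail closed).
    if distance_cm is None:
        return False
    # Secondary check: the bot must actually be within the docking range.
    return 0.0 <= distance_cm <= near_cm


if __name__ == "__main__":
    print(cover_should_open(True, 4.0))   # switch pressed, bot close
    print(cover_should_open(False, 4.0))  # bot close, but switch not pressed
    print(cover_should_open(True, None))  # switch pressed, no sensor reading
```

Failing closed on every doubtful input is the point here: the leads stay covered unless both conditions agree.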

@Doombot I got slammed last week and just had a moment to look in on the forums. I have a "dog house" (for lack of a better description) charging house outside for the scouts.

They roll in when on stand-down and monitor sensors for outside activity and charge at the same time.

I like the Roomba style you have drawn, but on the big boys I have probes that insert into the bot after it hits the park sensor.

I still owe D.Cochran an EIA report on servers, but after I get this client done I'll get some pictures of the charger if you are interested.

@Pacowang

Please do, man! I'm interested...is it autonomous? How do you get it to dock?

@Anthony... I personally don't think of a remote control bot as a "real" robot in the true sense of the word... Having a robot do things autonomously is, yes, a huge challenge (depending on what you want it to do), but it is so much more rewarding... The shelves are full of boring remote control robot toys... If you're into that, then that's cool... Me personally? I want more... That's why I took the time (and am still learning) to use d.cochran's AI. AI will enhance all the future bots I make... My bots are going to navigate and think on their own....

@Anthony My plan, roughly, is: when the bot's battery is low, maybe at 5%, >

I'm gonna try to write individual scripts for each of these actions and basically piece them together...in theory this may work, but I'm trying to learn, so please correct me if anybody has a better way of doing this.
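One way the "piece them together" idea might look, sketched in Python rather than ARC's own scripting: each action is a small function that returns True when it worked, and a runner calls them in order and stops at the first failure. The step names (`find_dock_marker`, `drive_to_dock`, `open_cover`) are placeholders borrowed from ideas earlier in this thread, not the actual list of actions, and the 5% trigger is the number mentioned above.

```python
from typing import Callable, List, Tuple

LOW_BATTERY_PERCENT = 5.0  # trigger level mentioned in the post

# A step is a (name, action) pair; the action returns True on success.
Step = Tuple[str, Callable[[], bool]]


def needs_charge(battery_percent: float,
                 threshold: float = LOW_BATTERY_PERCENT) -> bool:
    """Return True when the battery is at or below the trigger level."""
    return battery_percent <= threshold


def run_steps(steps: List[Step]) -> List[str]:
    """Run steps in order, stopping at the first one that fails.

    Returns the names of the steps that completed successfully.
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            break
        completed.append(name)
    return completed


# Placeholder actions: each would be replaced by a real script.
def find_dock_marker() -> bool:
    return True


def drive_to_dock() -> bool:
    return True


def open_cover() -> bool:
    return True


DOCKING_STEPS: List[Step] = [
    ("find_dock_marker", find_dock_marker),
    ("drive_to_dock", drive_to_dock),
    ("open_cover", open_cover),
]

if __name__ == "__main__":
    if needs_charge(4.0):
        print(run_steps(DOCKING_STEPS))
```

Keeping each action separate like this means one step can be tested or swapped out (say, color tracking instead of a QR code) without touching the others.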

:P