Sproket (USA) asked:

Hello all,

I'm sure I'm missing something, but here is what I'm working with.

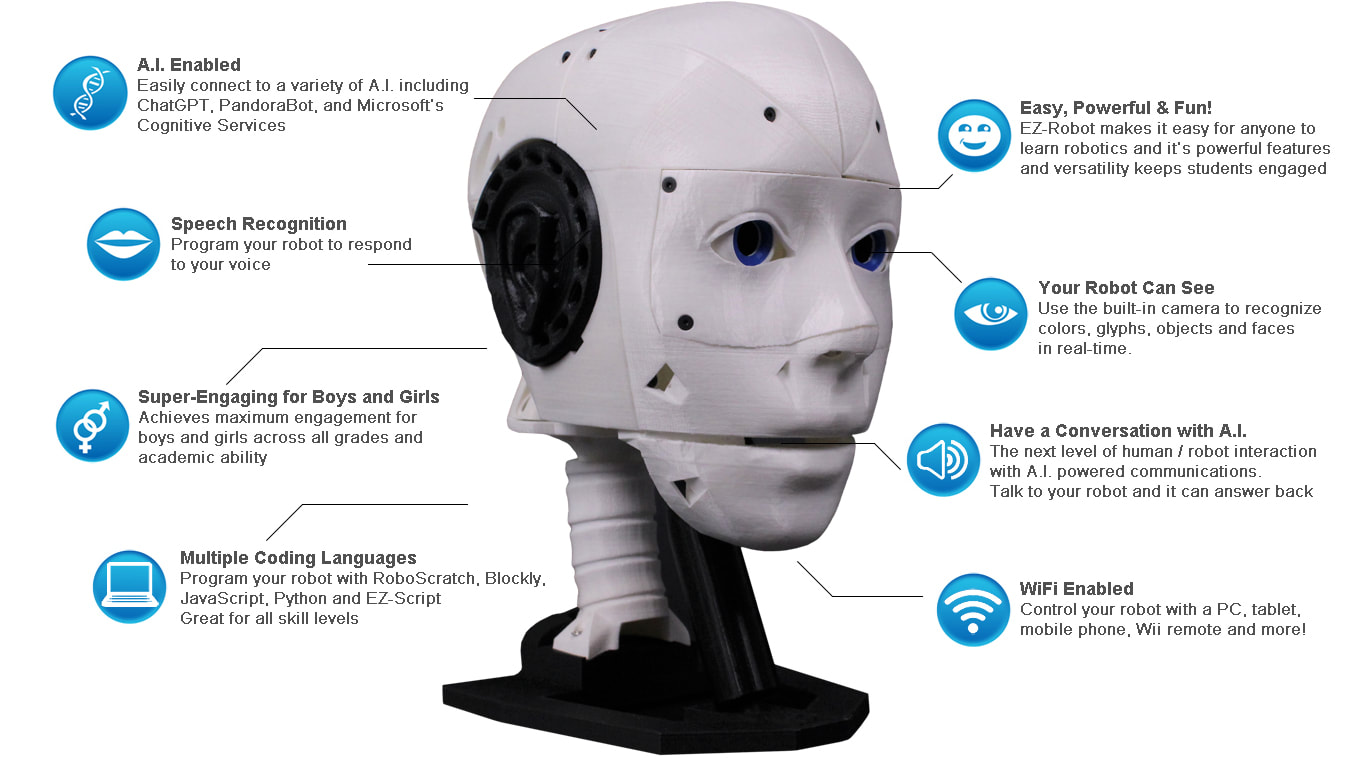

Setup: EZ-Robot head, Azure Text To Speech, Bing Speech Recognition with VAD, and OpenAI ChatGPT.

Everything works great, but I can't get the mouth to move when the response is spoken through the speaker in the robot head.

Azure Text To Speech is set to "Speak out of EZB". If I don't use Azure Text To Speech, it works. Am I missing a variable?

Thank you, all.

Related Hardware

EZ-InMoov Robot Head

by EZ-Robot & Gael Langevin

EZ-InMoov robot head: a DIY educational humanoid kit powered by Synthiam ARC for vision tracking, speech, chatbot integration, and mobile control.

Wi-Fi | Servos: 8 | Camera | Audio | UART: ✕ | I2C | ADC: 2 | Digital: 8

Related Robot Skills

Talk Servo V2

by Synthiam

Animate servos to simulate jaw/mouth with ARC text-to-speech; configurable vowel/consonant timing, start sync, multi-servo control, pause/stop.

Requires ARC | v5

Azure Text To Speech

by Microsoft

Azure TTS for ARC robots: generate natural, customizable neural voices for companion, educational, assistive, and entertainment applications.

Requires ARC | v11

Hello!

It sounds like you're having trouble getting the mouth movement to synchronize with the Azure Text To Speech output on your EZ-InMoov Robot Head. Azure Text To Speech generates and plays the audio, but it doesn't control the mouth servo itself, so you'll need a mechanism in your ARC project that moves the mouth in sync with the speech.

Here's a general approach to solving this issue:

1. Sound Servo control: Use the Sound Servo robot skill to move a servo based on the computer's sound output; it moves the servo whenever sound plays from the computer's speaker. In ARC, open "Add Robot Skill" and look in the "Misc" category to find it.

2. Configure Sound Servo: After adding the skill to your project, configure it to control the servo that operates the robot's mouth. Specify the port the mouth servo is connected to and adjust the settings to get the movement you want.

3. Test the configuration: Use the Azure Text To Speech skill to speak a phrase. The Sound Servo skill should detect the audio and move the mouth servo accordingly.

4. Adjustments: Fine-tune the Sound Servo settings so the mouth movement looks natural, for example the sensitivity, the range of servo movement, and the center position.
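To make the sensitivity, range, and center-position settings more concrete, here is a hypothetical JavaScript sketch (JavaScript being one of the script languages ARC supports) of how an audio level could be mapped to a jaw position. It is not the Sound Servo skill's actual code: `levelToServoPosition`, `threshold`, `closedPos`, and `openPos` are illustrative names, the audio level is assumed to be normalized to 0..1, and `closedPos` stands in for the rest/center position.

```javascript
// Hypothetical illustration, NOT the Sound Servo skill's implementation.
// Maps a normalized audio level (0..1) to a jaw servo position.
function levelToServoPosition(level, settings) {
  // Clamp the incoming level into the 0..1 range.
  var clamped = Math.min(Math.max(level, 0), 1);

  // At or below the sensitivity threshold the mouth stays at rest.
  // (This also avoids dividing by zero when threshold is 1.)
  if (clamped <= settings.threshold) {
    return settings.closedPos;
  }

  // Rescale so the threshold maps to closed and full volume maps to open.
  var t = (clamped - settings.threshold) / (1 - settings.threshold);
  return Math.round(settings.closedPos + t * (settings.openPos - settings.closedPos));
}

// Example settings (assumed values; tune them for your own jaw servo).
var jaw = { threshold: 0.1, closedPos: 90, openPos: 130 };
console.log(levelToServoPosition(0.05, jaw)); // quiet: stays at 90
console.log(levelToServoPosition(0.55, jaw)); // about halfway: 110
console.log(levelToServoPosition(1.0, jaw));  // loud: fully open at 130
```

Raising `threshold` keeps the mouth shut during background noise, while the gap between `closedPos` and `openPos` sets how far the jaw travels. Swapping the two positions handles a servo mounted in the opposite direction.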

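Remember, the Sound Servo skill relies on your computer's audio output, so the Azure Text To Speech skill must play its sound through the computer's speakers. With "Speak out of EZB" selected, the audio goes to the robot head's speaker instead, and the Sound Servo skill will not pick it up.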

If you need further assistance setting up the Sound Servo skill, refer to its support documentation on the Synthiam website: https://synthiam.com/Support/Skills/Misc/Sound-Servo

By following these steps, you should be able to get the mouth movement working with the Azure Text to Speech output on your EZ-InMoov Robot Head. If you encounter any issues or have further questions, feel free to reach out for more help.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

From the Talk Servo v2 manual page: it works only with the built-in Say commands. However, we quickly updated the Talk Servo v2 robot skill so that you can use it with Azure Text To Speech.

Instructions

1. Update to the latest Talk Servo v2 robot skill (https://synthiam.com/Support/Skills/Audio/Talk-Servo-v2?id=19940).

2. Send the Talk Servo v2 robot skill the "Speak" ControlCommand() with the text being spoken; it animates the mouth servos for that text.
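The instructions above can be wrapped in one small ARC JavaScript helper so every phrase goes to both skills. This is a sketch, not an official API: `speakWithMouth`, `TTS_SKILL`, and `TALK_SERVO_SKILL` are hypothetical names, the skill titles and command strings are copied from the calls Sproket reports working later in this thread, and the code sticks to ES5 syntax on the assumption that ARC's JavaScript engine accepts it.

```javascript
// Sketch: speak text with the Azure voice and animate the jaw for the same text.
// Skill titles are assumed to match the ones in the working example in this
// thread; rename them if your project's skills are titled differently.
var TTS_SKILL = "Azure Text To Speech";
var TALK_SERVO_SKILL = "Talk servo v2";

function speakWithMouth(text, send) {
  // Use the sender passed in (handy for testing); otherwise fall back to
  // ARC's global ControlCommand(). The || short-circuits, so ControlCommand
  // is only looked up when no sender is given.
  var cmd = send || ControlCommand;

  // Start the Azure voice; the output device (PC or EZB) comes from the skill's own config.
  cmd(TTS_SKILL, "speak", text);

  // Ask Talk Servo v2 to move the mouth for the same text.
  cmd(TALK_SERVO_SKILL, "Speak", text);
}

// In an ARC script:
// speakWithMouth("Hello i am speaking to you");
```

Sending both commands back to back mirrors the order in that working example; how well the audio and mouth stay in sync depends on the skills themselves, not on this wrapper. Any text you already have in a script, such as a chatbot reply, can be passed in the same way.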

Note: I edited your question to include the Talk Servo v2 robot skill.

Adding to the previous advice, you can also use a new option in the latest Azure Text To Speech robot skill: a script that runs each time the skill starts speaking, which can send the Talk Servo v2 ControlCommand.

Put this in the Azure Text To Speech script...

Thank you very much and Happy Holidays.

I did get Azure Text To Speech to speak and Talk Servo v2 to move the mouth using: ControlCommand("Azure Text To Speech", "speak", "Hello i am speaking to you"); ControlCommand("Talk servo v2", "Speak", "The text that is speaking");

Robot output: "Hello i am speaking to you", with the mouth moving and using the selected Azure voice.

Can I script this to speak the response from OpenAI ChatGPT with Azure Text To Speech (selected voice) and the mouth moving?

If not, I get it, but thank you all for what you do!

Rebooting ARC and downloading the newest update for Azure Text To Speech.

YOU GUYS ROCK! It's working with Azure Text To Speech (selected voice), Talk Servo V2, and OpenAI ChatGPT.

Mouth moving with speech.

Thank you, what a great mod/update. I feel like part of the team.

Can't say ENOUGH. What a great holiday gift to us all.

Thank you very much for the positive feedback. Enjoy!

Azure voices

https://speech.microsoft.com/portal/voicegallery