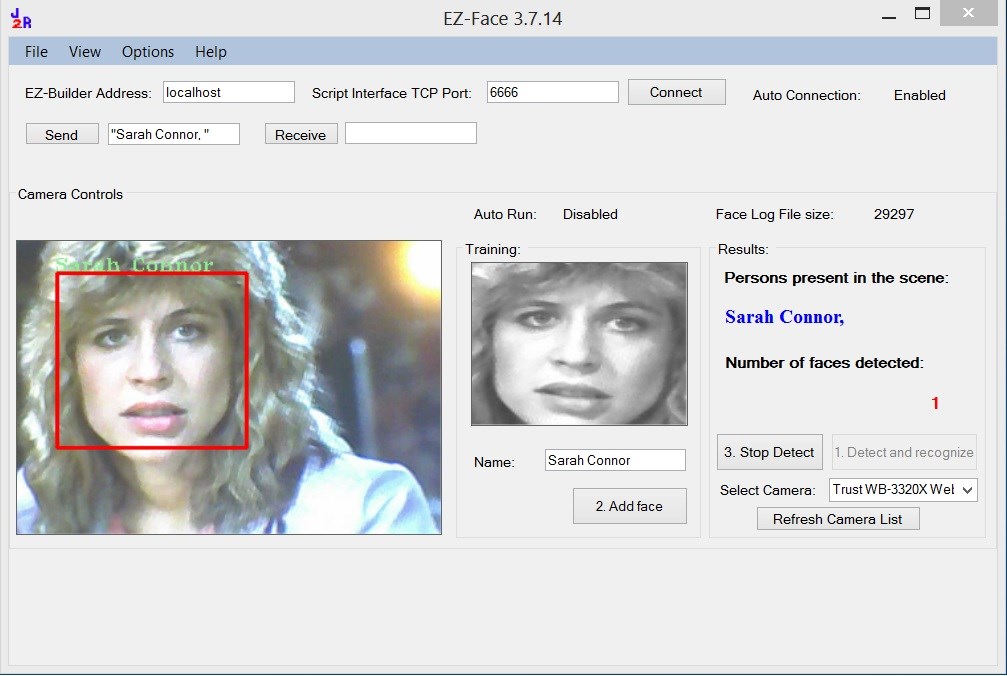

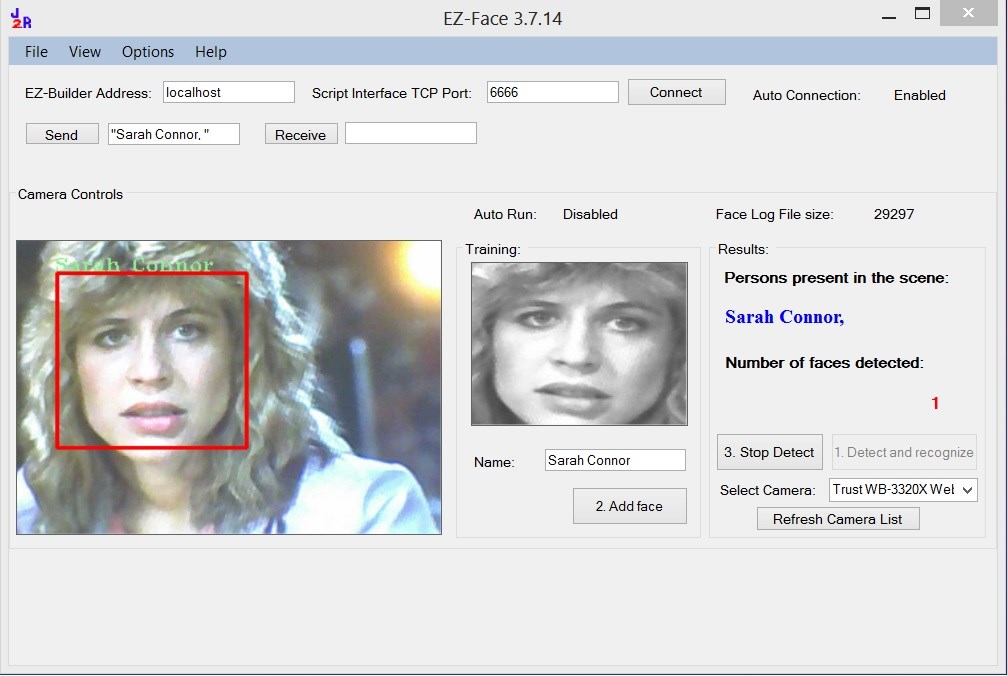

EZ-Face is the first in what I plan to develop into a suite of supporting applications for ARC and other robotics applications. EZ-Face performs multiple-face recognition. It has an interface for training faces and assigning names. When the application recognizes a face, it draws a box around the face and displays the assigned name. If a face is detected but not recognized, the box is drawn with no name. The more pictures of a face you train, the easier it is for the application to recognize that face.

This is a standalone application developed in C# under Visual Studio 2013. You should have .NET Framework 4.5 installed, and it is for Windows 7 and Windows 8.x systems.

This project showcase explains the technology behind the application and highlights development milestones.

Technology:

- Developed in .NET with Visual Studio 2013 (you can use the Express versions with the source code)
- Designed to work with ARC, but could be integrated into other software or robotic systems
- Is a standalone application
- Is open source; source code is included
- Uses the Emgu CV wrapper for .NET (OpenCV)

Resources (things I found helpful in creating the application):

- ARC Telnet interface tutorial (enabling Telnet is the first part it shows; Telnet is used to test communications to ARC manually via TCP/IP): https://synthiam.com/Tutorials/Help.aspx?id=159
- If you do not have Telnet installed on your system, go to this site: https://technet.microsoft.com/en-us/library/cc771275
- ARC SDK Tutorial 52: https://synthiam.com/Community/Questions/4952&page=1
- ARC script for listening to the TCP/IP port for variables: https://synthiam.com/Community/Questions/5255

Acknowledgements:

- DJ Sures, for making EZ-Robot and ARC so robust
- Rich, for his help with ARC scripting
- Sergio, for his Emgu CV examples

Basic Usage Directions (after download and install):

1. Open ARC and load the included EZ-Face example.
2. Click the Script start button (this sets up the communications on the ARC side).
3. Open the EZ-Face application.
4. Refresh your camera list (click the button).
5. Select your camera (in the drop-down list).
6. Click the "1. Detect and recognize" button.
7. Train at least one face.
8. Change the local address and port number as needed (the local IP address shown may not be your computer's address; you can enter "localhost" and leave the port set to 6666 unless you changed that setting in ARC).
9. Click File and select Save User Settings (to store your changes).
10. Click Connection (this opens the communication line to ARC from the EZ-Face side).
11. Allow EZ-Face to recognize the face you trained. With your computer speakers turned on, ARC should speak "Hello (the name of the face you trained)".
12. If the example works, integrate it into your EZ-Robot applications as you see fit.
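Steps 8 to 10 amount to opening a TCP connection to ARC and passing along the recognized name. For anyone integrating another program, here is a rough Python sketch of that idea: it sends a name as one newline-terminated line of text to `localhost` on port 6666. The message layout, the variable name `$FaceName`, and both function names are assumptions made for illustration and are not taken from the EZ-Face source, so check the ARC listener script for the format it actually expects.

```python
import socket


def format_face_message(name: str) -> bytes:
    """Build one newline-terminated line carrying the recognized name.

    The `$FaceName = "..."` layout is a hypothetical format, not the
    confirmed EZ-Face protocol.
    """
    # Drop double quotes so the name cannot break the quoted value.
    safe_name = name.replace('"', "").strip()
    return f'$FaceName = "{safe_name}"\n'.encode("utf-8")


def send_face_name(name: str, host: str = "localhost", port: int = 6666) -> None:
    """Open a TCP connection, send the message, and close the connection."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(format_face_message(name))
```

With the ARC script already listening, calling `send_face_name("Alice")` would deliver one line to it. If nothing is listening on that port, the call raises `OSError`, which is roughly what a failed Connection click corresponds to.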

Tips:

1. If you get false recognitions after training several faces (faces recognized with the wrong name), train the incorrectly recognized faces with the correct name. After a couple of training pictures are stored, the accuracy of the face recognition will improve.
2. Do not train faces with one camera and then switch to another camera for face recognition; recognition accuracy will drop.

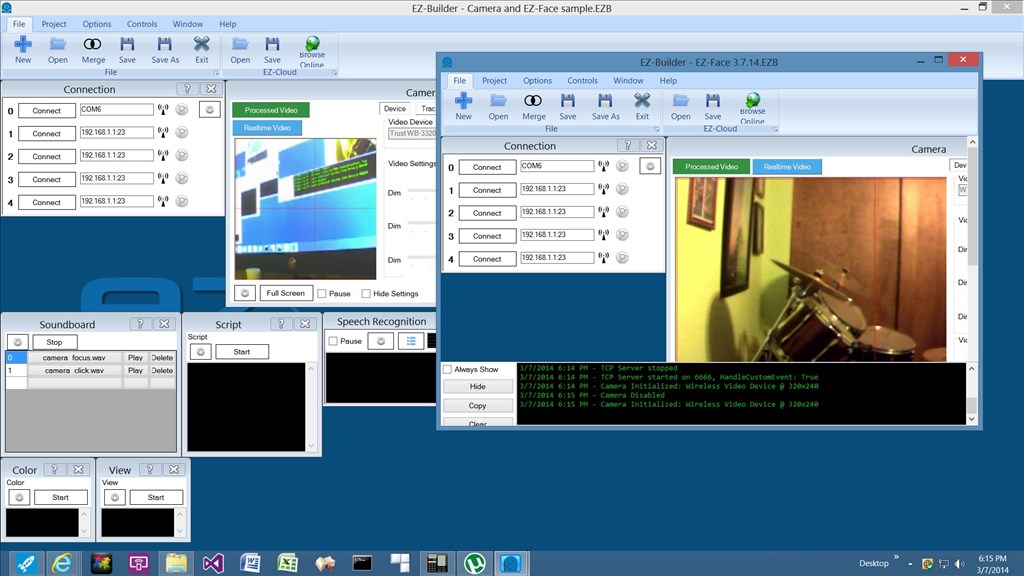

Using Two Cameras: What I found worked best was to start ARC, select the camera I wanted, and start the camera feed, and only then start EZ-Face. If I reversed the process (even though I was selecting a different camera), I would get a black image in ARC.

2.26.14 Update: I still have several improvements I want to make before I upload the first public version of the application.

3.2.14 Update: The first public version is ready for release and is posted at the link below. This version has many user improvements that allow you to store many settings, including HTTP address and port, camera device, logging of faces in a text file (up to 1 MB of data before the file auto-deletes), face variable output to ARC, face training and more.
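The size-capped face log described above can be pictured with a small Python sketch. It is not the EZ-Face code (which is C#); the function name `log_face`, the tab-separated line layout, and the reading of "1mb" as 1,000,000 bytes are all assumptions.

```python
import os
from datetime import datetime

# Assumed interpretation of the 1 MB cap; the real threshold may differ.
MAX_LOG_BYTES = 1_000_000


def log_face(log_path: str, name: str, max_bytes: int = MAX_LOG_BYTES) -> None:
    """Append one timestamped line, deleting the file first if it reached the cap."""
    if os.path.exists(log_path) and os.path.getsize(log_path) >= max_bytes:
        # Start over with an empty log rather than rotating to a backup file.
        os.remove(log_path)
    stamp = datetime.now().isoformat(timespec="seconds")
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(f"{stamp}\t{name}\n")
```

Deleting rather than rotating keeps the sketch close to the "auto deletes" behavior, at the cost of losing the whole history each time the cap is reached.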

3.3.14 Update:

I updated the script. Version 3.3.14 has the HTTP Server panel (which is not used; you don't need to start it), but it does show you your computer's IP address so you can enter it in EZ-Face. Remember to save your settings under the File menu. I also changed the script so it will now only speak for variable values that are not "" or NULL.

EZ-Face3.3.14.EZB
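The 3.3.14 script change is a guard on the incoming value: no greeting unless there is a real name. The actual check lives in the ARC script; the Python below only illustrates the logic, and the function name `greeting_for` is made up for the example.

```python
from typing import Optional


def greeting_for(face_name: Optional[str]) -> Optional[str]:
    """Return the greeting to speak, or None when there is no usable name."""
    if face_name is None or face_name.strip() == "":
        return None  # nothing recognized, so stay silent
    return f"Hello {face_name.strip()}"
```

An unrecognized face (box with no name) would arrive as an empty value and produce no speech.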

3.7.14 Update: I updated the EZ-Face application: "localhost" is now the default address, there is a new option for auto connect, and there are functions to receive commands from ARC or another third-party application to stop and start the camera feed within EZ-Face. There is also a new ARC project with several new scripts to test out the functions. Please go to my site to download the latest version. You will also find a video there that demonstrates the new functions and provides directions for setup and usage.

Download: The latest version will be published here: https://www.j2rscientific.com/software For support and reporting any errors please use the ContactUs feature from https://www.j2rscientific.com with the subject line "EZ-Face".

I welcome any and all feedback!

Thank you

Other robots from Synthiam community

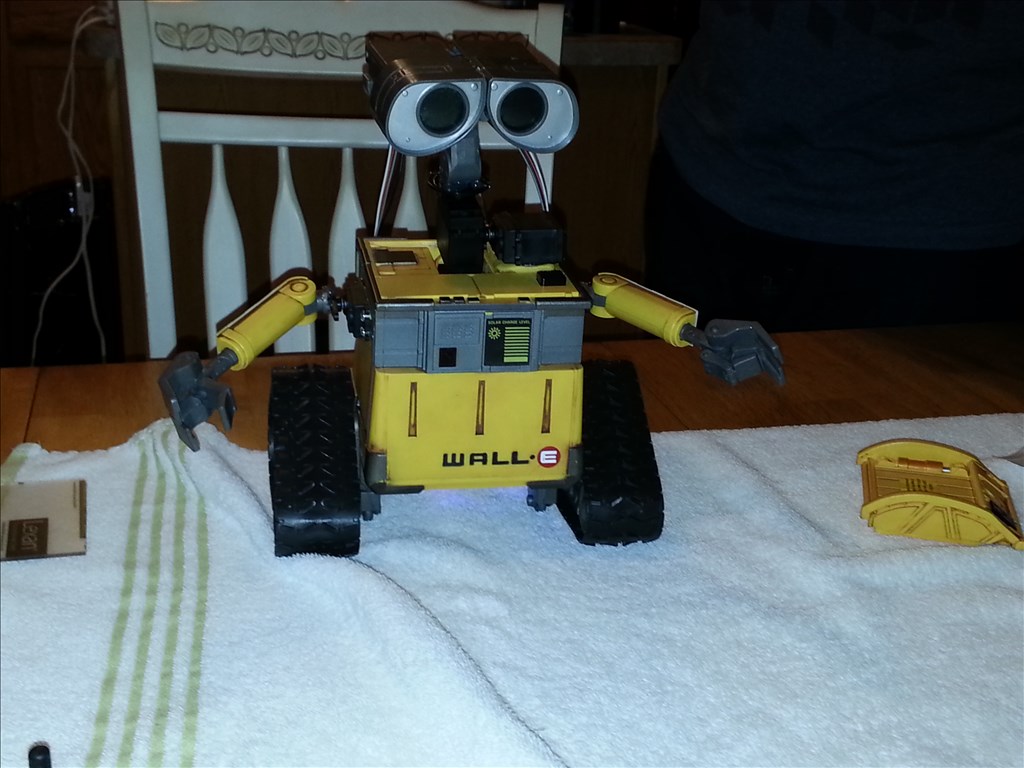

Slim6072's My Wall-E

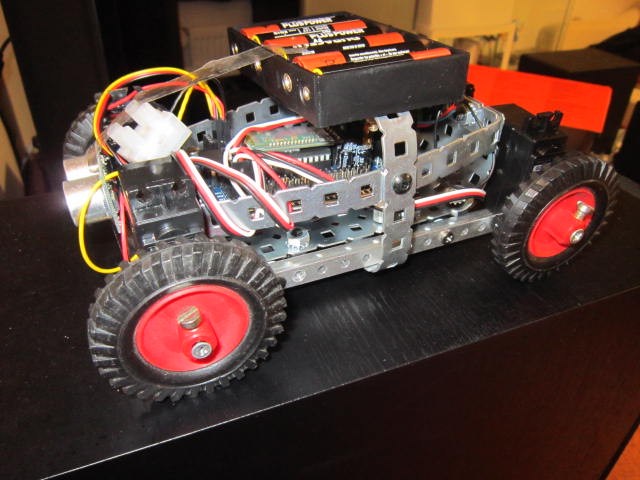

Cosplaying's Project Mini Rover

Can EZ-Face identify a person's face and say their name in a greeting?

@bhouston have you seen the video I posted? The video demonstrates EZ-Face doing just that.

Hi Guys, I'm a newbie to the forum but have been following it for a while and frankly it's by far the best and most helpful that I have seen bar none, and has by far the most way out projects. I have the EZB V4 and camera on order and hope to be starting a project soon - meanwhile I've been playing with ARC - AWESOME!

So I tried out EZ-Face - really nice job and excellent video instructable! Many, many thanks Justin for sharing your hard work here. For me EZ-Face functionality was one of THE features that a robot should have and it delivers on that! As a note of interest I added all the family faces and it was interesting to see how OpenCV got a little confused between my daughter and my wife and then me and my son. It settled all the arguments of who the kids take after :) My guess is that OpenCV is sensitive to lighting conditions but a new photo or two seems to quickly sort that.

I'll keep following the posts and bit by bit try and understand the scripting better.

I'm new here. I'll look for that video.

@JustinRatliff just read all this thread awesome job

@JustinRatliff - you may be interested in this link to show how others were thinking of object recognition:

QBO Blog

I read somewhere that OpenCV needs a fair amount of images before it starts working well.

I am using EZ Face on InMoov Project. Using left eye at this time. I am about 6 weeks into this project.

@Jack Your robot is amazing! To see EZ-Face used in your robot is just awesome! Thanks for sharing!