Hey there!

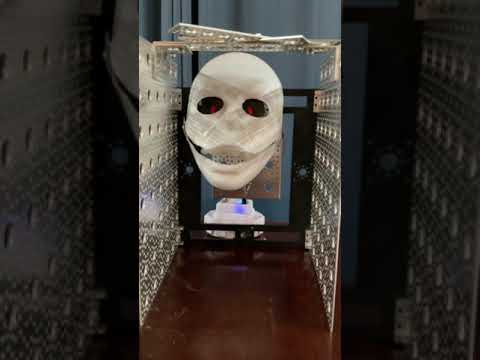

Here is my latest project, this time an emotion-generating one. When activated through speech recognition, your robot responds with an emotion: happy, sad, angry, or tired. They are all there.

It is built as a development project, so you can continue developing it.

It works by having an always-running main script that checks variable values. Those values change depending on which emotion you want. Happy? Set $happy to true and all the others to false. Sad? Set $sad to true and all the others to false.
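The flag-based mechanism above can be sketched in Python as follows. This is a minimal illustration, not the project's actual EZ-Script. The `flags` dictionary stands in for the `$happy`/`$sad`/`$angry`/`$tired` variables. The function names are hypothetical, and so is the choice to trigger an emotion only when it changes.

```python
import time
from typing import Callable, Dict, Optional

# Hypothetical stand-ins for the $happy, $sad, $angry and $tired EZ-Script variables.
flags: Dict[str, bool] = {"happy": False, "sad": False, "angry": False, "tired": False}


def set_happy() -> None:
    # Each emotion's script sets its own flag and clears all the others by hand.
    flags["happy"] = True
    flags["sad"] = False
    flags["angry"] = False
    flags["tired"] = False


def set_sad() -> None:
    flags["happy"] = False
    flags["sad"] = True
    flags["angry"] = False
    flags["tired"] = False


def active_emotion() -> Optional[str]:
    # Return the first emotion whose flag is true, or None if all are false.
    for name, is_set in flags.items():
        if is_set:
            return name
    return None


def main_loop(on_change: Callable[[str], None], passes: int, delay_s: float = 0.1) -> None:
    # The "always running" main script, bounded to a number of passes so it can be tested.
    # Assumption: an emotion's actions are started only when the active emotion changes.
    last: Optional[str] = None
    for _ in range(passes):
        current = active_emotion()
        if current is not None and current != last:
            on_change(current)
        last = current
        time.sleep(delay_s)
```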

Emotions currently integrates the Personality Generator and the RGB Animator to help bring personality to your robots. It works best with the JD Revolution robot, since it has RGB eyes.

If people who are less skilled at scripting want something added, I can do further development myself.

Unlike my previous project, Emotions is built entirely in EZ-Builder, with nothing extra to install. Simply find the file in the EZ-Cloud or in this thread, download it, and you're off!

Adding an emotion is a little tedious, because every script that activates a different emotion must also set the new emotion's variable to false. As it stands, that means adding an extra variable assignment to at least 8 other scripts. You also have to add its call in the "Emotion Manager". But once that's done (a 10-minute job in the best case), you're good to go!
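One possible way to reduce that work, which is *not* how Emotions currently works, is to keep all emotion names in one list. A single helper then sets the chosen flag and clears the rest, so a new emotion only has to be added in one place. The sketch below is in Python. `EMOTIONS`, `set_emotion`, and the extra "surprised" emotion are hypothetical names used for illustration.

```python
from typing import Dict, List

# Hypothetical single source of truth: adding an emotion means appending one name here.
EMOTIONS: List[str] = ["happy", "sad", "angry", "tired", "surprised"]

flags: Dict[str, bool] = {name: False for name in EMOTIONS}


def set_emotion(chosen: str) -> None:
    # Set the chosen flag and clear every other one in a single pass,
    # instead of editing every other emotion's script by hand.
    if chosen not in flags:
        raise ValueError(f"unknown emotion: {chosen}")
    for name in flags:
        flags[name] = name == chosen
```

The trade-off is that every emotion script must call the shared helper rather than assign its own variables. In exchange, the "set all others to false" rule lives in exactly one place.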

If you want to add actions to an emotion, simply click the emotion's edit button in the "Script Manager" and add the command that starts that action.

I hope you guys like Emotions, and I can't wait to see robots using it!

Disclaimer: This project is untested with robots. It may not work correctly with your robot at first. Use at your own risk. The creator, under the alias Technopro, is not responsible for any damage resulting from the use of this project.

Enjoy your day!

Tech

As for the limited number of functions, I wanted it to be easily editable so others can incorporate it into their robots. Each robot is different, so if I put servo movement actions in the scripts, another robot might spin around and break something off.

I did notice some servo routines in there, though. What do those servos do on your robot?

I put servo speed in there because it can easily be set for other robots, or removed completely, depending on the application. It also serves as an example. I didn't test it with any of my robots.

Thanks for the project! I'm also working on an emotion model which will probably be finished in a few months. I'll try out your system on my Roli soon.

Yes, you're actually the one I got the inspiration from. I would have credited you for the idea, but I couldn't find the post to name you. I'm excited to see what you make. It's an external program, am I correct?

Correct. My project is pretty big, so it takes a while. Warning: wall of text.

The current status:

I'm working on a Prolog library that transforms the spoken sentence into a semantic representation, so that it can be used as a query on the agent's knowledge/belief base. That base will be a combination of Java objects, Semantic Web technology, and Prolog. With this speech-interface setup, I don't have to pre-program every command in ARC.
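The core idea is to turn a sentence into either a new belief or a query on a belief base, rather than pre-programming each command. A deliberately tiny Python sketch can illustrate it. It is *not* the author's Prolog library. It swaps the Prolog/Semantic Web stack for plain (subject, relation, object) triples and two hypothetical regular-expression patterns. The relation names are made up for illustration.

```python
import re
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical belief base of (subject, relation, object) triples.
beliefs: Set[Triple] = {("robot", "facing", "wall")}

# Hypothetical patterns; a real semantic parser would be grammar-based, not regex-based.
STATEMENT = re.compile(r"^i'?m (in front of|behind|left of|right of) you$")
QUESTION = re.compile(r"^what (?:are|is) (?:you|the robot) facing\??$")


def interpret(sentence: str) -> Optional[str]:
    # Normalise case, curly apostrophes and trailing sentence punctuation.
    text = sentence.strip().lower().replace("\u2019", "'").rstrip(".!")
    m = STATEMENT.match(text)
    if m:
        # A statement becomes a new belief about where the user is relative to the robot.
        beliefs.add(("user", "position", m.group(1).replace(" ", "_")))
        return "ok"
    if QUESTION.match(text):
        # A question becomes a query with a wildcard object: ("robot", "facing", ?).
        answers = [o for (s, r, o) in beliefs if s == "robot" and r == "facing"]
        return answers[0] if answers else "unknown"
    return None  # not understood
```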

For example, I can now start my robot facing the wall. As its initial goal, it will try to find and greet me. This makes the agent software switch on the face recognition module and look around with the camera, periodically saying "Where are you?". If the robot can't find me, I can say something like "I'm behind you", and the agent will reason that it has to turn its body to face me. Once the camera detects my face, the robot waves at me, switches off face recognition, and drops the goal.
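The goal's life cycle in that example can be sketched as a small event-driven state machine in Python. Note a key difference: the author's agent *reasons* its way to these actions, while this sketch hard-codes them as event handlers. `FakeRobot`, the action strings, and the `ask_every` interval are all hypothetical.

```python
from typing import List


class FakeRobot:
    """Hypothetical robot interface that just records the actions it is asked to do."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def do(self, action: str) -> None:
        self.log.append(action)


class GreetUserGoal:
    """Sketch of the 'find and greet me' goal."""

    def __init__(self, robot: FakeRobot, ask_every: int = 3) -> None:
        self.robot = robot
        self.ask_every = ask_every  # hypothetical: ask "Where are you?" every N ticks
        self.ticks = 0
        self.active = True
        robot.do("face_recognition_on")  # adopting the goal switches perception on

    def on_tick(self) -> None:
        # While searching, keep looking around and periodically ask for help.
        if not self.active:
            return
        self.ticks += 1
        self.robot.do("look_around")
        if self.ticks % self.ask_every == 0:
            self.robot.do("say: Where are you?")

    def on_speech(self, sentence: str) -> None:
        # If the user is behind the robot, turning the body should bring them into view.
        if self.active and "behind you" in sentence.lower():
            self.robot.do("turn_around")

    def on_face_detected(self) -> None:
        # Goal achieved: greet, switch off face recognition, and drop the goal.
        if self.active:
            self.robot.do("wave")
            self.robot.do("face_recognition_off")
            self.active = False
```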

Once I have the knowledge/belief base set up properly, I will finally move on to the emotion modules. As a foundation I'll use Bas Steunebrink's formal model of emotions (see his thesis) and combine it with my own models for runtime monitoring and control (I'm still writing my own thesis).

The emotion model has three separate subsystems: emotion elicitation, experience, and regulation. Emotion elicitation causes immediate emotions, such as happiness when I give the robot a toy. Emotion experience takes these immediate emotions as input and produces a mood, a longer-lasting effect such as a happy or brooding mood. When no emotional input occurs for a while, the mood slowly decays back to neutral. Finally, emotion regulation makes the agent act upon its emotions. For example, using your project, a switch in mood could make the eyes of a JD head (which I intend to buy for my Roli) play an animation associated with that mood.
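The three-stage pipeline can be illustrated with a heavily simplified Python sketch. Mood here is a single valence number in [-1, 1] that decays exponentially toward neutral (0). This one-dimensional model is an assumption for illustration and is much simpler than Steunebrink's formal model. The emotion names, impulse sizes, thresholds, and animation names are all hypothetical.

```python
import math
from typing import Dict

# Elicitation (hypothetical): immediate emotions mapped to a valence impulse.
ELICITED_VALENCE: Dict[str, float] = {"joy": 0.5, "distress": -0.5}


def animation_for(mood: float) -> str:
    # Regulation (hypothetical): pick an eye animation associated with the current mood.
    if mood > 0.2:
        return "happy_eyes"
    if mood < -0.2:
        return "sad_eyes"
    return "neutral_eyes"


class Mood:
    """Experience: a one-dimensional mood that decays exponentially back to neutral (0.0)."""

    def __init__(self, half_life_s: float = 60.0) -> None:
        self.value = 0.0
        self.rate = math.log(2) / half_life_s  # decay constant derived from the half-life

    def elicit(self, emotion: str) -> None:
        # Add the immediate emotion's impulse, clamped to [-1, 1].
        self.value = max(-1.0, min(1.0, self.value + ELICITED_VALENCE[emotion]))

    def decay(self, dt_s: float) -> None:
        # With no emotional input: mood(t + dt) = mood(t) * exp(-rate * dt).
        self.value *= math.exp(-self.rate * dt_s)
```

For example, giving the robot a toy might elicit `"joy"`. The mood rises to 0.5 and the eyes switch to `happy_eyes`. After one half-life the mood has halved, and after several half-lives the eyes fall back to `neutral_eyes`.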

From my quick prototypes, I find it much easier to implement relatively complex behavior in my agent software than purely in EZ-Script. Emotions in particular permeate many aspects of the robot, which makes them hard to implement and maintain using only EZ-Script. So the framework is fairly complex, with a lot of subsystems, but once you get the hang of it, building A.I. for EZ-Robots (and other systems) becomes easier.

That's just the gist of it.

@BasTesterink

Very interesting. Bas Steunebrink's work is fascinating, and I look forward to seeing your work as well. I was wondering, though: are the lines in the quote reversed with respect to the mode of operation? That is to say, should it have been:

Thanks.

@WBS00001 To my knowledge, EZ-Script in ARC cannot use telnet to push information out of the system, but it can use HTTPGet to do so.

In the other direction, you cannot use an HTTPGet command to set variables and start a script from outside ARC, but you can use telnet for that.
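Without option negotiation, telnet is essentially plain text over TCP, so an external agent can push commands with an ordinary socket. Python's `telnetlib` was deprecated and then removed in Python 3.13. The Python sketch below assumes ARC's telnet server accepts one EZ-Script line per CR/LF-terminated line and answers with a line of its own. That reply behaviour, the host and port, and the example command `$findUser = true` are all hypothetical. The timeout set in `connect` keeps `send_ez_script` from waiting forever if no reply comes.

```python
import socket


def connect(host: str, port: int, timeout_s: float = 5.0) -> socket.socket:
    # Hypothetical address: use whatever host and port ARC's telnet server listens on.
    return socket.create_connection((host, port), timeout=timeout_s)


def send_ez_script(sock: socket.socket, command: str) -> str:
    """Send one command line and return the server's reply up to the first newline."""
    # Assumption: the server is line-based, one command per CR/LF-terminated line.
    sock.sendall((command + "\r\n").encode("utf-8"))
    buffer = b""
    while b"\n" not in buffer:
        chunk = sock.recv(1024)
        if not chunk:  # connection closed before a full line arrived
            break
        buffer += chunk
    line, _, _ = buffer.partition(b"\n")
    return line.decode("utf-8").strip()
```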

For instance:

When the agent wants to find me, it sends the telnet command:

The EZ-Script is:

(Comment: because face recognition produces many small false positives, the script requires a minimum camera object width, so a detection only counts as positive when I'm fairly close to the camera. In the future I'll try other, more accurate face recognition software.)
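The minimum-width rule is a simple size filter on detections. Small bounding boxes are either spurious or belong to faces far from the camera, so they are discarded. Outside EZ-Script, it might look like the Python sketch below. The `Detection` type and the threshold value are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    x: int       # left edge in pixels
    y: int       # top edge in pixels
    width: int   # bounding-box width in pixels
    height: int  # bounding-box height in pixels


def close_faces(detections: List[Detection], min_width: int) -> List[Detection]:
    # Keep only boxes at least min_width pixels wide; smaller ones are treated as
    # false positives or as faces too far away to count.
    return [d for d in detections if d.width >= min_width]
```

Raising `min_width` trades recall for precision: fewer false positives, but the person has to stand closer to the camera.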

The $PERCEPTURL variable is set by an init script and refers to my agent's internet address.
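On the agent side, something has to answer the HTTPGet calls that ARC makes to `$PERCEPTURL`. Below is a minimal Python sketch using the standard library's `http.server`. It assumes percepts arrive as query-string parameters. The `/percept` path, the parameter names, and the port are hypothetical. Received percepts are queued for the agent's reasoning loop, in keeping with the principle of letting the agent, not EZ-Script, do the decision making.

```python
import queue
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict
from urllib.parse import parse_qs, urlparse

# Percepts received from ARC are queued here for the agent's reasoning loop.
percepts: "queue.Queue[Dict[str, str]]" = queue.Queue()


def parse_percept(path: str) -> Dict[str, str]:
    """Turn e.g. '/percept?face=seen&width=80' into {'face': 'seen', 'width': '80'}."""
    query = parse_qs(urlparse(path).query)
    # parse_qs returns a list of values per key; keep the first one.
    return {key: values[0] for key, values in query.items()}


class PerceptHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # self.path includes the query string sent by ARC's HTTPGet call.
        percepts.put(parse_percept(self.path))
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(b"ok")


def serve(port: int) -> None:
    # Blocks forever; run it in its own thread alongside the agent.
    HTTPServer(("0.0.0.0", port), PerceptHandler).serve_forever()
```

With `serve(8000)` running, `$PERCEPTURL` would hold something like `http://<agent-ip>:8000/percept`, and an EZ-Script HTTPGet to that URL with parameters appended would land in `percepts`.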

My design principle is to use EZ-Script to only specify high level observations and actions in small scripts, and let the agent software do all the reasoning/decision making.

I hope that clarifies it a bit.