jp15sil24

I am transitioning from EZ-Script to JavaScript because I heard that ARC X will no longer support EZ-Script. I've been struggling for hours with simple tasks that don't seem to work. I plan to write more complex code later. Here's what I want the code to do:

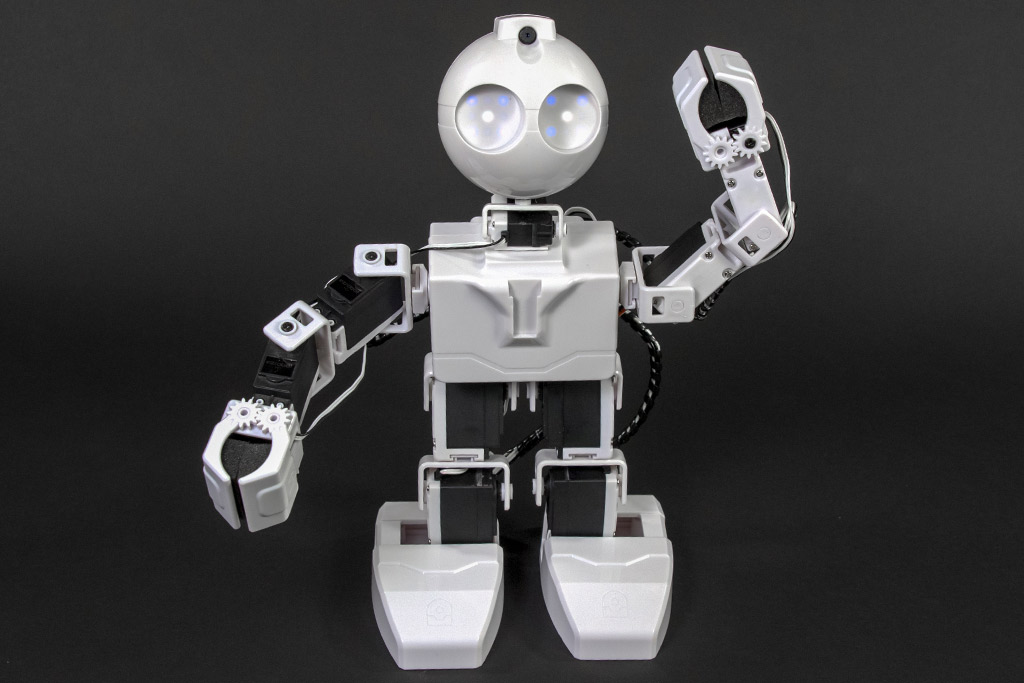

JD should introduce himself, and I will respond. JD should recognize my response and reply accordingly. If JD can't recognize what I'm saying, it should start the process again. Could you please review the code below?

    //************** Start Here ***************
    Audio.sayWait("Hi, my name is JD"); // JD's introduction
    sleep(500);
    ControlCommand("Bing Speech Recognition", "StartListening");
    sleep(3000);
    print("Answer is: " + getVar("$BingSpeech")); // For testing purposes

    if (getVar("$BingSpeech") == "Hi JD" || getVar("$BingSpeech") == "Hello JD") {
      sleep(1000);
      Audio.say("Hello Philip");
    } else {
      Audio.say("Sorry, I don't understand. Can you say that again?");
    }

The syntax seems correct since there are no errors, but the problem is that the $BingSpeech variable holds the previous input instead of the current one. For example, if I start the script a second time and say "Hello JD," the print statement displays "Hi JD" because I said "Hi JD" the first time. It seems that the $BingSpeech variable retains the last input. Perhaps clearing the variable would help, but I'm not sure how to do that.

I hope my explanation is clear. Additionally, could you modify the script to include a loop when the response is not "Hello JD" or "Hi JD"? I tried using a goto statement, but that's specific to EZ-Script.


Let's address the issues you're facing with your JavaScript code in ARC. The main problem seems to be that the $BingSpeech variable retains the previous input. To handle this, you can clear the variable after processing it. Additionally, I'll help you implement a loop to keep asking for input until the correct response is received.

Here's a revised version of your script:

Key Changes:

1. Clear the Variable: A function clearBingSpeech() is added to reset the $BingSpeech variable to an empty string after processing the input. This ensures that the variable does not retain the previous input.

2. Recursive Function: The handleResponse() function is used to handle the speech recognition and response. If the response is not recognized, it calls itself again, effectively creating a loop until the correct response is received.

3. Function Structure: The code is organized into functions to make it more modular and easier to manage.
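The changes listed above can be sketched roughly as follows. This is a minimal sketch under assumptions, not a definitive implementation: it retries with a bounded `while` loop rather than recursion, the 3-second `sleep` is still only a guess at how long listening takes, and the stand-in functions at the top are hypothetical and exist only so the sketch runs outside ARC. Inside ARC, delete the stand-ins and rely on the built-in `getVar`, `setVar`, `sleep`, `print`, `ControlCommand`, and `Audio`.

```javascript
// --- Stand-ins so this sketch runs outside ARC (hypothetical; delete inside ARC) ---
var vars = {};                                  // simulated ARC global variables
var spoken = [];                                // everything the robot "said"
var heardQueue = ["Good morning", "Hello JD"];  // simulated user replies
function getVar(name, fallback) { return name in vars ? vars[name] : fallback; }
function setVar(name, value) { vars[name] = value; }
function sleep(ms) {}                           // no real waiting in the simulation
function print(text) { console.log(text); }
function ControlCommand(skill, command) {
  // Simulates Bing finishing before the script reads the variable
  if (command === "StartListening") setVar("$BingSpeech", heardQueue.shift() || "");
}
var Audio = {
  say: function (text) { spoken.push(text); },
  sayWait: function (text) { spoken.push(text); }
};
// --- End of stand-ins ---

// Reset the variable so an old phrase cannot be mistaken for a new one
function clearBingSpeech() {
  setVar("$BingSpeech", "");
}

// Ask once; return true if the greeting was recognized
function handleResponse() {
  clearBingSpeech();
  ControlCommand("Bing Speech Recognition", "StartListening");
  sleep(3000); // assumption: listening finishes within 3 seconds

  var answer = getVar("$BingSpeech", "");
  print("Answer is: " + answer);

  if (answer == "Hi JD" || answer == "Hello JD") {
    Audio.say("Hello Philip");
    return true;
  }
  Audio.sayWait("Sorry, I don't understand. Can you say that again?");
  return false;
}

Audio.sayWait("Hi, my name is JD");

// Retry a limited number of times instead of looping forever
var attempts = 0;
while (attempts < 5 && !handleResponse()) {
  attempts++;
}
```

Clearing the variable before listening, rather than after, means a stale phrase can never be read even if the script was stopped halfway through a previous run.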

This script should help JD recognize your response and reply accordingly, retrying if the response is not understood. If you have any further questions or need additional assistance, feel free to ask!

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

The problem is more complicated than Athena's answer suggests. She’s trying to help by assuming you’re on the right track and building off where you started. The issue is that you’re writing the program linearly.

Programming these days is event based. That means you trigger an action and the next code is executed by that action.

But before I write more to help, I need to understand something. This is an important question about what you expect from conversations with the robot, because the next steps depend on your answer.

Are you expecting the robot to have an open dialog conversation, or do you want every question to have specific expected responses (e.g., "Hello JD", "How are you?", etc.)?

You've probably noticed that I'm not a programmer; my thinking is linear and focused on one task at a time. I thought Athena's solution with function calls was great. My main project is to build a larger robot, like an Inmoov humanoid, but with wheels or on a rolling chassis, capable of having open-dialogue conversations and moving autonomously at home. At the moment, I’m working on small robots to learn ARC, develop different skills, and practice with JavaScript, servos, etc. Before tackling something bigger, I believe this approach is the best way to prepare. For my current project with JD on a chassis, I was thinking of using specific responses. I control the chassis with a joystick/voice and JD with voice commands to play sounds. Now, your question has made me think :-/ Why not do more with this chassis+JD setup? I could later reuse this configuration for my 'Inmoov humanoid' project. Since the next steps matter so much, I want my current project (JD+chassis) to support open-dialogue conversations and be able to move autonomously around my house.

Funny, that happens to me too when I use the Bing Speech Recognition and ChatGPT skills. It is always one input behind. If I say "HI", nothing happens in ChatGPT. Then I say, for example, "How are you", and "HI" appears in ChatGPT, and so on.

That’s because you’re both thinking linearly.

Each robot skill is a task, and a task has a result. So you trigger a task with a control command, and the result of the task is handled in that skill's own script.

1. Your script triggers Bing to listen
2. Bing listens
3. Bing finishes listening and now executes its own script, where you process the result
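Those three steps might look like this as two separate scripts: a main script that only triggers listening, and the script inside the Bing Speech Recognition skill that runs once a phrase has been recognized. This is a sketch under assumptions. The stand-ins at the top are hypothetical and only simulate ARC so the example runs on its own, and `finishRecognition` is a made-up helper that represents the moment Bing finishes listening.

```javascript
// --- Hypothetical stand-ins that simulate ARC outside of ARC ---
var vars = {};
var spoken = [];
var listening = false;
function getVar(name, fallback) { return name in vars ? vars[name] : fallback; }
function setVar(name, value) { vars[name] = value; }
var Audio = {
  say: function (text) { spoken.push(text); },
  sayWait: function (text) { spoken.push(text); }
};
function ControlCommand(skill, command) {
  // Returns immediately; the skill does its work later
  if (skill === "Bing Speech Recognition" && command === "StartListening") listening = true;
}
// Simulates Bing finishing: store the text, then run the skill's own script
function finishRecognition(text) {
  if (!listening) return;
  listening = false;
  setVar("$BingSpeech", text);
  bingRecognizedScript();
}
// --- End of stand-ins ---

// Script 1: the main script. It only triggers the task and ends.
function mainScript() {
  Audio.sayWait("Hi, my name is JD");
  ControlCommand("Bing Speech Recognition", "StartListening");
}

// Script 2: belongs to the Bing skill and runs after each recognition,
// so $BingSpeech always holds the phrase that was just spoken.
function bingRecognizedScript() {
  var answer = getVar("$BingSpeech", "");
  if (answer == "Hi JD" || answer == "Hello JD") {
    Audio.say("Hello Philip");
  } else {
    Audio.sayWait("Sorry, I don't understand. Can you say that again?");
    ControlCommand("Bing Speech Recognition", "StartListening"); // listen again
  }
}

// Simulated run: one unrecognized phrase, then a recognized one
mainScript();
finishRecognition("Good morning");
finishRecognition("Hello JD");
```

In ARC, the body of `bingRecognizedScript` would go in the Bing Speech Recognition skill's own script setting, and the body of `mainScript` in a separate script. No `sleep` guesswork is needed because the second script only runs when a result exists.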

The reason I asked how you intend to use the speech conversation is that I can’t help you without knowing the answer. I need to know whether you want linear "expected" results or open-ended dialog like AI, because they are different ways to program.

If you want predictable results with a linear conversation of choices, use the Conversational Menu robot skill.

If you find that too complicated and want an old BASIC-style linear if/then giant program of expected conditions, use the Audio.waitForSpeech() command.

Lastly, if you want an AI dialog flow, as if your robot has a personality, then use the OpenAI ChatGPT robot skill.

Also, read the manual for ControlCommand; I think it is important. ControlCommand does not wait. It triggers an action that happens asynchronously.

Remember, you’re working with a computer in a multitasking operating system. All the tasks are running at once. So you’re instructing a task to do something, and when it’s done doing it, it executes its own script.
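To see why a script that triggers a task and then reads the result straight away ends up one phrase behind, here is a small simulation in plain JavaScript (not ARC code). `triggerTask` and `runPendingTasks` are hypothetical names: the first stands in for `ControlCommand`, the second for the moment the skill actually finishes its work.

```javascript
// Plain-JavaScript simulation; triggerTask and runPendingTasks are hypothetical
var pending = [];          // work that has been requested but not done yet
var lastHeard = "Hi JD";   // value left over from the previous run
var log = [];

// Like ControlCommand: queue the work and return right away
function triggerTask(phrase, onDone) {
  pending.push(function () {
    lastHeard = phrase;    // the task stores its result...
    onDone(phrase);        // ...then runs its own script
  });
}

// Stand-in for the moment the task actually completes
function runPendingTasks() {
  while (pending.length > 0) pending.shift()();
}

triggerTask("Hello JD", function (result) {
  log.push("skill script sees: " + result);
});

// The calling script continues immediately and reads the OLD value
log.push("main script sees: " + lastHeard);

runPendingTasks();
console.log(log.join("\n"));
// main script sees: Hi JD
// skill script sees: Hello JD
```

The calling script reads the leftover value because the task has not finished yet, while the code attached to the task always sees the fresh result.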

That makes sense. Thanks DJ.

I was on my phone when I responded to the previous messages, so now I can give you more details. I know it's all documented in the Getting Started guide, but sometimes, talking about it with a human is easier. Here I am, a human.

Okay... so asynchronous programming is how computers work in multitasking operating systems. Back in the old days of BASIC, you'd run a program linearly. There was one thread, one program doing one thing at a time. But back in the late 1990s, that changed, primarily due to object-oriented programming models, and that's where variables can become more complex. A raw primitive variable holds a number, a string, or a boolean. That's how you might remember programming back in school. You assign a value to a variable...
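In JavaScript, for instance, such an assignment could look like this:

```javascript
// Assign a primitive value (a number) to a variable
var x = 3;
console.log(x); // 3
```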

That makes x equal the value of 3. More technically, the variable X points to a memory location that has the value of 3 in it. However, object-oriented programming, which has been around for a long time, has significantly impacted how asynchronous programming works today. This is because variables became complex. Variables with OO (object-oriented) point to memory regions with a structure that becomes objects. For example...
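As a rough JavaScript illustration, with field names that are made up purely for this example, an object could look like this:

```javascript
// FOO points to a structure instead of a single primitive value.
// The fields below are hypothetical and only for illustration.
var FOO = {
  name: "JD",              // a string inside the structure
  isListening: false,      // a boolean inside the structure
  introduce: function () { // even behavior can live inside the object
    return "Hi, my name is " + this.name;
  }
};

console.log(FOO.introduce()); // Hi, my name is JD
```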

Now, the variable FOO is still a pointer to a chunk of memory, but instead, that memory is complex and contains a structure. By doing this, objects became more complicated, and everything became an object. Even your program is an object. Everything is an object that inherits from objects that inherit from objects, like a family tree.

Because of this, compiler designers realized that if an entire program was in an object, that object could run in its own thread. So you could have a main thread launching little objects, like programs, in other threads. The object-oriented design made multi-threading super easy and accessible. That is why computers can do more than one thing at a time.

Now, just like how you can have Excel and Chrome running simultaneously, you can click and interact with those two programs while they run in the background. In the early days, programs would not run in the background, especially on the first GUIs like the Apple Macintosh. Only the foreground program ran; other programs would stop until you moved them into the foreground. This was also how early MS Windows worked until Windows 3.11, which ran in 386-enhanced mode. That is when multitasking for Windows became a thing.

Synthiam ARC is a graphical representation of programming. Think of each robot skill as a program. Robot skills can send instructions to each other as control commands ( ControlCommand() ). The ControlCommand instructs another robot skill to perform a task. In your script X you say "Hey, do this thing", and the robot skill goes off and does it. At this point, your script X doesn't care about whether or not that thing was done. This is because the robot skill does the thing and launches its own script after doing it.

In the Bing Speech Recognition case, your main script tells Bing to listen. But when it's done listening and converting the speech to text, Bing runs its own script with the result.

1. Linear Programming

If you wanted to program linearly, like the 80s MS-DOS BASIC, you'd put everything in a single script: one giant, huge, long script that does everything one step at a time and nothing else. That script would be a giant, long list of IF/ELSE statements. It's not common these days and not recommended, but it does have specific use cases.

Here's an example of a linear program for a conversation with pre-defined expected responses...
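A minimal sketch of such a linear script is below. It assumes that `Audio.waitForSpeech(timeoutSeconds, phrase1, phrase2, ...)` returns the recognized phrase, or `"timeout"` when nothing matched in time; that signature is an assumption and should be checked against the ARC JavaScript manual. The stand-ins at the top are hypothetical and only there so the sketch runs outside ARC; delete them inside ARC.

```javascript
// --- Hypothetical stand-ins so the sketch runs outside ARC (delete inside ARC) ---
var spoken = [];
var replies = ["hello jd", "fine"]; // simulated things the user says
var Audio = {
  sayWait: function (text) { spoken.push(text); },
  // ASSUMED signature: (timeoutSeconds, phrase1, phrase2, ...) -> phrase or "timeout"
  waitForSpeech: function (timeoutSeconds) {
    var allowed = Array.prototype.slice.call(arguments, 1);
    var heard = replies.shift();
    return allowed.indexOf(heard) >= 0 ? heard : "timeout";
  }
};
// --- End of stand-ins ---

Audio.sayWait("Hi, my name is JD");
var greeting = Audio.waitForSpeech(10, "hi jd", "hello jd");

if (greeting == "hi jd" || greeting == "hello jd") {
  Audio.sayWait("Hello Philip. How are you?");
  var mood = Audio.waitForSpeech(10, "fine", "not good");

  // Every expected answer needs its own branch
  if (mood == "fine") {
    Audio.sayWait("Glad to hear it");
  } else if (mood == "not good") {
    Audio.sayWait("Sorry to hear that");
  } else {
    Audio.sayWait("I did not catch that");
  }
} else {
  Audio.sayWait("Sorry, I don't understand");
}
```

Each additional question adds another nested level of branches, one per expected answer.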

As you can imagine, that program would grow big and be pretty difficult to manage, so no one does it.

2. Conversational Menu

This robot skill performs nested choices similar to what the previous example would be doing. But it does it much more elegantly without having to write a bunch of monotonous code that makes you want to pluck your eyeballs out of your face holes. The manual for it is here: https://synthiam.com/Support/Skills/Audio/Conversational-Menu?id=21091

You create a bunch of choices and responses, and the robot can do the things. It's good if you have a robot that needs to perform specific, predefined tasks.

3. AI Dialog

This is how robots are meant to be interacted with. You use the OpenAI ChatGPT robot skill. You provide a personality for the robot. You provide a bunch of example code it can run to perform tasks. For example, you can provide lists of auto positions it can run, etc. Just give it as much information as possible about what it can do in the personality description.

Now, you can talk to the robot by combining the Bing Speech Recognition and OpenAI ChatGPT robot skills. The robot can do everything but also has a personality while doing it. The manual for it is here: https://synthiam.com/Support/Skills/Artificial-Intelligence/OpenAI-ChatGPT?id=21559
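Wiring the two skills together could look roughly like the sketch below. It assumes the OpenAI ChatGPT skill accepts a `Send` ControlCommand and stores its reply in a variable named `$OpenAIResponse`; both names are assumptions to verify against the skill's manual. The stand-ins at the top are hypothetical and simulate ARC (including a fake AI reply) so the sketch runs on its own.

```javascript
// --- Hypothetical stand-ins that simulate ARC so the sketch runs on its own ---
var vars = {};
var spoken = [];
function getVar(name, fallback) { return name in vars ? vars[name] : fallback; }
function setVar(name, value) { vars[name] = value; }
var Audio = { say: function (text) { spoken.push(text); } };
function ControlCommand(skill, command, text) {
  if (skill === "OpenAI ChatGPT" && command === "Send") {
    // Fake AI reply; the real skill would contact the OpenAI service
    setVar("$OpenAIResponse", "You said: " + text);
    chatGptResponseScript(); // the skill runs its own script when done
  }
}
// --- End of stand-ins ---

// Goes in the Bing Speech Recognition skill: forward each phrase to ChatGPT
function bingRecognizedScript() {
  ControlCommand("OpenAI ChatGPT", "Send", getVar("$BingSpeech", ""));
}

// Goes in the OpenAI ChatGPT skill: speak whatever the AI answered
function chatGptResponseScript() {
  Audio.say(getVar("$OpenAIResponse", ""));
}

// Simulated run: Bing has just recognized a phrase
setVar("$BingSpeech", "How are you");
bingRecognizedScript();
```

Each skill only reacts to the result of the one before it, so there is no main script waiting on anything and no input that lags one phrase behind.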