Zxen (Australia) asked:

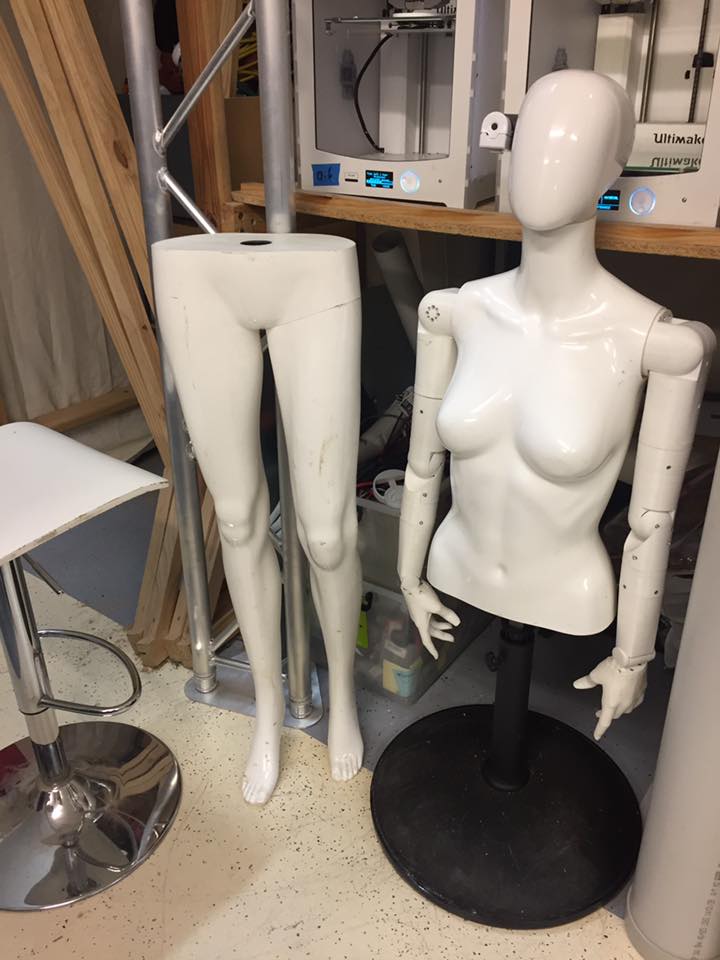

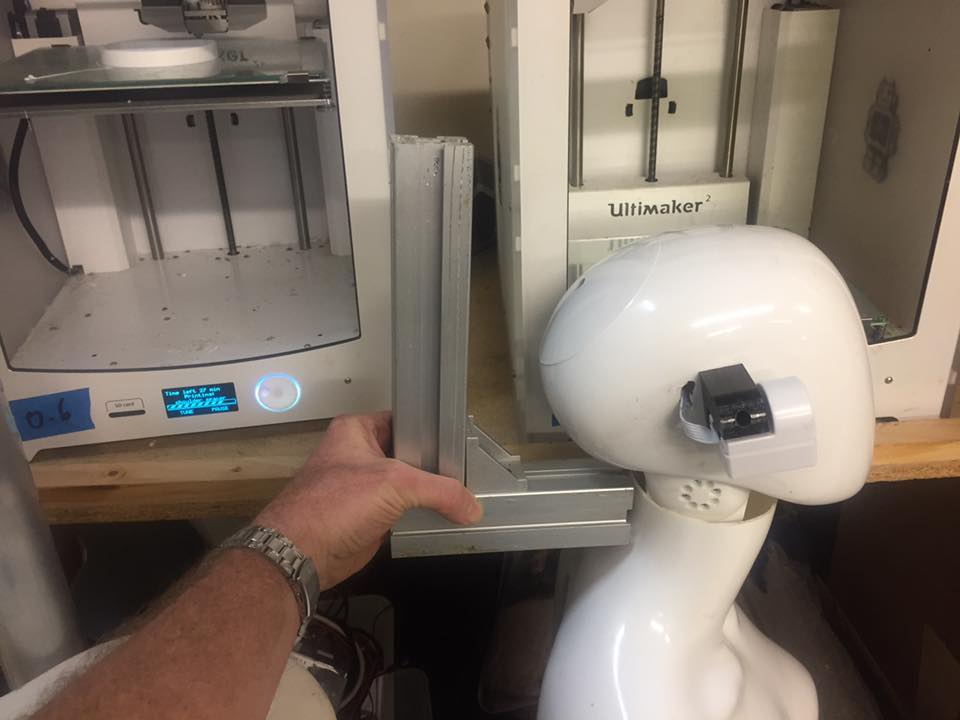

I need to extend the camera from its circuit board and mount it in something like a cable dot on the side of the head, facing forward. At the moment she looks like a Borg. Not good. This is for women's fashion displays in department stores. Can I separate the camera from the board with wires so that it's just a tiny dot?

How do I do that please?

PS: The camera will definitely not be placed in the face of this particular mannequin head. It will be under the chin or facing forward from the ear.

@Zxen With a little tweaking and some decent lighting, you can definitely get good results with ARC facial tracking. Check out this video I just posted:

I used the standard JD example project. I added a script that set the servo speed to 0 (the fastest speed) for the pan and tilt servos on D0 and D1. I also slowed the camera frame rate down slightly with the slider bar. The last thing I changed was the vertical increment steps, which I set to 2.

*Edit: I also adjusted the grid lines to be closer to the center.
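To make the effect of these settings concrete, here is a minimal Python sketch of grid-based incremental tracking. It is a simplified model written for illustration, not ARC's actual tracking code: the grid-line pixel positions, the `TrackerSettings` and `track_step` names, and the servo direction conventions are all hypothetical. The step sizes (3 horizontal, 2 vertical) are the values mentioned in this thread. On each processed frame, if the face's center falls outside the middle grid cell on an axis, that axis's servo moves by one increment step; moving the grid lines toward the center shrinks this dead zone, so tracking reacts sooner.

```python
from dataclasses import dataclass


@dataclass
class TrackerSettings:
    # Hypothetical values for illustration; not ARC's internal defaults.
    grid_left: int = 120      # x position of the left vertical grid line (pixels)
    grid_right: int = 200     # x position of the right vertical grid line
    grid_top: int = 90        # y position of the top horizontal grid line
    grid_bottom: int = 150    # y position of the bottom horizontal grid line
    horizontal_step: int = 3  # pan increment per processed frame
    vertical_step: int = 2    # tilt increment per processed frame
    servo_min: int = 1
    servo_max: int = 180


def clamp(value: int, lo: int, hi: int) -> int:
    return max(lo, min(hi, value))


def track_step(pan: int, tilt: int, face_x: int, face_y: int,
               s: TrackerSettings) -> tuple[int, int]:
    """Return the new (pan, tilt) positions after one processed frame.

    Assumed convention: a face left of the center cell lowers pan, and a face
    above it lowers tilt. Real servos may need the signs flipped.
    """
    if face_x < s.grid_left:
        pan -= s.horizontal_step
    elif face_x > s.grid_right:
        pan += s.horizontal_step
    if face_y < s.grid_top:
        tilt -= s.vertical_step
    elif face_y > s.grid_bottom:
        tilt += s.vertical_step
    # Inside the center cell on an axis means no movement on that axis.
    return clamp(pan, s.servo_min, s.servo_max), clamp(tilt, s.servo_min, s.servo_max)


if __name__ == "__main__":
    default = TrackerSettings()
    tight = TrackerSettings(grid_left=140, grid_right=180)
    print(track_step(90, 90, 130, 120, default))  # -> (90, 90): inside the dead zone
    print(track_step(90, 90, 130, 120, tight))    # -> (87, 90): tighter grid reacts
```

With the wider grid, a face at x = 130 sits inside the dead zone and the pan servo stays put. With the grid lines moved in to 140 and 180, the same face triggers a 3-step pan correction. A larger increment step covers more ground per frame but is more likely to overshoot.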

This is encouraging, @Jeremie. Thanks for the suggestions. Your tracking is so smooth!

So, just so I understand better:

* Set the servo speeds to zero so the servos move as fast as possible.
* Slow down the camera frame rate a little. (Why is this?)
* Change the vertical increment steps. (Why is this, and will this setting be different for others?)
* Tighten up the grid lines. I assume that, because tracking takes place in the two side boxes, this gives the software more room to work when bringing the object back to center?

Also, is the camera you're using in this demo stationary, or mounted on the part of the robot that pans back and forth?

Thanks again!

Thanks for the info on the camera. I'm not sure how I missed that; it might have been while I was working on Guardians?! I have a box of old cameras I'll have to try to sell, and then I'll pick up a few of the new ones!

Also, thanks for the tutorial on tracking. I never thought about setting the speed first. That is fast and pretty smooth tracking. I look forward to trying it out with hard-core Alan this week.

@Dave Schulpius

Correct, I wanted the servos to react as fast as possible. I slowed the camera frame rate down a little to reduce the chance of missed frames caused by communication slowdowns over WiFi. Yes, you are correct: in the tracking settings I changed the vertical increment steps so the servo moves a little farther with each movement. This will likely be different for each robot and servo, and the distance to the person being tracked will also affect this value. Yes, you are correct again, Dave. Thanks!
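The point that distance affects the step size can be checked with a little geometry. The sketch below is a back-of-the-envelope model, not something ARC computes: it assumes a person walking across the camera's view (perpendicular to the line of sight, near the center of the frame) at a steady speed, and estimates how many degrees they sweep between two processed frames. The function name `degrees_per_frame` and the example walking speed of 0.5 m/s are illustrative assumptions.

```python
import math


def degrees_per_frame(speed_m_s: float, distance_m: float, fps: float) -> float:
    """Approximate angle (degrees) a subject sweeps between consecutive frames.

    Assumes motion perpendicular to the line of sight near the center of view,
    where angular speed is close to speed / distance (radians per second).
    """
    angular_speed_rad_s = speed_m_s / distance_m
    return math.degrees(angular_speed_rad_s / fps)


if __name__ == "__main__":
    # Hypothetical walking speed of 0.5 m/s at two distances and two frame rates.
    for distance in (1.0, 3.0):
        for fps in (10.0, 3.0):
            angle = degrees_per_frame(0.5, distance, fps)
            print(f"{distance} m at {fps} fps: {angle:.2f} degrees per frame")
```

At 1 m and 10 fps the subject moves about 2.9 degrees per frame, but only about 1 degree at 3 m, so a step size that keeps up at close range can overshoot when the person is farther away. A lower frame rate means a bigger jump per frame, which is consistent with pairing a slightly slower frame rate with a larger vertical step. How a servo position unit maps to degrees varies by servo, so treat these numbers as a rough scale only.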

Smooth tracking, Jeremie! I want to achieve that. How many increment steps does JD move at a time? Did you say 1 horizontal and 2 vertical? Do you have powerful WiFi in your house or something? What if I use the phone app instead of a computer? Does it need WiFi to work, or are the calculations done internally? The video of your face looks like real time: if I decrease the frame rate I get lag, while you do not. Is your WiFi router's quality responsible?

I think I'm right: WiFi connection speed must be the answer. You're getting 10 to 14 fps even after slowing it down, and I'm only getting 2 to 3 fps at most. It's either the WiFi or the video card in my computer. Does anyone know the reason for frame rate discrepancies? (I have the new v2 communication board.) How close is your router to your EZ-B? What kind of graphics card do you have?

@Zxen

I was using 3 horizontal increment steps. I was connected in AP mode (no router involved), and I believe I was averaging 10 fps. Have you tried AP mode instead of client mode (connected through a router)?
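The difference between 10 to 14 fps and 2 to 3 fps goes a long way toward explaining the lag described above. A minimal Python sketch converts a frame rate into the delay between frames and computes the average frame rate from logged frame arrival times. It assumes you can record a timestamp for each frame (how you obtain those timestamps is left open), and the function names are hypothetical.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def measured_fps(timestamps_s: list[float]) -> float:
    """Average frame rate from frame arrival times (seconds, ascending order)."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two timestamps")
    elapsed = timestamps_s[-1] - timestamps_s[0]
    # N timestamps span N - 1 frame intervals.
    return (len(timestamps_s) - 1) / elapsed


if __name__ == "__main__":
    for fps in (14.0, 10.0, 3.0, 2.0):
        print(f"{fps:>4} fps -> {frame_interval_ms(fps):.0f} ms between frames")
    print(measured_fps([0.0, 0.4, 0.8, 1.2]))  # frames arriving every 0.4 s
```

At 2 fps the tracker sees a new frame only every 500 ms, compared with 100 ms at 10 fps, so a face can move a long way between corrections. If tracking corrections are applied once per processed frame (an assumption about how the tracker works), a low frame rate shows up directly as lag.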