Zxen

Australia

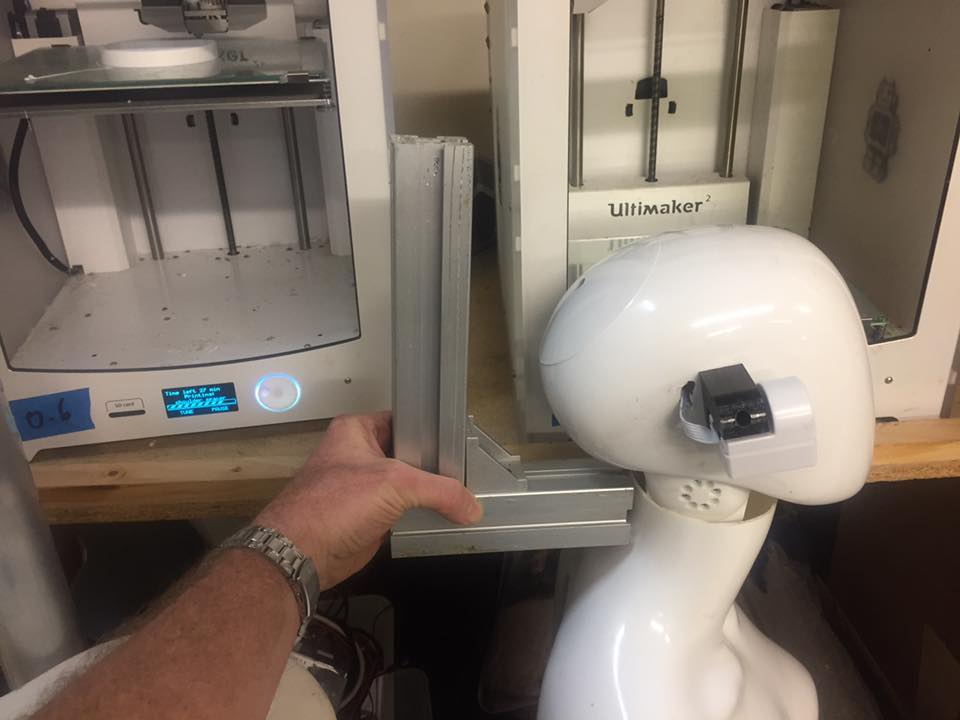

I need to extend the camera from its circuit board and mount it in something like a cable dot on the side of the head, facing forward. At the moment she looks Borg. Not good. This is for department stores for women's fashion. Can I separate the camera with wires so it's just a tiny dot?

How do I do that please?

PS: The camera will definitely not be placed in the face of this particular mannequin head. It will be under the chin or facing forward from the ear.

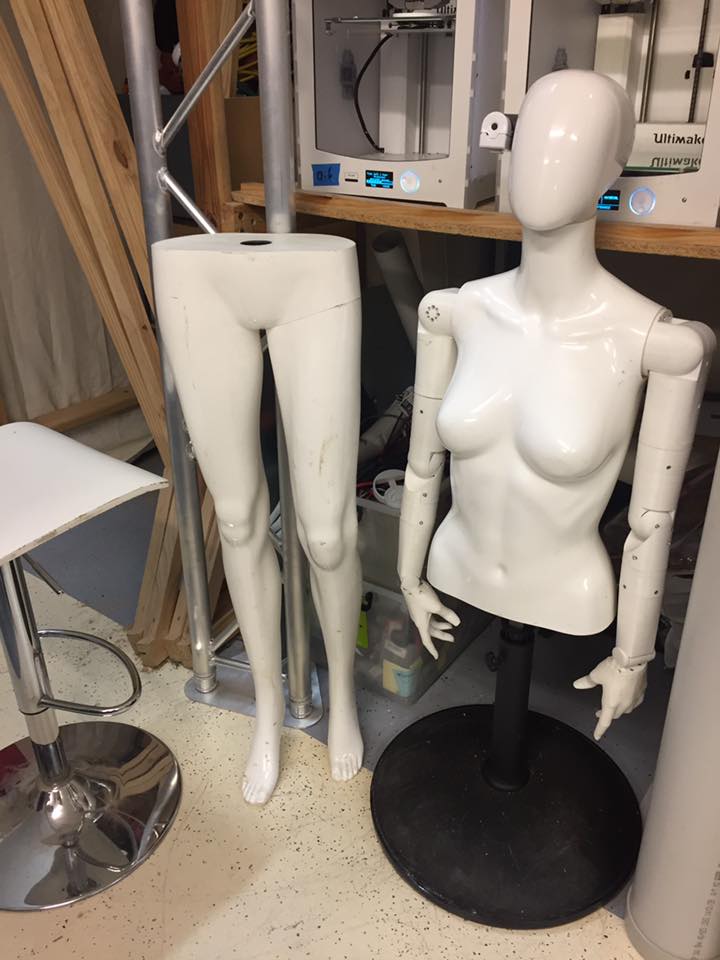

If a robot is always going to wear the same clothes, then I could do that (hide a camera in a necklace or bow tie). I even considered separating the head from the body so the gap could accommodate the camera. But when she, like most humans, lowers her chin, it conceals the neck. Besides that, high neckline clothing is not uncommon. I also think that a good neck is important for her beauty, but I haven't solved that yet. I'm looking into making foam torsos at the moment so she can move like a human - her shoulders could be made more human, for example. Also consider that the neck is going to be bearing the weight of the entire dancing robot, and needs to click out easily for clothing changes (except in the case of torso only displays perhaps). The arms need to click out too, but that's a different topic.

Ah, I have a better understanding. After you do some camera trials for the tracking I think you will see the direction many of us have been leading you. Maybe try the remote stationary camera. Also realize a web / usb camera can also be used in the ARC software. This would be connected directly to a computer which allows you to mount the camera anywhere. Also multiple cameras. A lot of options available.

'Also realize a web / usb camera can also be used in the ARC software.' 'Also multiple cameras.'

Really?

That would be great. I can't think of how to apply it but I would love a link to a lesson or a project using interesting camera setups. I'd love the head being able to look people up and down and the robot says something like, 'Oh wow! Like, who are you wearing?' I might even put a microphone in her hand so she's like a red carpet journalist. Imagine if she could call people over based on colour recognition. 'Excuse me, you in the green. Come over here, I want to talk to you!' That would be considered AI. I also want her to mimic body movements using a Kinect sensor, which I consider an advanced mod - afterwards. I also want to give her a Pandorabots.com library of responses - I'll use an actress to voiceover her speech rather than rely on a computer voice.

I could place a stationary camera in a wall setup. Also, I could make part of her neck beam pan (rotate), and place a camera in that, so the software can figure out the tilt alignment necessary to see someone's face height if that works well. Please note that her entire body may rotate to show the clothing in 360 degrees. Also, it would be very cool to have one camera so that multiple robots can face something together - the birthday girl for example.
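To make the tilt idea concrete, here is a minimal sketch of how software could work out the tilt needed to bring a detected face to the vertical centre of the frame. It uses a plain pinhole-camera approximation and is not taken from ARC. The function names, the 50 degree vertical field of view, the 0 to 180 degree servo range, and the convention that a larger servo angle tilts the camera up are all assumptions for illustration.

```python
import math


def tilt_offset_degrees(face_y, frame_height, vertical_fov_deg=50.0):
    """Angle (degrees) to tilt so the face sits at the vertical centre.

    face_y is the pixel row of the face centre, counted from the top of
    the frame. A positive result means "tilt up". The field of view is an
    assumed value; measure it for the real camera.
    """
    # Focal length expressed in pixels (pinhole camera model).
    focal_px = (frame_height / 2) / math.tan(math.radians(vertical_fov_deg / 2))
    # How many pixels the face sits above the frame centre.
    offset_px = (frame_height / 2) - face_y
    return math.degrees(math.atan2(offset_px, focal_px))


def next_tilt(current_deg, face_y, frame_height, lo=0.0, hi=180.0):
    """New servo angle, clamped to an assumed 0..180 degree servo range."""
    target = current_deg + tilt_offset_degrees(face_y, frame_height)
    return max(lo, min(hi, target))
```

A face at the very top of a 240-pixel-high frame gives an offset of half the field of view (25 degrees here), and a face already in the middle gives zero, so the head stops moving once it is looking straight at the person.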

Go back to the basic camera information in ARC, and click "?" as DJ would say. There is a LOT of information within ARC including many tutorials. "The Yellow Duck" is an example of color recognition with a response. Scripting can allow one camera to do many functions.
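As a rough illustration of "color recognition with a response", here is a small standalone sketch. It is not the ARC camera control or the Yellow Duck project itself. It just names an average RGB colour and builds a spoken line from it. The hue boundaries, the brightness and saturation cut-offs, and the function names are assumptions you would tune for real lighting.

```python
import colorsys

# Assumed upper hue bounds (degrees) for a few clothing colours.
HUE_NAMES = [
    (15, "red"), (45, "orange"), (70, "yellow"),
    (170, "green"), (260, "blue"), (330, "purple"), (360, "red"),
]


def colour_name(r, g, b):
    """Name the colour of an average RGB sample (each channel 0-255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if v < 0.2:          # too dark to judge the hue
        return "black"
    if s < 0.2:          # washed out: treat as white or grey
        return "white" if v > 0.8 else "grey"
    hue_deg = h * 360
    for upper, name in HUE_NAMES:
        if hue_deg < upper:
            return name
    return "red"


def greeting(r, g, b):
    """Build the line the robot would say for the detected colour."""
    return (f"Excuse me, you in the {colour_name(r, g, b)}. "
            "Come over here, I want to talk to you!")
```

For example, `greeting(0, 200, 0)` produces the "you in the green" line from earlier in the thread. Since the plan is to use a recorded actress rather than a computer voice, the colour name could instead select a pre-recorded audio clip.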

People here in the Community can give suggestions and advice, but only you know what your needs are. Learn the basics and you will begin to gain a better understanding of what the EZB controllers can do with a camera.

By the way, the Palette robot seems to have a dot in the upper neck which could be a camera.

There are many sensors available which will do what you want. There is a sensor / camera (by Omron) which detects gender, age, gaze, emotion and facial recognition. It could return information which will allow your robot to ignore men and young children, but target only women. You just need to learn the ARC capabilities and combine it with these sensors. (Information is already available in a plugin.)

There is a lot of cool stuff available, and many things you can do with an EZ-Robot controller. Have a look at the examples in the tutorials and on the community forum.

Yes, I did notice the Palette neck camera. I may rethink that if I decide to tell stores to never cover the neck with clothes and can trust that the stationary system will work at close range (although it seems impossible) and that the robot face will look directly at the person's face no matter the proximity (which seems miraculous). I've completed the camera tutorials once and played with the controls, but that was before I spent months building the chassis, so I forgot / did not know how to apply it at the time.

Thanks for the Omron camera reference (https://www.ia.omron.com/products/category/sensors/vision-sensors_machine-vision-systems/smart-camera/index.html). I would have thought that was not compatible with the EZ-B v4 and that such capabilities were driven by software. Having said that, I did speak about using a Kinect sensor, which has been unnecessarily difficult in my brief experiments (I guess I spent around 100 hours or so on it).

I found my other EZ-Robot camera, and its internals are different. I hope the new camera is the same design as the one I put in the side of her face, rather than this double-decker one:

If you consider using a single-board computer within the mannequin, you will gain a lot of options. I am installing a Latte Panda in my two robots (ANTONN and RAFIKI). This will allow me to use a lot of hardware directly connected to the computer.

Try playing with 2 stationary cameras. See if you can track center to left with one and center to right with the other. This way you can scan left to right until someone comes by. Just a thought. Edit: try a webcam.
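One way to picture the two-camera split: a sketch that turns a detection from either camera into a single pan angle for the head. This is only an illustration under assumptions, not an ARC feature. It assumes each camera covers 45 degrees, that the two views meet exactly at the center line, and that a 320-pixel-wide frame maps linearly to angle. The function name and parameters are made up for the example.

```python
def pan_from_two_cameras(camera, x, frame_width=320, half_span_deg=45.0):
    """Head pan in degrees: negative = left, positive = right, 0 = center.

    camera is "left" or "right"; x is the pixel column of the detected
    person in that camera's frame. The left camera's right edge and the
    right camera's left edge are both assumed to sit on the center line.
    """
    if camera not in ("left", "right"):
        raise ValueError("camera must be 'left' or 'right'")
    # 0.0 at the frame's left edge, 1.0 at its right edge.
    frac = x / (frame_width - 1)
    if camera == "left":
        # Left camera: right edge is center (0), left edge is far left.
        return -half_span_deg * (1.0 - frac)
    # Right camera: left edge is center (0), right edge is far right.
    return half_span_deg * frac
```

With this mapping, a person walking from the far left to the far right moves the pan smoothly from -45 to +45 degrees as they pass from one camera's view into the other's.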

Ok. I will let you go organize your options and play with the EZB and cameras.