Zxen

Australia

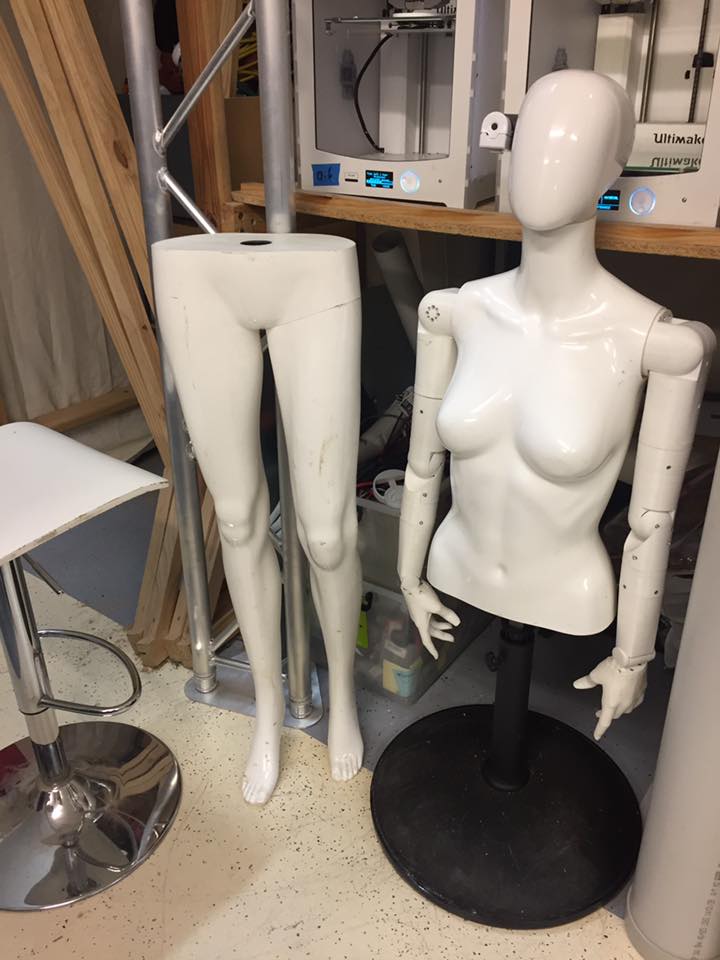

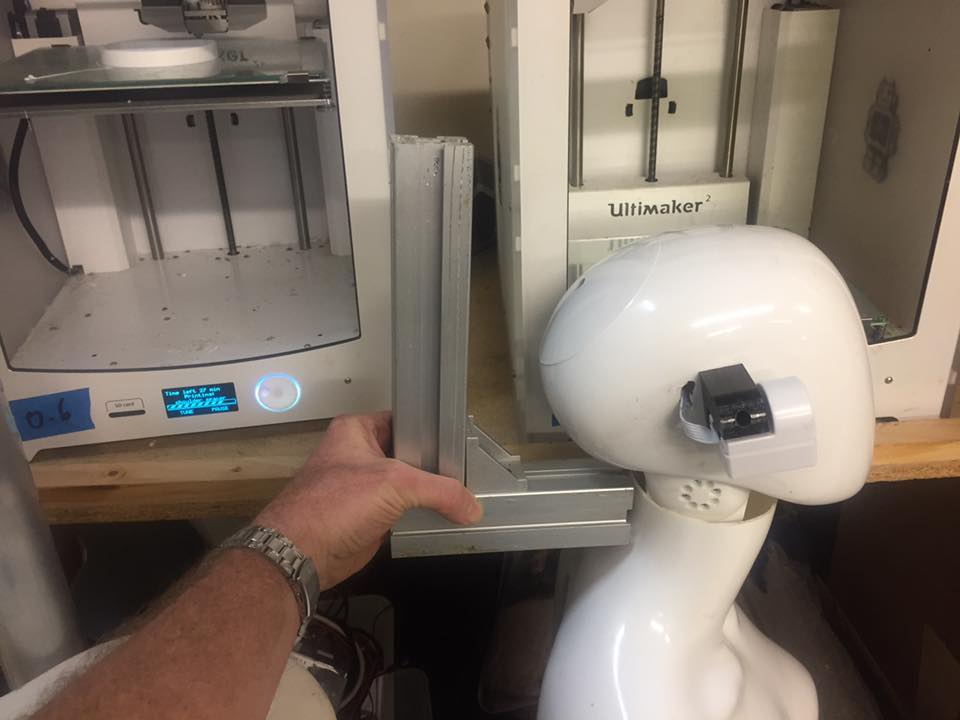

I need to extend the camera from its circuit board and mount it in something like a cable dot on the side of the head, facing forward. At the moment she looks Borg. Not good. This is for department stores for women's fashion. Can I separate the camera with wires so it's just a tiny dot?

How do I do that please?

PS: The camera will definitely not be placed in the face of this particular mannequin head. It will be under the chin or facing forward from the ear.

It makes more sense to use two cameras if there is no fisheye lens to see everything that a head could see in an arc, but this still leaves a LOT of logical problems. How would you plug both of them in? When the head hits centre, how does the first camera know when it's time to stop and the second to continue? How does a stationary camera know where the head is facing to begin with? I cannot just experiment wildly with every conceivable method. It would take forever. The theory needs to be tight before many hours of work. And as you must know, every brick wall is soul destroying, and often results in complete project abandonment (CPA). I do thank you for your suggestions, but I'm not going to experiment with every method, and that goes for all my inventions. If someone in a forum has a solution, it is worth asking in the first place. If people don't know, then it's usually better not to ask, because that can lead to getting confused and CPA. In fact CPA is difficult to avoid.

It sounds like you need to spend a little time experimenting with the camera tracking as opposed to trying to figure out a complete solution and fleshing it all out. Don't overthink it. As you experiment you will find solutions to your questions. Make sure the EZR tracking routines are even adequate for your application. You have the hardware, so set it up, do some tracking, then figure out how to integrate it.

Incremental progress like that will prevent CPA as you put it.

@Zxen a couple of suggestions, if I may.

If you are having difficulty mounting inside of a curved head, I could suggest using the new version 2 EZ-Robot camera and peeling the lens away from the PCB to change the angle a little. It is just held against the PCB with double-sided tape.

My second suggestion would be to make only a 1mm hole somewhere in the mannequin's head. A 1mm hole is all you need for the actual lens portion to see.

Now you may have to drill out a small cavity for the rest of the lens, but the opening only needs to be 1mm. Here's an example, created with my amazing MS paint skills LOL.

I should also note that the camera v2 lens is interchangeable with fisheye lenses, which are somewhat difficult to find but do exist. But note that you will need a larger viewing hole to incorporate a larger lens; it would have to be 10mm in diameter.

Oh and yes I can confirm that you can definitely extend the camera cable, we've gone as far as 10 feet successfully but we've never really tested out the maximum range.

Very good explanation Jeremie. I may eventually put a 1mm hole in the face.

I have spent hours testing the facial and colour tracking and it's just like I remember. EXTREMELY SLOW AND UNRELIABLE REACTION TIME.

The motors in my robot's head are fast - she can nod and shake her head faster than a real human. But when she is tracking, she moves like a pigeon. I get best results when I shrink the grid and I am moving my face further away, but I basically need to move past at less than 1km/h so she doesn't lose me.

Can someone please tell me how to fix this so it's smooth?

Is it slow because it requires WiFi? Video is being sent wirelessly to my computer fast enough, so I don't understand what's causing a delay so extreme that she moves in slow motion. Are the calculations being done in the ARC software or on the board? She only moves smoothly if I tell her to move 5 or more steps at a time, but this often results in overshooting the mark, after which her head begins to thrash violently to get back to the mark.

@jeremie, is this camera (v2) released yet? If so, what are the changes?

@Zxen I feel your pain. I've tried to get smooth motion from tracking, and the best thing to play with is the increment steps found in the camera setup, where your servos are configured.
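To make the step-size trade-off concrete, here is a rough Python sketch. It is a toy simulation only, not ARC's tracking code and not anything from the EZ-Robot firmware. The function names, the gain, the deadband, and the pixels-per-degree figure are all made-up assumptions for illustration. It compares a fixed step of 5 (which can bounce back and forth across the target) with a step that shrinks as the face gets closer to the centre of the frame and stops inside a small dead zone.

```python
# Toy comparison of two ways to pick a servo step from a tracking error.
# All names and numbers here are hypothetical; this is not ARC's algorithm.

def fixed_step(servo_pos, error_px, step=5):
    """Always move by the same number of steps toward the target."""
    if error_px == 0:
        return servo_pos
    return servo_pos + (step if error_px > 0 else -step)


def proportional_step(servo_pos, error_px, gain=0.05, deadband_px=10,
                      max_step=8, servo_min=1, servo_max=180):
    """Move by a step proportional to the error, with a dead zone."""
    if abs(error_px) <= deadband_px:
        return servo_pos  # close enough: hold still instead of hunting
    step = int(round(gain * error_px))
    if step == 0:
        step = 1 if error_px > 0 else -1  # always make some progress
    step = max(-max_step, min(max_step, step))  # cap the speed
    return max(servo_min, min(servo_max, servo_pos + step))


def simulate(controller, target_deg, start_deg=90, px_per_deg=4.0, frames=40):
    """Pretend the face sits at target_deg and the camera turns with the head."""
    pos = start_deg
    history = [pos]
    for _ in range(frames):
        # Offset of the face from the image centre, in pixels (assumed linear).
        error_px = (target_deg - pos) * px_per_deg
        pos = controller(pos, error_px)
        history.append(pos)
    return history


if __name__ == "__main__":
    print("fixed:       ", simulate(fixed_step, 118)[-6:])
    print("proportional:", simulate(proportional_step, 118)[-6:])
```

In this toy model the fixed step keeps swinging past the target, while the proportional version slows down and settles just short of it. Real tracking also has camera and WiFi latency, which this sketch ignores, so treat it as a way to think about the increment setting rather than a fix.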

The newer camera is a single board instead of a double-decker board and is smaller. I'm not sure about other changes. Do you know where the processing is done? Would it be more responsive to connect from the ezb to the computer with ethernet or something? Do I need a spotlight on my face so it can see the face more easily? I found that colour detection works better when you increase the saturation of the video output. If it's being done inside the board, is there a way to make it process faster? Does anyone know the smoothest facial tracking demo video on this site?

This appears to work smoothly, so it's possible.

@fxrtst Yeah for sure! Version 2 of the camera has been in the wild for some time now. I believe we had discussed it in the past when you were looking for dimensions and we released not long after that.

Some of the changes are:

Version 2 of the camera is meant to match the operation of version 1 but with some quality of life improvements from a hardware and firmware perspective.