This is a test of the Intel RealSense T265 tracking camera for localization with the EZ-Robot AdventureBot. I also use 3 ultrasonic sensors to demonstrate the navigation messaging system, but that's not the main point of the video. Ultrasonic sensors are terrible for mapping; a 360-degree lidar will do a much better job. I'll get to that in the future... but for now, we're playing with localization and way-points.

So, the fact that this robot can get back to exactly where it started, and that the RealSense maps that position... wow, I have to say wow! My USB 3.0 active extension cable is only 16 feet long, so that's as far as I can go without mounting the RealSense on the robot. For the time being, this is how we test. It's also a good test because the wheels slip like crazy, which demonstrates why wheel encoders are bad news bears.

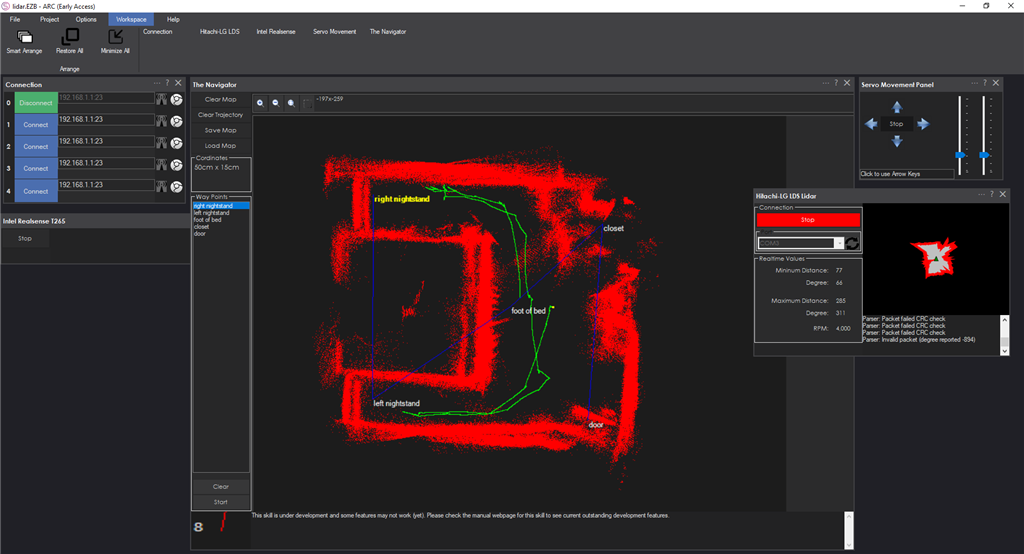

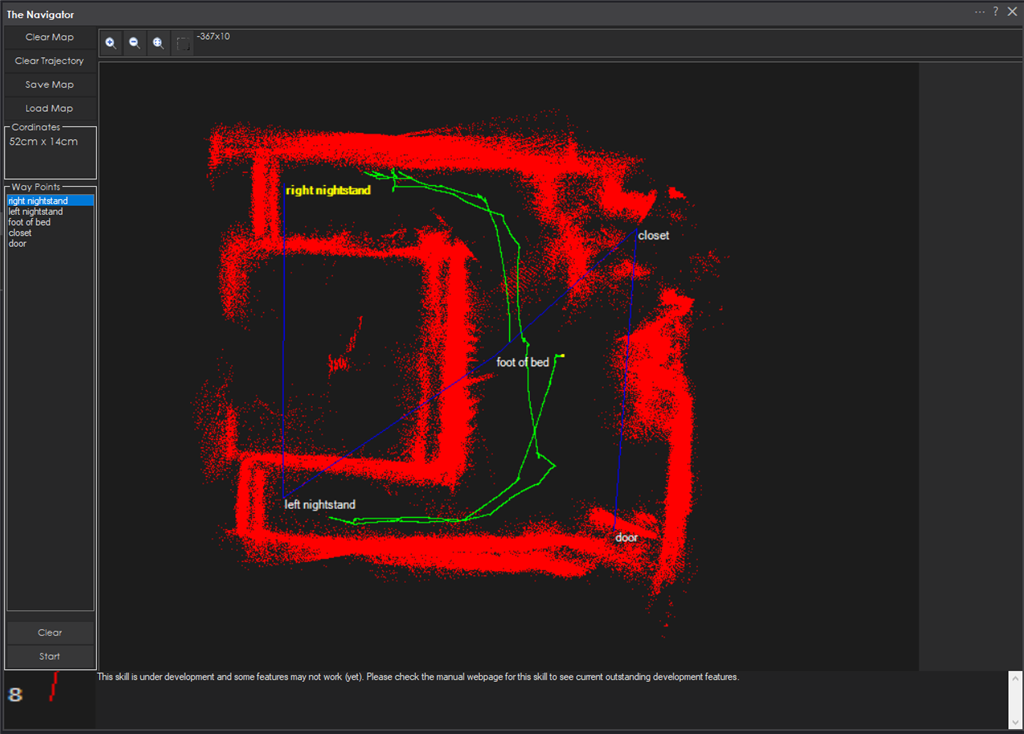

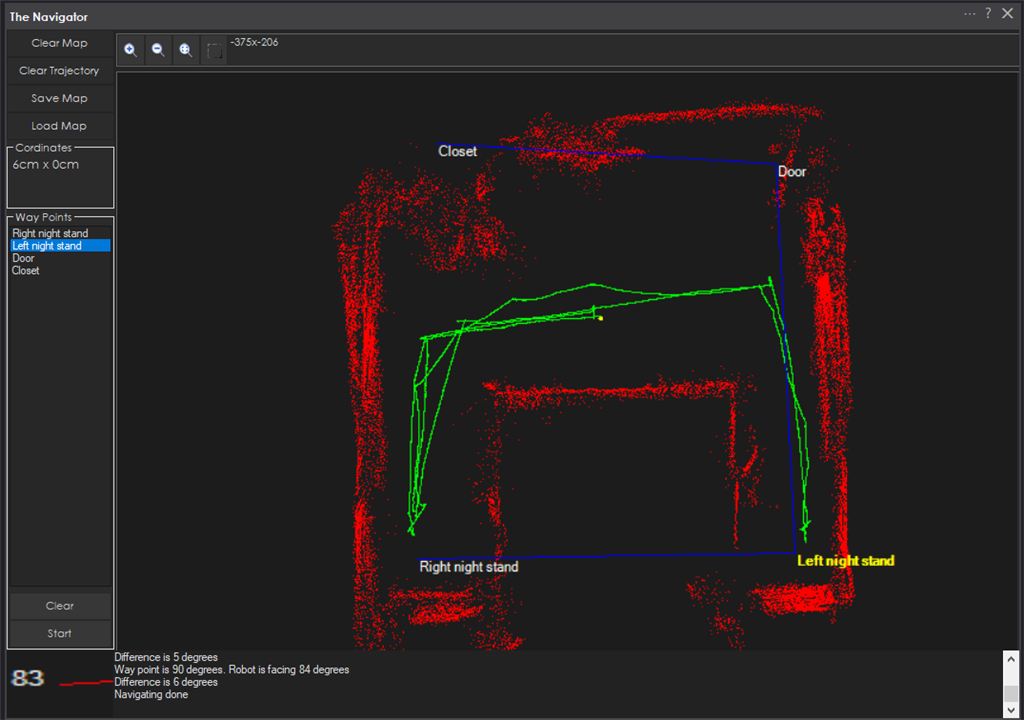

Preparation for Part #4: Removing false positives and filtering the data from the lidar, then adding a SLAM algorithm that treats detected objects as static for only a limited period of time. This lets things that move, like humans, get out of the way without becoming part of the map. I wanted to make a robot that can navigate around my bedroom to each nightstand, the closet, and the doorway. So I used a combination of the 360 lidar and 3 ultrasonic distance sensors to scan my bedroom. I threw a bunch of clothes on the floor to simulate obstacles, which you can see around the closet.
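The idea of objects only staying "static for a certain period of time" can be pictured as a map that forgets cells nobody has re-observed recently. Here is a minimal sketch, assuming a simple 2D grid and a fixed time-to-live; `DecayingObstacleMap`, `cell_size_m` and `ttl_s` are hypothetical names for illustration, not ARC's actual SLAM implementation.

```python
import time
from dataclasses import dataclass, field


@dataclass
class DecayingObstacleMap:
    """Hypothetical obstacle grid: a cell is forgotten if not re-observed within ttl_s seconds."""
    cell_size_m: float = 0.1   # assumed grid resolution in metres
    ttl_s: float = 30.0        # assumed time an obstacle stays on the map without being seen again
    _last_seen: dict = field(default_factory=dict, init=False, repr=False)

    def _cell(self, x_m: float, y_m: float) -> tuple:
        # Convert a world coordinate (metres) into an integer grid cell.
        return (int(x_m // self.cell_size_m), int(y_m // self.cell_size_m))

    def observe(self, x_m: float, y_m: float, now: float = None) -> None:
        # Record (or refresh) the time a sensor reading hit this cell.
        now = time.monotonic() if now is None else now
        self._last_seen[self._cell(x_m, y_m)] = now

    def occupied(self, now: float = None) -> set:
        # Drop stale cells (e.g. a person who walked away), then return what is left.
        now = time.monotonic() if now is None else now
        self._last_seen = {c: t for c, t in self._last_seen.items() if now - t <= self.ttl_s}
        return set(self._last_seen)
```

A wall that the lidar sees on every sweep keeps getting refreshed and stays on the map, while a person who stood in a doorway once ages out after `ttl_s` seconds.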

On closer inspection, you can see the way-points and the navigation paths I took to create them. I'm implementing a path-finding algorithm right now for the next update. It splits the map into quadrants based on your defined robot size, then finds a path using your robot's width.
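One common way to get "quadrants based on robot size" is to grid the map into cells as wide as the robot and search only through free cells, so any path found is at least one robot-width wide. The sketch below uses a plain breadth-first search for that; the BFS choice, the function name `plan_path`, and its parameters are assumptions for illustration, not the algorithm going into the next update.

```python
from collections import deque


def plan_path(obstacles_m, robot_width_m, start_m, goal_m, width_m, height_m):
    """Hypothetical grid planner: cells are robot_width_m square, 4-connected BFS over free cells.

    Returns a list of (col, row) cells from start to goal, or None if no path exists.
    """
    cols = int(width_m // robot_width_m)
    rows = int(height_m // robot_width_m)

    def to_cell(p):
        # Map a world point (metres) onto the robot-sized grid.
        return (int(p[0] // robot_width_m), int(p[1] // robot_width_m))

    # Any cell containing at least one obstacle point is too narrow for the robot.
    blocked = {to_cell(p) for p in obstacles_m}
    start, goal = to_cell(start_m), to_cell(goal_m)
    if start in blocked or goal in blocked:
        return None

    came_from = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            # Walk the parent links back to the start and reverse.
            path = []
            while cur is not None:
                path.append(cur)
                cur = came_from[cur]
            return path[::-1]
        x, y = cur
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            in_bounds = 0 <= nxt[0] < cols and 0 <= nxt[1] < rows
            if in_bounds and nxt not in blocked and nxt not in came_from:
                came_from[nxt] = cur
                queue.append(nxt)
    return None
```

BFS returns the path with the fewest cells; a real planner would more likely use A* with a distance heuristic, but the robot-width grid is what keeps the robot from squeezing through gaps it can't fit.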

Here it is with filtering applied to remove false positives. This gives the path-finding system fewer false positives to worry about.
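A simple way to filter false positives is to only trust a cell once several readings agree on it, so a single stray echo or lidar glitch never becomes an obstacle. A minimal sketch, assuming a hit-count threshold per grid cell; `filter_false_positives` and `min_hits` are hypothetical, and the filter used in the video may work differently.

```python
from collections import Counter


def filter_false_positives(points_m, cell_size_m, min_hits=3):
    """Hypothetical filter: keep a grid cell only if at least min_hits readings landed in it."""
    # Count how many sensor readings fall into each grid cell.
    counts = Counter((int(x // cell_size_m), int(y // cell_size_m)) for x, y in points_m)
    # Cells hit fewer than min_hits times are treated as noise and dropped.
    return {cell for cell, n in counts.items() if n >= min_hits}
```

Raising `min_hits` removes more noise but also makes thin or briefly seen obstacles slower to appear on the map.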

Part #3: We give way-points names.

Part #2: In this video, I demonstrate multiple way-points and controlling them through control commands.

Part #1: Just testing this Intel RealSense T265 and seeing how it works with the ARC NMS (Navigation Messaging System).

Weren't you guys developing a lidar SLAM module? I remember reading that you were having trouble with a supplier or something like that in 2019. Are you going to develop the software around an existing piece of hardware? If so, which one?

This is getting exciting.

We were working with Hitachi/LG on a lidar, but the cost and volume were terrible. The biggest issue we found was that we couldn't get any SLAM software to work correctly. They would all go out of sync after some time, and the maps would warp and twist.

We gave up because there was no real way for us to get positioning.

Until now! The RealSense seems to solve that.

As for a 360 lidar, it really doesn't matter what we choose. The new Navigation Messaging System in ARC makes any sensor work. Absolutely anything will work.

Awesome! So excited!

Wow, looks like I may be getting that RealSense cam. Very exciting news for navigation!

Spot on, DJ! Any chance of getting the odometry from the Roomba into the navigator?

If anyone finds a good low-cost lidar that works, I would love to hear about it. There are a few at RobotShop for under $100 US, but I don't want to waste money on something that doesn't work very well.

Very good, part 1.

Thanks,

EzAng

Proteusy, the iRobot Roomba control panel is scheduled to push its (sloppy) wheel encoder data into the NMS.

Take a look here: https://synthiam.com/Support/ARC-Overview/robot-navigation-messaging-system