This is a test of the Intel RealSense T265 tracking camera for localization with the EZ-Robot AdventureBot. I also use 3 ultrasonic sensors to demonstrate the navigation messaging system, but that's not the main point of the video. Ultrasonic sensors are terrible for mapping. A 360 degree lidar will do a much better job. I'll get to that in the future... but for now, we're playing with localization and way-points.

So, the fact that this robot can get back to exactly where it started and the RealSense maps that position... wow, I have to say wow! My USB 3.0 active extension cable is only 16 feet long, so that's as far as I can go without putting the RealSense in the robot. For the time being, this is how we test. It's also a good test because the wheels slip like crazy, so it demonstrates why wheel encoders are bad news bears.

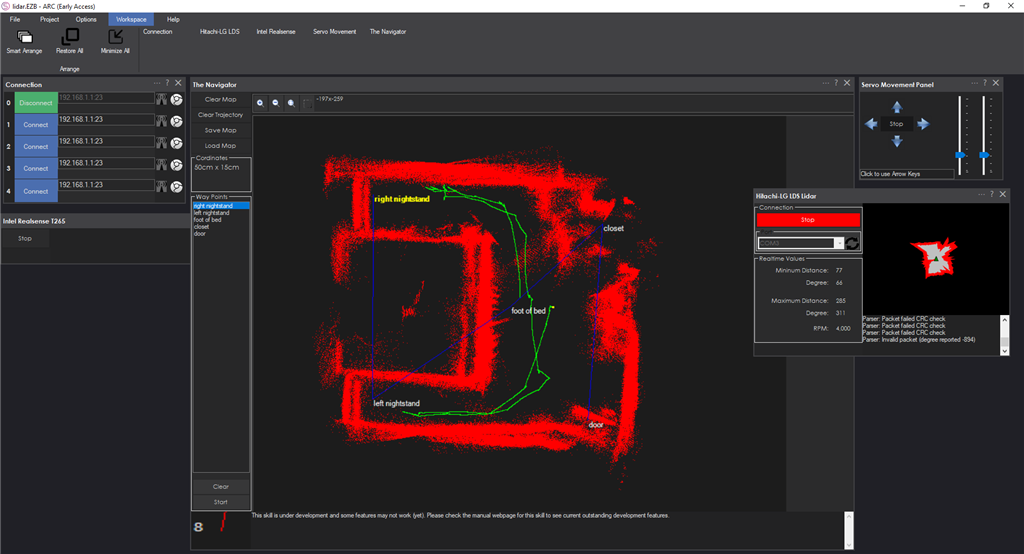

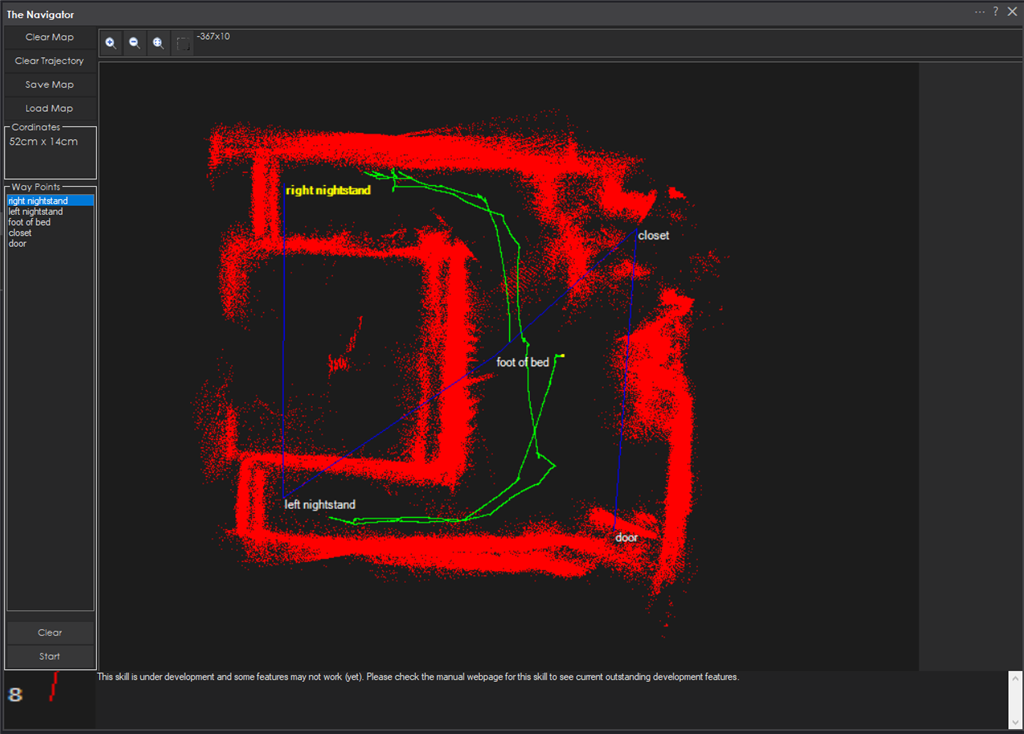

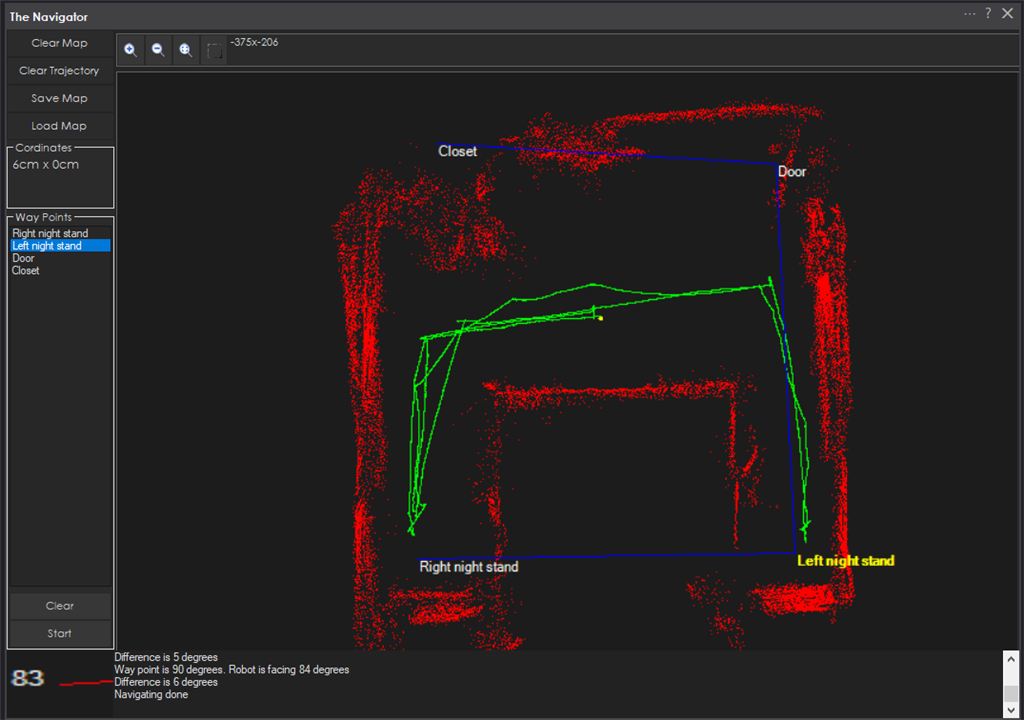

Preparation for Part #4: Removing false positives and filtering the data from the lidar, then adding a SLAM algorithm that lets detected objects stay static for only a certain period of time. This allows things in the way that move, like humans, to not become part of the map. I wanted to make a robot that can navigate around my bedroom to each nightstand, closet and doorway. So I used a combination of the 360 degree lidar and 3 ultrasonic distance sensors to scan my bedroom. I threw a bunch of clothes on the floor to simulate obstacles, which you can see around the closet.
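To make the "static for only a certain period of time" idea concrete, here is a minimal sketch in Python. It is not the SLAM algorithm planned for Part #4 and not ARC code. It only shows one simple way a detection could expire unless the sensor keeps seeing it. The class name, the `ttl_seconds` value and the cell size are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class DecayingObstacleMap:
    """Hypothetical obstacle map where each detection expires after a time-to-live."""

    ttl_seconds: float = 5.0      # assumed lifetime of a detection
    cell_size_cm: float = 10.0    # assumed grid resolution
    _last_seen: dict = field(default_factory=dict)

    def _cell(self, x_cm, y_cm):
        # Snap a position in centimetres to a grid cell index.
        return (int(x_cm // self.cell_size_cm), int(y_cm // self.cell_size_cm))

    def observe(self, x_cm, y_cm, now):
        # Record (or refresh) the time this cell was last seen as occupied.
        self._last_seen[self._cell(x_cm, y_cm)] = now

    def occupied_cells(self, now):
        # Drop every cell that has not been re-observed within the time-to-live.
        self._last_seen = {
            cell: seen
            for cell, seen in self._last_seen.items()
            if now - seen <= self.ttl_seconds
        }
        return set(self._last_seen)
```

A wall gets re-observed on every scan, so it stays on the map. A person who walks through is seen for a moment and then drops off once the time-to-live passes.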

On closer inspection, you can see the way-points and the navigation paths I took to create them. I'm implementing a path finding algorithm right now for the next update, which splits the map into quadrants based on your defined robot size. It then finds a path by using your robot's width.
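As a rough illustration of splitting the map into robot-sized cells and searching for a path, here is a small Python sketch. The post does not say which search the path finding update uses, so the breadth-first search below is a stand-in, and the function names and grid layout are assumptions. Because each cell is one robot width wide, any free cell is a place the robot can fit.

```python
from collections import deque


def to_cell(x_cm, y_cm, robot_width_cm):
    # Each grid cell is one robot width on a side (assumed layout).
    return (int(x_cm // robot_width_cm), int(y_cm // robot_width_cm))


def find_path(start, goal, blocked, cols, rows):
    """Breadth-first search over a 4-connected grid.

    Returns the list of cells from start to goal, or None if no path exists.
    """
    if start in blocked or goal in blocked:
        return None
    came_from = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        cx, cy = current
        for nxt in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
            nx, ny = nxt
            inside = 0 <= nx < cols and 0 <= ny < rows
            if inside and nxt not in blocked and nxt not in came_from:
                came_from[nxt] = current
                queue.append(nxt)
    return None
```

For example, `find_path((0, 0), (2, 0), {(1, 0), (1, 1)}, cols=4, rows=3)` has to route around the two blocked cells, and it returns `None` when the whole column is blocked.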

Here it is with filtering to remove false positives. This gives the path finding system fewer false positives to worry about.

Part #3: We give way-points names

Part #2: In this video, I demonstrate multiple way-points and controlling them through control commands

Part #1: Just testing this Intel RealSense T265 out and seeing how it works with the ARC NMS (Navigation Messaging System)

Lol. Will there be any conversion factor (cm/s), or do you just push the raw, sloppy values in?

Haha, well, the conversion will be standard across all Roombas because it's the number of ticks per wheel rotation. I don't recall it off the top of my head, but it's published in their documentation.
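For anyone wondering what that conversion looks like, here is a small Python sketch. The ticks per rotation and wheel diameter are placeholders, not the Roomba's published values, so look those up in the documentation before using it. The function names are made up for this example.

```python
import math


def ticks_to_cm(ticks, ticks_per_rev, wheel_diameter_cm):
    # One wheel rotation covers one circumference (pi * diameter).
    return ticks / ticks_per_rev * math.pi * wheel_diameter_cm


def speed_cm_per_s(delta_ticks, dt_s, ticks_per_rev, wheel_diameter_cm):
    # Distance covered during the sample window divided by its duration.
    return ticks_to_cm(delta_ticks, ticks_per_rev, wheel_diameter_cm) / dt_s


# Placeholder numbers for illustration only.
TICKS_PER_REV = 500.0
WHEEL_DIAMETER_CM = 7.0

speed = speed_cm_per_s(250, 0.5, TICKS_PER_REV, WHEEL_DIAMETER_CM)
```

Note this only converts what the encoder counted. If the wheels slip, the ticks still add up, which is the reason the T265 is doing the localization here.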

Updated with video #2 of the series, demonstrating way-points. Look at the top of this robot description for Part #2 of this series.

Is that RealSense camera readily available? So many web companies I want stuff from have delays on back orders, and I can never get what I want. I really like how clear that camera is too!

Edit: Oh, I see that video is just the EZ Cam, right? LOL! It looks good when there's lots of light in the room! The RealSense is just showing the radar map part.

Looking forward to Part 3. Is the LattePanda or Rock Pi X powerful enough to run this so you can cut the cord? I'd love to see some obstacle avoidance in the next demo as well.

With colour ball tracking and a few of these bots that know where their goal posts are, a game of RoboCup would be fun (although a little expensive, so I guess we need to find a cheaper sensor).

If I were a real estate agent selling condos during Covid, I would be all over this. An upgraded Roomba robot sits charging in the corner. You click on the floor plan, and the robot drives into the room. You can take over and do some basic controls (access camera controls to look around), then click on another area in the floor plan and off you go. Let them keep the robot when they buy the condo as a telepresence / security robot / vacuum that actually works and doesn’t smash into the furniture.

The location processing is done within the sensor, so the LattePanda or any SBC would work with this. It just needs USB and the respective robot skills.

I REALLY enjoyed the vids, DJ! It gave me much relief that your experimental results were similar to what I have seen this sensor is capable of. You never know how something will work until you test it in a given environment... so it's really great to see it working for someone else... and integrated with the other skills too!

It's also great to see it working without the encoders for someone else... which is how I plan on using it too. The SDK supports integrating encoders into the SLAM algorithm on the sensor, so this would be a great (but not necessary) addition to the skill in a future version. It would probably improve the accuracy even further when trying to drive correct paths, especially in visually uninteresting areas. I have not been a fan of encoders in the past either, but from everything I read, they can play an important part in SLAM in particular, as one input to the pose probability calculation. For me, so far, I am happy with the accuracy without encoders in my office. From what I have read, the lack of encoders can become more of an issue when the bot is moving through hallways and other areas that have fewer interesting visual features to focus on (at multiple angles). Does my assessment seem accurate on this?

This is all exciting stuff. I am looking ahead to your next prototype and video. Here is a bit of a wishlist of sorts. I would think others like me will want to mount the T265 on a moving head...likely pan and tilt. This complicates the whole nav thing if the head is out of line and changing relative to the driving path...maybe that is already handled somehow. I am trying to integrate a compass into this mix which may not be necessary in the short run but I think is desirable for me and others. I used it in the past to maintain a bot on a proper heading while driving or between waypoints. Have any thoughts on compass integration? In future discussions once more people get on board with the T265, my guess is we will all start talking more about integrating depth cams, and persistent 2D or 3D gridmaps. Is that the path forward as you or others see it?

You could push compass data into the location stream of the NMS. But I wouldn't think the compass would be as accurate as the Intel RealSense's heading. You'd have to run a pretty hefty complementary or Kalman filter on the compass data and lean heavily on the T265's pose for stability.
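Here is a rough Python sketch of what a complementary filter along those lines could look like. It is not part of ARC or the NMS. The class name, the inputs in degrees and the `compass_weight` value are all assumptions. The idea is to follow the T265's yaw change from one update to the next, then nudge the result slightly toward the compass so the compass only corrects slow drift.

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


class HeadingFilter:
    """Hypothetical complementary filter: T265 yaw for short-term motion,
    compass for slow long-term correction."""

    def __init__(self, initial_heading_deg, initial_t265_yaw_deg, compass_weight=0.02):
        self.heading = initial_heading_deg
        self.prev_t265_yaw = initial_t265_yaw_deg
        self.compass_weight = compass_weight  # small value leans on the T265

    def update(self, t265_yaw_deg, compass_deg):
        # Short-term change comes entirely from the T265.
        delta = wrap_degrees(t265_yaw_deg - self.prev_t265_yaw)
        self.prev_t265_yaw = t265_yaw_deg
        predicted = self.heading + delta
        # Pull the prediction a small fraction of the way toward the compass.
        error = wrap_degrees(compass_deg - predicted)
        self.heading = wrap_degrees(predicted + self.compass_weight * error)
        return self.heading
```

With a small `compass_weight`, a noisy compass reading barely moves the heading on any single update, which is the "lean heavily on the T265" part. The wrapping keeps the math sane when the heading crosses the 180 degree boundary.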

Here's the NMS overview: https://synthiam.com/Support/ARC-Overview/robot-navigation-messaging-system