jp15sil24 (PRO, Germany) asked:

Do we currently need to manually load an occupancy grid or map into the Synthiam ARC software, and if so, what is the procedure for doing this? I have noticed that the robot skill does not have a settings user interface, as the 3-dot menu doesn’t open any configuration options.

Additionally, is the Wavefront robot skill fully developed and functional at this point, or are there still features related to map loading and configuration that are in development?

Related Hardware (view all EZB hardware)

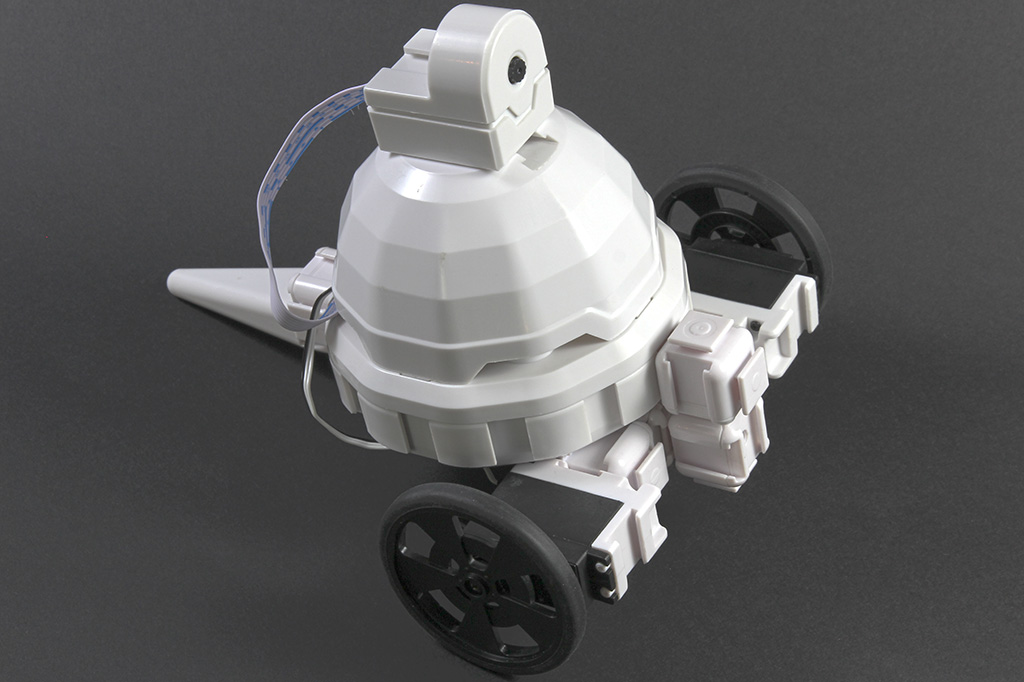

Adventurebot

by EZ-Robot

Two-wheeled WiFi robot with camera using EZ-B v4 brain-affordable, expandable AdventureBot kit built from EZ-Bits, sold by EZ-Robot.

Wi-Fi / USB

Servos

24

Camera

Audio

UART

3

I2C

ADC

8

Digital

24

Related Robot Skills

Wavefront by rz90208 (requires ARC, v2)

Wavefront grid planner computes collision-free, deterministic paths from start to goal using known occupancy maps for indoor robots.

EZ-SLAM by Synthiam (requires ARC, v25)

Creates a map in a global variable; proof-of-concept SLAM visualizer (very experimental).
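For readers unfamiliar with the technique named in the Wavefront skill description, here is a minimal, generic sketch of wavefront planning on an occupancy grid. It is not the robot skill's actual code and does not use any ARC API. The cell encoding (0 = free, 1 = obstacle), the 4-connected neighborhood, and the function name `wavefront_path` are assumptions made for illustration only.

```python
from collections import deque

FREE, OBSTACLE = 0, 1  # assumed cell encoding for this sketch only


def wavefront_path(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] == OBSTACLE or grid[goal[0]][goal[1]] == OBSTACLE:
        return None

    # A fixed neighbor order (up, right, down, left) keeps the result deterministic.
    neighbors = ((-1, 0), (0, 1), (1, 0), (0, -1))

    # Phase 1: expand a "wave" outward from the goal with breadth-first search,
    # labelling every reachable free cell with its step distance to the goal.
    dist = [[None] * cols for _ in range(rows)]
    dist[goal[0]][goal[1]] = 0
    queue = deque([goal])
    while queue:
        r, c = queue.popleft()
        for dr, dc in neighbors:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == FREE and dist[nr][nc] is None):
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))

    if dist[start[0]][start[1]] is None:
        return None  # the wave never reached the start cell

    # Phase 2: walk downhill from the start, always stepping to the first
    # neighbor whose distance label is exactly one lower.
    path = [start]
    r, c = start
    while (r, c) != goal:
        for dr, dc in neighbors:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and dist[nr][nc] is not None
                    and dist[nr][nc] == dist[r][c] - 1):
                r, c = nr, nc
                path.append((r, c))
                break
    return path


if __name__ == "__main__":
    demo_grid = [
        [0, 0, 0, 0],
        [1, 1, 0, 1],
        [0, 0, 0, 0],
    ]
    print(wavefront_path(demo_grid, (0, 0), (2, 0)))
```

The planner needs three inputs: a known occupancy map, a start cell, and a goal cell. That matches the skill description's "known occupancy maps", but how the actual skill receives its map inside ARC should be confirmed against the skill's own manual.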

Short answer

What Wavefront expects (important)

How to load a map into ARC (three recommended ways)

Notes about that script:

Why the Wavefront skill has no settings UI

Is Wavefront "fully developed"?

Pointers and links

Next steps - how I can help you now

Tell me which option you'd like and what map format or data you already have, and I’ll provide the exact script or step-by-step instructions.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.