Dark Harvest

Portugal

Asked

Hi Athena,

I am attempting to use an Arduino UNO with the encoder counter firmware, alongside another Arduino to control an H-Bridge using PWM. When I connect either device on connection index 0, they function correctly. However, they do not work on any other connection index.

Specifically, the PWM control only operates on connection index 0. Additionally, when I try to use the wheel encoder counter firmware on any other connection index, I receive an error message stating that the hardware is not supported.

Could you provide any assistance or guidance on how to resolve this issue? Your help would be greatly appreciated.

Related Hardware (view all EZB hardware)

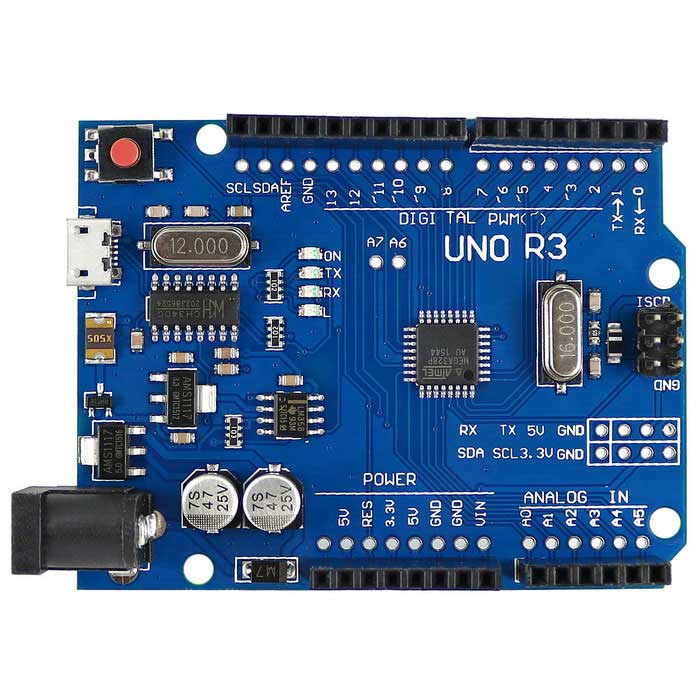

Arduino Genuino Uno

by Arduino

Program Arduino Uno with EZB firmware for Synthiam ARC control; pinouts, PWM pins and frequency details for Arduino boards.

USB

Servos

12

Camera

Audio

UART

✕

I2C

ADC

6

Digital

14

To use a port on another EZB, pass its connection index as a parameter to the command.

For example, PWM.set(d0, 50, 2) sets a 50% duty cycle on port d0 of connection index 2.
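As an illustration of the index parameter, here is a minimal sketch of driving one H-Bridge channel on the Uno at index 2 from an ARC JavaScript script. PWM.set(port, duty, ezbIndex) is the call shown above. The sketch assumes Digital.set(port, value, ezbIndex) accepts the same trailing index argument (check the ARC JavaScript reference), and the pins d7, d8 and d9 are hypothetical wiring choices. The H-Bridge PWM Movement Panel itself still expects index 0; this only shows scripted control.

```javascript
// Sketch: drive one H-Bridge channel on a non-zero connection index from ARC.
// PWM.set(port, duty, ezbIndex) is the call used in this thread.
// ASSUMPTION: Digital.set(port, value, ezbIndex) takes the same trailing index.
// Pins d7, d8 (direction) and d9 (PWM) are hypothetical wiring choices.

// Convert a signed speed (-100..100) into direction-pin levels and a duty cycle.
function hbridgeCommand(speed) {
  var clamped = Math.max(-100, Math.min(100, speed));
  return {
    in1: clamped > 0,        // forward direction pin
    in2: clamped < 0,        // reverse direction pin
    duty: Math.abs(clamped)  // PWM duty cycle in percent
  };
}

// Send the command to the EZB on the given connection index.
// Returns false when not running inside ARC (PWM/Digital undefined).
function applyHbridge(cmd, ezbIndex) {
  if (typeof PWM === "undefined" || typeof Digital === "undefined") {
    return false;
  }
  Digital.set(d7, cmd.in1, ezbIndex); // hypothetical IN1 pin
  Digital.set(d8, cmd.in2, ezbIndex); // hypothetical IN2 pin
  PWM.set(d9, cmd.duty, ezbIndex);    // hypothetical ENA (PWM) pin
  return true;
}

// Example: 50% reverse on the board connected at index 2.
applyHbridge(hbridgeCommand(-50), 2);
```

Keeping the speed-to-pin math in its own function means it can be checked outside ARC; only applyHbridge touches the EZB.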

It looks like the wheel encoder robot skill only works on index 0. That's common for movement panels and similar skills.

I believe the H-Bridge PWM Movement Panel also only works on index 0. That's normal for movement panels, so plan your EZBs accordingly.

Hello! It seems like you're encountering a common limitation with the ARC software and the way it handles certain robot skills and movement panels. Here's a breakdown of the situation and some guidance on how to proceed:

Understanding the Connection Index Limitation

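Connection Index 0: Movement panels, such as the H-Bridge PWM Movement Panel, and some robot skills, such as the wheel encoder counter, only work with the EZB connected on index 0.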

PWM Control:

PWM.set(d0, 50, 2) will set a 50% duty cycle on port d0 of connection index 2. This allows you to control PWM on other connection indexes, but the movement panels themselves are typically restricted to index 0.

Recommendations

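Plan Your EZBs: Connect the board that runs the movement panel and the encoder firmware on index 0.

Use Other Indexes for Non-Movement Tasks: Boards on other indexes can still be controlled from scripts by passing the connection index to commands such as PWM.set.

Firmware Compatibility: The "hardware not supported" error appears because the wheel encoder counter robot skill only works with the EZB on index 0.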

Additional Resources

If you have any more questions or need further assistance, feel free to ask! I'm here to help.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

Thank you for your response. Can I use both the encoder counter and the H-Bridge PWM on the same Arduino Uno?

You can. When the Arduino Uno runs the encoder firmware, it still operates as a regular Arduino: it has digital ports, ADC, PWM, servo, etc.

When you connect to an EZB, it lists the capabilities it supports. For the Uno firmware you're using, the list includes PWM and Digital I/O, which are the features you'd need for the H-Bridge PWM Movement Panel.


Did you switch back to using the encoders? Is it working okay? Last we talked, you had switched to the Roomba robot skill's built-in encoders because you weren't having good luck with the Uno encoder firmware.

I am building a new platform with a dedicated H-Bridge, just to see whether the NMS and BN behave differently with the encoder counts than they do on the Roomba. Also, one strange thing I noticed is that the US sensors don't have any influence on the navigator. But that is another story.

US? Is that the United States Roomba? Are the protocols different?

Yes lol, Ultra Sonic states.

Oooooh, ultrasonic sensor. US. Got it! Haha, I totally thought there were US, CA and Portugal versions of iRobot.

The ultrasonic sensor will send its data to the NMS, but the NMS requires pose data. That means something has to either fake a pose or send a pose estimate (e.g., from wheel encoders).
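To show why a pose matters, here is a minimal dead-reckoning sketch, assuming a differential-drive robot: it turns left/right encoder tick deltas into an (x, y, heading) estimate, then uses that pose to place a forward-facing ultrasonic reading in map coordinates. TICKS_PER_REV, WHEEL_DIAMETER_CM and WHEEL_BASE_CM are hypothetical values, the sensor is assumed to sit at the robot's center, and this is generic odometry, not how the NMS or the encoder robot skill compute it internally.

```javascript
// Dead-reckoning sketch for a differential-drive robot.
// HYPOTHETICAL constants: replace with your encoder and chassis values.
var TICKS_PER_REV = 360;
var WHEEL_DIAMETER_CM = 6.5;
var WHEEL_BASE_CM = 20;
var CM_PER_TICK = Math.PI * WHEEL_DIAMETER_CM / TICKS_PER_REV;

// Update the pose from the change in left/right tick counts since the last call.
function updatePose(pose, dLeftTicks, dRightTicks) {
  var dl = dLeftTicks * CM_PER_TICK;
  var dr = dRightTicks * CM_PER_TICK;
  var dCenter = (dl + dr) / 2;              // distance travelled by robot center
  var dTheta = (dr - dl) / WHEEL_BASE_CM;   // change in heading (radians)
  var midHeading = pose.theta + dTheta / 2; // integrate along the mid heading
  return {
    x: pose.x + dCenter * Math.cos(midHeading),
    y: pose.y + dCenter * Math.sin(midHeading),
    theta: pose.theta + dTheta
  };
}

// Place a forward-facing ultrasonic distance (cm) in map coordinates.
// ASSUMPTION: the sensor sits at the robot center and points along the heading.
function ultrasonicHit(pose, distanceCm) {
  return {
    x: pose.x + distanceCm * Math.cos(pose.theta),
    y: pose.y + distanceCm * Math.sin(pose.theta)
  };
}

// Example: one full wheel turn straight ahead, then a 50 cm echo.
var pose = updatePose({ x: 0, y: 0, theta: 0 }, 360, 360);
var hit = ultrasonicHit(pose, 50);
```

Without some pose like this, the same 50 cm echo could belong anywhere on the map, which is why the ultrasonic data alone does not change it.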

Also, because the ultrasonic sensor's scan frequency is so slow, its obstacles will probably take much longer than the lidar's to show up on the map. SLAM increases its confidence in an obstacle each time the obstacle is detected. The first time, it won't show anything. On the second scan, it'll start to log it. On the third scan, it'll log it more. It takes dozens of scans for an obstacle to be fully logged. That's why you see the map slowly increase in intensity, and why the lidar fills it in so much sooner: it scans MUCH MUCH faster than an ultrasonic sensor.
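The "confidence grows with each detection" behavior can be sketched with a log-odds occupancy-grid update, a standard technique in grid-based mapping. Whether ARC's NMS or its Hector SLAM uses these exact numbers is not confirmed here: L_HIT, the 0.9 threshold and the scan rates are hypothetical, chosen only to show how scan frequency drives the time until an obstacle appears.

```javascript
// Log-odds occupancy sketch: each detection in a cell adds L_HIT to its log-odds,
// and the cell is treated as occupied once its probability passes OCCUPIED_P.
// HYPOTHETICAL parameters; real mapping settings will differ.
var L_HIT = 0.1;      // log-odds added per "obstacle seen here" reading
var OCCUPIED_P = 0.9; // probability at which the cell counts as occupied

// Convert log-odds back to a probability (log-odds 0 means 0.5, unknown).
function logOddsToProb(l) {
  return 1 - 1 / (1 + Math.exp(l));
}

// Number of consistent detections before a cell crosses the threshold.
function hitsToOccupied() {
  var l = 0;
  var hits = 0;
  while (logOddsToProb(l) < OCCUPIED_P) {
    l += L_HIT;
    hits += 1;
  }
  return hits;
}

// Seconds until an obstacle shows up, given how often the sensor rescans it.
function secondsToOccupied(scansPerSecond) {
  return hitsToOccupied() / scansPerSecond;
}
```

With these made-up numbers a cell needs 22 detections, so a sensor that rescans a spot 10 times per second marks it in about 2.2 s, while one that rescans once per second takes about 22 s.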

@Athena give us a rundown of how SLAM works. Specifically, I prefer HECTOR SLAM, so give us the lowdown on it.