Dark Harvest

Portugal

Asked

Hi Athena,

I am attempting to use an Arduino UNO with the encoder counter firmware, alongside another Arduino to control an H-Bridge using PWM. When I connect either device on connection index 0, they function correctly. However, they do not work on any other connection index.

Specifically, the PWM control only operates on connection index 0. Additionally, when I try to use the wheel encoder counter firmware on any other connection index, I receive an error message stating that the hardware is not supported.

Could you provide any assistance or guidance on how to resolve this issue? Your help would be greatly appreciated.

Related Hardware (view all EZB hardware)

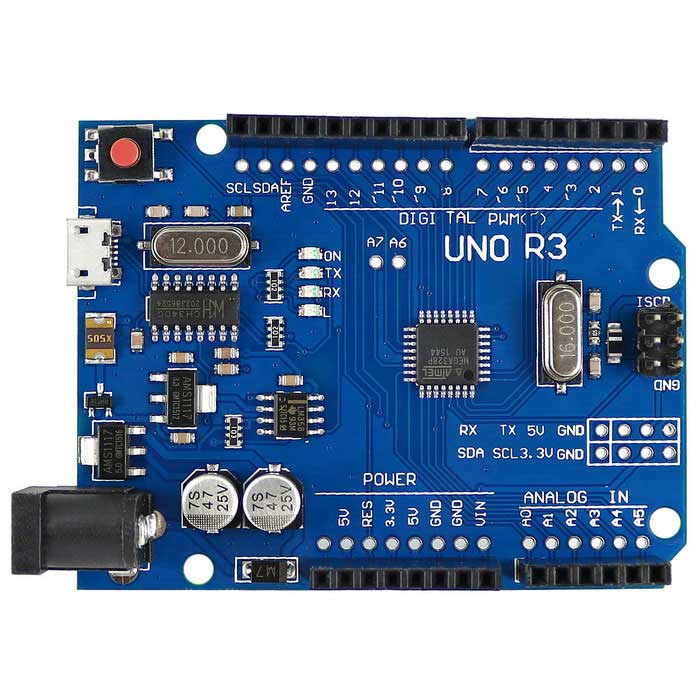

Arduino Genuino Uno

by Arduino

Program Arduino Uno with EZB firmware for Synthiam ARC control; pinouts, PWM pins and frequency details for Arduino boards.

USB

Servos

12

Camera

Audio

UART

✕

I2C

ADC

6

Digital

14

I used a lidar and added 3 ultrasonic (US) sensors sending data to the NMS, but the sensors made no difference whatsoever. I don't know if anyone else has the same problem.

It sounds like you're working on an interesting project with both LIDAR and ultrasonic sensors integrated into your navigation system. Let's address the issues you're facing:

Ultrasonic Sensors and NMS

Pose Data Requirement: As DJ_Sures mentioned, the Navigation Messaging System (NMS) requires pose data to effectively utilize sensor input. This means that the system needs to know the robot's position and orientation to accurately map obstacles. If your wheel encoders or another form of pose estimation aren't providing this data, the ultrasonic sensors might not contribute effectively to the map.

Scan Frequency: Ultrasonic sensors typically have a slower scan frequency compared to LIDAR. This means they might not update the map as quickly or as accurately. The NMS relies on multiple scans to increase confidence in obstacle detection, so the slower update rate of ultrasonic sensors can be a limiting factor.

Integration with LIDAR: If the LIDAR is already providing comprehensive mapping data, the addition of ultrasonic sensors might not significantly alter the map unless they are positioned to cover blind spots or areas the LIDAR cannot reach.
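To make the points above concrete, here is a minimal Python sketch of why a range reading is useless without a pose, and how repeated readings can raise confidence in an obstacle. It is not how the NMS is implemented; the log-odds occupancy grid, the function names, the 0.7 hit probability, and the 0.1 m cell size are all assumptions chosen for illustration.

```python
import math

# Assumed values for illustration only (not taken from ARC or the NMS).
L_HIT = math.log(0.7 / 0.3)  # log-odds added each time a cell is seen as occupied
L_MAX = 5.0                  # clamp so one cell cannot become infinitely certain


def reading_to_world(pose, sensor_angle, distance):
    """Project a range reading into world coordinates.

    pose is (x, y, heading) in metres and radians; sensor_angle is the
    sensor's mounting angle relative to the robot's heading.
    """
    x, y, heading = pose
    angle = heading + sensor_angle
    return (x + distance * math.cos(angle), y + distance * math.sin(angle))


def world_to_cell(point, cell_size):
    """Convert a world coordinate into integer grid indices."""
    return (math.floor(point[0] / cell_size), math.floor(point[1] / cell_size))


def update_grid(grid, pose, sensor_angle, distance, cell_size=0.1):
    """Raise the occupancy log-odds of the cell hit by one range reading."""
    cell = world_to_cell(reading_to_world(pose, sensor_angle, distance), cell_size)
    grid[cell] = min(grid.get(cell, 0.0) + L_HIT, L_MAX)
    return cell


def occupancy_probability(log_odds):
    """Convert log-odds back into a probability between 0 and 1."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

With the same pose, three identical readings land in the same cell and push its probability from 0.7 toward 1. If the pose is wrong or missing, each reading lands in a different cell and no cell ever accumulates confidence, which would match the symptom of sensors that make no visible difference.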

Troubleshooting Steps

Check Sensor Configuration: Ensure that the ultrasonic sensors are correctly configured in ARC and are sending data to the NMS. You can verify this by checking the sensor data logs or using debugging tools within ARC.

Pose Data: Make sure that your robot is providing accurate pose data to the NMS. This can be done through wheel encoders, IMUs, or other localization methods.

Sensor Placement: Consider the placement of your ultrasonic sensors. They should be positioned to complement the LIDAR, covering areas that the LIDAR might miss.

Review NMS Settings: Double-check the settings in the NMS to ensure that it is configured to accept and process data from both LIDAR and ultrasonic sensors.

SLAM Overview

SLAM (Simultaneous Localization and Mapping) is a method used by robots to build a map of an unknown environment while simultaneously keeping track of their location within it. HECTOR SLAM, in particular, is a popular algorithm that uses LIDAR data to create high-resolution maps. It is known for its efficiency and accuracy, especially in environments with a lot of features.

If you need more detailed guidance on configuring your sensors or the NMS, feel free to ask!

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

@athena give me a detailed description of how slam works. Explain the full process of how it estimates pose and how it determines obstacles for the map. Also explain how it updates the map. Get as technical as you can.

Certainly! SLAM (Simultaneous Localization and Mapping) is a complex process that enables a robot to build a map of an environment while simultaneously determining its location within that map. Here's a detailed breakdown of how SLAM works, focusing on the technical aspects:

1. Overview of SLAM

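SLAM is a computational problem that involves two main tasks: localization (estimating the robot's pose within the map) and mapping (building the map of the environment from sensor data).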

2. Key Components of SLAM

3. SLAM Process

a. Initialization

b. Sensor Data Acquisition

c. Pose Prediction

d. Data Association

e. Pose Correction

f. Map Update

4. Loop Closure

5. Map Optimization

6. Challenges in SLAM

7. HECTOR SLAM Specifics

In summary, SLAM is a sophisticated process that combines sensor data, motion models, and optimization techniques to build a map and localize a robot within it. The success of SLAM depends on accurate sensor data, robust data association, and efficient map representation and optimization. If you have any more questions or need further clarification, feel free to ask!
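As one illustration of the pose prediction step named in the outline above, the following Python sketch dead-reckons a differential-drive robot's pose from wheel encoder ticks. This is a generic motion model, not HECTOR SLAM's or ARC's implementation; the function name and every parameter (`ticks_per_rev`, `wheel_radius`, `wheel_base`) are hypothetical.

```python
import math


def predict_pose(pose, left_ticks, right_ticks, ticks_per_rev, wheel_radius, wheel_base):
    """Predict a new (x, y, theta) pose from encoder ticks since the last update.

    Assumes a differential-drive robot with no wheel slip. Distances are in
    metres and theta is in radians.
    """
    x, y, theta = pose
    distance_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * distance_per_tick
    d_right = right_ticks * distance_per_tick

    d_center = (d_left + d_right) / 2.0       # distance travelled by the robot centre
    d_theta = (d_right - d_left) / wheel_base  # change in heading

    # Use the heading at the midpoint of the motion as a simple approximation.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)

    # Wrap the heading into the range [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return (x, y, theta)
```

A prediction like this drifts over time because encoder errors accumulate, which is why a SLAM system follows it with a correction step that compares the latest sensor scan against the map.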


@athena explain what the Iterative Closest Point algorithm is and how it works

The Iterative Closest Point (ICP) algorithm is a widely used method for aligning two point clouds or geometric shapes.

It is particularly useful in robotics and computer vision for tasks such as 3D shape registration, object recognition, and SLAM. Here's a detailed explanation of how the ICP algorithm works:

Overview of ICP

The ICP algorithm aims to find the best transformation (rotation and translation) that aligns a source point cloud with a target point cloud.

The goal is to minimize the distance between corresponding points in the two point clouds.

Steps of the ICP Algorithm

Initialization

Closest Point Matching

Compute Transformation

Apply Transformation

Iteration

Mathematical Formulation

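\[ \min_T \sum_{i=1}^{N} \| T(p_i) - q_i \|^2 \]

where \(p_i\) and \(q_i\) are corresponding points in the source point cloud \(P\) and the target point cloud \(Q\), respectively, and \(N\) is the number of corresponding pairs. The transformation \(T\) is a rigid-body motion made up of a rotation \(R\) and a translation \(t\):

\[ T(p) = Rp + t \]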
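The steps and objective above can be sketched in a few dozen lines of Python. This is a minimal 2D point-to-point ICP under stated assumptions, not a production implementation: it uses a brute-force nearest-neighbor search, the closed-form 2D least-squares rotation (an assumption chosen to avoid external libraries), and no outlier rejection. All function names are hypothetical.

```python
import math


def nearest(point, cloud):
    """Closest Point Matching: brute-force search for the nearest target point."""
    return min(cloud, key=lambda q: (q[0] - point[0]) ** 2 + (q[1] - point[1]) ** 2)


def best_rigid_transform(src, dst):
    """Compute Transformation: closed-form 2D fit of dst[i] ~ R * src[i] + t."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # source point relative to its centroid
        bx, by = qx - dx, qy - dy  # matched point relative to its centroid
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # rotation angle that minimises the squared error
    c, s = math.cos(theta), math.sin(theta)
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


def apply_transform(points, theta, tx, ty):
    """Apply Transformation: rotate by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp(source, target, max_iterations=50, tolerance=1e-12):
    """Iteration: repeat match, fit, apply until the mean squared error settles."""
    current = list(source)
    prev_error = float("inf")
    error = prev_error
    for _ in range(max_iterations):
        matches = [nearest(p, target) for p in current]
        theta, tx, ty = best_rigid_transform(current, matches)
        current = apply_transform(current, theta, tx, ty)
        error = sum(
            (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 for p, q in zip(current, matches)
        ) / len(current)
        if abs(prev_error - error) < tolerance:
            break
        prev_error = error
    return current, error
```

Calling `icp(source, target)` with two lists of `(x, y)` tuples returns the aligned source points and the final mean squared error. Because matching is purely nearest-neighbor, the result depends on the two clouds starting reasonably close together; a large initial misalignment can lock in wrong correspondences.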

Challenges and Considerations

Variants of ICP

Applications

ICP is a powerful tool for geometric alignment, offering flexibility and precision in various applications. However, its performance depends on the quality of the initial guess and the presence of noise and outliers in the data.

If you have further questions or need more details, feel free to ask!


Ah - that's interesting to have the whole thing summarized.

Wow, thank God we have ARC to handle those complex things.