Mac

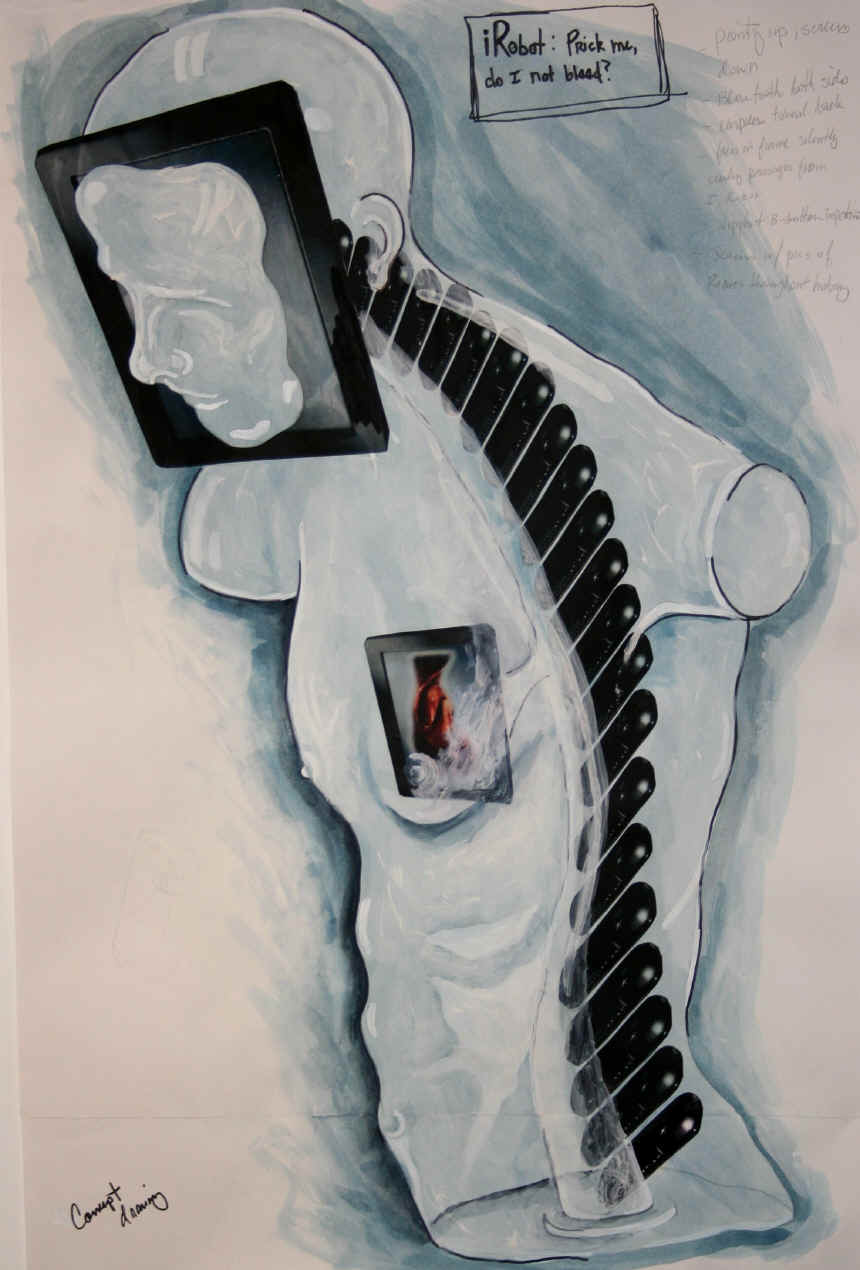

Hello everyone, I'm working on a robotic sculpture called "iRobot: Prick me, do I not bleed?" It's part of my series "The Classics": https://studio407.net/recent_work.htm

The robotics are pretty simple: the head/nook/face uses face tracking with the camera and a servo. Eventually I will have the torso swivel to face the viewer, and I will implement speech.

My issue is that, since it may end up in an art exhibition or two, I would like the sculpture to be autonomous. The "plan" was to install an Android tablet in the support pedestal running a custom mobile app. But am I right that the mobile app would be an adjunct to the PC running ARC and not the main processing unit? In other words, will I still need a laptop running Windows and ARC, communicating with the EZ-B v4, alongside the app?

If this is the case, would, say, a Microsoft Surface Pro 4 (i5 processor) be able to run ARC, be installed in the pedestal, and make the sculpture autonomous?

I have an "in" with Barnes and Noble (my wife is the store manager, lol), so I could get an extremely good price on a Galaxy Tab S2 Nook, which has an octa-core processor. But the PC version of ARC won't run on Android, true?

This is my first post. I love that DJ had the foresight and vision to take all the scattered C++, Arduino, and Processor code and package it in an easy to use graphical interface.

Many thanks, -Mac

@ptp Correct, there is no mobile control for the camera. However, there is a camera object that can be added to the mobile interface.

You can find the list of supported features here: https://synthiam.com/Tutorials/Help.aspx?id=196

Thanks @Mac for the very kind words. Coming from an Associate Professor of Art with the mechanical skill to build a car, this is a huge compliment! It's also nice to find another fan of LIS. You must be a big fan to have watched all three seasons that many times. I do wish the scripts were better, though. Did you know Netflix is going to do a reboot of the show in 2018? They already have most of the actors signed on. Can't wait.

More to this topic's point, I wish I had more to offer. I've been so busy getting the B9 built and basically working that I haven't really branched off into some of the other excellent features of the EZ-Robot platform. I'm extremely interested in this topic, as I'm really looking forward to setting up a mobile interface to control some body movements along with the arms when I want. Again, I'm not sure what all I can accomplish with the Mobile App, but it's going to be fun to learn. I also plan to find the best tracking method so my B9 can turn and follow people as they walk by and respond when it notices movement or a face. This sounds a lot like what you're trying to do, so I'll be watching and learning from the "Professor" if you are willing to share your process.

Good luck on your build and please keep us informed as you go.

More on the tracking with mobile topic...

Here's an excerpt from the JD project's Mobile Interface. This is the code that sits in the checkbox for "Color Tracking".

Find out more about EZ-Script and ControlCommand() in the activity tutorial here: https://synthiam.com/Tutorials/Lesson/23?courseId=6

Of course, the camera control on the PC would need to have the servo/movement/scripts configured. Using ControlCommand(), you can enable or disable tracking types.

If your camera should start tracking right from the get-go, simply add the ControlCommand() that enables tracking to the Connection Control, or have the checkbox set on the PC Camera control when the project is saved to the cloud.

@ptp I was just looking at the Surface. A nice little unit with a nice big price tag, lol. I'm wondering if I could simply use the Surface to run the PC version of Builder. I kind of like the complex graphical look of Builder more than the look of the apps. And it might make me appear smarter than I really am.

@guru Thanks for the links. I did see the controls list, which is why I started down this road in the first place, but I was confused as to why I wasn't getting the control tabs. It sounds like, from what DJ was saying, I can create my own. I did read about the checkboxes; I just wasn't sure what their purpose was.

@DJ Thanks for the input, but I didn't mean to make you work on a Saturday. I think I've been avoiding the EZ-Script tutorials because I've spent the last couple of months trying to teach myself Arduino and got a little burnt out. Then I found your site and I thought, "Why the heck didn't I find this two months ago?!" Not time wasted, though. I know a little syntax and command structure now. I can read an Arduino sketch better than I can write one. I'll keep playing with the mobile interface builder and see what I come up with.

All great info. Thanks, everyone.

So if you're avoiding EZ-Script, that's okay. Simply save the Camera's Tracking State (i.e. the checkboxes on the tracking tab) when you save your project. Is your tracking going to move two servos (horizontal/vertical)?

If so, that's quite simple, and you'll never need to touch EZ-Script.

1. Load the Camera control.
2. Select and START the camera source device (i.e. the EZ-B v4 camera).
3. Press the GEAR icon to open the configuration menu.
4. Select the servo TRACKING checkbox.
5. Specify the horizontal and vertical servos and their ranges.
6. Save the configuration menu.
7. Switch to the TRACKING tab on the camera control.
8. Select your desired tracking type.

There, your robot is tracking.
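For anyone curious what servo tracking roughly does behind those checkboxes, here is a conceptual sketch in Python. It is NOT ARC's actual implementation; the function name `track_step`, the gain, the dead zone, and the 1-180 servo range are all hypothetical values chosen for illustration. The idea: measure how far the face (or color blob) is from the center of the frame, nudge the servo proportionally toward it, and clamp the result to the range you specified in step 5.

```python
def track_step(servo_pos: int, target_x: float, frame_width: int,
               servo_min: int, servo_max: int,
               gain: float = 0.05, dead_zone: float = 10.0) -> int:
    """Return the next horizontal servo position (hypothetical helper)."""
    # Horizontal offset of the target from the frame center, in pixels.
    error = target_x - frame_width / 2
    # Ignore small offsets so the head doesn't jitter when the face is near center.
    if abs(error) <= dead_zone:
        return servo_pos
    # Move proportionally to the offset; flip the sign of gain if the servo is mounted reversed.
    new_pos = round(servo_pos + gain * error)
    # Keep the servo inside the range configured for it.
    return max(servo_min, min(servo_max, new_pos))


if __name__ == "__main__":
    pos = 90  # assumed starting position: head facing forward
    # Hypothetical face x-positions in a 320-pixel-wide frame as a viewer walks past.
    for x in (300, 280, 250, 200, 170):
        pos = track_step(pos, x, 320, 1, 180)
        print(pos)
```

A small gain plus a dead zone is a common trade-off: the head turns smoothly instead of snapping back and forth, at the cost of reacting a little more slowly to fast movement.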

1. Add a Mobile Interface control to the project.
2. Edit the mobile interface and add a camera to the view.
3. Also add a CONNECTION to the view.
4. Save the mobile interface.

Now all you have to do is save it to the cloud and load it onto your device.

Voilà. You have a vision-tracking mobile app.

@Mac One bit of advice: don't overthink things. EZ-Robot is, as its name suggests, easy. Doing basic things like face tracking can literally, as @DJ mentions, take a few clicks of the mouse.

Later on, as you gain more experience, you can really rock your projects by learning EZ-Script.

Sorry, fellas, I'm working on the spine and my hands are covered in clay. The white things are plastic "blanks" for the cell phones, which will run videos of robots through the ages as well as videos of the myriad ways we are desecrating the Earth (which is why the robots take over, to protect us from ourselves, in the Asimov story).

Okay DJ, I've done everything you outlined, but only in Builder. I mounted the camera to the servo (just a single servo for now, to move the head left and right), and it tracks colors and faces quite nicely.

So what you're saying is that the Mobile Interface Builder reads from the ARC project that's currently open and adds the necessary controls? That's pretty darn cool.

I'll upload a video of the project using the Arduino and a couple of ultrasonic sensors to track movement. It had problems that I couldn't iron out through code or hardware, and I wanted to use a camera anyway, so here I am.

Thanks, Richard, I like that approach. I'm usually the one lecturing my students to slow down and take their time. But I have to present this thing to the board of trustees in about two months, and I still have about...mmm...four months of work to do.

DJ, I'll figure this out, dude. It's Saturday and you're still young! Go party or something. I know I would if I could.