Mac

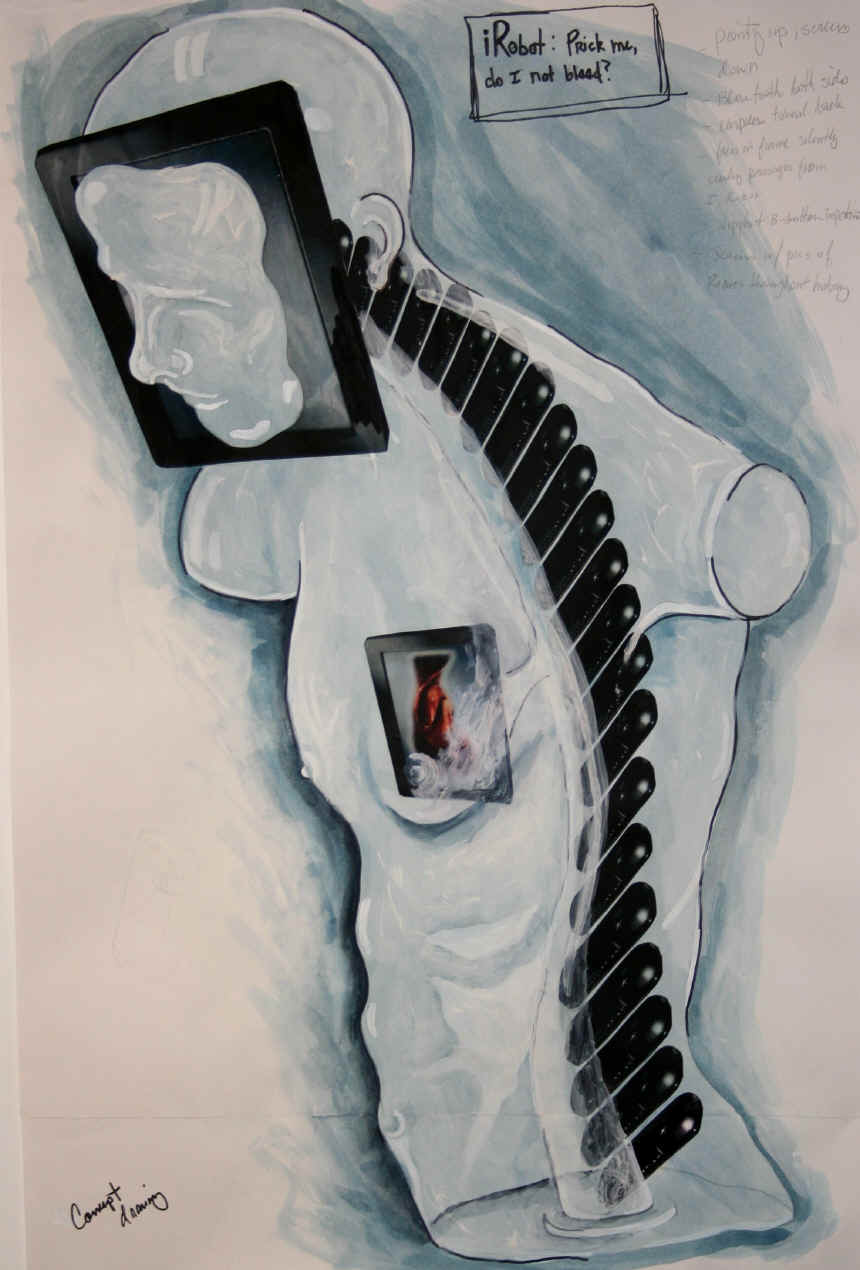

Hello everyone, I'm working on a robotic sculpture called "iRobot: Prick me, do I not bleed?" It's part of my series "The Classics" https://studio407.net/recent_work.htm

The robotics are pretty simple: the head/neck/face uses face tracking with the camera and a servo. Eventually I will have the torso swivel to face the viewer, and I will implement speech.

But my issue is, seeing as it may end up in an art exhibition or two, I would like the sculpture to be autonomous. The "plan" was to have an Android tablet installed in the support pedestal running a custom mobile app. But am I understanding correctly that the mobile app would be an adjunct to the PC running ARC and not the main processing unit? In other words, will I still need a laptop running Windows and ARC communicating with the EZ-B v4 along with the app?

If this is the case, would, say, a Microsoft Surface Pro 4 (i5 processor) have the ability to run ARC, be installed in the pedestal, and make the sculpture autonomous?

I have an "in" with Barnes and Noble (my wife is the store manager, lol), so I could get an extremely good price on a Galaxy Tab S2 Nook, which has an octa-core processor. But ARC for the PC won't run on Android, true?

This is my first post. I love that DJ had the foresight and vision to take all the scattered C++, Arduino, and Processor code and package it in an easy to use graphical interface.

Many thanks, -Mac

No... You only need a PC and ARC in order to develop your mobile app... After that you can just use your Android tablet or phone. No need for a separate laptop running ARC...

Very cool and very, very creative! I love your concepts and work. I hope you continue to share this with us.

What Richard R said. He nailed it.

Excellent, excellent news, guys. Thanks so much. And thanks for the kind words, Dave. I've been watching your progress on the B9 and I have to say, what you're doing is... intimidating. But in a really good way. I have a '71 Olds Cutlass that I've been restoring in my garage/studio and I've been through all three seasons maybe 12-15 times, watching as I work. So now I lovingly refer to the Olds as the Jupiter III.

Again, thanks for the insight and thanks for the info, Richard. I'll update this thread as I make progress. I have to say this has been the most ambitious artwork I've taken on.

-Mac

I've been through the tutorials and watched the vids but I don't understand how to control the camera in the mobile app. I don't see how to add the control tabs (tracking, color, device, motion, etc) in the mobile interface. Is there a thorough tutorial that I'm not finding?

@Mac,

I would recommend a mini PC or a Microsoft Surface (Intel) with Windows 10

Android version: Camera control (i.e. face tracking) is not implemented, although you can access the EZB camera feed.

Android version: Text to Speech output is only supported on the tablet speaker (no output on the EZB speaker, i.e. SayEZB).

Speech Recognition is not implemented.

There are other limitations, but they are only relevant if you plan to use those features.

See https://synthiam.com/Tutorials/Help.aspx?id=196 for the supported mobile controls. I agree with ptp, a Windows computer is better suited for an autonomous robot.

You can get small, DC-powered Windows computers or inexpensive tablets that are more than capable of running ARC.

See https://synthiam.com/Community/Questions/8714 for a good discussion of what is available.

Alan

Face tracking on Android or iOS is actually better than on a PC because it uses hardware processing.

Mobile control capabilities can be viewed on the mobile interface builder manual page, which lists the supported tracking types.

The tracking methods are enabled or disabled using control commands. You can add check boxes to enable or disable each tracking type. See the Six or JD project for an example.

Lastly, the activities section in the learn tutorials has examples of how to use control commands. So does every other tutorial involving EZ-Script.

I'm on my phone and not able to write more than this.

Dj

I mentioned not supported because the camera control says not implemented.