smiller29

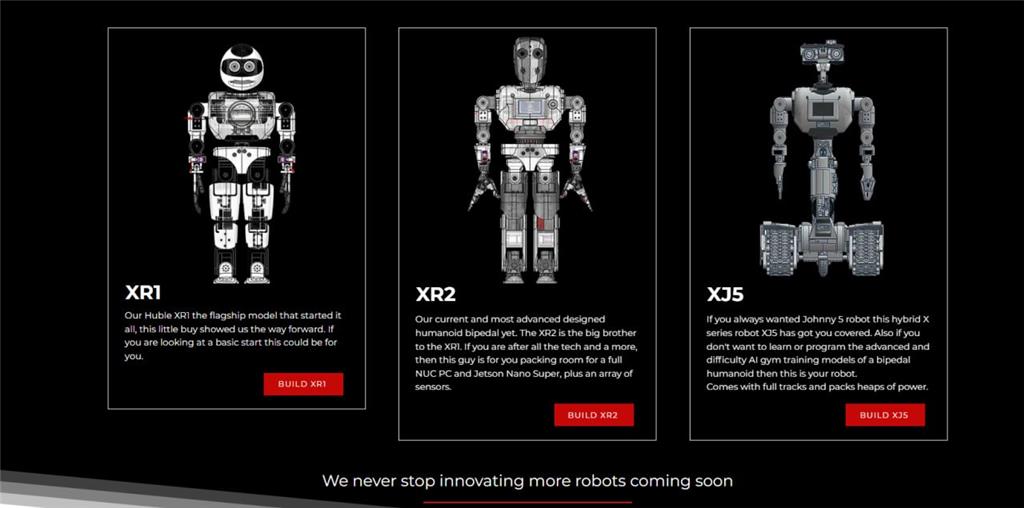

The Birth Of The XR-1 DIY Robot

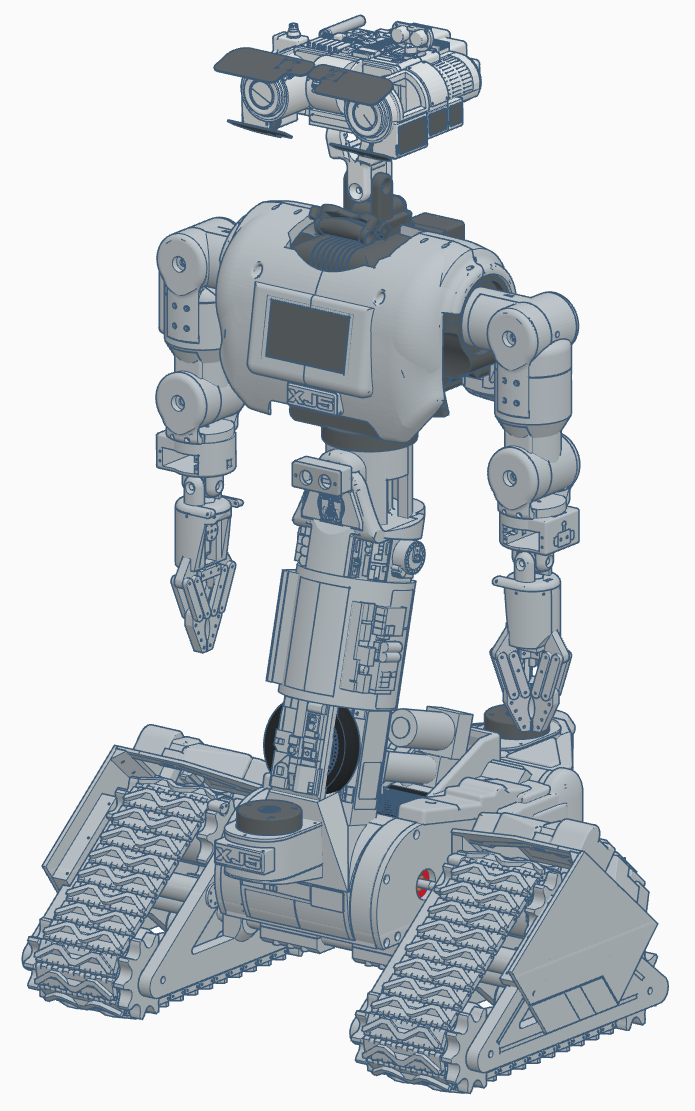

Introducing the Updated XJ-5: A New Class of Hobby Robots

The XJ-5 robot platform, available at Robots Builder, is known for its user-friendly design, emphasizing accessibility and ease of assembly. Designed to make robotics more approachable, the XJ-5 is meticulously engineered for simple 3D printing and assembly using standard components such as servos and bearings.

Our objective is to simplify the building process so that enthusiasts and robot hobbyists of all skill levels can engage in constructing their own robots. We've dedicated significant time to perfecting the design, enabling users to quickly progress to the exciting tasks of programming and teaching their robots using Synthiam's ARC software. The XJ-5 is highly customizable, featuring a variety of hands and heads to allow personalization of each creation. To foster a collaborative and open community, we're proud to announce that the XJ-5 is entirely open source, inviting users to contribute, modify, and share their innovations globally. Get ready to embark on a journey of creativity and exploration with the XJ-5!

For more information, check out Robots Builder.

As a creator of the XJ-5 and our other XR series, I will be utilizing the Synthiam ARC platform for robot control, and I hope others here will join me in developing an ARC project to support the XJ-5 robot. As of January 9th, 2024, we have started posting files for 3D printing. Meanwhile, we are developing documentation and updating the website, so please check back regularly.

My goal is to develop a walking, talking, listening, and seeing robot the size of a small child using ARC and its various features. As I progress, I will be sharing more about my efforts here.

Now, let's delve into the project, focusing on the new and improved XR1, the XJ-5, which I have been designing and building over the past year.

As a young enthusiast, I loved the movie Short Circuit and was inspired by the Johnny 5 robot, motivating me to create a similar robot. Although not a direct replica of Johnny 5, it embodies some of its iconic features. About a year ago, DJ suggested opting for a robot with wheels or tracks over a bipedal robot due to the complexity of achieving stable walking, a challenge I am familiar with from our XR2 project. But that's a story for another time.

Now, in May 2025, I've nearly completed the assembly and will soon start implementing ARC and the AI brain I've been developing to enhance ARC support. Expect more updates soon.

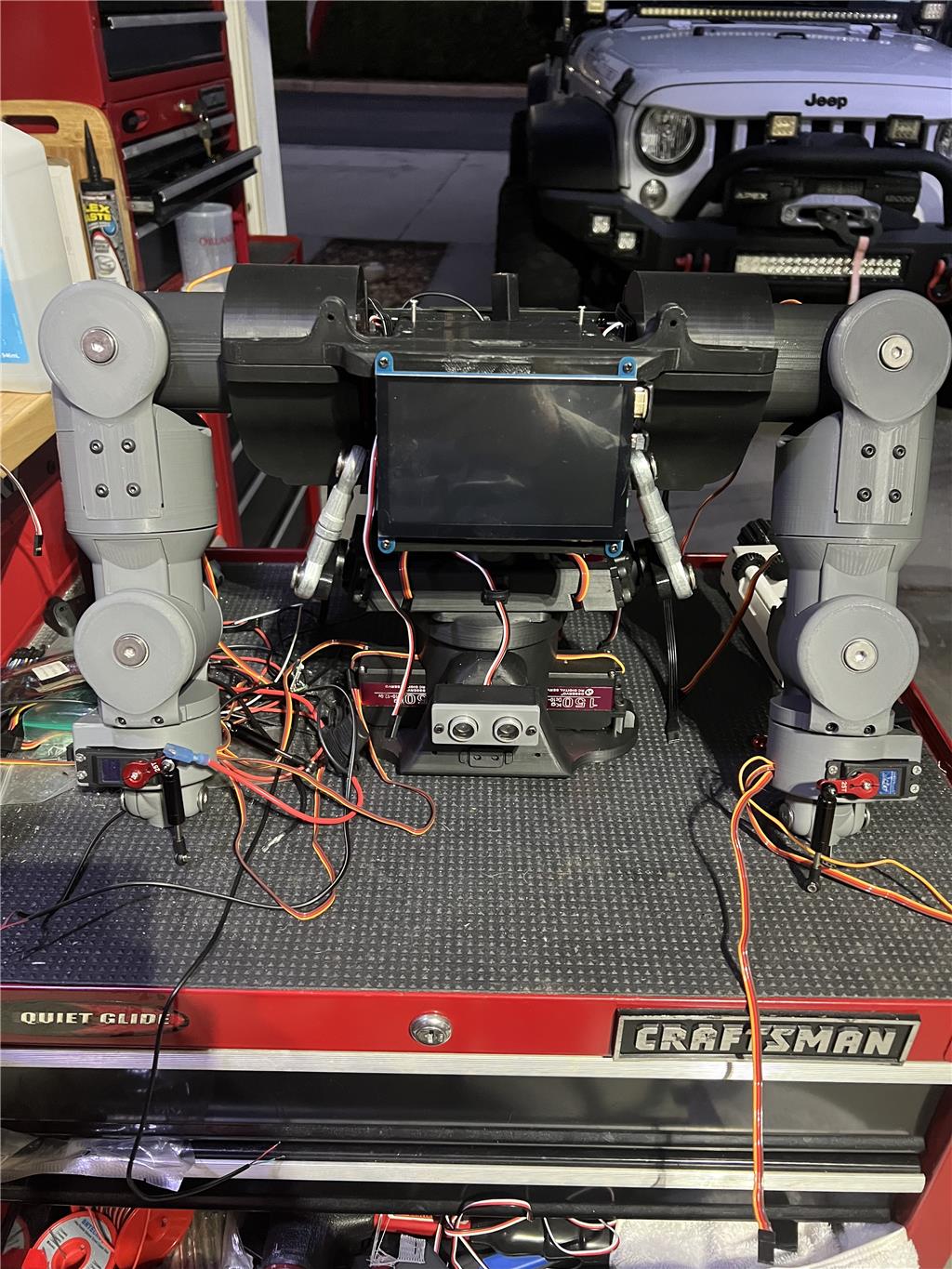

I have started building the robot's torso from the hips up, as shown in the pictures below. The shoulders are not entirely installed because I'm awaiting the arrival of the correct screws. Please excuse the messy workbench.

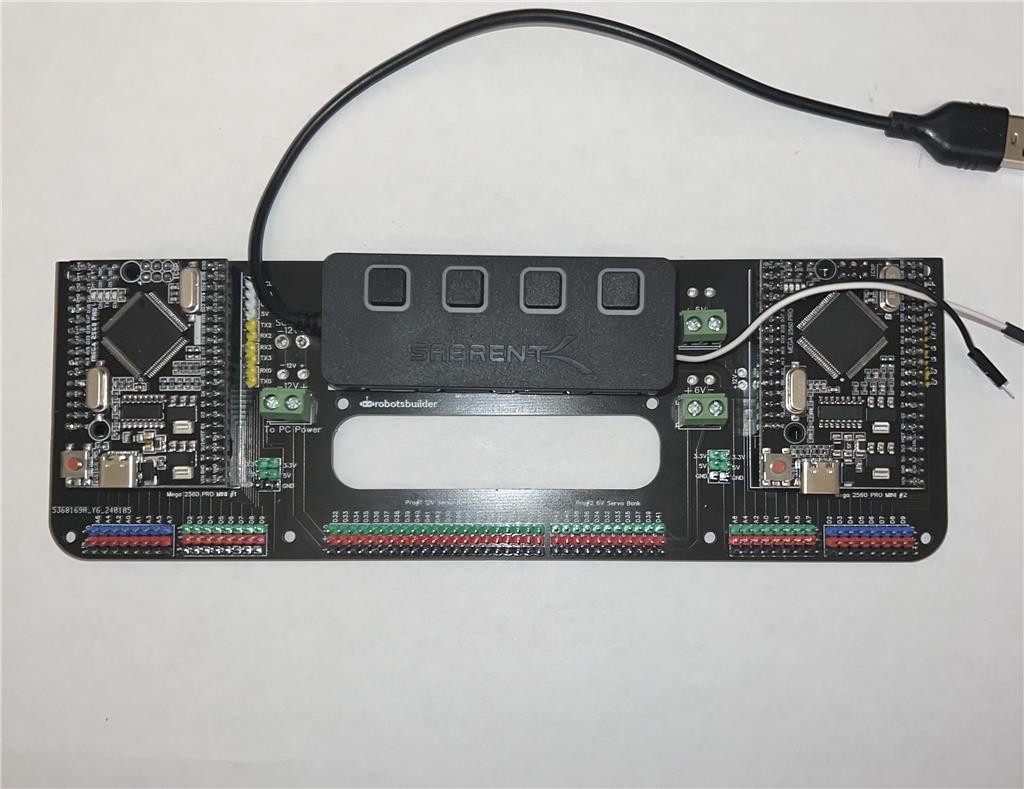

To support the robot, I've designed a set of custom boards with ARC firmware that will connect to the onboard PC, located in the chest area.

The above board will be mounted on the backplate between the two shoulder sockets and connected to a USB hub linked to the PC. Two additional USB cables, alongside servo power, run down to the boards in each hand. The USB camera and headboard will connect to the PC's other two USB ports. ARC will operate headlessly on the Windows 11 Pro PC using RDP for remote connectivity. Additionally, there's an MPU connected to the main board, which I plan to leverage with ARC.

I've added the shoulders and arms down to the wrists, ensuring they are strong and capable.

I've decided to create a Johnny 5-style head for my build featuring two cameras. Here's the completed head. I've included six more servos for the eye flaps, bringing the total count to 50 servos. The design incorporates a directional mic array and ultrasonic radar for enhanced audio and visual capabilities. For sound, two speakers are mounted in the head with an audio amplifier.

I've also opted to integrate a 5-inch touch display into the XR-1, as shown below.

This display will offer additional options for operator interaction using ARC remote controls.

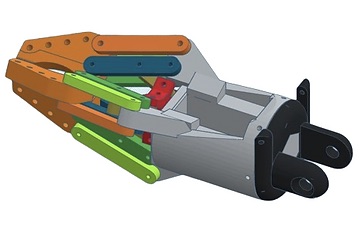

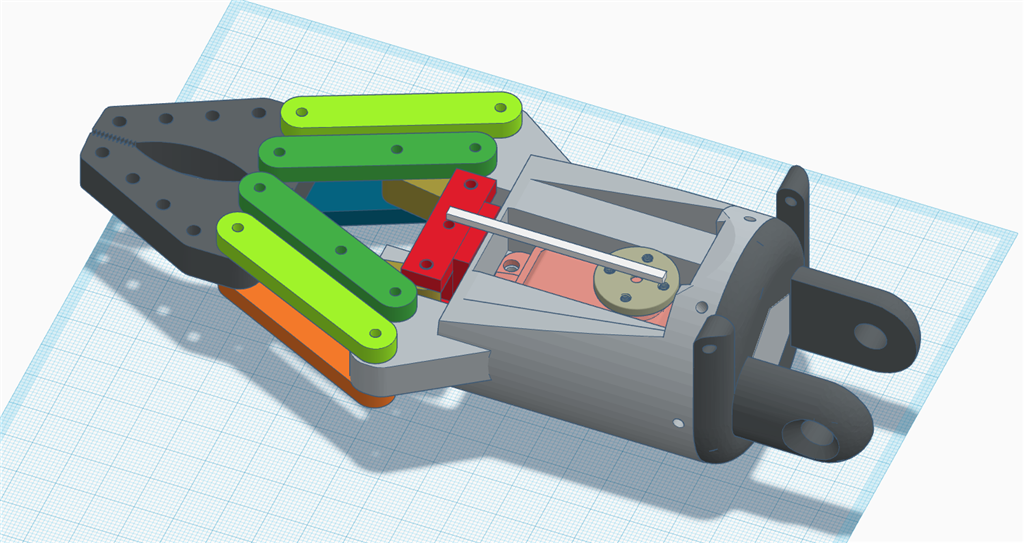

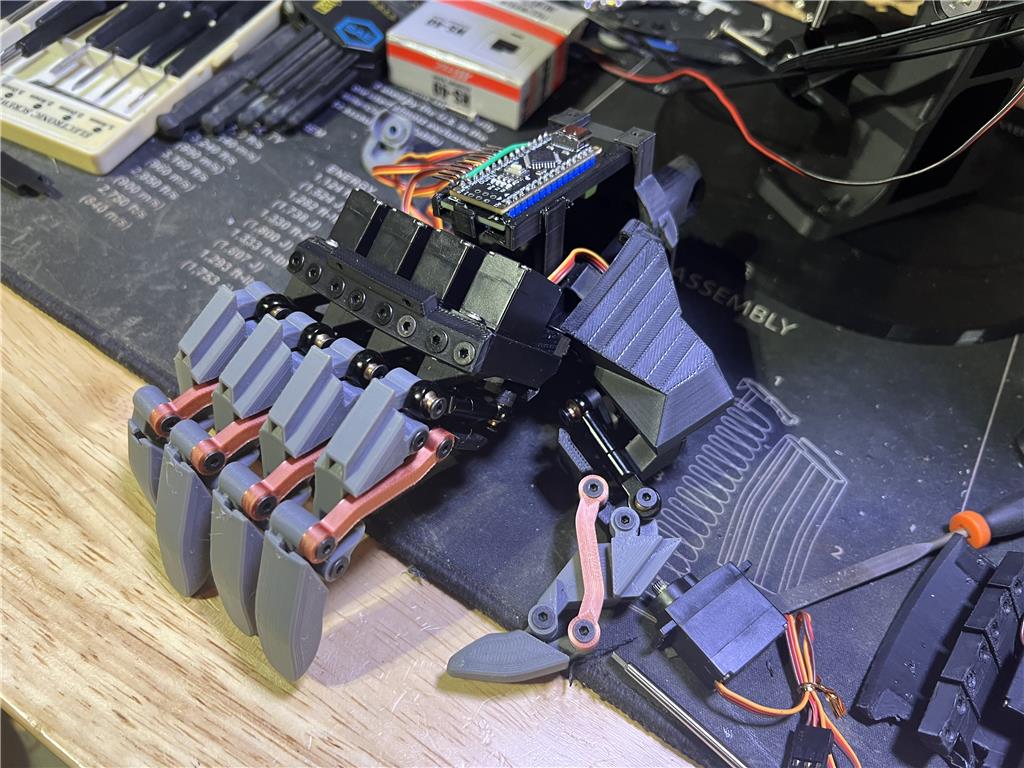

I've created two- and three-finger claw grippers as well as human-like hands, giving the XR-1 versatility in how it interacts with objects.

When placing a 360-degree LiDAR sensor like the RPLIDAR A1 on your XR-1 robot, there are several factors to consider to ensure optimal performance:

Placement Tips:

Height from the Floor:

Field of View:

Protection:

Integration with Other Sensors:

Testing and Adjustment:

Example Placement:

Conclusion:

The key is to ensure that the LiDAR has a clear and unobstructed view of the environment. Regular testing and adjustments will help you find the optimal placement for your specific use case. If you have any specific constraints or additional questions, feel free to ask!

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

@athena thanks. Can you also explain how a lidar like the RPLIDAR A1 works?

Also explain how SLAM works when used with a lidar. The best SLAM for Synthiam is The Better Navigator.

The system for merging sensors and navigation in Synthiam ARC is called NMS (Navigation Messaging System).

How LiDAR Works:

LiDAR (Light Detection and Ranging) is a remote sensing method that uses light in the form of a pulsed laser to measure distances. Here's a breakdown of how a LiDAR sensor like the RPLIDAR A1 works:

Emission of Laser Pulses:

Detection of Reflected Pulses:

Distance Calculation:

Data Collection:
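As a rough illustration of the data collection step, a 360-degree scan can be treated as a list of (angle, distance) pairs and converted to x/y points around the sensor. This is a minimal sketch, not the RPLIDAR SDK or anything from ARC: the function name `scan_to_points`, the 12 m range cutoff, and the angle convention (0 degrees straight ahead, counterclockwise positive) are all assumptions made for the example.

```python
import math

def scan_to_points(scan, max_range_m=12.0):
    """Convert (angle_deg, distance_m) readings into (x, y) points in meters.

    Assumed convention: 0 degrees is straight ahead along +x and angles
    increase counterclockwise. Readings with a non-positive distance or a
    distance beyond max_range_m are treated as invalid and skipped.
    """
    points = []
    for angle_deg, distance_m in scan:
        if distance_m <= 0 or distance_m > max_range_m:
            continue  # no return, or an out-of-range reading
        theta = math.radians(angle_deg)
        points.append((distance_m * math.cos(theta),
                       distance_m * math.sin(theta)))
    return points

# Hypothetical readings: 1 m straight ahead, 2 m to the left, one invalid.
points = scan_to_points([(0, 1.0), (90, 2.0), (180, 0.0)])
```

A real sensor may report angles clockwise or in different units, so the conversion would need to match whatever the driver actually outputs.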

How SLAM Works with LiDAR:

SLAM (Simultaneous Localization and Mapping) is a computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. Here's how SLAM works when used with a LiDAR sensor:

Data Acquisition:

Feature Extraction:

Pose Estimation:

Map Building:

Localization:

Loop Closure:
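To make the map building step more concrete, here is a small sketch that marks scan endpoints in a grid once the robot's pose is known. It covers only that one step and assumes the pose is already given; a full SLAM system also estimates the pose and handles loop closure. This is not how The Better Navigator is implemented internally. The name `update_grid`, the dictionary-based grid, and the 5 cm cell size are hypothetical choices for illustration.

```python
import math

def update_grid(grid, pose, scan, cell_size_m=0.05):
    """Count lidar hits per grid cell for one scan taken from a known pose.

    grid: dict mapping (col, row) -> hit count
    pose: (x_m, y_m, heading_rad) of the lidar in the map frame
    scan: iterable of (angle_deg, distance_m), angles relative to the heading
    """
    x, y, heading = pose
    for angle_deg, distance_m in scan:
        if distance_m <= 0:
            continue  # skip invalid readings
        theta = heading + math.radians(angle_deg)
        # Project the reading into map coordinates.
        hit_x = x + distance_m * math.cos(theta)
        hit_y = y + distance_m * math.sin(theta)
        cell = (math.floor(hit_x / cell_size_m),
                math.floor(hit_y / cell_size_m))
        grid[cell] = grid.get(cell, 0) + 1
    return grid

# Robot at (1.1, 0.1) facing +y, one reading 1 m straight ahead.
grid = update_grid({}, (1.1, 0.1, math.pi / 2), [(0, 1.0)], cell_size_m=0.5)
```

Cells that collect many hits over repeated scans are likely obstacles, which is the basic idea behind an occupancy grid.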

The Better Navigator and NMS in Synthiam ARC:

Synthiam's ARC platform provides advanced tools for integrating sensors and navigation using the Navigation Messaging System (NMS) and The Better Navigator SLAM skill.

The Better Navigator:

Navigation Messaging System (NMS):

Example Workflow:

Setup:

Mapping:

Navigation:

By leveraging The Better Navigator and NMS in Synthiam ARC, you can create a robust and accurate navigation system for your XR-1 robot, enabling it to explore and interact with its environment effectively.


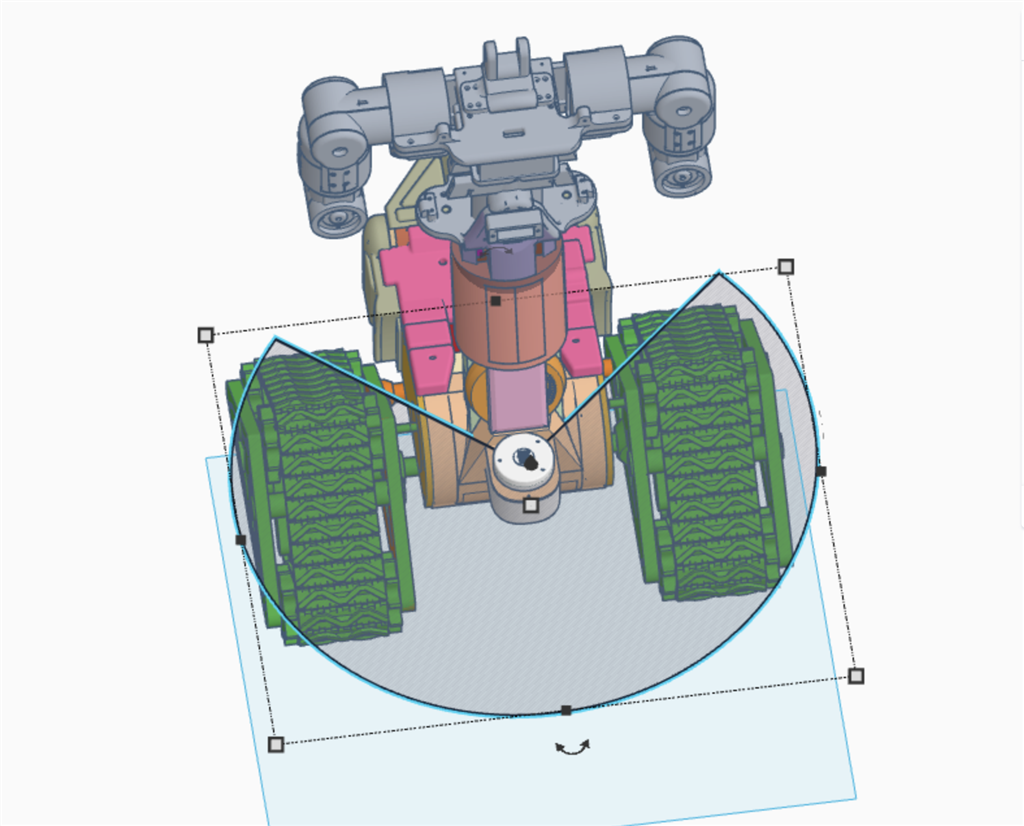

So what are your thoughts about this mounting location?

Your diagram has the lidar only seeing 180 degrees.

@athena explain how many degrees the lidar should be able to see for a reliable SLAM pose estimation. Explain that a small pipe or something in the way behind it can be okay.

Athena can’t see images. It’s best to describe the image in degrees visible to the lidar. She’ll be able to assist you more than me. All I can do is ask Athena to help you.

DJ, it sees a little over 255 degrees in this location.

No, it’s not. It’s 180, maybe 190. Draw lines directly out from the sensor. Anything past 90 degrees CW or 90 degrees CCW (-90) is just hitting the body.

Pretend the lidar is a spinning flashlight, except the beam is a very small laser, so it only looks directly out at each angle. You’d have to move your lidar about 30 cm out from the body to see 270 degrees (just guessing numbers here, but Athena can explain more).

@athena can explain the math to you. The width of the body and how far the lidar sits out in front of the body determine the total angle the lidar can see, assuming the lidar is placed in the center of the front of the body.
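The geometry described here can be sketched with a little trigonometry. This is a simplified model under stated assumptions, not a measurement of the XR-1: the body is treated as a flat front face of a given width, the lidar sits on the centerline some distance in front of that face, and only the two front corners limit the view. With those assumptions, the visible angle is 180 degrees plus twice the angle from the lidar back to a corner. The function names and the example widths are hypothetical.

```python
import math

def lidar_fov_degrees(forward_offset_m, body_width_m):
    """Unobstructed field of view for a centered, forward-mounted lidar.

    forward_offset_m: how far the lidar sits in front of the body's front face
    body_width_m: width of the body at lidar height
    """
    if forward_offset_m < 0 or body_width_m <= 0:
        raise ValueError("offset must be >= 0 and width must be > 0")
    half_width = body_width_m / 2
    # Extra angle gained past 90 degrees on each side before hitting a corner.
    extra_per_side = math.degrees(math.atan2(forward_offset_m, half_width))
    return 180.0 + 2.0 * extra_per_side

def offset_for_fov(target_fov_deg, body_width_m):
    """Forward offset needed to reach a target field of view (180 to < 360)."""
    if not 180.0 <= target_fov_deg < 360.0:
        raise ValueError("target must be in [180, 360) degrees")
    extra_per_side = math.radians((target_fov_deg - 180.0) / 2.0)
    return (body_width_m / 2) * math.tan(extra_per_side)

flush = lidar_fov_degrees(0.0, 0.40)   # mounted flush with the front face
wide = lidar_fov_degrees(0.20, 0.40)   # 20 cm out on a 40 cm wide body
needed = offset_for_fov(270.0, 0.60)   # offset for 270 degrees, 60 cm body
```

In this model a flush-mounted lidar sees 180 degrees, and reaching 270 degrees requires an offset equal to half the body width, so the 30 cm guess would fit a body roughly 60 cm wide. Arms, shoulders, or a curved torso would change the numbers, so a real mount should still be checked against the actual scan data.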

This shows 260 degrees of clear line of sight. If I added a second one in the back, does ARC merge the two data streams together automatically?