smiller29

The Birth Of The XR-1 DIY Robot

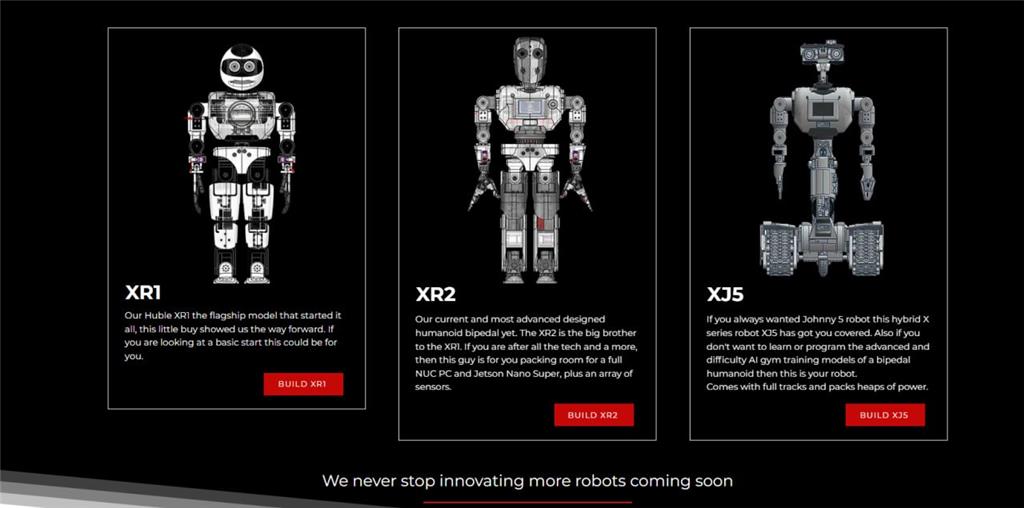

Introducing the Updated XJ-5: A New Class of Hobby Robots

The XJ-5 robot platform, available at Robots Builder, is known for its user-friendly design, emphasizing accessibility and ease of assembly. Designed to make robotics more approachable, the XJ-5 is meticulously engineered for simple 3D printing and assembly using standard components such as servos and bearings.

Our objective is to simplify the building process so that enthusiasts and robot hobbyists of all skill levels can engage in constructing their own robots. We've dedicated significant time to perfecting the design, enabling users to quickly progress to the exciting tasks of programming and teaching their robots using Synthiam's ARC software. The XJ-5 is highly customizable, featuring a variety of hands and heads to allow personalization of each creation. To foster a collaborative and open community, we're proud to announce that the XJ-5 is entirely open source, inviting users to contribute, modify, and share their innovations globally. Get ready to embark on a journey of creativity and exploration with the XJ-5!

For more information, check out Robots Builder.

As a creator of the XJ-5 and our other XR series, I will be utilizing the Synthiam ARC platform for robot control, and I hope others here will join me in developing an ARC project to support the XJ-5 robot. As of January 9th, 2024, we have started posting files for 3D printing. Meanwhile, we are developing documentation and updating the website, so please check back regularly.

My goal is to develop a walking, talking, listening, and seeing robot the size of a small child using ARC and its various features. As I progress, I will be sharing more about my efforts here.

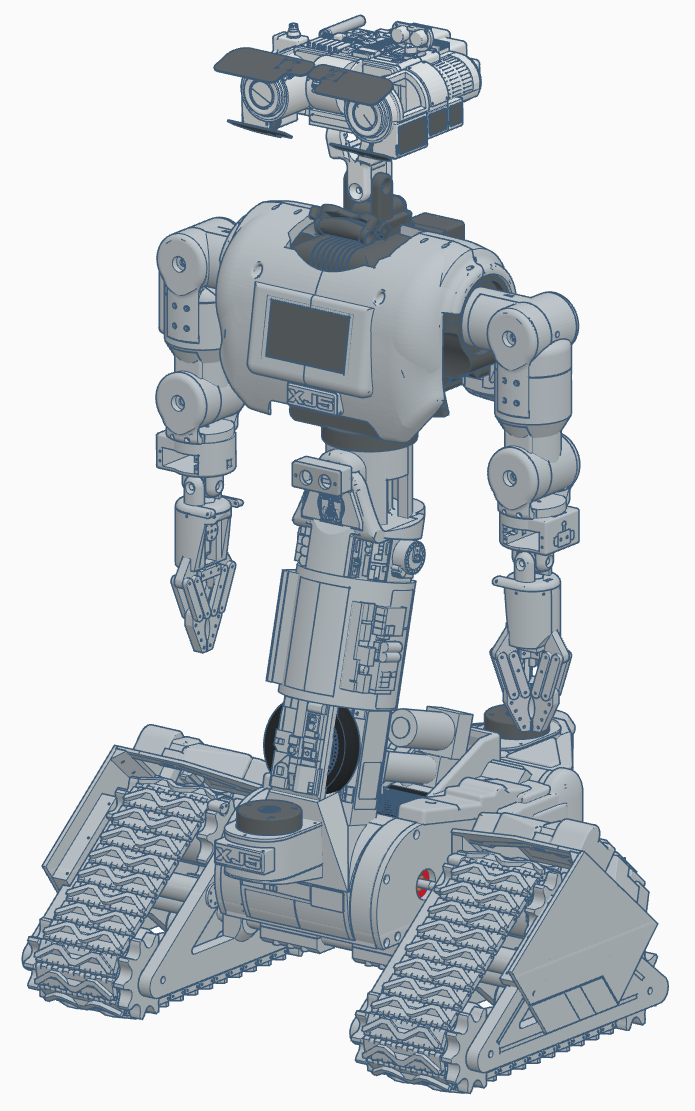

Now, let's delve into the project, focusing on the new and improved XR-1, the XJ-5, which I have been designing and building over the past year.

As a young enthusiast, I loved the movie Short Circuit and was inspired by the Johnny 5 robot, motivating me to create a similar robot. Although not a direct replica of Johnny 5, it embodies some of its iconic features. About a year ago, DJ suggested opting for a robot with wheels or tracks over a bipedal robot due to the complexity of achieving stable walking, a challenge I am familiar with from our XR2 project. But that's a story for another time.

Now, in May 2025, I've nearly completed the assembly and will soon start implementing ARC and the AI brain I've been developing to enhance ARC support. Expect more updates soon.

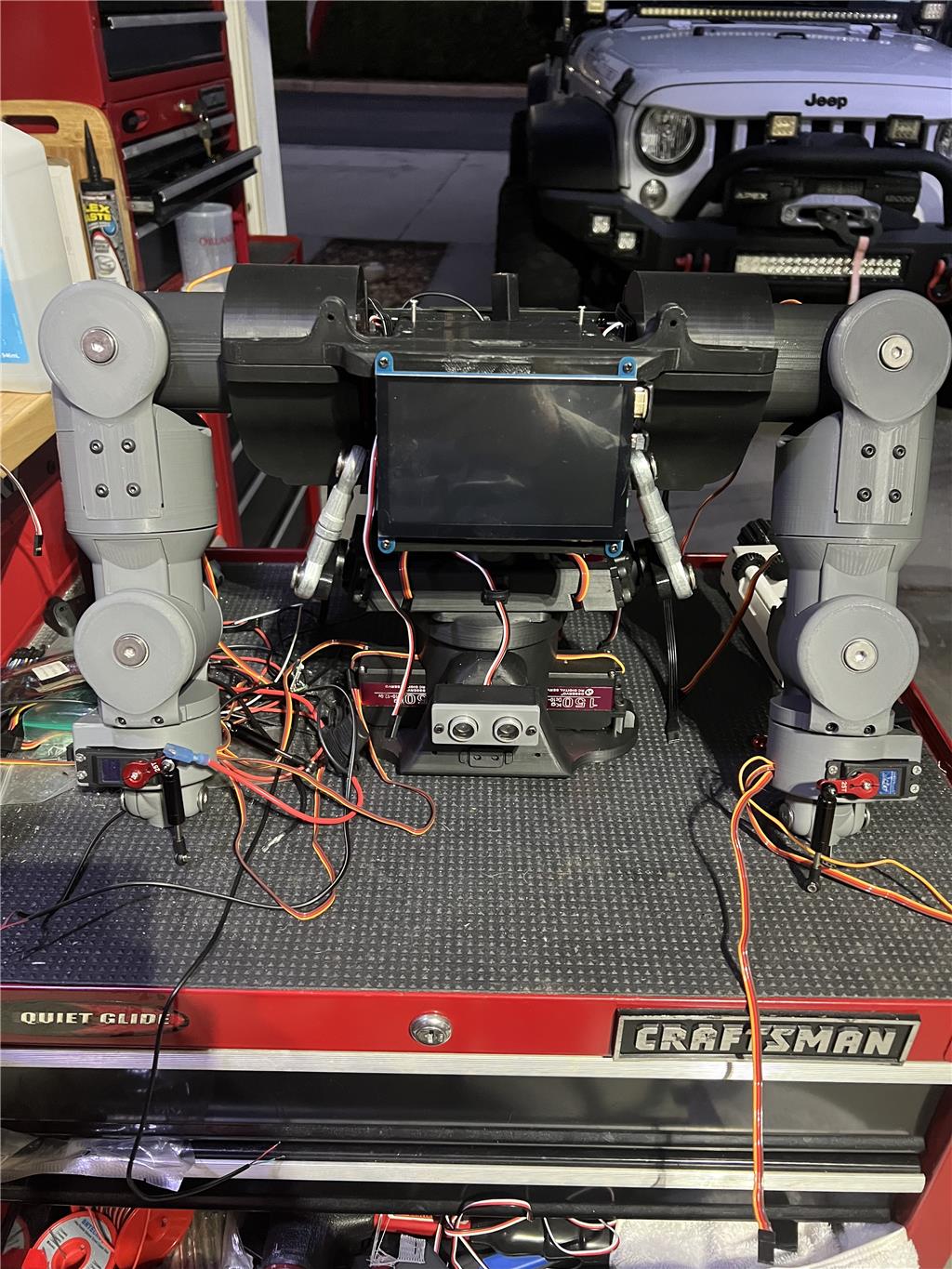

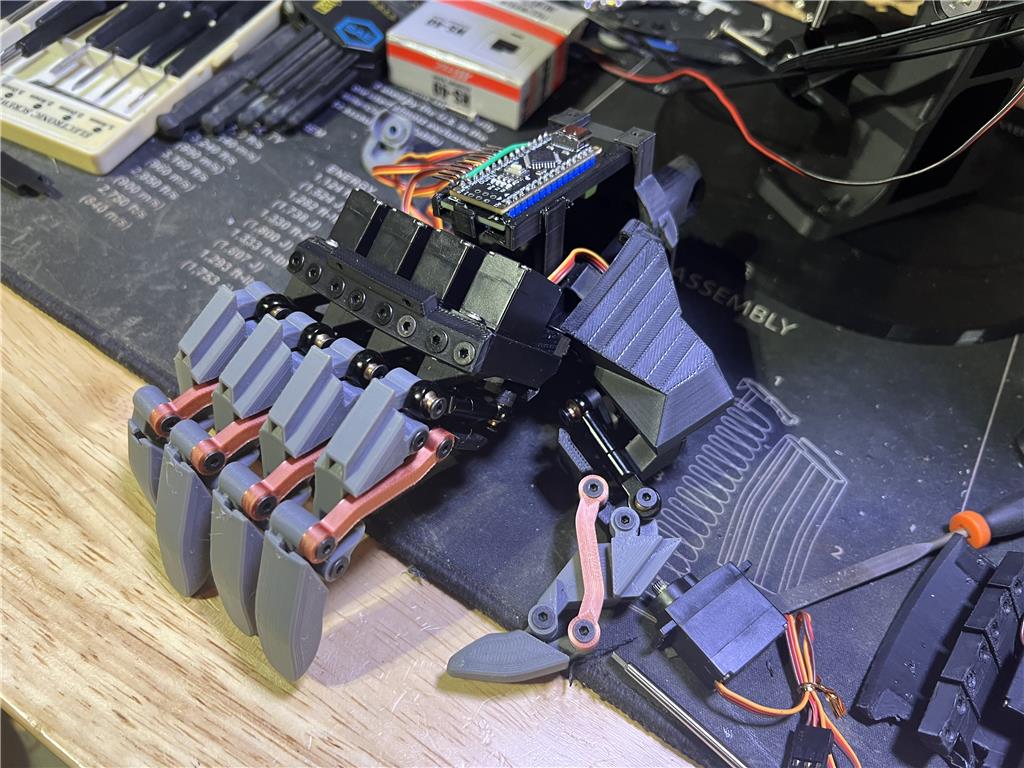

I have started building the robot's torso from the hips up, as shown in the pictures below. The shoulders are not entirely installed because I'm awaiting the arrival of the correct screws. Please excuse the messy workbench.

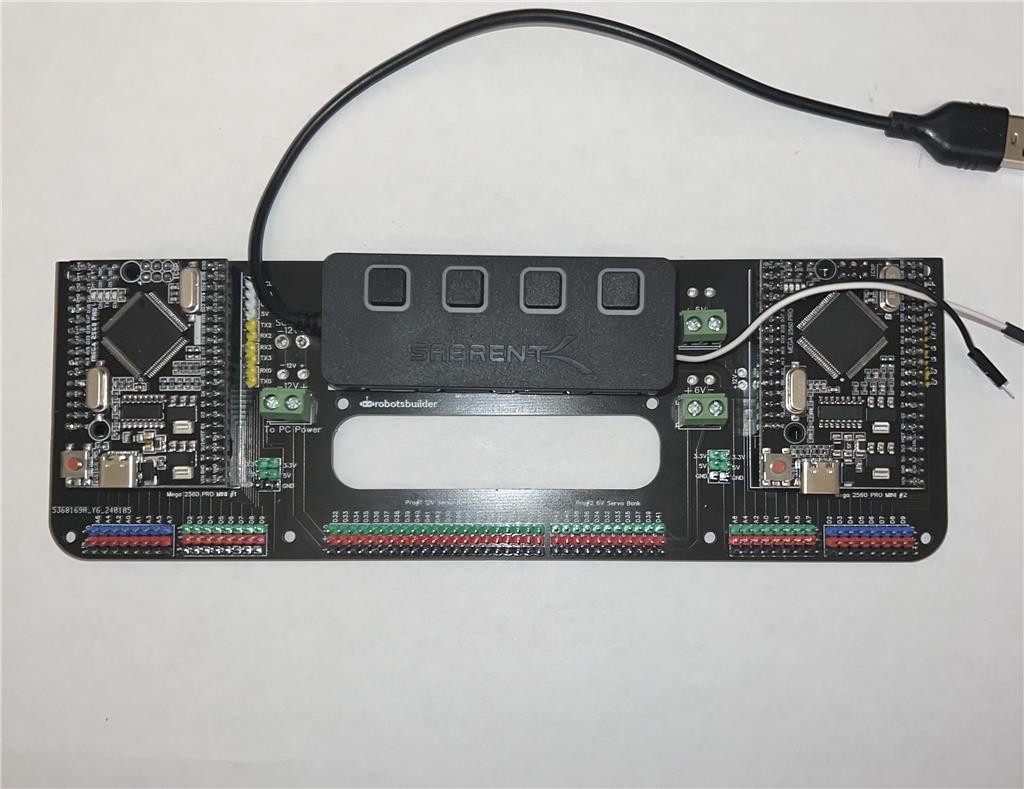

To support the robot, I've designed a set of custom boards with ARC firmware that will connect to the onboard PC, located in the chest area.

The above board will be mounted on the backplate between the two shoulder sockets and connected to a USB hub linked to the PC. Two additional USB cables, alongside servo power, run down to the boards in each hand. The USB camera and headboard will connect to the PC's other two USB ports. ARC will operate headlessly on the Windows 11 Pro PC using RDP for remote connectivity. Additionally, there's an MPU connected to the main board, which I plan to leverage with ARC.

I've added the shoulders and arms down to the wrists, ensuring they are strong and capable.

I've decided to create a Johnny 5-style head for my build featuring two cameras. Here's the completed head. I've included six more servos for the eye flaps, bringing the total count to 50 servos. The design incorporates a directional mic array and ultrasonic radar for enhanced audio and visual capabilities. For sound, two speakers are mounted in the head with an audio amplifier.

I've also opted to integrate a 5-inch touch display into the XR-1, as shown below.

This display will offer additional options for operator interaction using ARC remote controls.

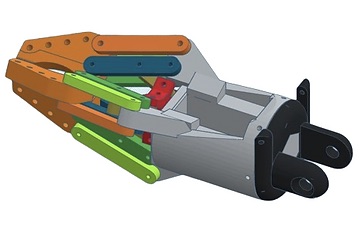

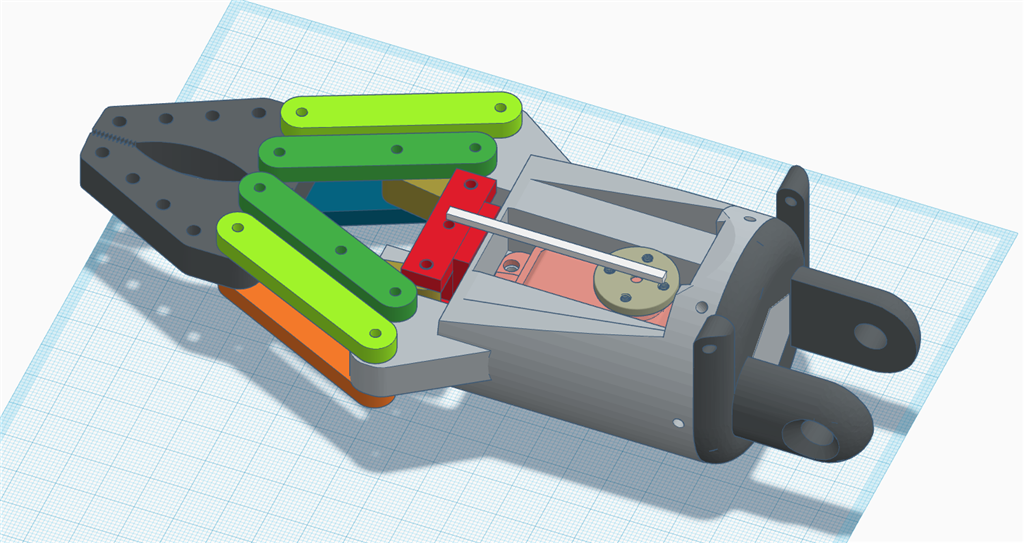

I've created both two- and three-finger claw grippers as well as human-like hands, giving the XR-1 versatility in how it interacts with objects.

Pretty cool robot! How tall is it?

@Jeremie, he is about 105 cm (42 inches) tall. I think he is currently at 44 DOF; depending on what you do in the head, it could be more.

The current design will have a very small but powerful Windows 11 Pro PC on board, with a USB hub connecting an Arduino Nano in the head, a Nano in each hand, and two Mega Pros on the back for everything else. It will also have other sensors.
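For anyone who wants to picture the controller layout described above, here is a minimal Python sketch of it as a plain data structure. It is only an illustration: the `Controller` class, the `build_topology` and `boards_by_type` helpers, and the device labels are all hypothetical names made up for this example, and nothing here is part of ARC or the XR-1 firmware.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Controller:
    label: str     # hypothetical label, used only in this sketch
    board: str     # board type as named in the post
    location: str  # where the board sits on the robot


def build_topology() -> list[Controller]:
    """Boards attached to the onboard PC's USB hub, as listed in the post."""
    return [
        Controller("head", "Arduino Nano", "head"),
        Controller("left_hand", "Arduino Nano", "left hand"),
        Controller("right_hand", "Arduino Nano", "right hand"),
        Controller("back_a", "Mega Pro", "back"),
        Controller("back_b", "Mega Pro", "back"),
    ]


def boards_by_type(controllers: list[Controller]) -> Counter:
    """Count how many boards of each type the layout uses."""
    return Counter(c.board for c in controllers)


if __name__ == "__main__":
    topology = build_topology()
    for c in topology:
        print(f"{c.label:<11} {c.board:<13} {c.location}")
    print(dict(boards_by_type(topology)))
```

A list like this can double as a checklist when wiring the hub: five boards in total means the hub needs at least five free ports, before counting the camera or any other USB sensors.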

Very cool. Great work. I took a look at your BOM, and I am glad you used hobby servos and gave links to AliExpress. Every time I go to build a project, it’s just "buy a dozen of these $1500 Dynamixel servos."

Nice work smiller29

Anyway, section by section and part by part, he is being uploaded. We should be at 80% by the end of the week, excluding the head and hands.

The head is very close to being completed. The hands are going to take a bit longer, as we are working to make a strong, functional set of hands, and at child size they are smaller, which creates a challenge. We have been working on many concepts but have not yet decided which one to use.

We have added a link to Synthiam on the website as a software solution for the XR-1, so I hope you get some added traffic to your site.

I am hopeful that the Arduino Mega Firmware is going to work on the Mega Pro Mini without having to change it.

My testing starts today. If I find issues, I hope I can get some help from DJ to fix things so these boards are supported, because our project depends on them.

Looks really exciting. Thanks for sharing. Good luck with the roll out.