After some research, I have learned that robots can learn through deep reinforcement learning, imitation learning, programming by demonstration and unsupervised learning. This is cool and complex stuff.

But something came to mind regarding this. For about two (or maybe three) years now, companies have been developing VR technology, which lets you do several things (including playing video games). You can control everything, including game characters, with your body while standing in a room.

This made me think: is it possible to control a robot through VR technology?

Here's an example. Let's say I build a humanoid robot (using EZ-Robot). I connect the robot to the VR system (since it is wireless, there are no cables) and turn every device on. When I move a part of my body, the robot does the same. If I walk forward, the robot walks forward too.

Is this possible? If so, what level of articulation do you think my robot could reach?

Other robots from Synthiam community

Lumpy's Lumpy's Wall-E

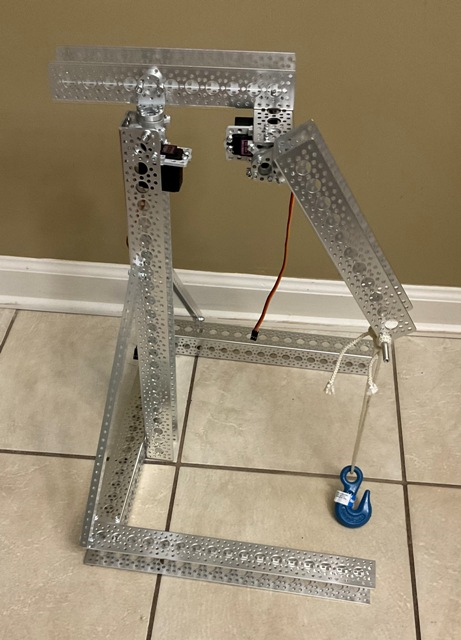

Dave's New Building System At Servocity, Gobilda

Why did you select the Project Showcase tag for this post? This is not a robot post; you are not showcasing a robot. This is a question, so the other tags are correct, but Project Showcase is not.

If you have an EZ-Robot, you can use either the Oculus Rift or the Vuzix; both are already supported.

Here is a direct link to the Oculus Rift plugin: https://synthiam.com/redirect/legacy?table=plugin&id=59

And here is the link for the Vuzix: https://synthiam.com/Tutorials/Help.aspx?id=166

Oh man, you are right. Thank you very much for pointing that out; I didn't notice that I had made that mistake.

Those links are really useful; they're exactly what I need for my robot. Now I'm wondering whether there is an open thread for the Oculus Rift plugin. Also, could that plugin let me control the robot's whole body?

You can control as many servos as you wish. ARC lets you add up to 72 servos per axis. The VR system has 2 axes, up/down and left/right, so you can connect 72 servos to each axis.
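
To illustrate what driving several servos from one VR axis could look like, here is a minimal Python sketch. It is not ARC's API or the plugin's actual code: it assumes the headset reports each axis as an angle in degrees and that servo positions run from 1 to 180. The port labels `D0`/`D1` and the functions `angle_to_servo` and `fan_out` are hypothetical. An `invert` flag lets a servo mounted the other way round move in the opposite direction.

```python
# Hypothetical sketch, NOT the ARC/plugin API.
# Assumptions: head angles arrive in degrees; servo positions range 1..180.

SERVO_MIN = 1
SERVO_MAX = 180


def angle_to_servo(angle_deg, angle_range=90.0, invert=False):
    """Map a head angle in [-angle_range, +angle_range] degrees to a servo position."""
    # Clamp so extreme head turns never push the servo past its limits.
    clamped = max(-angle_range, min(angle_range, angle_deg))
    if invert:
        clamped = -clamped  # mirror the motion for a servo mounted the other way
    fraction = (clamped + angle_range) / (2 * angle_range)  # 0.0 .. 1.0
    return round(SERVO_MIN + fraction * (SERVO_MAX - SERVO_MIN))


def fan_out(angle_deg, servos):
    """Drive every servo assigned to one axis from the same angle.

    `servos` is a list of (port_label, invert) pairs; port labels are hypothetical.
    """
    return {port: angle_to_servo(angle_deg, invert=inv) for port, inv in servos}


if __name__ == "__main__":
    # Two servos on the left/right axis, the second one mounted mirrored.
    yaw_servos = [("D0", False), ("D1", True)]
    print(fan_out(45.0, yaw_servos))  # → {'D0': 135, 'D1': 46}
```

Adding more servos to an axis is just adding entries to the list, which mirrors the idea of one axis feeding many servos.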

The Multi Servo button is universal across all ARC controls that use servos. You can find out more in the Learn section here: https://synthiam.com/Tutorials/Lesson/49?courseId=6

We have 3 axes (X, Y and Z), which would mean 216 servos (3 × 72)! That's a lot of servos. The VR system has 2 axes, which means 144 servos (2 × 72). Still a lot of servos.

Excellent. Just...excellent!

Is the Multi Servo button useful if I use more than 2-4 servos?

The Oculus Rift only tracks head movement, so you could use it for any motion connected to the robot's head. But I guess it will also ship with controllers now, which will allow tracking your hand movements. Those would need to be connected to the ARC application somehow!

Since your servos don't care about 3D space, only about rotation, I'm not sure this is technically what you are really looking for!
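
To make the rotation point concrete: headsets commonly report orientation as a quaternion, while a servo only needs an angle. Below is a minimal Python sketch of that conversion, assuming a unit quaternion (w, x, y, z) and a Z-up, ZYX (yaw-pitch-roll) convention. Real headset SDKs may use different axis conventions, so the formulas would need adapting. Roll is discarded because the VR setup discussed here uses only two axes (up/down and left/right). `quaternion_to_yaw_pitch` is a hypothetical helper, not part of any plugin.

```python
import math


def quaternion_to_yaw_pitch(w, x, y, z):
    """Return (yaw, pitch) in degrees from a unit quaternion.

    Assumes a Z-up, ZYX (yaw-pitch-roll) convention; roll is ignored
    because only two servo axes are being driven.
    """
    # Yaw: rotation about the vertical (Z) axis -> left/right servos.
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    # Pitch: rotation about the lateral (Y) axis -> up/down servos.
    # Clamp to [-1, 1] so floating-point rounding can't break asin().
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    return math.degrees(yaw), math.degrees(pitch)


if __name__ == "__main__":
    # Head turned 90 degrees to the side: rotation about Z by 90 degrees.
    half = math.radians(90.0) / 2.0
    print(quaternion_to_yaw_pitch(math.cos(half), 0.0, 0.0, math.sin(half)))
```

The example should print a yaw of approximately 90 and a pitch of approximately 0. Those angles could then be scaled into servo positions.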

I guess what you would want are those new robotic servos that can talk back, sending their rotation values to your robot while they are attached to your body!

I saw a project like this...let me check!

I am totally sure it can be done with ARC. This platform is awesome, and you will always get help from within this community!

https://www.youtube.com/watch?v=poPeO7xxA90

https://www.youtube.com/watch?v=9gOJZdMGZZE&list=PLpwJoq86vov-v97fBMRfm-A9xv8CzX8Hn