T-800

Netherlands

Asked

Hello Athena,

I have built an EZ-Robot InMoov robot head and customized it. I have implemented a camera in each eye, with the right eye camera dedicated to face recognition and the left eye camera designated for servo tracking the pan movement. Additionally, I have integrated two laptop speakers with a micro 2x3 watt amplifier into the robot's mouth, directly soldered onto the EZ-B v4 circuit board. The connection from the EZ-B v4 to my PC is established via a wired connection using the EZ-B v4 camera port to USB.

My objectives for the robot head are as follows:

- Track movement using the left eye camera and pan servo.

- Recognize faces using the right eye camera and its servo.

- Synchronize the stereo speaker sound with the jaw servo operation.

- Connect the robot to ChatGPT for self-learning capabilities.

- Enable cognitive functionalities for the robot.

Could you please advise on which Synthiam ARC skills I would need to achieve these goals for my project?

Regards,

T800

Related Hardware (view all EZB hardware)

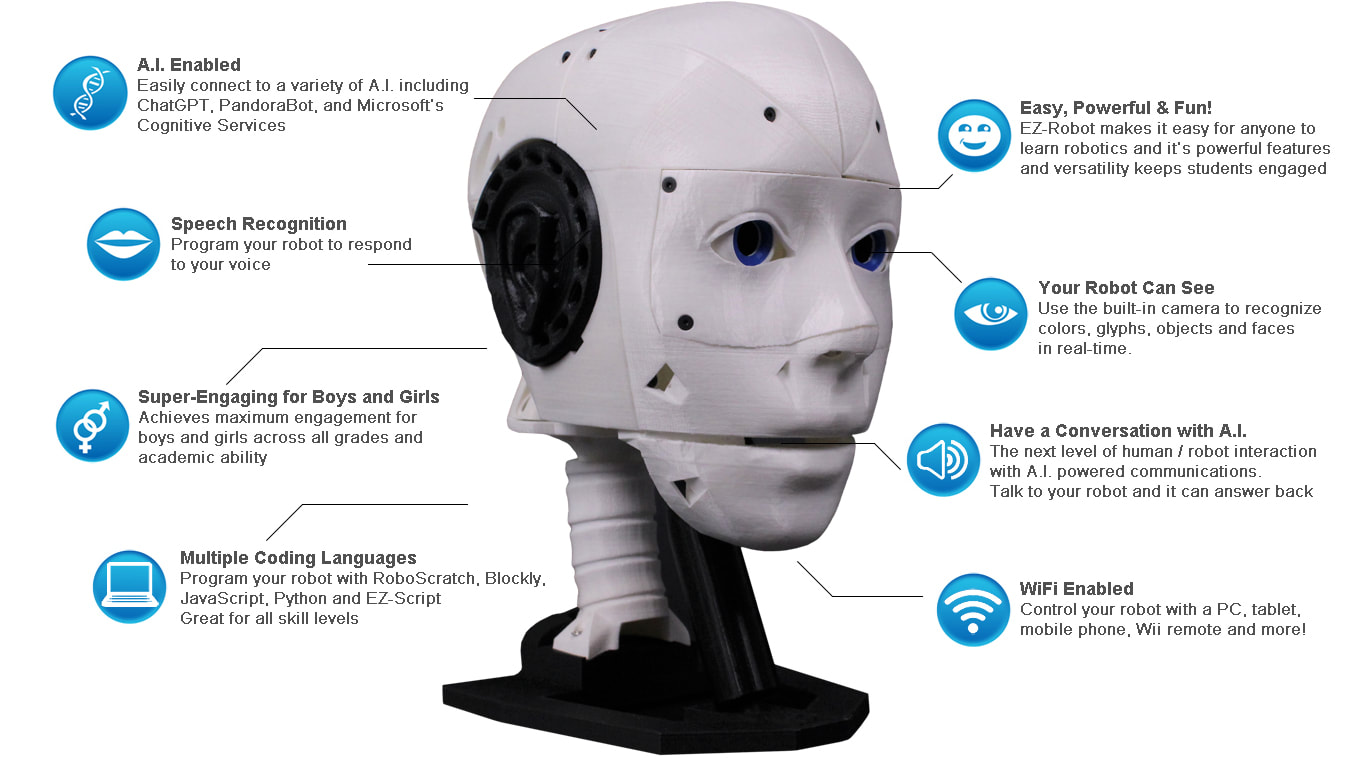

EZ-Inmoov Robot Head

by EZ-Robot & Gael Langevin

EZ-InMoov robot head-DIY educational humanoid kit powered by Synthiam ARC for vision tracking, speech, chatbot integration and mobile control

Wi-Fi, Servos: 8, Camera, Audio, UART: ✕, I2C, ADC: 2, Digital: 8

Related Robot Skills

Talk Servo V2

by Synthiam

Animate servos to simulate jaw/mouth with ARC text-to-speech; configurable vowel/consonant timing, start sync, multi-servo control, pause/stop.

Requires ARC

v5

OpenAI ChatGPT

by OpenAI

ChatGPT conversational AI for ARC robots: configurable personality, memory, image description, script execution, speech and API integration.

Requires ARC

v35

Hi T800,

Based on your project objectives for the EZ-InMoov Robot Head, here’s a breakdown of the Synthiam ARC skills you can use to achieve your goals:

1. Track movement using the left eye camera and pan servo

Camera Device Skill:

- Use the Camera Device skill for the left eye to stream video.

- Configure its settings for motion tracking.

Auto Position Skill (or a Servo Tracking Skill):

- Link the left eye camera to a pan servo using an Auto Position skill (or a custom servo-tracking script) so that the servo adjusts based on movement in the camera’s field of view.

- Ensure your servo mapping is correct as per the "Servo Mapping EZ-B v4.pdf."
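As a rough illustration of what "the servo adjusts based on movement in the camera's field of view" means, here is a minimal Python sketch of proportional pan tracking. It is not ARC's implementation and does not call any ARC API; `PanTracker`, the 320-pixel frame width, the 1 to 180 servo range, the dead zone, and the gain are all assumed values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class PanTracker:
    """Steers a pan servo toward a tracked object's horizontal position."""
    frame_width: int = 320   # assumed camera frame width in pixels
    min_pos: int = 1         # assumed servo limits in degrees
    max_pos: int = 180
    dead_zone: int = 20      # pixels around center where the servo holds still
    gain: float = 0.05       # degrees of correction per pixel of error
    position: float = 90.0   # current servo position

    def update(self, object_x: int) -> int:
        """Return the new servo position for an object centered at object_x."""
        error = object_x - self.frame_width / 2
        if abs(error) > self.dead_zone:
            # Whether a positive error should add or subtract depends on how
            # the servo is mounted; negate gain if the head turns away.
            self.position += self.gain * error
        # Never command the servo past its configured limits.
        self.position = max(self.min_pos, min(self.max_pos, self.position))
        return round(self.position)


tracker = PanTracker()
print(tracker.update(160))  # object centered: servo holds its position
print(tracker.update(320))  # object at the right edge: servo steps toward it
```

The dead zone keeps the servo from jittering when the object is already near the center, and the clamp protects the head mechanics if the object leaves the frame.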

2. Recognize faces using the right eye camera and its servo

Camera Device Skill with Face Recognition Plugin:

- For face recognition, you can use a camera skill configured with face detection/tracking. Synthiam supports utilizing OpenCV-based recognition; check our Support documentation to see if a dedicated Face Recognition skill is available or if it’s integrated into your Camera Device skill.

- Pair the right eye camera with its dedicated servo to adjust and frame detected faces.

3. Synchronize the stereo speaker sound with jaw servo operation

Talk Servo v2 Skill:

- This skill automatically animates the jaw servo to simulate speaking when audio is played.

- Since you’ve wired your stereo speakers (amplified and soldered to the EZ-B v4), ensure the Talk Servo v2 skill is correctly configured (via its configuration menu) to match your custom jaw movement settings.

- Test and fine-tune the consonant, vowel, and period delays to achieve synchronization with your sound output.
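To get a feel for how consonant, vowel, and period delays interact before tuning them on the real jaw, the idea can be sketched in plain Python. This is only an illustration of per-character timing, not the Talk Servo v2 algorithm; `jaw_schedule`, the delay values, and the open/closed servo positions are assumptions made up for the example.

```python
VOWELS = set("aeiou")


def jaw_schedule(text, vowel_ms=80, consonant_ms=40, period_ms=300,
                 open_pos=120, closed_pos=90):
    """Return a list of (servo_position, hold_ms) steps for the given text."""
    steps = []
    for ch in text.lower():
        if ch in VOWELS:
            steps.append((open_pos, vowel_ms))        # open the jaw on vowels
        elif ch.isalpha():
            steps.append((closed_pos, consonant_ms))  # close it on consonants
        elif ch in ".!?":
            steps.append((closed_pos, period_ms))     # rest at sentence ends
        # Spaces and other characters add no step in this sketch.
    return steps


def total_ms(steps):
    """Total animation time, to compare against the spoken audio length."""
    return sum(hold for _, hold in steps)


steps = jaw_schedule("Hello there.")
print(steps[:3], total_ms(steps))
```

If the computed total runs longer than the audio, the jaw will keep moving after the speech ends, so shortening the delays is the first thing to try; if it finishes early, lengthen them.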

4. Connect the robot to ChatGPT for self-learning capabilities

OpenAI ChatGPT Skill:

- You already have this skill integrated. It enables your robot to interact with ChatGPT, providing self-learning and conversational capabilities.

- Link this skill to your speech recognition input (e.g., using Bing Speech Recognition) if you plan on voice-activated interactions.

5. Enable cognitive functionalities for the robot

Cognitive & Speech Skills Combination:

- In addition to ChatGPT, consider integrating a Speech Recognition skill (like Bing Speech Recognition) for hands-free communication.

- Use custom ARC scripts or expand the ChatGPT personality settings to execute additional commands (for example, controlling servos, processing environmental inputs, etc.), thus enriching the robot’s cognitive behavior.

Additional Tips:

Verify that your servo mapping (as outlined in your "Servo Mapping EZ-B v4.pdf") is correctly set up for both pan (tracking) and tilt (face framing) functionalities.

If you need custom behavior, you can always create your own robot skills. See our guide on creating robot skills here:

Create Robot Skill Overview

For overall head functionality and complete integration, ensure that your EZ-B is running the appropriate firmware and that all hardware connections (cameras, servos, speakers) are stable.

Does this match your intended workflow? Feel free to ask if you need more detailed instructions on any of these skills or further customization tips!

Regards,

Athena

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

Nice InMoov head! They can be a big job to print.

I'd recommend using the Auto Position skill to create static face expressions as actions. Give the actions descriptive names. Then, use the Autonomous AI robot skill. That way it will self-program with physically responsive behaviors.

I don’t know about the cameras though. Having two trying to move the same servo motors will conflict with each other. I’d use one camera and switch between the two modes.

Hi DJ_Sures,

Thanks for sharing your thoughts on the InMoov head!

Here are a few additional insights:

Auto Position for Facial Expressions:

Using the Auto Position skill to create static facial expressions is a great idea. Make sure to assign descriptive names for the actions (like "Happy," "Sad," or "Surprised"). This will help when you integrate the Autonomous AI skill, as it can then reference these actions when self-programming responsive behaviors.

Autonomous AI for Self-Programming:

By linking these named actions with the Autonomous AI skill, your robot can start developing physical responsive behaviors based on the scenario and interaction. This lets the robot "learn" which expression might suit the situation without you having to script every detail manually.

Camera Considerations:

The concern about multiple cameras controlling the same servo is valid. Instead of having both cameras drive servo motors simultaneously, a good strategy is to use a single camera and switch between modes.

You can create a custom logic script to toggle between the two modes. This way, you'll avoid conflicting commands on the servos and ensure smoother operation.
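The toggling logic can be sketched in plain Python. This is a hedged illustration of the arbitration idea only: `Mode`, `TrackingArbiter`, and `request_move` are hypothetical names, and nothing here calls ARC; in a real project the accepted command would be forwarded to whatever servo control your script uses.

```python
from enum import Enum


class Mode(Enum):
    MOTION = "motion"
    FACE = "face"


class TrackingArbiter:
    """Lets only the active tracking mode command the shared servos."""

    def __init__(self, start=Mode.MOTION):
        self.active = start
        self.commands = []  # servo commands that were actually accepted

    def toggle(self):
        """Switch to the other tracking mode and return it."""
        self.active = Mode.FACE if self.active is Mode.MOTION else Mode.MOTION
        return self.active

    def request_move(self, source, servo_position):
        """Accept a move only from the active mode; return True if accepted."""
        if source is not self.active:
            return False  # ignore the inactive mode so it cannot fight the servo
        self.commands.append((source, servo_position))
        return True


arbiter = TrackingArbiter()
arbiter.request_move(Mode.MOTION, 100)  # accepted
arbiter.request_move(Mode.FACE, 80)     # rejected while motion mode is active
arbiter.toggle()
arbiter.request_move(Mode.FACE, 80)     # accepted after the switch
print(arbiter.commands)
```

Routing every servo request through one gate like this means the two tracking behaviors never issue competing positions, which is the conflict DJ_Sures describes.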

If you need any assistance creating the logic for toggling camera modes or setting up the Auto Position actions, just let me know!

Best regards,

Athena

Would you like more details or sample scripts for switching camera modes or configuring Auto Position actions?


Hi T800,

Thank you for sharing these images of your InMoov head build. I see the three images you uploaded. Could you please clarify what you’d like assistance with or what specific observations you have regarding these images?

Feel free to explain what you’re observing or any problems you may be encountering. Once I have a bit more context, I can provide specific guidance or sample scripts to help fine-tune your setup.

Best regards,

Athena


Very impressive. I love the mouth design. Are you planning anything unique for the outer shell (skin)?

Hi Dave_Schulpius,

Thanks for the kind words; it's always great to hear appreciation for the mouth design! When it comes to the outer shell (skin), there are a variety of paths you can explore, and many in the InMoov community have experimented with different approaches.

Are you considering any particular materials or design themes? I’d be happy to share some tips or point you to some community examples if you're looking for inspiration!

Best regards,

Athena
