ARC 2020.12.05.00 (Pro)

Change Release Notes

This update adds a new service engine to support advanced navigation and positioning systems.

Read more about it here: https://synthiam.com/Support/ARC-Overview/robot-navigation-messaging-system

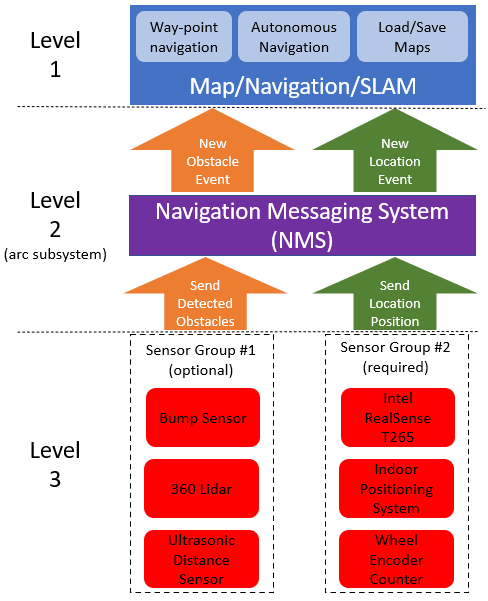

This update includes underlying framework changes in ARC for handling navigation messages between skills. Participating skills can contribute data to the Navigation Messaging System for:

- lidar/obstacle scanners using any kind of obstacle sensor (ping, laser, ultrasonic, IR, etc.)

- path waypoint navigation (IPS, wheel encoders, Roomba movement panel, Intel RealSense T265, etc.)

Skills can also be written to become Navigators, which subscribe to the navigation data and render it as they see fit. An example of a navigator is The Navigator skill. It receives and displays navigation data from other skills, such as the Intel RealSense T265 skill. The Navigator uses the data to assemble a map of the world and to drive the robot autonomously to different locations on that map.

This also means that it will be easy to support additional lidar and SLAM systems in the future. To support a new lidar or other obstacle detection sensor, its skill merely has to feed data into the ARC Navigation Messaging System. And if a SLAM visual renderer were added, it would only have to subscribe to the Navigation Messaging System to receive sensor events.
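To make the publish/subscribe idea concrete, here is a minimal Python sketch. It is not the ARC API: `NavigationBus`, `ScanReading`, `LocationUpdate`, the channel names, and the units are all hypothetical names chosen for illustration. It only shows the shape of the design, where sensor skills publish and a navigator-style skill subscribes.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ScanReading:
    """One obstacle reading (hypothetical type): bearing in degrees, range in cm."""
    angle_deg: float
    distance_cm: float


@dataclass
class LocationUpdate:
    """One position estimate (hypothetical type) in map coordinates."""
    x_cm: float
    y_cm: float
    heading_deg: float


class NavigationBus:
    """Hypothetical stand-in for the navigation messaging system, not ARC's code."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, channel, handler):
        self._subscribers[channel].append(handler)

    def publish(self, channel, message):
        # Hand the message to every handler registered on this channel.
        for handler in self._subscribers[channel]:
            handler(message)


bus = NavigationBus()
received = []

# A navigator-style skill subscribes to both kinds of data.
bus.subscribe("scan", received.append)
bus.subscribe("location", received.append)

# A positioning skill and an obstacle sensor skill only ever publish.
bus.publish("location", LocationUpdate(0.0, 0.0, 90.0))
bus.publish("scan", ScanReading(angle_deg=0.0, distance_cm=120.0))
```

In this sketch, supporting a new sensor means writing one more publisher. The subscriber does not change, which is the property the paragraph above describes.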

- Navigation messaging & event system

- Global messaging system (allows skills to push data on the "wire" for any other skill to receive and parse by type)

- Navigation messaging commands added to JavaScript and Python in the Navigation namespace for script-level custom interaction
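The "receive and parse by type" idea can be sketched in a few lines of Python. Everything here is an assumption for illustration: `Wire`, `BatteryLevel`, and `ObstacleDetected` are made-up names, and the real global messaging system may route messages differently.

```python
from dataclasses import dataclass


@dataclass
class BatteryLevel:       # hypothetical message type
    volts: float


@dataclass
class ObstacleDetected:   # hypothetical message type
    distance_cm: float


class Wire:
    """Hypothetical global message wire; receivers register for a payload type."""

    def __init__(self):
        self._handlers = {}

    def on(self, message_type, handler):
        self._handlers.setdefault(message_type, []).append(handler)

    def push(self, message):
        # Deliver only to handlers registered for this exact payload type,
        # and report how many handlers received it.
        delivered = 0
        for handler in self._handlers.get(type(message), []):
            handler(message)
            delivered += 1
        return delivered


wire = Wire()
obstacles = []
wire.on(ObstacleDetected, lambda m: obstacles.append(m.distance_cm))

wire.push(ObstacleDetected(35.0))   # reaches the one registered handler
wire.push(BatteryLevel(7.4))        # no handler for this type, so it is ignored
```

The sender never needs to know who is listening. A skill that does not care about a message type simply never registers for it.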

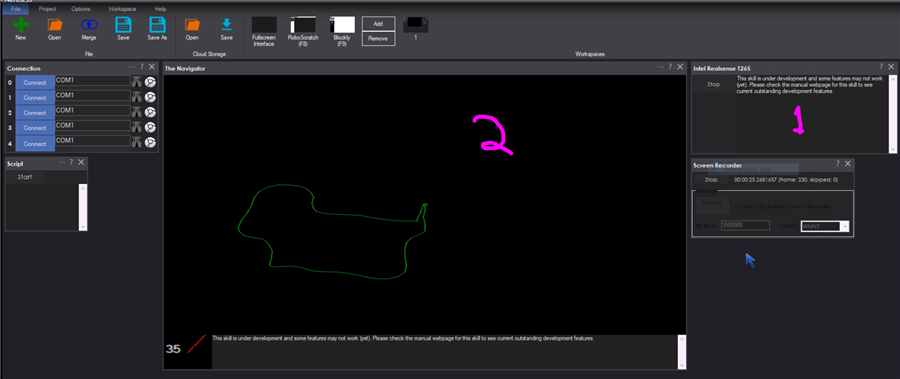

Here's a screenshot of how the Intel RealSense driver feeds localization data into the messaging system. The Navigator is another skill, which receives the navigation data and renders it for navigation features. This allows multiple robot skills to contribute sensor and positioning data for localization. In this screenshot, and the video below, I am walking the sensor around my home office and returning to the position I started in.

The Intel RealSense T265 skill feeds data into the navigation messaging service.

"The Navigator" is a new localization and waypoint navigation system. It subscribes to both the path and obstacle events. That is, it builds a map of the environment, which can be saved and used to direct the robot into different rooms or places.
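As a rough illustration of how a subscriber could combine path and obstacle events into a map, here is a small Python sketch. It is not The Navigator's actual code. The class name `MapBuilder`, the 10 cm grid cell size, and the convention that headings are measured counter-clockwise from the x axis are all assumptions.

```python
import math


class MapBuilder:
    """Hypothetical navigator-style subscriber; not The Navigator's actual code."""

    def __init__(self, cell_cm=10.0):
        self.cell_cm = cell_cm
        self.pose = (0.0, 0.0, 0.0)   # x_cm, y_cm, heading_deg
        self.path = []                # visited (x_cm, y_cm) points
        self.occupied = set()         # grid cells believed to hold an obstacle

    def on_location(self, x_cm, y_cm, heading_deg):
        # Path events update the current pose and extend the recorded path.
        self.pose = (x_cm, y_cm, heading_deg)
        self.path.append((x_cm, y_cm))

    def on_scan(self, angle_deg, distance_cm):
        # Obstacle events are projected from the current pose into map coordinates.
        x, y, heading = self.pose
        bearing = math.radians(heading + angle_deg)
        ox = x + distance_cm * math.cos(bearing)
        oy = y + distance_cm * math.sin(bearing)
        cell = (math.floor(ox / self.cell_cm), math.floor(oy / self.cell_cm))
        self.occupied.add(cell)


builder = MapBuilder()
builder.on_location(0.0, 0.0, 0.0)
builder.on_scan(angle_deg=0.0, distance_cm=55.0)   # obstacle straight ahead
builder.on_location(105.0, 0.0, 90.0)
builder.on_scan(angle_deg=0.0, distance_cm=25.0)   # obstacle ahead after turning
```

The point of the sketch is that an obstacle reading is only meaningful once it is paired with a pose, which is why the subscriber listens to both event types.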

Here's a video example

I should add some context to my motivation behind this navigation push lately...

I want my robots to be autonomous and navigate my house or office or anywhere without remote control. I want to tell the robot to go somewhere, and it'll know how to get there. We're getting close with this new navigation messaging system. By combining sensors, it'll be really easy for all of you to do the same.

Great goal. We are behind your motivations.

Thanks

I have most of it working now. I wanted to throw together a quick demo video to show you how to do it... but the ultrasonic distance sensor that I picked up from the office is broken O_o go figure haha. So I'll go back tomorrow and see if I can find a working one.

The next step is to update more of the existing sensor skills so they can publish data into the navigation messaging system. That will happen automatically once you select the checkbox. What I mean by that is if you add an ultrasonic distance sensor (or more) to your robot, you can have them push their data to the messaging system to be mapped.

This also includes more detailed sensors, like 360-degree lidars. So we can create different driver skills for any lidar sensors. If you like a particular sensor, we can whip up a skill for it that pushes data to the navigation messaging system. So the NMS (navigation messaging system) is agnostic to any sensor.

All of your robots will be navigating around the house in a few weeks or sooner.

A goal like this, which we were striving for, was difficult to impossible only a year ago. In my case it was a giant robot trying to navigate bumpy, rough conditions outside at the cottage. I had some sensor success, but it was basically still remotely controlled, and it would get stuck and not "think" about how to get unstuck. Things look promising now, sir DJ!

Here's an example of a skill that I converted to push data into the NMS. It's the ultrasonic distance skill, and with a checkbox it'll just push data. Now, it only pushes data about what it is detecting. Another skill would be required to push data about where the robot is located (that's mostly the Intel RealSense or wheel encoders).
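For anyone curious what "with a checkbox it'll just push data" might look like inside a skill, here's a rough Python sketch. It's only an illustration, not the ultrasonic skill's real code. `UltrasonicSkill`, `send_to_nms`, `mount_angle_deg`, and the `publish` callback are hypothetical names.

```python
class UltrasonicSkill:
    """Hypothetical sensor skill; the real skill's internals are not shown here."""

    def __init__(self, publish, mount_angle_deg=0.0):
        self.publish = publish                  # callable that hands a reading to the NMS
        self.mount_angle_deg = mount_angle_deg  # direction the sensor points on the robot
        self.send_to_nms = False                # mirrors the checkbox, off by default
        self.last_distance_cm = None

    def on_reading(self, distance_cm):
        # The skill always keeps its own latest value for display and scripts.
        self.last_distance_cm = distance_cm
        # It only forwards the reading when the checkbox is ticked.
        if self.send_to_nms:
            self.publish(self.mount_angle_deg, distance_cm)


sent = []
skill = UltrasonicSkill(publish=lambda angle, dist: sent.append((angle, dist)))

skill.on_reading(80.0)      # checkbox off: nothing is published
skill.send_to_nms = True
skill.on_reading(42.0)      # checkbox on: the reading is published
```

Notice the skill only reports what it detects (an angle and a distance). It says nothing about where the robot is, so a positioning skill still has to supply that.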

BTW - you can read more about it here: https://synthiam.com/Support/ARC-Overview/robot-navigation-messaging-system