Hey guys.

So, I've had a quick play with the new Cognitive Sentiment Control today...

It's found in ARC under Project > Add > Artificial Intelligence > Cognitive Sentiment.

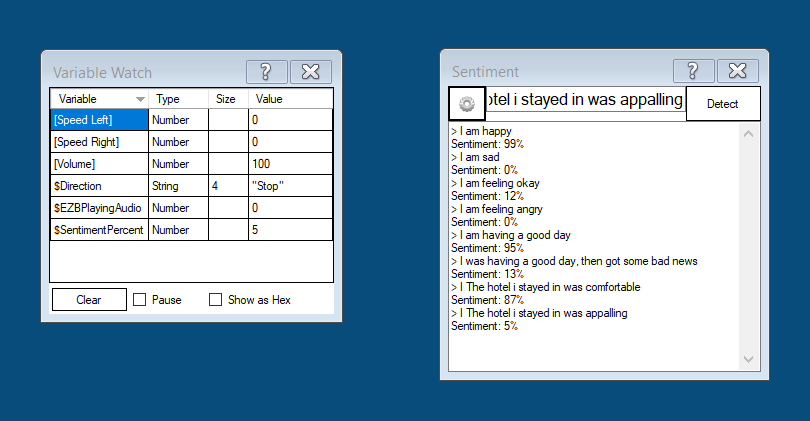

I've been trying simple phrases like "I am happy.", "I am angry." and "I am sad.", then pasting customer reviews from shopping websites and looking at the returned values. After pressing "Detect" and watching the Cognitive Sentiment control dialogue alongside the Variable Watcher, it works pretty well: positive reviews return high $SentimentPercentage values and bad reviews return lower ones.

So I wanted to ask everyone: what practical uses can you think of for the Cognitive Sentiment control? I must admit I'm struggling to come up with applications that make good use of it. Any and all thoughts and ideas are welcome. I'm not asking just for myself, but for the rest of the community as well.

You could have a global variable that keeps track of how happy the robot is based on user input.

Create a global variable and initialize it in the Connection control to a value of something like 10.

Every time there is user speech, modify the variable by adding or subtracting based on the sentiment of the input. If the sentiment is > 50%, then increase the variable by 1. If the sentiment is < 50%, then decrease the variable by 1.

Actions and responses can depend on how far that variable is from 10. Greater than 10 means the robot is happy and less than 10 means the robot is sad, all based on user input.
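To make the idea above concrete, here is a minimal Python sketch of the counter logic, written outside of ARC. It assumes `sentiment_percentage` stands in for the value the control writes to $SentimentPercentage; the function names `update_mood` and `describe_mood` are hypothetical, not part of ARC. Treating exactly 50% as "no change" and exactly 10 as "neutral" is also my assumption, since the suggestion only covers > and <.

```python
# Hypothetical sketch of the "robot mood" counter; not ARC's API.
# sentiment_percentage stands in for the $SentimentPercentage variable.

NEUTRAL_MOOD = 10  # starting value suggested in the thread


def update_mood(mood: int, sentiment_percentage: float) -> int:
    """Nudge the mood by one step based on a single utterance's sentiment."""
    if sentiment_percentage > 50:
        return mood + 1
    if sentiment_percentage < 50:
        return mood - 1
    return mood  # exactly 50%: assumed to leave the mood unchanged


def describe_mood(mood: int) -> str:
    """Classify the mood relative to the neutral starting value."""
    if mood > NEUTRAL_MOOD:
        return "happy"
    if mood < NEUTRAL_MOOD:
        return "sad"
    return "neutral"  # exactly 10: assumed neutral


if __name__ == "__main__":
    mood = NEUTRAL_MOOD
    # Example sentiment values, as if returned for four user utterances.
    for pct in [85.0, 92.5, 12.0, 70.0]:
        mood = update_mood(mood, pct)
    print(mood, describe_mood(mood))  # 12 happy
```

In ARC the same logic would live in whatever script runs after each detection, with the global variable holding `mood` between utterances.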

Hey DJ. Thanks for your response.

That is interesting. Admittedly, I have only been playing with this for an hour or two and have been thinking from the user's point of view, i.e., the user saying how they feel. Reversing that, and having a global variable that keeps track of the robot's "emotions" (so to speak), is something I didn't think of and sounds pretty cool.

In the above quote, you say the control will connect to the Pandorabot server. Can the Cognitive Sentiment control be used with the AIMLBot and Bing Speech Recognition controls as well? I only ask because I have stopped using Pandorabot due to their server downtime. I much prefer your implementation of the AIMLBot, which is far better, and I use it all the time now.

I would still love to hear from other members to see how they would/will use this control.

@Steve what are you using to edit AIML files? I'm trying to find an easy editor to create my own bot or alter the existing ones. I think way back when David C introduced EZ-AI he included a very easy editor, but I've since lost that computer and the files on it.

Edit: Found it "Program O"

I am implementing an emotional index for my robot. I have been thinking of a method like DJ suggested, incrementing and decrementing the index based on the sentiment value. I will make the robot emote in 3 ways that I can think of right now:

- Color: lights in the head would have lighter, happier tones when he is happy and darker colors when angry, like blue to red. Same for some body lighting.
- Sound: cues certain sound effects when happy or angry.
- Movement: positive and negative body posture gestures.
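The color and cue ideas above could be driven by something like the following minimal Python sketch. It assumes the emotional index runs over a hypothetical range of 0 to 20 centred on 10, with red at the angry end and blue at the happy end, as described. The sound and gesture names are placeholders, and nothing here is an ARC API; sending the RGB value to actual head or body LEDs is left to whatever hardware control you use.

```python
# Hypothetical sketch: map an emotional index to a light color and cue names.
# The 0..20 range and all cue names are assumptions, not ARC features.
from typing import Tuple

MIN_INDEX, MAX_INDEX = 0, 20  # assumed range, centred on a neutral value of 10
NEUTRAL_INDEX = 10

RED = (255, 0, 0)   # angry end of the scale
BLUE = (0, 0, 255)  # happy end of the scale


def index_to_rgb(index: int) -> Tuple[int, int, int]:
    """Linearly blend from red (MIN_INDEX) to blue (MAX_INDEX)."""
    clamped = max(MIN_INDEX, min(MAX_INDEX, index))  # keep within the range
    t = (clamped - MIN_INDEX) / (MAX_INDEX - MIN_INDEX)  # 0.0 angry, 1.0 happy
    r, g, b = (round(lo + (hi - lo) * t) for lo, hi in zip(RED, BLUE))
    return (r, g, b)


def pick_cues(index: int) -> Tuple[str, str]:
    """Return placeholder (sound, gesture) names for the current mood."""
    if index > NEUTRAL_INDEX:
        return "happy_chirp", "open_posture"
    if index < NEUTRAL_INDEX:
        return "angry_grumble", "closed_posture"
    return "idle_hum", "rest_pose"


if __name__ == "__main__":
    for idx in (0, 10, 20):
        print(idx, index_to_rgb(idx), pick_cues(idx))
```

A linear blend is just the simplest choice; a lookup table of hand-picked colors per mood band would work equally well if the in-between shades don't look right on the LEDs.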

Any other possibilities?

The AIMLBot plugin includes an editor, find out more here: https://synthiam.com/redirect/legacy?table=plugin&id=242

Awesome, DJ, thank you!

...In the trillions of patterns, does anyone know where to simply change the name spoken by the bot, i.e., "my name is ez robot"? I tried the obvious "name" pattern in the n.aiml file but couldn't find it there.

Check the settings file: