Proteus

Portugal


Is it possible to have two computers running ARC linked over Wi-Fi, in a master/slave arrangement, to share the workload? For example, one handling vision and AI, and the other handling sensors and navigation?

Related Hardware (view all EZB hardware)

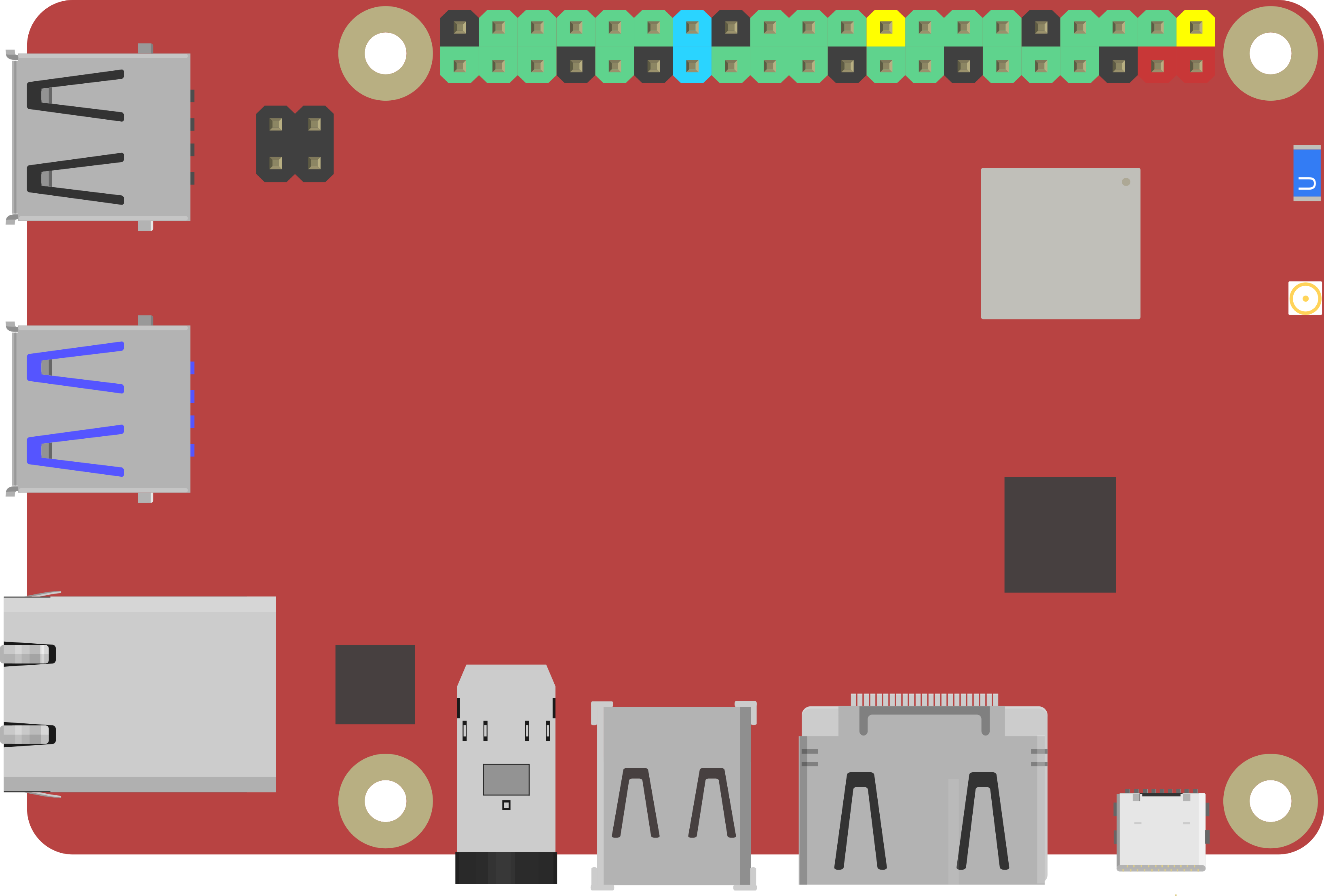

Rock Pi X

by Radxa

Control robots with Synthiam ARC on ROCK Pi X: affordable x86 SBC running Windows, Z8350 quad-core, 4K HDMI, Wi‑Fi, drivers and setup tips.


I guess you can connect them, in a sense (like nodes), because at the end of the day the servos and I/O are the only things interacting with real hardware. So you technically could send servo positions to another ARC instance. The question sounds like it's asking about distributed processing, but that's a far larger conversation, and Wi-Fi would not be useful for it. You'd need to extend the bus via USB3 or fiber at 40 Gbps+ with dedicated hardware. If that's where you're heading, look into how VM instances share CPU and other resources across hardware. However, I have a difficult time answering the question because the answer is huge.

See datacenters.

You've also flagged rock pi as the hardware for this question. Can you spend a bit of time and share your thoughts in greater detail?

You can also run multiple ARC instances on the same computer. I run three at a time doing different things.

When you run navigation, vision processing, speech recognition, various sensors, etc., the SBC just can't keep up with the workload. I was thinking of something like having two computers running ARC with some kind of topic sharing (request a remote ARC to execute a robot skill and return the result).
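The request-and-return idea described above can be sketched in plain Python. This is only a minimal illustration, assuming a made-up line-delimited JSON protocol over TCP. The `TASKS` table, `start_worker`, and `call_remote` are hypothetical names, not ARC robot skills or ARC API calls.

```python
import json
import socket
import socketserver
import threading

# Hypothetical task table: names and functions are illustrative only,
# not real ARC robot skills or ARC API calls.
TASKS = {
    "add": lambda a, b: a + b,
    "echo": lambda text: text,
}


class TaskHandler(socketserver.StreamRequestHandler):
    """Reads one JSON request per line and writes one JSON reply per line."""

    def handle(self):
        for line in self.rfile:
            request = json.loads(line)
            task = TASKS.get(request.get("task"))
            if task is None:
                reply = {"ok": False, "error": "unknown task"}
            else:
                reply = {"ok": True, "result": task(*request.get("args", []))}
            self.wfile.write((json.dumps(reply) + "\n").encode("utf-8"))


def start_worker(host="127.0.0.1", port=0):
    """Starts the worker in a background thread; port 0 picks a free port."""
    server = socketserver.ThreadingTCPServer((host, port), TaskHandler)
    server.daemon_threads = True
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_remote(address, task, *args):
    """Sends one request to the worker and blocks until the reply arrives."""
    payload = json.dumps({"task": task, "args": list(args)}) + "\n"
    with socket.create_connection(address, timeout=5) as conn:
        conn.sendall(payload.encode("utf-8"))
        with conn.makefile("r", encoding="utf-8") as reader:
            return json.loads(reader.readline())


if __name__ == "__main__":
    worker = start_worker()  # would run on the second computer
    print(call_remote(worker.server_address, "add", 2, 3))
    worker.shutdown()
    worker.server_close()
```

In a two-computer setup the worker would listen on the second machine's address instead of `127.0.0.1`, and every call would pay one network round trip before the result comes back.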

Hmmm, a good starting point is to take a look at the program and see if there are any loops or duplicate controls (i.e., having distance sensor skills added but calling getping in code instead of using the variable).
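The "use the variable instead of polling again" advice can be illustrated generically. The sketch below is plain Python, not ARC script: `SharedReading` and the `read_sensor` callable are hypothetical stand-ins. One background loop reads the sensor, and every other part of the program reads the cached value.

```python
import threading


class SharedReading:
    """Polls a sensor in one place and lets every consumer read the cached value."""

    def __init__(self, read_sensor, interval_s=0.1):
        self._read_sensor = read_sensor
        self._interval_s = interval_s
        self._lock = threading.Lock()
        self._value = read_sensor()  # prime the cache with one real read
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._poll, daemon=True)

    def start(self):
        self._thread.start()

    def stop(self):
        self._stop.set()
        self._thread.join()

    def _poll(self):
        # wait() returns False on timeout and True once stop() has been called
        while not self._stop.wait(self._interval_s):
            value = self._read_sensor()
            with self._lock:
                self._value = value

    @property
    def value(self):
        """Returns the latest cached reading without touching the hardware."""
        with self._lock:
            return self._value
```

With this shape, ten consumers reading `value` cost ten cheap memory reads rather than ten extra hardware polls, which is the kind of duplicate work worth looking for before adding a second computer.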

I've been thinking, since you asked, about how to divide up the processing, but the bottleneck on most of the tasks will be network latency, along with finding a way to maintain the modularity of the framework.
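Before splitting work across machines, it may help to measure how much latency the link actually adds. The following is a rough sketch, assuming a simple TCP echo server written for the test. `start_echo_server` and `measure_round_trips` are hypothetical helper names and have nothing to do with ARC itself.

```python
import socket
import socketserver
import statistics
import threading
import time


class EchoHandler(socketserver.StreamRequestHandler):
    """Sends every received line straight back to the client."""

    def handle(self):
        for line in self.rfile:
            self.wfile.write(line)


def start_echo_server(host="127.0.0.1", port=0):
    """Starts the echo server in a background thread; port 0 picks a free port."""
    server = socketserver.ThreadingTCPServer((host, port), EchoHandler)
    server.daemon_threads = True
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def measure_round_trips(address, samples=50):
    """Returns a list of round-trip times in milliseconds."""
    times_ms = []
    with socket.create_connection(address, timeout=5) as conn:
        # Disable Nagle's algorithm so small messages are sent immediately
        conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        with conn.makefile("rb") as reader:
            for _ in range(samples):
                started = time.perf_counter()
                conn.sendall(b"ping\n")
                reader.readline()
                times_ms.append((time.perf_counter() - started) * 1000.0)
    return times_ms


if __name__ == "__main__":
    server = start_echo_server()
    samples = measure_round_trips(server.server_address)
    print(f"median {statistics.median(samples):.3f} ms, worst {max(samples):.3f} ms")
    server.shutdown()
    server.server_close()
```

Running the echo server on the second computer and the measurement on the first gives a number to compare against each task's time budget. A loop that must react 30 times per second, for instance, has about 33 ms per cycle in total.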

I’ll continue thinking a bit about some ideas for ya

I am currently using an Intel Compute Stick while I wait for my Rock Pi X. Perhaps it will suffice. Nevertheless, it would be cool to be able to have two ARC instances talking to each other.

You can offload a lot of tasks in ARC to cloud services using skills and plugins like cognitive vision, speech recognition, chat bots, etc. If you want redundancy, you could do a VMware HA cluster with 2 PCs (or 3 for NAS). I agree that it would be good to have a distributed architecture, especially when you start to look at more complex, compute-intensive robotic systems. A perfect world would be a containerized version of ARC with every skill running in a separate microservice container in a Kubernetes cluster, so you can scale indefinitely and have high availability. We can dream, can't we?

Nink, that’s not too far off from the way ARC is designed. That’s kind of the thing I was thinking of, in a way, because each skill gets its own process / thread anyway, unless the skill binds to global system events, like responding to a servo being moved. But there’s only a handful of skills like that.