yatakitombi

Philippines

Can the EZ-Camera read the range of an object? I need to know whether the camera can calculate the distance to the object and then grab it...

No. Not accurately. You would need at least 2 cameras and some complex scripting.

The issue is the same as if you only had one eye. Your brain estimates distance by using both eyes together with the distance it has learned lies between them. It also uses the size of the object and the size of the objects around it. Ping sensors and IR sensors can measure distance based on the time it takes for a signal to be sent out and then returned to the bot. Combining these two methods will get you a more accurate measure of how far you are from an object.
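As a rough sketch of the two ideas above (not EZ-Script, just Python to show the arithmetic): the first function turns an ultrasonic echo's round-trip time into a distance, and the second gives the depth from two side-by-side cameras. The function names, the 343 m/s speed of sound, and the sample focal length, baseline and disparity values are all assumptions for illustration, not anything read from an EZ-B.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value: dry air at roughly 20 °C


def echo_distance_m(round_trip_s, speed_m_per_s=SPEED_OF_SOUND_M_PER_S):
    """Distance to an object from the round-trip time of an ultrasonic ping."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so halve the total path.
    return speed_m_per_s * round_trip_s / 2.0


def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Depth from two parallel cameras: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical readings, chosen only to exercise the functions.
    print(echo_distance_m(0.005))
    print(stereo_depth_m(700.0, 0.06, 35.0))
```

The stereo version is where the "complex scripting" comes in: the hard part is finding the same object in both images to get the disparity, not the division itself.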

Many advanced robots now place cameras on the robot's hands as well. This lets the hand guide itself into position from a perspective that is far better for grabbing an object. This, with an IR sensor on the arm and a switch to tell you that you are now in contact with the object, would be your best bet. It would be more expensive.

I use a ping sensor beside the camera to calculate distance to an object or wall....

If you knew the size of the object, then you could calculate the distance using ez-script.

@DJ, might you have an example of that, or perhaps could one be added in a future release of ARC? I totally follow what you are saying in "theory", but I can't wrap my head around how to apply a known-size calculation in a script to determine range via vision.

I think this will be moot if DJ pulls yet another rabbit out of his hat and introduces the camera-based indoor navigation system he has mentioned... DJ, if you're listening, I am keeping my credit card warm and cozy for that....

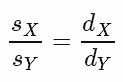

You'll need to know 3 things to determine the distance of an object based on its apparent size.

First you need to know the size of the object at a known distance. So let's call them sX and dX

We then need to know the apparent size, let's call it sY. From this we can work out the Apparent Object Distance, or dY.

The formula to work out dY (or any of the other three) is

sX × dX = sY × dY

or

dY = (sX × dX) / sY

So, if we know we have an object that is 25" wide at 30" from the camera, and we know it is 17" wide according to the camera at an unknown distance which we want to work out...

sX = 25, dX = 30, sY = 17, dY = ?

As always, I push people to think a little for themselves... Let's see who can do the calculation for the above example or better yet write the script

Note: this assumes the object is directly ahead at 0 degrees
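For anyone who wants to check their answer, here is a minimal sketch of the calculation in Python (not EZ-Script). It assumes apparent size is inversely proportional to distance, so sX × dX = sY × dY, and that the object is straight ahead as noted above. The function name `apparent_distance` is made up for this example; in a real script sX and sY would more likely be widths in pixels reported by the camera tracking, which works the same way as long as both use the same unit.

```python
def apparent_distance(known_size, known_distance, apparent_size):
    """Return dY given sX (known_size), dX (known_distance) and sY (apparent_size).

    Based on sX * dX = sY * dY, i.e. an object that looks smaller is farther away.
    """
    if apparent_size <= 0:
        raise ValueError("apparent size must be positive")
    return known_size * known_distance / apparent_size


if __name__ == "__main__":
    # Values from the example: 25" wide at 30", seen as 17" wide.
    d_y = apparent_distance(25, 30, 17)
    print(f"dY = {d_y:.2f} inches")  # prints "dY = 44.12 inches"
```

The same relationship can be rearranged to solve for any of the other three values if dY is the one you already know.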

Edit: Changed the example values to make it a little more fun; a size and distance of 1" was boring...

LOL, Rich saves the day

And Richard R, you're right, the localized positioning is in the works. Once we're comfortable with the logistics of the existing SKUs, we will start manufacturing the new ez-bit components such as positioning.