Zxen

Australia

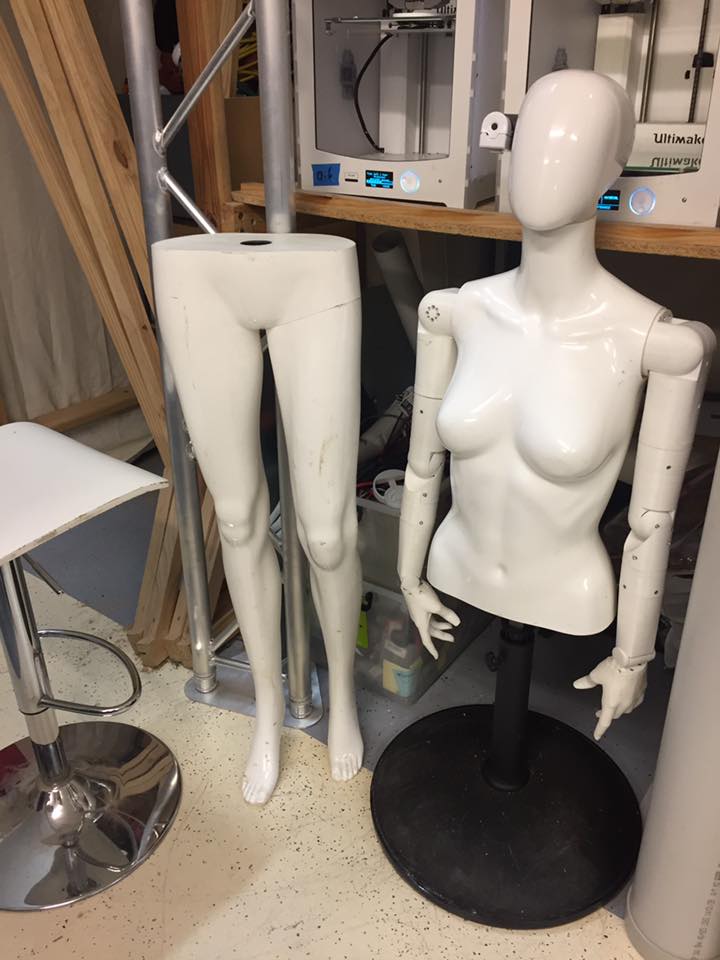

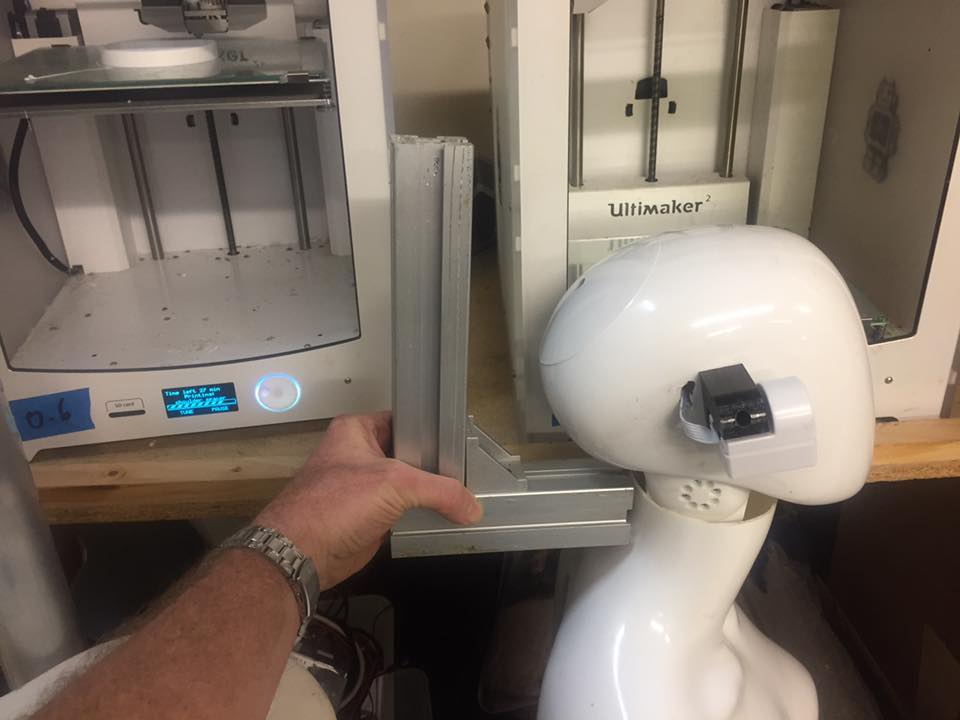

I need to extend the camera from its circuit board and mount it in something like a cable dot on the side of the head, facing forward. At the moment she looks like a Borg. Not good. This is for department stores, for women's fashion. Can I separate the camera with wires so it's just a tiny dot?

How do I do that please?

PS: The camera will definitely not be placed in the face of this particular mannequin head. It will be under the chin or facing forward from the ear.

You can easily remove the camera from its housing and install it.

Sorry, I just read your post again. I'm not sure that can be done, but then again it could be.

From memory, I'm not sure if there is a ribbon. If there is, a ribbon extension cable would suffice.

Get an EZ-B v4 Camera Extension Cable (60cm).

I have a lot of camera cables. I was asking about the camera without the circuit board. Is it simple enough to desolder it and then run wires back to the board, so the camera (without the circuit board) can be extended? I want a 1cm diameter camera on the side of the head, not a Borg attachment like it is now.

If it's too hard, can I buy a different camera that is compatible with the EZ-B v4 and that I can control from ARC as normal?

I went and had a look at mine. Unless you know what you're doing, I wouldn't tackle it. If you do, you would want a longer ribbon, but it looks fiddly.

I don't see why the de-cased camera wouldn't fit in there; you would be left with the exact same look in the end.

It has a really good heat sink on it, and that will be facing towards the back, of course, so there's no chance of it heating up plastics, etc.

I'm currently finishing off the head on my project, and the de-cased camera fits in there easily. It's quite a small head, so you'll be fine.

Thanks for checking, but I don't want the same look. At the moment I have a fashion mannequin for department stores with a tumour sticking out of the side of her head. It needs to be tiny: 1cm x 1cm x 1cm max.

Aha, I see.

There are definitely other cameras that are compatible.

Really? I was worried that the EZ-B v4 had a patented input. Is the cable a standard one for circuit-board cameras?

I found this one (the first one I looked at): https://thepihut.com/products/adafruit-spy-camera-for-the-raspberry-pi

@NEWAGETOMY

To clarify: you can use other cameras with ARC, but only the EZ-B v4 camera plugs directly into the EZ-B v4 board. It is the only camera that will do this.